Friday, April 24. 2015

Analyst Watch: Ten reasons why open-source software will eat the world

Via SD Times

-----

I recently attended Facebook’s F8 developer conference in San Francisco, where I had a revelation on why it is going to be impossible to succeed as a technology vendor in the long run without deeply embracing open source. Of the many great presentations I listened to, I was most captivated by the ones that explained how Facebook internally developed software. I was impressed by how quickly the company is turning such important IP back into the community.

To be sure, many major Web companies like Google and Yahoo have been leveraging open-source dynamics aggressively and contribute back to the community. My aim is not to single out Facebook, except that it was during the F8 conference I had the opportunity to reflect on the drivers behind Facebook’s actions and why other technology providers may be wise to learn from them.

Here are my 10 reasons why open-source software is effectively becoming inevitable for infrastructure and application platform companies:

- Not reinventing the wheel: The most obvious reason to use open-source software is to build software faster, or to effectively stand on the shoulders of giants. Companies at the top of their game have to move fast and grab the best that have been contributed by a well-honed ecosystem and build their added innovation on top of it. Doing anything else is suboptimal and will ultimately leave you behind.

- Customization with benefits: When a company is at the top of its category, such as a social network with 1.4 billion users, available open-source software is typically only the starting point for a quality solution. Often the software has to be customized to be leveraged. Contributing your customizations back to open source allows them to be vetted and improved for your benefit.

- Motivated workforce: Beyond a good wage and a supportive work environment, there is little that can push developers to do high-quality work more than peer approval, community recognition, and the opportunity for fame. Turning open-source software back to the community and allowing developers to bask in the recognition of their peers is a powerful motivator and an important tool for employee retention.

- Attracting top talent: A similar dynamic is in play in the hiring process as tech companies compete to build their engineering teams. The opportunity to be visible in a broader developer community (or to attain peer recognition and fame) is potentially more important than getting top wages for some. Not contributing open source back to the community narrows the talent pool for tech vendors in an increasingly unacceptable way.

- The efficiency of standardized practices: Using open-source solutions means using standardized solutions to problems. Such standardization of patterns of use or work enforces a normalized set of organizational practices that will improve the work of many engineers at other firms. Such standardization leads to more-optimized organizations, which feature faster developer on-ramping and less wasted time. In other words, open source brings standardized organizational practices, which help avoid unnecessary experimentation.

- Business acceleration: Even in situations where a technology vendor is focused on bringing to market a solution as a central business plan, open source is increasingly replacing proprietary IP for infrastructure and application platform technologies. Creating an innovative solution and releasing it to open source can facilitate broader adoption of the technology with minimal investment in sales, marketing or professional service teams. This dynamic can also be leveraged by larger vendors to experiment in new ventures, and to similarly create wide adoption with minimal cost.

- A moat in plain sight: Creating IP in open source allows the creators to hone their skills and learn usage patterns ahead of the competition. The game then becomes to preserve that lead. Open source may not provide the lock-in protection to the owner that proprietary IP does, but the constant innovation and evolution required in operating in open-source environments fosters fast innovation that has now become essential to business success. Additionally, the visibility of the source code can further enlarge the moat around its innovation, discouraging other businesses from reinventing the wheel.

- Cleaner software: Creating IP in open source also means that the engineers have to operate in full daylight, enabling them to avoid the traps of plagiarized software and generally stay clear of patents. Many proprietary software companies have difficulty turning their large codebases into open source because of necessary time-consuming IP scrubbing processes. Open-source IP-based businesses avoid this problem from the get-go.

- Strategic safety: Basing a new product on open-source software can go a long way to persuade customers who might otherwise be concerned about the vendor’s financial resources or strategic commitment to the technology. It used to be that IT organizations only bought important (but proprietary) software from large, established tech companies. Open source allows smaller players to provide viable solutions by using openness as a competitive weapon to defuse the strategic safety argument. Since the source is open, in theory (and often only in theory) IT organizations can skill up on and support it if and when a small vendor disappears or loses interest.

- Customer goodwill: Finally, open source allows a tech vendor to accrue a great deal of goodwill with its customers and partners. If you are a company like Facebook, constantly and controversially disrupting norms in social interaction and privacy, being able to return value to the larger community through open-source software can go a long way to making up for the negatives of your disruption.

Monday, April 13. 2015

BitTorrent launches its Maelstrom P2P Web Browser in a public beta

Via TheNextWeb

-----

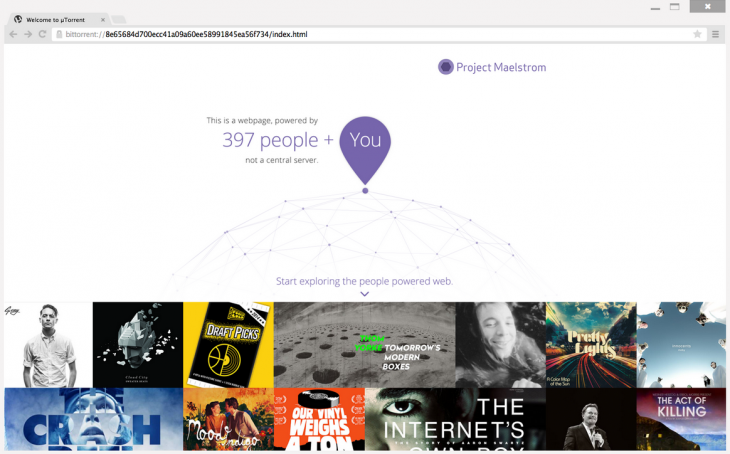

Back in December, we reported on the alpha for BitTorrent’s Maelstrom, a browser that uses BitTorrent’s P2P technology in order to place some control of the Web back in users’ hands by eliminating the need for centralized servers.

Maelstrom is now in beta, bringing it one step closer to official release.

BitTorrent says more than 10,000 developers and 3,500 publishers signed up for the alpha, and it’s using their insights to launch a more stable public beta.

Along with the beta comes the first set of developer tools for the browser, helping publishers and programmers to build their websites around Maelstrom’s P2P technology. And they need to – Maelstrom can’t decentralize the Internet if there isn’t any native content for the platform.

It’s only available on Windows at the moment but if you’re interested and on Microsoft’s OS, you can download the beta from BitTorrent now.

Project Maelstrom

Thursday, February 26. 2015

Researchers produce Global Risks report, AI and other technologies included in it

Via betanews

-----

Let's face it, we're always at risk, and I speak for humankind, not just of the personal risks we take each time we leave our homes. Some of these potential terrors are unavoidable -- we can't control the asteroid we find hurtling towards us or the next super volcano that may erupt as the Siberian Traps once did.

Some risks, however, are well within our control, yet we continue down paths that are both exciting and potentially dangerous. In his book The Demon-Haunted World, the great astronomer, teacher and TV personality Carl Sagan wrote "Avoidable human misery is more often caused not so much by stupidity as by ignorance, particularly our ignorance about ourselves".

Now researchers have published a list of the risks we face, and several of them are self-created. Perhaps the most prominent is artificial intelligence, or AI as it is generally referred to. The technology has been fairly prominent in the news recently as both Elon Musk and Bill Gates have warned of its dangers. Musk went as far as to invest in some of the companies so that he could keep an eye on things.

The new report states "extreme intelligences could not easily be controlled (either by the groups creating them, or by some international regulatory regime), and would probably act to boost their own intelligence and acquire maximal resources for almost all initial AI motivations".

Stephen Hawking, perhaps the world's most famous scientist, told the BBC "The development of full artificial intelligence could spell the end of the human race".

That's three obviously intelligent men telling us it's a bad idea, but of course that will not deter those who wish to develop it and if it is controlled correctly then it may not be the huge danger we worry about.

What else is on the list of doom and gloom? Several more man-made problems, including nuclear war, global system collapse, synthetic biology, and nanotechnology. There is also the usual array of asteroids, super volcanoes and global pandemics. For good measure, the scientists even added in bad global governance.

If you would like to read the report for yourself it can be found at the Global Challenges Foundation website. It may keep you awake at night -- even better than a good horror movie could.

Tuesday, February 24. 2015

If software looks like a brain and acts like a brain—will we treat it like one?

Via ars technica

-----

Long the domain of science fiction, researchers are now working to create software that perfectly models human and animal brains. With an approach known as whole brain emulation (WBE), the idea is that if we can perfectly copy the functional structure of the brain, we will create software perfectly analogous to one. The upshot here is simple yet mind-boggling. Scientists hope to create software that could theoretically experience everything we experience: emotion, addiction, ambition, consciousness, and suffering.

“Right now in computer science, we make computer simulations of neural networks to figure out how the brain works," Anders Sandberg, a computational neuroscientist and research fellow at the Future of Humanity Institute at Oxford University, told Ars. "It seems possible that in a few decades we will take entire brains, scan them, turn them into computer code, and make simulations of everything going on in our brain.”

Everything. Of course, a perfect copy does not necessarily mean equivalent. Software is so… different. It's a tool that performs because we tell it to perform. It's difficult to imagine that we could imbue it with those same abilities that we believe make us human. To imagine our computers loving, hungering, and suffering probably feels a bit ridiculous. And some scientists would agree.

But there are others—scientists, futurists, the director of engineering at Google—who are working very seriously to make this happen.

For now, let’s set aside all the questions of if or when. Pretend that our understanding of the brain has expanded so much and our technology has become so great that this is our new reality: we, humans, have created conscious software. The question then becomes how to deal with it.

And while success in this endeavor of fantasy turning fact is by no means guaranteed, there has been quite a bit of debate among those who think about these things whether WBEs will mean immortality for humans or the end of us. There is far less discussion about how, exactly, we should react to this kind of artificial intelligence should it appear. Will we show a WBE human kindness or human cruelty—and does that even matter?

The ethics of pulling the plug on an AI

In a recent article in the Journal of Experimental and Theoretical Artificial Intelligence, Sandberg dives into some of the ethical questions that would (or at least should) arise from successful whole brain emulation. The focus of his paper, he explained, is “What are we allowed to do to these simulated brains?” If we create a WBE that perfectly models a brain, can it suffer? Should we care?

Again, discounting if and when, it's likely that an early successful software brain will mirror an animal’s. Animal brains are simply much smaller, less complex, and more available. So would a computer program that perfectly models an animal receive the same consideration an actual animal would? In practice, this might not be an issue. If a software animal brain emulates a worm or insect, for instance, there will be little worry about the software’s legal and moral status. After all, even the strictest laboratory standards today place few restrictions on what researchers do with invertebrates. When wrapping our minds around the ethics of how to treat an AI, the real question is what happens when we program a mammal?

“If you imagine that I am in a lab, I reach into a cage and pinch the tail of a little lab rat, the rat is going to squeal, it is going to run off in pain, and it’s not going to be a very happy rat. And actually, the regulations for animal research take a very stern view of that kind of behavior," Sandberg says. "Then what if I go into the computer lab, put on virtual reality gloves, and reach into my simulated cage where I have a little rat simulation and pinch its tail? Is this as bad as doing this to a real rat?”

As Sandberg alluded to, there are ethical codes for the treatment of mammals, and animals are protected by laws designed to reduce suffering. Would digital lab animals be protected under the same rules? Well, according to Sandberg, one of the purposes of developing this software is to avoid the many ethical problems with using carbon-based animals.

To get at these issues, Sandberg’s article takes the reader on a tour of how philosophers define animal morality and our relationships with animals as sentient beings. These are not easy ideas to summarize. “Philosophers have been bickering about these issues for decades," Sandberg says. "I think they will continue to bicker until we upload a philosopher into a computer and ask him how he feels.”

While many people might choose to respond, “Oh, it's just software,” this seems much too simplistic for Sandberg. “We have no experience with not being flesh and blood, so the fact that we have no experience of software suffering, that might just be that we haven’t had a chance to experience it. Maybe there is something like suffering, or something even worse than suffering software could experience,” he says.

Ultimately, Sandberg argues that it's better to be safe than sorry. He concludes a cautious approach would be best, that WBEs "should be treated as the corresponding animal system absent countervailing evidence.” When asked what this evidence would look like—that is, software designed to model an animal brain without the consciousness of one—he considered that, too. “A simple case would be when the internal electrical activity did not look like what happens in the real animal. That would suggest the simulation is not close at all. If there is the counterpart of an epileptic seizure, then we might also conclude there is likely no consciousness, but now we are getting closer to something that might be worrisome,” he says.

So the evidence that the software animal’s brain is not conscious looks…exactly like evidence that a biological animal’s brain is not conscious.

Virtual pain

Despite his pleas for caution, Sandberg doesn’t advocate eliminating emulation experimentation entirely. He thinks that if we stop and think about it, compassion for digital test animals could arise relatively easily. After all, if we know enough to create a digital brain capable of suffering, we should know enough to bypass its pain centers. “It might be possible to run virtual painkillers which are way better than real painkillers," he says. "You literally leave out the signals that would correspond to pain. And while I’m not worried about any simulation right now… in a few years I think that is going to change.”

This, of course, assumes that animals' only source of suffering is pain. In that regard, to worry whether a software animal may suffer in the future probably seems pointless when we accept so much suffering in biological animals today. If you find a rat in your house, you are free to dispose of it how you see fit. We kill animals for food and fashion. Why worry about a software rat?

One answer—beyond basic compassion—is that we'll need the practice. If we can successfully emulate the brains of other mammals, then emulating a human is inevitable. And the ethics of hosting human-like consciousness becomes much more complicated.

Beyond pain and suffering, Sandberg considers a long list of possible ethical issues in this scenario: a blindingly monotonous environment, damaged or disabled emulations, perpetual hibernation, the tricky subject of copies, communications between beings who think at vastly different speeds (software brains could easily run a million times faster than ours), privacy, and matters of self-ownership and intellectual property.

All of these may be sticky issues, Sandberg predicts, but if we can resolve them, human brain emulations could achieve some remarkable feats. They are ideally suited for extreme tasks like space exploration, where we could potentially beam them through the cosmos. And if it came down to it, the digital versions of ourselves might be the only survivors in a biological die-off.

Monday, February 23. 2015

Android to Become 'Workhorse' of Cybercrime

Via EE Times

-----

PARIS — As of the end of 2014, 16 million mobile devices worldwide have been infected by malicious software, estimated Alcatel-Lucent’s security arm, Motive Security Labs, in its latest security report released Thursday (Feb. 12).

Such malware is used by “cybercriminals for corporate and personal espionage, information theft, denial of service attacks on business and governments and banking and advertising scams,” the report warned.

Some of the key facts revealed in the report -- released two weeks in advance of the Mobile World Congress 2015 -- could dampen the mobile industry’s renewed enthusiasm for mobile payment systems such as Google Wallet and Apple Pay.

At risk is also the matter of privacy. How safe is your mobile device? Consumers have gotten used to trusting their smartphones, expecting their devices to know them well enough to accommodate their habits and preferences. So the last thing consumers expect them to do is to channel spyware into their lives, letting others monitor calls and track web browsing.

Cyber attacks

The latest in a drumbeat of data hacking incidents is the massive database breach reported last week by Anthem Inc., the second largest health insurer in the United States. There was also the high profile corporate security attack on Sony in late 2014.

Declaring that 2014 “will be remembered as the year of cyber-attacks,” Kevin McNamee, director, Alcatel-Lucent Motive Security Labs, noted in his latest blog other cases of hackers stealing millions of credit and debit card account numbers at retail points of sale. They include the security breach at Target in 2013 and similar breaches repeated in 2014 at Staples, Home Depot, Sally Beauty Supply, Neiman Marcus, United Parcel Service, Michaels Stores and Albertsons, as well as the food chains Dairy Queen and P. F. Chang.

“But the combined number of these attacks pales in comparison to the malware attacks on mobile and residential devices,” McNamee insists. In his blog, he wrote, “Stealing personal information and data minutes from individual device users doesn’t tend to make the news, but it’s happening with increased frequency. And the consequences of losing one’s financial information, privacy, and personal identity to cyber criminals are no less important when it’s you.”

'Workhorse of cybercrime'

Indeed, malware infections in mobile devices are on the rise. According to the Motive Security Labs report, malware infections in mobile devices jumped by 25% in 2014, compared to a 20% increase in 2013.

According to the report, in the mobile networks, “Android devices have now caught up to Windows laptops as the primary workhorse of cybercrime.” The infection rates between Android and Windows devices now split 50/50 in 2014, said the report.

This may be hardly a surprise to those familiar with Android security. There are three issues. First, the volume of Android devices shipped in 2014 is so huge that it makes a juicy target for cyber criminals. Second, Android is based on an open platform. Third, Android allows users to download apps from third-party stores where apps are not consistently verified and controlled.

In contrast, the report said that less than 1% of infections come from iPhone and Blackberry smartphones. The report, however, quickly added that this data doesn’t prove that iPhones are immune to malware.

The Motive Security Labs report cited findings by Palo Alto Networks in early November. Palo Alto Networks described the discovery of the WireLurker vulnerability, which allows an infected Mac OS-X computer to install applications on any iPhone that connects to it via a USB connection. User permission is not required and the iPhone need not be jail-broken.

News stories reported the source of the infected Mac OS-X apps as an app store in China that apparently affected some 350,000 users through apps disguised as popular games. These infected the Mac computer, which in turn infected the iPhone. Once infected, the iPhone contacted a remote C&C server.

According to the Motive Security Labs report, a couple of weeks later, FireEye revealed the Masque Attack vulnerability, which allows third-party apps to be replaced with a malicious app that can access all the data of the original app. In a demo, FireEye replaced the Gmail app on an iPhone, allowing the attacker complete access to the victim’s email and text messages.

Spyware on the rise

It’s important to note that among varieties of malware, mobile spyware is definitely on the increase. According to Motive Security Labs, “Six of the mobile malware top 20 list are mobile spyware.” These are apps used to spy on the phone’s owner. “They track the phone’s location, monitor ingoing and outgoing calls and text messages, monitor email and track the victim’s web browsing,” according to Motive Security Labs.

Impact on mobile payment

For consumers and mobile operators, the malware story hits home hardest in how it may affect mobile payment. McNamee wrote in his blog:

The rise of mobile malware threats isn’t unexpected. But as Google Wallet, Apple Pay and others rush to bring us mobile payment systems, security has to be a top focus. And malware concerns become even more acute in the workplace where more than 90% of workers admit to using their personal smartphones for work purposes.

Fixed broadband networks

The Motive Security Labs report didn’t stop at mobile security. It also looked at residential fixed broadband networks. The report found the overall monthly infection rate there is 13.6%, “substantially up from the 9% seen in 2013,” said the report. The report attributed it to “an increase in infections by moderate threat level adware.”

Why is this all happening?

The short answer to why this is all happening today is that “a vast majority of mobile device owners do not take proper device security precautions,” the report said.

Noting that a recent Motive Security Labs survey found that 65 percent of subscribers expect their service provider to protect both their mobile and home devices, the report seems to suggest that the onus is on operators. “They are expected to take a proactive approach to this problem by providing services that alert subscribers to malware on their devices along with self-help instructions for removing it,” said Patrick Tan, General Manager of Network Intelligence at Alcatel-Lucent, in a statement.

Due to the large market share it holds within communication networks, Alcatel-Lucent says that it’s in a unique position to measure the impact of mobile and home device traffic moving over those networks to identify malicious and cyber-security threats. Motive Security Labs is an analytics arm of Motive Customer Experience Management.

According to Alcatel-Lucent, Motive Security Labs (formerly Kindsight Security Labs), processes more than 120,000 new malware samples per day and maintains a library of 30 million active samples.

In the following pages, we will share the highlights of data collected by Motive Security Labs.

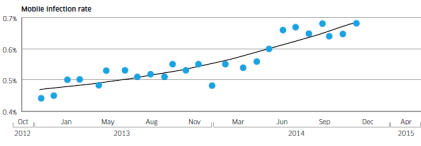

Mobile infection rate since December 2012

Alcatel-Lucent’s Motive Security Labs found 0.68% of mobile devices are infected with malware.

Using the ITU’s figure of 2.3 billion mobile broadband subscriptions, Motive Security Labs estimated that 16 million mobile devices had some sort of malware infection in December 2014.

The report called this global estimate “likely to be on the conservative side.” Motive Security Labs’ sensors do not have complete coverage in areas such as China and Russia, where mobile infection rates are known to be higher.
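The 16 million figure appears to follow from multiplying the two numbers quoted above. The sketch below reproduces that arithmetic; it assumes the report simply applied the 0.68% rate to the ITU subscription count and rounded, which the article does not state explicitly. The function and constant names are illustrative only.

```python
# Figures quoted in the article; the names are illustrative, not from the report.
INFECTION_RATE = 0.0068            # 0.68% of mobile devices infected
SUBSCRIPTIONS = 2_300_000_000      # ITU mobile broadband subscriptions


def estimate_infected(rate: float, subscriptions: int) -> int:
    """Estimate infected devices as rate * subscriptions, rounded to whole devices."""
    return round(rate * subscriptions)


infected = estimate_infected(INFECTION_RATE, SUBSCRIPTIONS)
# Express the result in millions for comparison with the report's headline number.
print(f"about {infected / 1e6:.1f} million infected devices")
```

The product comes to roughly 15.6 million, which is consistent with the rounded estimate of 16 million.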

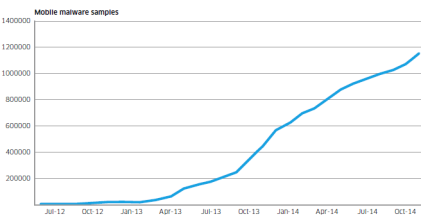

Mobile malware samples since June 2012

Motive Security Labs used the increase in the number of samples in its malware database to show Android malware growth.

The chart above shows numbers since June 2012. The number of samples grew by 161% in 2014.

In addition to the increase in raw numbers, the sophistication of Android malware also increased, according to Motive Security Labs. Researchers in 2014 started to see malware applications that had originally been developed for the Windows/PC platform migrate to the mobile space, bringing with them more sophisticated command and control and rootkit technologies.

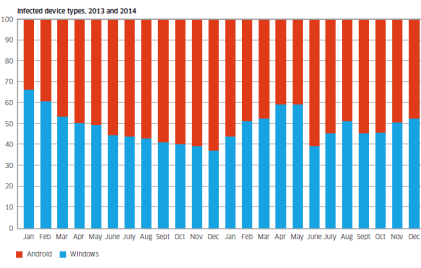

Infected device types in 2013 and 2014

The chart shows the breakdown of infected device types that have been observed in 2013 and 2014. Shown in red is Android and shown in blue is Windows.

While the involvement of such a high proportion of Windows/PC devices may be a surprise to some, these Windows/PCs are connected to the mobile network via dongles and mobile Wi-Fi devices or simply tethered through smartphones.

They’re responsible for about 50% of the malware infections observed.

The report said, “This is because these devices are still the favorite of hardcore professional cybercriminals who have a huge investment in the Windows malware ecosystem. As the mobile network becomes the access network of choice for many Windows PCs, the malware moves with them.”

Android phones and tablets are responsible for about 50% of the malware infections observed.

Currently most mobile malware is distributed as “Trojanized” apps. Android offers the easiest target for this because of its open app environment, noted the report.

Tuesday, December 30. 2014

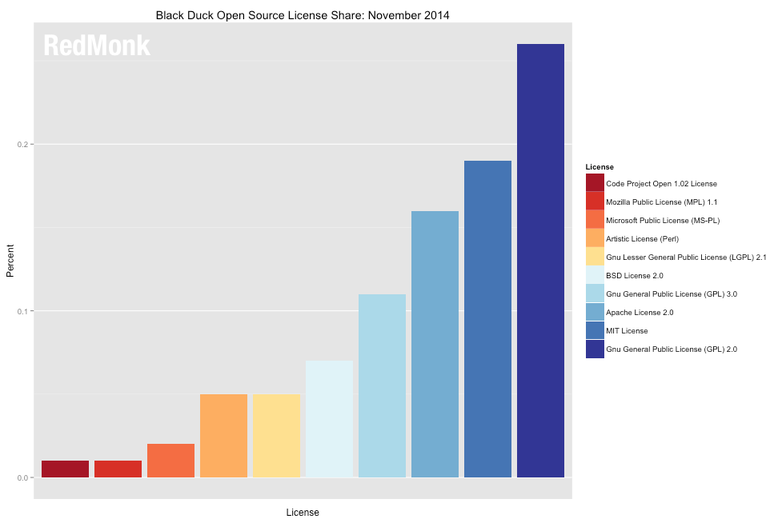

The fall of GPL and the rise of permissive open-source licenses

Via ZDNet

-----

I know very few open-source programmers, no matter how skilled they may be with the intricacies of C++, who relish learning about the ins and outs of open-source licenses. I can't blame them. Like it or not, though, picking an open-source license is a necessity.

Idiots.

Things have improved a little. In July 2013, Black Duck Software found that 77 percent of projects on GitHub had no declared license. Earlier that year Aaron Williamson, senior staff counsel at the Software Freedom Law Center, discovered that 85.1 percent of GitHub programs had no license.

In 2014, many GitHub programmers, using arguably the most popular code hosting system in the world, still don't use any license at all.

That's a big mistake. If you don't have a license, you're leaving the door open for people to fool with your code. You may think that's just fine... just like the poor sods did who used a permissive Creative Commons license for "their" photographs that they'd stored on Yahoo's Flickr, only to discover that Yahoo had started to sell prints of their photos ... and was keeping all the money.

Maybe you're cool with that. I'm not.

Now, as Stephen O'Grady, co-founder of Red Monk, a major research group, has observed, as software is shifting to being used more in a service mode rather than deployed, you may not need to protect your code with a more restrictive license such as the GPLv3 since "if the code is not a competitive advantage, it is likely not worth protecting." Even in the case of code with little intrinsic value, however, O'Grady believes that, "permissive licenses [such as the MIT License] are a perfect alternative."

So while O'Grady using Black Duck data found that "the GPL has been the overwhelmingly most selected license," these two licenses, GPLv2 and v3 are, "no longer more popular than most of the other licenses put together."

Instead, "The three primary permissive license choices (Apache/BSD/MIT) ... collectively are employed by 42 percent. They represent, in fact, three of the five most popular licenses in use today." These permissive licenses have been gaining ground at GPL's expense. The two biggest gainers, the Apache and MIT licenses, were up 27 percent, while the GPLv2, Linux's license, has declined by 24 percent.

This trend towards more permissive licensing is not, however, just the result of younger programmers switching to thinking of code as a means to an end for cloud services such as Software-as-a-Service (SaaS). Instead, it's been moving that way since 2004 according to a 2012 study by Donnie Berkholz, a Red Monk analyst.

Berkholz learned, using data from Ohloh, an open-source code research project now known as Open Hub, that "Since 2010, this trend has reached a point where permissive is more likely than copyleft [GPL] for a new open-source project."

I'm not sure that's wise, but this is 2014, not 1988. Many programs' functionality is now delivered as a service rather than from a program residing on your computer. What I do know, though, is that if you want some say in how your code will be used tomorrow, you still need to put it under some kind of license.
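As a rough illustration of what "no declared license" can mean in practice, here is a minimal Python sketch that looks for a license file at the top level of a project directory. The filename list is an assumption based on common conventions; it is not how Black Duck, the Software Freedom Law Center, or GitHub actually detect licenses, and `find_license_file` is a hypothetical helper.

```python
import tempfile
from pathlib import Path
from typing import Optional

# Common top-level license filenames (an assumption, not an official list).
LICENSE_FILENAMES = ("LICENSE", "LICENSE.txt", "LICENSE.md", "COPYING", "COPYING.txt")


def find_license_file(project_dir: str) -> Optional[Path]:
    """Return the first conventional license file found in project_dir, or None."""
    root = Path(project_dir)
    for name in LICENSE_FILENAMES:
        candidate = root / name
        if candidate.is_file():
            return candidate
    return None


# Small demonstration on a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    print(find_license_file(tmp))                      # nothing declared yet
    (Path(tmp) / "LICENSE").write_text("MIT License\n")
    print(find_license_file(tmp).name)                 # license file now present
```

A check like this only says whether a license file exists; it says nothing about which license it is or whether its terms suit the project.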

Yes, without any license, your code defaults to falling under copyright law. In that case, legally speaking no one can reproduce, distribute, or create derivative works from your work. You may or may not want that. In any case, that's only the theory. In practice you'd find defending your rights to be difficult.

You should also keep in mind that when you "publish" your code on GitHub, or any other "public" site, you're giving up some of your rights. Which ones? Well, it depends on the site's Terms of Service. On GitHub, for example, if you choose to make your project repositories public -- which is probably the case or why would you be on GitHub in the first place? -- then you've agreed to allow others to view and fork your repositories. Notice the word "fork?" Good luck defending your copyright.

Seriously, you can either figure out what you want to do with your code before you start exposing it to the world, or you can do it after it's become a problem. Me? I think figuring it out first is the smart play.

Friday, December 19. 2014

Bots Now Outnumber Humans on the Web

Via Wired

-----

Diogo Mónica once wrote a short computer script that gave him a secret weapon in the war for San Francisco dinner reservations.

This was early 2013. The script would periodically scan the popular online reservation service, OpenTable, and drop him an email anytime something interesting opened up—a choice Friday night spot at the House of Prime Rib, for example. But soon, Mónica noticed that he wasn’t getting the tables that had once been available.

By the time he’d check the reservation site, his previously open reservation would be booked. And this was happening crazy fast. Like in a matter of seconds. “It’s impossible for a human to do the three forms that are required to do this in under three seconds,” he told WIRED last year.

Mónica could draw only one conclusion: He’d been drawn into a bot war.
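A watcher script of the kind described above can be sketched in a few lines. This is only an illustration, not Mónica's actual code: the `fetch_slots` and `notify` callables, the `(restaurant, time)` slot shape, and the `rounds` parameter are all assumptions, and a real script would fetch from the reservation site, send an email, and sleep between polls.

```python
from typing import Callable, Iterable, Set, Tuple

# A slot is (restaurant, time). Hypothetical shape, for illustration only.
Slot = Tuple[str, str]


def new_openings(seen: Set[Slot], current: Iterable[Slot]) -> Set[Slot]:
    """Return the slots in `current` that were not present in `seen`."""
    return set(current) - seen


def watch(fetch_slots: Callable[[], Iterable[Slot]],
          notify: Callable[[Slot], None],
          rounds: int) -> None:
    """Poll `fetch_slots` `rounds` times; notify whenever a slot newly appears."""
    seen: Set[Slot] = set()
    for _ in range(rounds):
        current = set(fetch_slots())
        for slot in sorted(new_openings(seen, current)):
            notify(slot)
        # Remember only what is open right now, so a slot that closes
        # and later reopens triggers a fresh notification.
        seen = current
        # A real script would pause here (e.g. time.sleep) before polling again.
```

Comparing each poll against the previous snapshot is what keeps the script from sending the same alert on every pass.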

Everyone knows the story of how the world wide web made the internet accessible for everyone, but a lesser known story of the internet’s evolution is how automated code (aka bots) came to quietly take it over. Today, bots account for 56 percent of all website visits, says Marc Gaffan, CEO of Incapsula, a company that sells online security services. Incapsula recently ran an analysis of 20,000 websites to get a snapshot of part of the web, and on smaller websites, it found that bot traffic can run as high as 80 percent.

People use scripts to buy gear on eBay and, like Mónica, to snag the best reservations. Last month, the band Foo Fighters sold tickets for their upcoming tour at box offices only, an attempt to strike back against the bots used by online scalpers. “You should expect to see it on ticket sites, travel sites, dating sites,” Gaffan says. What’s more, a company like Google uses bots to index the entire web, and companies such as IFTTT and Slack give us ways to use bots for good, personalizing our internet and managing the daily informational deluge.

But, increasingly, a slice of these online bots are malicious—used to knock websites offline, flood comment sections with spam, or scrape sites and reuse their content without authorization. Gaffan says that about 20 percent of the Web’s traffic comes from these bots. That’s up 10 percent from last year.

Often, they’re running on hacked computers. And lately they’ve become more sophisticated. They are better at impersonating Google, or at running in real browsers on hacked computers. And they’ve made big leaps in breaking human-detecting captcha puzzles, Gaffan says.

“Essentially there’s been this evolution of bots, where we’ve seen it become easier and more prevalent over the past couple of years,” says Rami Essaid, CEO of Distil Networks, a company that sells bot-blocking software.

But despite the rise of these bad bots, there is some good news for the human race. The total percentage of bot-related web traffic is actually down this year from what it was in 2013. Back then it accounted for 60 percent of the traffic, 4 percentage points more than today.

Thursday, December 18. 2014

BitTorrent Opens Alpha For Maelstrom, Its New, Distributed, Torrent-Based Web Browser

Via TechCrunch

-----

BitTorrent, the peer-to-peer file sharing company, is today opening an alpha test for its latest stab at disrupting — or at least getting people to rethink — how users interact with each other and with content over the Internet. Project Maelstrom is BitTorrent’s take on the web browser: doing away with centralised servers, web content is instead shared through torrents on a distributed network.

BitTorrent years ago first made a name for itself as a P2P network for illicit file sharing, a service that was often used to share premium content for free at a time when it was hard to get legal content elsewhere. More recently, the company has been applying its knowledge of distributed architecture to tackle other modern file-sharing problems, producing services like Sync, to share large files with others; Bundle, for content makers to distribute and sell content; and the Bleep messaging service.

These have proven attractive to people for a number of reasons. For some, it’s about more efficient services — there is an argument to be made for P2P transfers of large files being faster and easier than those downloaded from the cloud — once you’ve downloaded the correct local client, that is (a hurdle in itself for some).

For others, it is about security. Your files never sit on any cloud, and instead stay locally even when they are shared.

This latter point is one that BitTorrent has been playing up a lot lately, in light of all the revelations around the NSA and what happens to our files when they are put onto cloud-based servers. (The long and short of it: they’re open to hacking, and they’re open to governments and others’ prying fingers.)

In the words of CEO Eric Klinker, Maelstrom is part of that line of thinking that using P2P can help online content run more smoothly.

“What if more of the web worked the way BitTorrent does?” he writes of how the company first conceived of the problem. “Project Maelstrom begins to answer that question with our first public release of a web browser that can power a new way for web content to be published, accessed and consumed. Truly an Internet powered by people, one that lowers barriers and denies gatekeepers their grip on our future.”

Easy enough to say, but also leaving the door open to a lot of questions.

For now, the picture you see above is the only one that BitTorrent has released to give you an idea of how Maelstrom might look. Part of the alpha involves not just getting people to sign up to use it, but getting people signed up to conceive of pages of content to actually use. “We are actively engaging with potential partners who would like to build for the distributed web,” a spokesperson says.

Nor is it clear what form the project will take commercially.

Asked about advertising — one of the ways that browsers monetise today — it is “too early to tell,” the spokesperson says. “Right now the team is focused on building the technology. We’ll be evaluating business models as we go, just as we did with Sync. But we treat web pages, along with distributed web pages the same way other browsers do. So in that sense they can contain any content they want.”

That being said, it won’t be much different from what we know today as “the web.”

“HTML on the distributed web is identical to HTML on the traditional web. The creation of websites will be the same, we’re just provided another means for distributing and publishing your content,” he adds.

In that sense, you could think of Maelstrom as a complement to what we know as web browsers today. “We also see the potential that there is an intermingling of HTTP and BitTorrent content across the web,” he says.

It sounds fairly radical to reimagine the entire server-based architecture of web browsing, but it comes at a time when we are seeing a lot of bumps and growing pains for businesses over more traditional services — beyond the reasons that consumers may have when they opt for P2P services.

BitTorrent argues that the whole net neutrality debate — where certain services that are data hungry like video service Netflix threaten to be throttled because of their strain on ISP networks — is one that could be avoided if those data-hungry services simply opted for different ways to distribute their files. Again, this highlights the idea of Maelstrom as a complement to what we use today.

“As a distributed web browser, Maelstrom can help relieve the burden put on the network,” BitTorrent says. “It can also help maintain a more neutral Internet as a gatekeeper would not be able to identify where such traffic is originating.”

Tuesday, December 16. 2014

We’ve Put a Worm’s Mind in a Lego Robot's Body

Via Smithsonian

-----

If the brain is a collection of electrical signals, then, if you could catalog all those signals digitally, you might be able to upload your brain into a computer, thus achieving digital immortality.

While the plausibility—and ethics—of this upload for humans can be debated, some people are forging ahead in the field of whole-brain emulation. There are massive efforts to map the connectome—all the connections in the brain—and to understand how we think. Simulating brains could lead us to better robots and artificial intelligence, but the first steps need to be simple.

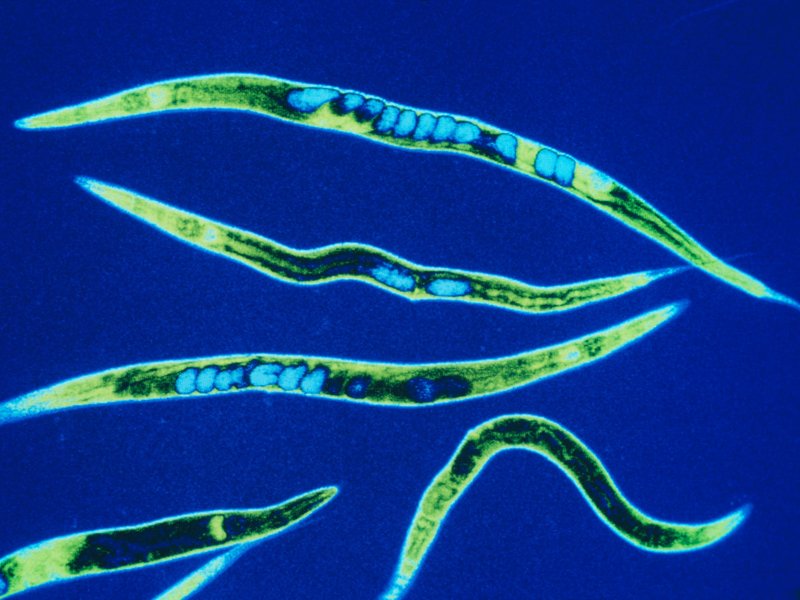

So, one group of scientists started with the roundworm Caenorhabditis elegans, a critter whose genes and simple nervous system we know intimately.

The OpenWorm project has mapped the connections between the worm’s 302 neurons and simulated them in software. (The project’s ultimate goal is to completely simulate C. elegans as a virtual organism.) Recently, they put that software program in a simple Lego robot.

The worm’s body parts and neural networks now have LegoBot equivalents: The worm’s nose neurons were replaced by a sonar sensor on the robot. The motor neurons running down both sides of the worm now correspond to motors on the left and right of the robot, explains Lucy Black for I Programmer. She writes:

---

It is claimed that the robot behaved in ways that are similar to observed C. elegans. Stimulation of the nose stopped forward motion. Touching the anterior and posterior touch sensors made the robot move forward and back accordingly. Stimulating the food sensor made the robot move forward.

---

Timothy Busbice, a founder of the OpenWorm project, posted a video of the Lego-Worm-Bot stopping and backing up.

The simulation isn’t exact—the program has some simplifications on the thresholds needed to trigger a "neuron" firing, for example. But the behavior is impressive considering that no instructions were programmed into this robot. All it has is a network of connections mimicking those in the brain of a worm.
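To make the idea of behavior emerging from connections plus firing thresholds concrete, here is a toy sketch. It is not OpenWorm's code or connectome: the neuron names, weights, and threshold are invented for illustration, and the real map covers 302 neurons rather than six.

```python
# Toy connectome: WEIGHTS[src][dst] is the strength of the connection src -> dst.
# Neuron names, weights, and the threshold are all made up for illustration.
WEIGHTS = {
    "nose_sensor": {"stop_inter": 1.0},
    "food_sensor": {"forward_inter": 1.0},
    "stop_inter": {"left_motor": -1.0, "right_motor": -1.0},  # inhibitory
    "forward_inter": {"left_motor": 1.0, "right_motor": 1.0},  # excitatory
}
THRESHOLD = 0.5


def step(active):
    """Advance one tick: a neuron fires if its summed input exceeds THRESHOLD."""
    totals = {}
    for src in active:
        for dst, weight in WEIGHTS.get(src, {}).items():
            totals[dst] = totals.get(dst, 0.0) + weight
    return {neuron for neuron, total in totals.items() if total > THRESHOLD}


def motors_after(stimulus, ticks=2):
    """Stimulate the given sensors and return the motors firing after `ticks`."""
    active = set(stimulus)
    for _ in range(ticks):
        active = step(active)
    return {neuron for neuron in active if neuron.endswith("_motor")}
```

In this toy network, stimulating only `food_sensor` fires both motors, while stimulating `nose_sensor` as well cancels the drive and leaves both motors silent. Nothing in the code says "stop when the nose is touched"; that outcome falls out of the weights.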

Of course, the goal of uploading our brains assumes that we aren’t already living in a computer simulation. Hear out the logic: Technologically advanced civilizations will eventually make simulations that are indistinguishable from reality. If that can happen, odds are it has. And if it has, there are probably billions of simulations making their own simulations. Work out that math, and "the odds are nearly infinity to one that we are all living in a computer simulation," writes Ed Grabianowski for io9.

Is your mind spinning yet?

Tuesday, December 02. 2014

Big Data Digest: Rise of the think-bots

Via PC World

-----

It turns out that a vital missing ingredient in the long-sought-after goal of getting machines to think like humans (artificial intelligence) has been lots and lots of data.

Last week, at the O’Reilly Strata + Hadoop World Conference in New York, Salesforce.com’s head of artificial intelligence, Beau Cronin, asserted that AI has gotten a shot in the arm from the big data movement. “Deep learning on its own, done in academia, doesn’t have the [same] impact as when it is brought into Google, scaled and built into a new product,” Cronin said.

In the week since Cronin’s talk, we saw a whole slew of companies—startups mostly—come out of stealth mode to offer new ways of analyzing big data, using machine learning, natural language recognition and other AI techniques that those researchers have been developing for decades.

One such startup, Cognitive Scale, applies IBM Watson-like learning capabilities to draw insights from vast amounts of what it calls “dark data,” buried either in the Web (Yelp reviews, online photos, discussion forums) or on the company network, such as employee and payroll files, noted KM World.

Cognitive Scale offers a set of APIs (application programming interfaces) that businesses can use to tap into cognitive-based capabilities designed to improve search and analysis jobs running on cloud services such as IBM’s Bluemix, detailed the Programmable Web.

Cognitive Scale was founded by Matt Sanchez, who headed up IBM’s Watson Labs, helping bring to market some of the first e-commerce applications based on the Jeopardy-winning Watson technology, pointed out CRN.

Sanchez, now chief technology officer for Cognitive Scale, is not the only Watson alumnus who has gone on to commercialize cognitive technologies.

Alert reader Gabrielle Sanchez pointed out that another Watson alum, engineer Pete Bouchard, recently joined the team of another cognitive computing startup, Zintera, as the chief innovation officer. Sanchez, who studied cognitive computing in college, found a demonstration of the company’s “deep learning” cognitive computing platform to be “pretty impressive.”

AI-based deep learning with big data was certainly on the mind of senior Google executives. This week the company snapped up two Oxford University technology spin-off companies that focus on deep learning, Dark Blue Labs and Vision Factory.

The teams will work on image recognition and natural language understanding, Sharon Gaudin reported in Computerworld.

Sumo Logic has found a way to apply machine learning to large amounts of machine data. An update to its analysis platform now allows the software to pinpoint causal relationships within sets of data, Inside Big Data concluded.

A company could, for instance, use the Sumo Logic cloud service to analyze log data to troubleshoot a faulty application.

While companies such as Splunk have long offered search engines for machine data, Sumo Logic moves that technology a step forward, the company claimed.

“The trouble with search is that you need to know what you are searching for. If you don’t know everything about your data, you can’t by definition, search for it. Machine learning became a fundamental part of how we uncover interesting patterns and anomalies in data,” explained Sumo Logic chief marketing officer Sanjay Sarathy, in an interview.

For instance, the company, which processes about 5 petabytes of customer data each day, can recognize similar queries across different users, and suggest possible queries and dashboards that others with similar setups have found useful.

“Crowd-sourcing intelligence around different infrastructure items is something you can only do as a native cloud service,” Sarathy said.

With Sumo Logic, an e-commerce company could ensure that each transaction conducted on its site takes no longer than three seconds to occur. If the response time is lengthier, then an administrator can pinpoint where the holdup is occurring in the transactional flow.
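The three-second check described above amounts to comparing a transaction's total time against a budget and then looking for the slowest step. A minimal sketch, assuming per-step timings are already available as a dictionary; the step names and the `find_holdup` helper are hypothetical and do not reflect Sumo Logic's actual API:

```python
from typing import Dict, Optional

BUDGET_SECONDS = 3.0  # the three-second target mentioned in the text


def find_holdup(step_seconds: Dict[str, float],
                budget: float = BUDGET_SECONDS) -> Optional[str]:
    """Return the slowest step if the transaction exceeds the budget, else None."""
    if sum(step_seconds.values()) <= budget:
        return None
    # The step with the largest duration is the first place to look.
    return max(step_seconds, key=step_seconds.get)
```

For example, a transaction timed as `{"cart": 0.4, "payment": 2.9, "confirm": 0.3}` runs over the budget and points to `"payment"`; one that finishes within three seconds returns `None`.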

One existing Sumo Logic customer, fashion retailer Tobi, plans to use the new capabilities to better understand how its customers interact with its website.

One-upping IBM on the name game is DataRPM, which crowned its own big data-crunching natural language query engine Sherlock (named after Sherlock Holmes who, after all, employed Watson to execute his menial tasks).

Sherlock is unique in that it can automatically create models of large data sets. Having a model of a data set can help users pull together information more quickly, because the model describes what the data is about, explained DataRPM CEO Sundeep Sanghavi.

DataRPM can analyze a staggeringly wide array of structured, semi-structured and unstructured data sources. “We’ll connect to anything and everything,” Sanghavi said.

The service can then look for ways that different data sets could be combined to provide more insight.

“We believe that data warehousing is where data goes to die. Big data is not just about size, but also about how many different sources of data you are processing, and how fast you can process that data,” Sanghavi said, in an interview.

For instance, Sherlock can pull together different sources of data and respond with a visualization to a query such as “What was our revenue for last year, based on geography?” The system can even suggest other possible queries as well.

Sherlock has a few advantages over Watson, Sanghavi claimed. The training period is not as long, and the software can be run on-premise, rather than as a cloud service from IBM, for those shops that want to keep their computations in-house. “We’re far more affordable than Watson,” Sanghavi said.

Initially, DataRPM is marketing to the finance, telecommunications, manufacturing, transportation and retail sectors.

One company that certainly does not think data warehousing is going to die is a recently unstealth’ed startup run by Bob Muglia, called Snowflake Computing.

Publicly launched this week, Snowflake aims “to do for the data warehouse what Salesforce did for CRM—transforming the product from a piece of infrastructure that has to be maintained by IT into a service operated entirely by the provider,” wrote Jon Gold at Network World.

Founded in 2012, the company brought in Muglia earlier this year to run the business. Muglia was the head of Microsoft’s server and tools division, and later, head of the software unit at Juniper Networks.

While Snowflake could offer its software as a product, it chooses to do so as a service, noted Timothy Prickett Morgan at Enterprise Tech.

“Sometime either this year or next year, we will see more data being created in the cloud than in an on-premises environment,” Muglia told Morgan. “Because the data is being created in the cloud, analysis of that data in the cloud is very appropriate.”
