By Computed·By

-----

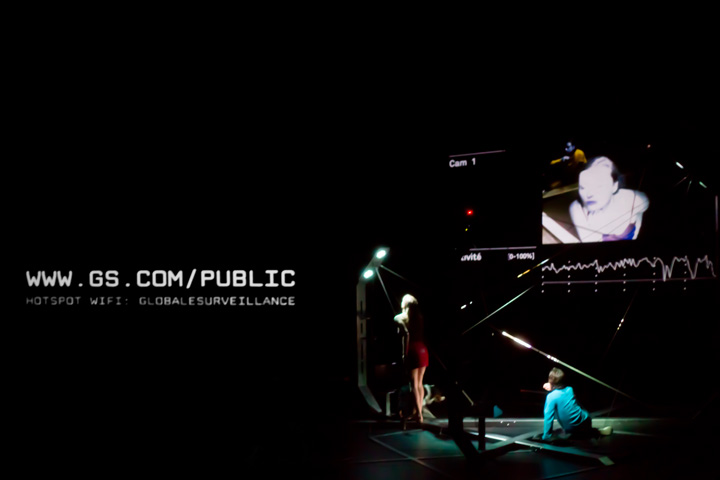

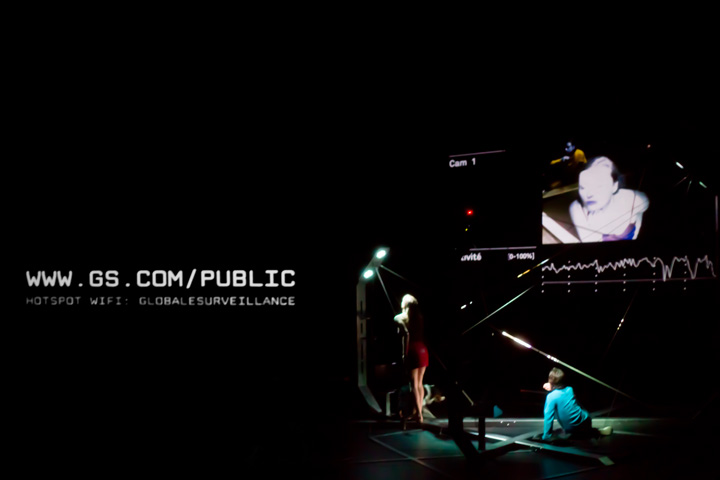

Paranoid Shelter is a recent installation / architectural device that fabric | ch finalized later in 2011 after a 6-month residency at the EPFL-ECAL Lab in Renens (Switzerland). It was realized with the support of Pro Helvetia, the OFC, the City of Lausanne and the State of Vaud.

It was initiated and first presented as sketches back in 2008 (!), in the context of a colloquium about surveillance at the Palais de Tokyo in Paris.

On the first technical drawings and sketches of the Paranoid Shelter project, the entire system looked like a (big) mess of wires, sensors and video cameras, all concentrated in a pretty tiny space where humans would have difficulty moving. The entire space is consciously organised around tracking methods/systems, the space being delimited by 3 [augmented] posts that host a set of sensors, video cameras and microphones. It includes networked [power over Ethernet] video cameras, microphones and a set of wireless ambient sensors (able to measure temperature, O2 and CO2 gas concentration, current atmospheric pressure, light, etc.).

Based on a real-time analysis of the main sensor hardware, the system is able to control DMX lights and two displays (one LCD screen and one projector), and to produce sound through a dynamically generated text-to-speech process.
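To illustrate what "controlling DMX lights" from an analysis result can mean, here is a minimal sketch, assuming a normalised activity level in [0, 1] drives the intensity of one light. DMX512 carries up to 512 channels per universe, each an 8-bit level (0-255); the helper names `activityToDmxLevel` and `setChannel` are hypothetical and not taken from fabric | ch's in-house libraries.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// One DMX512 universe: 512 channels, each an 8-bit level (0-255).
// Channels are numbered 1..512 on the wire; the array is 0-based.
using DmxUniverse = std::array<std::uint8_t, 512>;

// Hypothetical helper: convert a normalised activity level
// (0.0 = calm, 1.0 = maximum activity) into an 8-bit DMX level,
// clamping out-of-range input first.
std::uint8_t activityToDmxLevel(double activity) {
    double clamped = std::clamp(activity, 0.0, 1.0);
    return static_cast<std::uint8_t>(std::lround(clamped * 255.0));
}

// Hypothetical helper: write a level to a 1-based DMX channel.
void setChannel(DmxUniverse& universe, int channel, std::uint8_t level) {
    assert(channel >= 1 && channel <= 512);
    universe[static_cast<std::size_t>(channel - 1)] = level;
}
```

Sending the universe to the lights (through a USB-DMX interface or Art-Net, for example) is deliberately left out, since the text does not say which interface was used.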

All programs were developed using openFrameworks, enhanced by a set of dedicated in-house C++ libraries in order to capture the networked cameras' video streams, control any DMX-compatible piece of hardware and collect data from the wireless Libelium sensors. The sound analysis programs, the LCD display program and the main program are all connected to each other via a local network. The main program is in charge of collecting the other programs' data, performing the global analysis of the system's activity, recording the system's raw information to a database and controlling the system's [re]actions (lights, display).
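The text does not say which protocol links the programs. As a minimal sketch, assuming a hypothetical one-line text message of the form `<source> <key> <value>` (the network transport itself is left out), the collecting role of the main program could look like this; `Message`, `parseMessage` and `MainProgramState` are illustrative names only.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <sstream>
#include <string>
#include <utility>

// Hypothetical message sent by a satellite program (e.g. sound analysis)
// to the main program, as one text line: "<source> <key> <value>".
struct Message {
    std::string source;
    std::string key;
    double value;
};

// Parse one line; returns std::nullopt if the line is malformed.
std::optional<Message> parseMessage(const std::string& line) {
    std::istringstream in(line);
    Message m{};
    if (!(in >> m.source >> m.key >> m.value)) return std::nullopt;
    std::string extra;
    if (in >> extra) return std::nullopt;  // reject trailing tokens
    return m;
}

// The main program keeps the latest value reported for each (source, key);
// the global analysis and database recording would read from here.
class MainProgramState {
public:
    bool ingest(const std::string& line) {
        auto m = parseMessage(line);
        if (!m) return false;
        latest_[{m->source, m->key}] = m->value;
        return true;
    }

    std::optional<double> latest(const std::string& source,
                                 const std::string& key) const {
        auto it = latest_.find({source, key});
        if (it == latest_.end()) return std::nullopt;
        return it->second;
    }

private:
    std::map<std::pair<std::string, std::string>, double> latest_;
};
```

Keeping only the latest value per key keeps the main program's state small; the full history would go to the database rather than to memory.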

The overall system can act in an [autonomous] way, controlling the behavior of the entire installation, but it can also be remotely controlled when used on stage, in the context of a theater play.

Collecting all the sensors' flows is one of the basic tasks. Cameras are used to track movements, microphones measure sound activity and sensors collect a set of ambient parameters. Even if data capture consists of some basic network-based tasks, it quickly rises to a higher level of complexity when every data collection has to occur simultaneously, in real time, [without,with] a [limited,acceptable] delay. Most of the raw data analysis has to occur directly after data acquisition in order to minimize the time shift in the system's awareness of its space. This first level of data analysis mainly brings out frequency information, quantity of activity and 2D location tracking (from the point of view of each camera).

Every single piece of raw information is systematically recorded in a dedicated database: this keeps the system's memory footprint almost constant without losing any activity information. From time to time, when required, the system can access this recorded information in its post-analysis process, mainly to add a time-scale dimension to the global activity that occurred in the monitored space. Information isolated in time can only be interpreted in a rough and basic way, while the composition over time of the same information, or of a set of information, may bring additional meaning by verifying its consistency over time (of course, this can work in a negative or a positive way, by refuting or confirming a first-level deduced activity).

Another level of analysis can be reached by taking into account the spatial distribution of the sensors in the overall installation. The system is then able to compute 3D information, gaining an awareness of activities within the space it is monitoring. This generates a second, spatialised level of data analysis that increases the system's global understanding of the captured data.
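The text does not describe how the 3D information is computed. One standard technique, shown here as a sketch under assumptions, is to turn each camera's 2D tracking result into a viewing ray (which requires each camera's calibrated position and orientation on its post, assumed known here) and to take the midpoint of the shortest segment between two such rays. `Vec3`, `Ray` and `triangulateMidpoint` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

struct Vec3 {
    double x, y, z;
};

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double k, Vec3 v) { return {k * v.x, k * v.y, k * v.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// A viewing ray: camera centre plus direction towards the tracked target.
struct Ray {
    Vec3 origin;
    Vec3 dir;
};

// Estimate a 3D position from two cameras: find the closest points on the
// two (generally skew) lines and return their midpoint.
// Returns std::nullopt when the lines are (nearly) parallel.
std::optional<Vec3> triangulateMidpoint(const Ray& r1, const Ray& r2) {
    Vec3 w0 = r1.origin - r2.origin;
    double a = dot(r1.dir, r1.dir);
    double b = dot(r1.dir, r2.dir);
    double c = dot(r2.dir, r2.dir);
    double d = dot(r1.dir, w0);
    double e = dot(r2.dir, w0);
    assert(a > 0.0 && c > 0.0);  // directions must be non-zero
    double denom = a * c - b * b;
    if (denom <= 1e-12 * a * c) return std::nullopt;  // parallel lines
    double s = (b * e - c * d) / denom;  // parameter along line 1
    double t = (a * e - b * d) / denom;  // parameter along line 2
    Vec3 p1 = r1.origin + s * r1.dir;
    Vec3 p2 = r2.origin + t * r2.dir;
    return 0.5 * (p1 + p2);
}
```

The length of the segment between `p1` and `p2` is also a useful by-product: a large gap suggests that the two cameras are not tracking the same person, which is one way the spatial level could confirm or refute first-level deductions.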

Recorded activities are made available to the [audience,visitors] through a wifi access point. Networked cameras can be accessed in real time, giving humans the ability to see some of the system's [inputs]. Thus, network activity is also monitored as another sign of human presence: the system can then [detect] activity elsewhere than in its dedicated space.
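The text does not say how network activity is measured. A minimal sketch, assuming the system can observe a client address each time someone uses the access point or the camera streams, treats the number of distinct recently active clients as a presence signal. The class name and the window length are hypothetical.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <string>
#include <unordered_map>

// Hypothetical presence signal from network activity: remember when each
// client (identified by an address string) was last seen, and count how
// many were active within a sliding time window.
class NetworkPresence {
public:
    using Clock = std::chrono::steady_clock;

    explicit NetworkPresence(std::chrono::seconds window) : window_(window) {
        assert(window.count() > 0);
    }

    // Call whenever a request or packet from a client is observed.
    void observe(const std::string& client, Clock::time_point when) {
        lastSeen_[client] = when;
    }

    // Number of distinct clients seen within the window ending at `now`;
    // clients older than the window are forgotten.
    std::size_t activeClients(Clock::time_point now) {
        for (auto it = lastSeen_.begin(); it != lastSeen_.end();) {
            if (now - it->second > window_) {
                it = lastSeen_.erase(it);
            } else {
                ++it;
            }
        }
        return lastSeen_.size();
    }

private:
    std::chrono::seconds window_;
    std::unordered_map<std::string, Clock::time_point> lastSeen_;
};
```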

However numerous the collected data may be, the system faces a real problem when it comes to interpreting them without the benefit of a human brain. Events that are quite obvious to humans do not mean anything to computers and software. In order to avoid simulating artificial neural networks (which may still be a good option to explore), I decided to compute a limited set of parameters, all based on previously analysed data and computed only at a late stage, when the system may decide to react to perceived activities. These parameters define a kind of global [mood] of the system, based on which it will [decide] whether to be aggressive (from a human point of view), by making the global tracking activity [noticeable] to the humans evolving in the installation's space, by focusing the tracking sensors on a given area or by trying to enhance the analysis of some sensor's information, or whether to settle into a kind of silent mode.
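As a sketch of how a small set of already-analysed parameters could be folded into a single [mood] and then into one of the behaviours listed above, the example below uses a weighted sum and two thresholds. The parameter names, weights, thresholds and the ordering of the behaviours are all hypothetical choices, not the installation's actual rules.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical, already-analysed inputs, each normalised to [0, 1].
struct Parameters {
    double soundActivity;
    double movementActivity;
    double networkActivity;
    double trackingConfidence;  // how consistent the tracked location is
};

enum class Behaviour { Silent, FocusSensors, ShowTracking };

// Combine the parameters into a single [mood] value in [0, 1].
// The weights are arbitrary illustrative choices that sum to 1; the result
// is clamped to absorb floating-point rounding.
double computeMood(const Parameters& p) {
    double mood = 0.4 * p.movementActivity + 0.3 * p.soundActivity +
                  0.1 * p.networkActivity + 0.2 * p.trackingConfidence;
    return std::clamp(mood, 0.0, 1.0);
}

// Map the mood onto one of the behaviours described in the text,
// using hypothetical thresholds.
Behaviour decide(double mood) {
    assert(mood >= 0.0 && mood <= 1.0);
    if (mood >= 0.7) return Behaviour::ShowTracking;  // make tracking noticeable
    if (mood >= 0.3) return Behaviour::FocusSensors;  // focus on a given area
    return Behaviour::Silent;                         // settle into silent mode
}
```

Keeping the parameter set this small makes every decision traceable, which is the trade-off the text makes against a neural network approach.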

Moreover, the evolution of these parameters is also studied over time, making the [mood] evolve in a human way, increasing and decreasing [analogically]. The system's [mood] may be wrong or [unjustified,weird] from a human point of view, but that is where [multi-dimensional] software becomes interesting. Beyond a certain complexity, once computation layers are added on top of each other, having written every single line of code does not allow the programmer to predict precisely what the system's next [re]action will be.
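The text does not specify how the [mood] is made to rise and fall [analogically]. One simple technique with that behaviour, sketched here as an assumption, is a first-order low-pass filter (an exponential moving average driven by elapsed time): the mood drifts towards each newly computed value instead of jumping to it. `SmoothedMood` and its time constant are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical smoothing of the [mood]: a first-order low-pass filter whose
// smoothing factor depends on the time elapsed between updates, so that
// irregular update rates still give the same dynamics.
class SmoothedMood {
public:
    // timeConstant: seconds needed to cover about 63% of a step change.
    explicit SmoothedMood(double timeConstant, double initial = 0.0)
        : tau_(timeConstant), value_(initial) {
        assert(timeConstant > 0.0);
    }

    // Move towards `target` given the time elapsed since the last update.
    double update(double target, double dtSeconds) {
        assert(dtSeconds >= 0.0);
        double alpha = 1.0 - std::exp(-dtSeconds / tau_);
        value_ += alpha * (target - value_);
        return value_;
    }

    double value() const { return value_; }

private:
    double tau_;
    double value_;
};
```

A longer time constant gives a more "stubborn" mood; using different constants for rising and falling values would be an easy variation.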

Here we reach the limitation of monitoring systems, which is obviously [interpretation,comprehension]. As long as an automatic system cannot correctly [understand] data, humans will need to be in the loop, making all these monitoring systems quite useless [as expert systems], except for producing an enormous quantity of data that still needs to be post-analysed by a human brain. As the system produces a large set of heterogeneous data, a set of rules may suggest some sort of data correlation to the system. These rules should not be too [tight,precise], in order to avoid producing obvious interpretations, while keeping them slightly [out of focus] may allow [smart,astonishing] conclusions to be produced. So there is room here for additional implementation of the data analysis processes, which could still completely change the way the entire installation [can,may] behave.
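The text leaves these rules open. As one possible reading, assuming a rule compares two recorded series over the same time window, here is a sketch that uses the Pearson correlation coefficient with a deliberately low threshold, so the rule only *suggests* a relation rather than asserting one. The function names and the default threshold are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Pearson correlation coefficient of two equally long series.
// Returns 0 when either series is constant (correlation undefined).
double pearson(const std::vector<double>& x, const std::vector<double>& y) {
    assert(x.size() == y.size() && x.size() >= 2);
    const double n = static_cast<double>(x.size());
    double mx = 0.0, my = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        mx += x[i];
        my += y[i];
    }
    mx /= n;
    my /= n;
    double sxy = 0.0, sxx = 0.0, syy = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        double dx = x[i] - mx;
        double dy = y[i] - my;
        sxy += dx * dy;
        sxx += dx * dx;
        syy += dy * dy;
    }
    if (sxx == 0.0 || syy == 0.0) return 0.0;
    return sxy / std::sqrt(sxx * syy);
}

// A deliberately loose rule: two heterogeneous signals (e.g. CO2 level and
// movement activity) are only suggested as related when |r| reaches a low,
// hypothetical threshold, leaving room for unexpected conclusions.
bool suggestsCorrelation(const std::vector<double>& a,
                         const std::vector<double>& b,
                         double looseThreshold = 0.5) {
    return std::fabs(pearson(a, b)) >= looseThreshold;
}
```

Lowering `looseThreshold` makes the rule more [out of focus]; raising it towards 1 makes it [tight], producing fewer and more obvious suggestions.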