Entries tagged as network

Monday, February 02. 2015

ConnectX wants to put server farms in space

Via Geek

-----

The next generation of cloud servers might be deployed where the clouds can be made of alcohol and cosmic dust: in space. That’s what ConnectX wants to do with their new data visualization platform.

Why space? It’s not as though there isn’t room to set up servers here on Earth, what with Germans willing to give up space in their utility rooms in exchange for a bit of ambient heat and malls now leasing empty storefronts to service providers. But there are certain advantages.

The desire to install servers where there’s abundant, free cooling makes plenty of sense. Down here on Earth, that’s what’s driven companies like Facebook to set up shop in Scandinavia near the edge of the Arctic Circle. Space gets a whole lot colder than the Arctic, so from that standpoint the ConnectX plan holds up. There’s also virtually no humidity in space, and humidity can wreak havoc on computers.

They also believe that the zero-g environment would do wonders for the lifespan of the hard drives in their servers, since it could reduce the resistance they encounter while spinning. That’s the same reason Western Digital started filling hard drives with helium.

But what about data transmission? How does ConnectX plan on moving the bits back and forth between their orbital servers and networks back on the ground? Though something similar to NASA’s Lunar Laser Communication Demonstration — which beamed data to the moon 4,800 times faster than any RF system ever managed — seems like a decent option, they’re leaning on RF.

Mind you, it’s a fairly complex setup. ConnectX says they’re polishing a system that “twists” signals to reap massive transmission gains. A similar system demonstrated last year managed to push data over radio waves at a staggering 32 Gbps, around 30 times faster than LTE.

So ConnectX seems to have that sorted. The only real question is the cost of deployment. Can the potential reduction in long-term maintenance costs really offset the massive expense of actually getting their servers into orbit? And what about upgrading capacity? It’s certainly not going to be nearly as fast, easy, or cheap as it is to do on Earth. That’s up to ConnectX to figure out, and they seem confident that they can make it work.

Saturday, November 29. 2014

NSA partners with Apache to release open-source data traffic program

Via zdnet

-----

Many of you probably think that the National Security Agency (NSA) and open-source software get along like a house on fire. That's to say, flaming destruction. You would be wrong.

In partnership with the Apache Software Foundation, the NSA announced on Tuesday that it is releasing the source code for Niagarafiles (Nifi). The spy agency said that Nifi "automates data flows among multiple computer networks, even when data formats and protocols differ".

Details on how Nifi does this are scant at this point, while the ASF continues to set up the site where Nifi's code will reside.

In a statement, Nifi's lead developer Joseph L Witt said the software "provides a way to prioritize data flows more effectively and get rid of artificial delays in identifying and transmitting critical information".

The NSA is making this move because, according to the director of the NSA's Technology Transfer Program (TTP) Linda L Burger, the agency's research projects "often have broad, commercial applications".

"We use open-source releases to move technology from the lab to the marketplace, making state-of-the-art technology more widely available and aiming to accelerate U.S. economic growth," she added.

The NSA has long worked hand-in-glove with open-source projects. Indeed, Security-Enhanced Linux (SELinux), which is used for top-level security in all enterprise Linux distributions — Red Hat Enterprise Linux, SUSE Linux Enterprise Server, and Debian Linux included — began as an NSA project.

More recently, the NSA created Accumulo, a NoSQL database store that's now supervised by the ASF.

More NSA technologies are expected to be open sourced soon. After all, as the NSA pointed out: "Global reviews and critiques that stem from open-source releases can broaden a technology's applications for the US private sector and for the good of the nation at large."

Wednesday, November 05. 2014

Researchers bridge air gap by turning monitors into FM radios

Via ars technica

-----

A two-stage attack could allow spies to sneak secrets out of the most sensitive buildings, even when the targeted computer system is not connected to any network, researchers from Ben-Gurion University of the Negev in Israel stated in an academic paper describing the refinement of an existing attack.

The technique, called AirHopper, assumes that an attacker has already compromised the targeted system and desires to occasionally sneak out sensitive or classified data. Known as exfiltration, such occasional communication is difficult to maintain, because government technologists frequently separate the most sensitive systems from the public Internet for security. Known as an air gap, such a defensive measure makes it much more difficult for attackers to compromise systems or communicate with infected systems.

Yet, by using a program to create a radio signal using a computer’s video card—a technique known for more than a decade—and a smartphone capable of receiving FM signals, an attacker could collect data from air-gapped devices, a group of four researchers wrote in a paper presented last week at the IEEE 9th International Conference on Malicious and Unwanted Software (MALCON).

“Such technique can be used potentially by people and organizations with malicious intentions and we want to start a discussion on how to mitigate this newly presented risk,” Dudu Mimran, chief technology officer for the cyber security labs at Ben-Gurion University, said in a statement.

For the most part, the attack is a refinement of existing techniques. Intelligence agencies have long known—since at least 1985—that electromagnetic signals could be intercepted from computer monitors to reconstitute the information being displayed. Open-source projects have turned monitors into radio-frequency transmitters. And, from the information leaked by former contractor Edward Snowden, the National Security Agency appears to use radio-frequency devices implanted in various computer-system components to transmit information and exfiltrate data.

AirHopper uses off-the-shelf components, however, to achieve the same result. By using a smartphone with an FM receiver, the exfiltration technique can grab data from nearby systems and send it to a waiting attacker once the smartphone is again connected to a public network.

“This is the first time that a mobile phone is considered in an attack model as the intended receiver of maliciously crafted radio signals emitted from the screen of the isolated computer,” the group said in its statement on the research.

The technique works at a distance of 1 to 7 meters, but can only send data at very slow rates—less than 60 bytes per second, according to the researchers.
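To put the quoted rate in perspective, here is a back-of-the-envelope sketch. It assumes the 60 bytes per second upper bound reported by the researchers; the payload sizes are hypothetical examples chosen only for illustration and do not come from the paper.

```python
# Rough transfer-time estimate at the upper-bound rate quoted in the article.
# The rate comes from the text; the payload sizes are hypothetical examples.

RATE_BYTES_PER_SEC = 60


def transfer_seconds(payload_bytes: int, rate: float = RATE_BYTES_PER_SEC) -> float:
    """Return the minimum time in seconds needed to send payload_bytes."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return payload_bytes / rate


# Hypothetical payloads: a short key, a small document, a 1 MB file.
EXAMPLES = {"32-byte key": 32, "4 KB document": 4096, "1 MB file": 1024 * 1024}

for label, size in EXAMPLES.items():
    print(f"{label}: at least {transfer_seconds(size):.1f} s")
```

At this rate a short key or password leaves in under a second, while a megabyte would need close to five hours, which fits the article's framing of occasional, small leaks.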

Thursday, October 02. 2014

A Dating Site for Algorithms

-----

A startup called Algorithmia has a new twist on online matchmaking. Its website is a place for businesses with piles of data to find researchers with a dreamboat algorithm that could extract insights–and profits–from it all.

The aim is to make better use of the many algorithms that are developed in academia but then languish after being published in research papers, says cofounder Diego Oppenheimer. Many have the potential to help companies sort through and make sense of the data they collect from customers or on the Web at large. If Algorithmia makes a fruitful match, a researcher is paid a fee for the algorithm’s use, and the matchmaker takes a small cut. The site is currently in a private beta test with users including academics, students, and some businesses, but Oppenheimer says it already has some paying customers and should open to more users in a public test by the end of the year.

“Algorithms solve a problem. So when you have a collection of algorithms, you essentially have a collection of problem-solving things,” says Oppenheimer, who previously worked on data-analysis features for the Excel team at Microsoft.

Oppenheimer and cofounder Kenny Daniel, a former graduate student at USC who studied artificial intelligence, began working on the site full time late last year. The company raised $2.4 million in seed funding earlier this month from Madrona Venture Group and others, including angel investor Oren Etzioni, the CEO of the Allen Institute for Artificial Intelligence and a computer science professor at the University of Washington.

Etzioni says that many good ideas are essentially wasted in papers presented at computer science conferences and in journals. “Most of them have an algorithm and software associated with them, and the problem is very few people will find them and almost nobody will use them,” he says.

One reason is that academic papers are written for other academics, so people from industry can’t easily discover their ideas, says Etzioni. Even if a company does find an idea it likes, it takes time and money to interpret the academic write-up and turn it into something testable.

To change this, Algorithmia requires algorithms submitted to its site to use a standardized application programming interface that makes them easier to use and compare. Oppenheimer says some of the algorithms currently looking for love could be used for machine learning, extracting meaning from text, and planning routes within things like maps and video games.
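The value of a standardized interface can be illustrated with a minimal sketch. This is not Algorithmia's actual API, which the article does not describe; the `register` and `call` helpers and the two toy algorithms are hypothetical names invented for this example. The point is only that when every algorithm accepts one input and returns one output through the same entry point, callers can swap and compare them without reading each author's code.

```python
# Hypothetical sketch of a uniform calling convention for algorithms.
# None of these names come from Algorithmia; they are assumptions.

from typing import Any, Callable, Dict

Algorithm = Callable[[Any], Any]
_REGISTRY: Dict[str, Algorithm] = {}


def register(name: str) -> Callable[[Algorithm], Algorithm]:
    """Decorator that publishes a function under a shared name."""
    def decorator(func: Algorithm) -> Algorithm:
        _REGISTRY[name] = func
        return func
    return decorator


def call(name: str, data: Any) -> Any:
    """Invoke any registered algorithm through the same entry point."""
    if name not in _REGISTRY:
        raise KeyError(f"unknown algorithm: {name}")
    return _REGISTRY[name](data)


@register("word_count")
def word_count(text: str) -> int:
    # Count whitespace-separated tokens.
    return len(text.split())


@register("longest_word")
def longest_word(text: str) -> str:
    # Return the first token of maximal length.
    return max(text.split(), key=len)
```

A caller would then write `call("word_count", some_text)` or `call("longest_word", some_text)` without caring who wrote either one.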

Early users of the site have found algorithms to do jobs such as extracting data from receipts so they can be automatically categorized. Over time the company expects around 10 percent of users to contribute their own algorithms. Developers can decide whether they want to offer their algorithms free or set a price.

All algorithms on Algorithmia’s platform are live, Oppenheimer says, so users can immediately use them, see results, and try out other algorithms at the same time.

The site lets users vote and comment on the utility of different algorithms and shows how many times each has been used. Algorithmia encourages developers to let others see the code behind their algorithms so they can spot errors or ways to improve on their efficiency.

One potential challenge is that it’s not always clear who owns the intellectual property for an algorithm developed by a professor or graduate student at a university. Oppenheimer says it varies from school to school, though he notes that several make theirs open source. Algorithmia itself takes no ownership stake in the algorithms posted on the site.

Eventually, Etzioni believes, Algorithmia can go further than just matching up buyers and sellers as its collection of algorithms grows. He envisions it leading to a new, faster way to compose software, in which developers join together many different algorithms from the selection on offer.

Friday, September 05. 2014

The Internet of Things is here and there -- but not everywhere yet

Via PCWorld

-----

The Internet of Things is still too hard. Even some of its biggest backers say so.

For all the long-term optimism at the M2M Evolution conference this week in Las Vegas, many vendors and analysts are starkly realistic about how far the vaunted set of technologies for connected objects still has to go. IoT is already saving money for some enterprises and boosting revenue for others, but it hasn’t hit the mainstream yet. That’s partly because it’s too complicated to deploy, some say.

For now, implementations, market growth and standards are mostly concentrated in specific sectors, according to several participants at the conference who would love to see IoT span the world.

Cisco Systems has estimated IoT will generate $14.4 trillion in economic value between last year and 2022. But Kevin Shatzkamer, a distinguished systems architect at Cisco, called IoT a misnomer, for now.

“I think we’re pretty far from envisioning this as an Internet,” Shatzkamer said. “Today, what we have is lots of sets of intranets.” Within enterprises, it’s mostly individual business units deploying IoT, in a pattern that echoes the adoption of cloud computing, he said.

In the past, most of the networked machines in factories, energy grids and other settings have been linked using custom-built, often local networks based on proprietary technologies. IoT links those connected machines to the Internet and lets organizations combine those data streams with others. It’s also expected to foster an industry that’s more like the Internet, with horizontal layers of technology and multivendor ecosystems of products.

What’s holding back the Internet of Things

The good news is that cities, utilities, and companies are getting more familiar with IoT and looking to use it. The less good news is that they’re talking about limited IoT rollouts for specific purposes.

“You can’t sell a platform, because a platform doesn’t solve a problem. A vertical solution solves a problem,” Shatzkamer said. “We’re stuck at this impasse of working toward the horizontal while building the vertical.”

“We’re no longer able to just go in and sort of bluff our way through a technology discussion of what’s possible,” said Rick Lisa, Intel’s group sales director for Global M2M. “They want to know what you can do for me today that solves a problem.”

One of the most cited examples of IoT’s potential is the so-called connected city, where myriad sensors and cameras will track the movement of people and resources and generate data to make everything run more efficiently and openly. But now, the key is to get one municipal project up and running to prove it can be done, Lisa said.

ThroughTek, based in China, used a connected fan to demonstrate an Internet of Things device management system at the M2M Evolution conference in Las Vegas this week.

The conference drew stories of many successful projects: A system for tracking construction gear has caught numerous workers on camera walking off with equipment and led to prosecutions. Sensors in taxis detect unsafe driving maneuvers and alert the driver with a tone and a seat vibration, then report it to the taxi company. Major League Baseball is collecting gigabytes of data about every moment in a game, providing more information for fans and teams.

But for the mass market of small and medium-size enterprises that don’t have the resources to do a lot of custom development, even targeted IoT rollouts are too daunting, said analyst James Brehm, founder of James Brehm & Associates.

There are software platforms that pave over some of the complexity of making various devices and applications talk to each other, such as the Omega DevCloud, which RacoWireless introduced on Tuesday. The DevCloud lets developers write applications in the language they know and make those apps work on almost any type of device in the field, RacoWireless said. Thingworx, Xively and Gemalto also offer software platforms that do some of the work for users. But the various platforms on offer from IoT specialist companies are still too fragmented for most customers, Brehm said. There are too many types of platforms—for device activation, device management, application development, and more. “The solutions are too complex.”

He thinks that’s holding back the industry’s growth. Though the past few years have seen rapid adoption in certain industries in certain countries, sometimes promoted by governments—energy in the U.K., transportation in Brazil, security cameras in China—the IoT industry as a whole is only growing by about 35 percent per year, Brehm estimates. That’s a healthy pace, but not the steep “hockey stick” growth that has made other Internet-driven technologies ubiquitous, he said.

What lies ahead

Brehm thinks IoT is in a period where customers are waiting for more complete toolkits to implement it—essentially off-the-shelf products—and the industry hasn’t consolidated enough to deliver them. More companies have to merge, and it’s not clear when that will happen, he said.

“I thought we’d be out of it by now,” Brehm said. What’s hard about consolidation is partly what’s hard about adoption, in that IoT is a complex set of technologies, he said.

And don’t count on industry standards to simplify everything. IoT’s scope is so broad that there’s no way one standard could define any part of it, analysts said. The industry is evolving too quickly for traditional standards processes, which are often mired in industry politics, to keep up, according to Andy Castonguay, an analyst at IoT research firm Machina.

Instead, individual industries will set their own standards while software platforms such as Omega DevCloud help to solve the broader fragmentation, Castonguay believes. Even the Industrial Internet Consortium, formed earlier this year to bring some coherence to IoT for conservative industries such as energy and aviation, plans to work with existing standards from specific industries rather than write its own.

Ryan Martin, an analyst at 451 Research, compared IoT standards to human languages.

“I’d be hard pressed to say we are going to have one universal language that everyone in the world can speak,” and even if there were one, most people would also speak a more local language, Martin said.

Google flushes out users of old browsers by serving up CLUNKY, AGED version of search

Via The Register

-----

Google is attempting to shunt users away from old browsers by intentionally serving up a stale version of the ad giant's search homepage to those holdouts.

The tactic appears to be falling in line with Mountain View's policy on its other Google properties, such as Gmail, which the company declines to fully support on aged browsers.

However, it was claimed on Friday in a Google discussion thread that the multinational had unceremoniously dumped a past-its-sell-by-date version of the Larry Page-run firm's search homepage on those users who have declined to upgrade their Opera and Safari browsers.

A user with the moniker DJSigma wrote on the forum:

A few minutes ago, Google's homepage reverted to the old version for me. I'm using Opera 12.17. If I search for something, the results are shown with the current Google look, but the homepage itself is the old look with the black bar across the top. It seems to affect only the Google homepage and image search. If I click on "News", for instance, it's fine.

I've tried clearing cookies and deleting the browser cache/persistent storage. I've tried disabling all extensions. I've tried masking the browser as IE and Firefox. It doesn't matter whether I'm signed in or signed out. Nothing works. Please fix this!

In a later post, DJSigma added that there seemed to be a glitch on Google search.

If I go to the Google homepage, I get served the old version of the site. If I search for something, the results show up in the current look. However, if I then try and type something into the search box again, Google search doesn't work at all. I have to go back to the homepage every time to do a new search.

The Opera user then said that the problem appeared to be "intermittent". Others flagged up similar issues on the Google forum and said they hoped it was just a bug.

While someone going by the name MadFranko008 added:

Phew ... thought it was just me and I'd been hacked or something ... Same problem here everything "Google" has reverted back to the old style of several years ago.

Tested on 3 different computers and different OS's & browsers ... All have the same result, everything google has gone back to the old style of several years ago and there's no way to change it ... Even the copyright has reverted back to 2013!!!

Some Safari 5.1.x and Opera 12.x netizens were able to fudge the system by customising their browser's user agent. But others continued to complain about Google's "clunky", old search homepage.

A Google employee, meanwhile, said that the tactic was deliberate in a move to flush out stick-in-the-mud types who insisted on using older versions of browsers.

"Thanks for the reports. I want to assure you this isn't a bug, it's working as intended," said a Google worker going by the name nealem. She added:

We’re continually making improvements to Search, so we can only provide limited support for some outdated browsers. We encourage everyone to make the free upgrade to modern browsers - they’re more secure and provide a better web experience overall.

In a separate thread, as spotted by a Reg reader who brought this sorry affair to our attention, user MadFranko008 was able to show that even modern browsers - including the current version of Chrome - were apparently spitting out glitches on Apple Mac computers.

Google then appeared to have resolved the search "bug" spotted in Chrome.

Wednesday, July 16. 2014

The Shadow Internet That’s 100 Times Faster Than Google Fiber

Via Wired

-----

Illustration: dzima1/Getty

When Google chief financial officer Patrick Pichette said the tech giant might bring 10 gigabits per second internet connections to American homes, it seemed like science fiction. That’s about 1,000 times faster than today’s home connections. But for NASA, it’s downright slow.

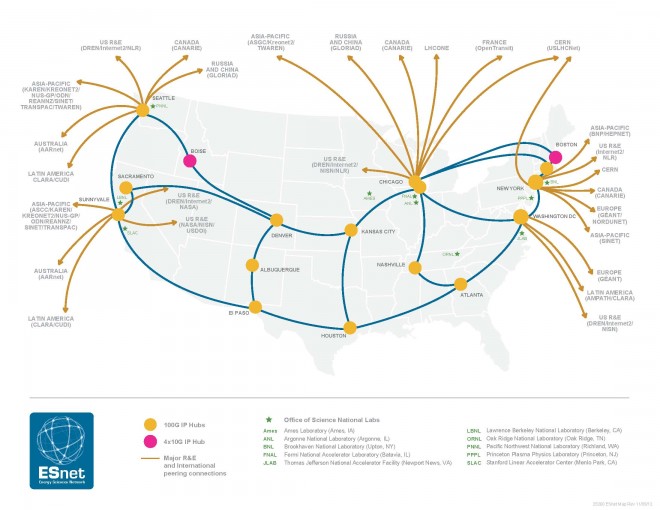

While the rest of us send data across the public internet, the space agency uses a shadow network called ESnet, short for Energy Sciences Network, a set of private pipes that has demonstrated cross-country data transfers of 91 gigabits per second, the fastest of its type ever reported.

NASA isn’t going to bring these speeds to homes, but it is using this super-fast networking technology to explore the next wave of computing applications. ESnet, which is run by the U.S. Department of Energy, is an important tool for researchers who deal in massive amounts of data generated by projects such as the Large Hadron Collider and the Human Genome Project. Rather than sending hard disks back and forth through the mail, they can trade data via the ultra-fast network. “Our vision for the world is that scientific discovery shouldn’t be constrained by geography,” says ESnet director Gregory Bell.

In making its network as fast as it can possibly be, ESnet and researchers at organizations like NASA are field testing networking technologies that may eventually find their way into the commercial internet. In short, ESnet is a window into what our computing world will eventually look like.

The Other Net

The first nationwide computer research network was the Defense Department’s ARPAnet, which evolved into the modern internet. But it wasn’t the last network of its kind. In 1976, the Department of Energy sponsored the creation of the Magnetic Fusion Energy Network to connect what is today the National Energy Research Scientific Computing Center with other research laboratories. Then the agency created a second network in 1980 called the High Energy Physics Network to connect particle physics researchers at national labs. As networking became more important, agency chiefs realized it didn’t make sense to maintain multiple networks and merged the two into one: ESnet.

The nature of the network changes with the times. In the early days it ran on land lines and satellite links. Today it uses fiber optic lines, spanning the DOE’s 17 national laboratories and many other sites, such as university research labs. Since 2010, ESnet and Internet2, a non-profit international network built in 1995 for researchers after the internet was commercialized, have been leasing “dark fiber,” the excess network capacity built up by commercial internet providers during the late 1990s internet bubble.

An Internet Fast Lane

In November, using this network, NASA’s High End Computer Networking team achieved its 91 gigabit transfer between Denver and NASA Goddard Space Flight Center in Greenbelt, Maryland. It was the fastest end-to-end data transfer ever conducted under “real world” conditions.

ESnet has long been capable of 100 gigabit transfers, at least in theory. Network equipment companies have been offering 100 gigabit switches since 2010. But in practice, long-distance transfers were much slower. That’s because data doesn’t travel through the internet in a straight line. It’s less like a super highway and more like an interstate highway system. If you wanted to drive from San Francisco to New York, you’d pass through multiple cities along the way as you transferred between different stretches of highway. Likewise, to send a file from San Francisco to New York on the internet—or over ESnet—the data will flow through hardware housed in cities across the country.

A map of ESnet’s connected sites. Image: Courtesy of ESnet

NASA did a 98 gigabit transfer between Goddard and the University of Utah over ESnet in 2012. And Alcatel-Lucent and BT obliterated that record earlier this year with a 1.4 terabit connection between London and Ipswich. But in both cases, the two locations had a direct connection, something you rarely see in real world connections.

On the internet and ESnet, every stop along the way creates the potential for a bottleneck, and every piece of gear must be ready to handle full 100 gigabit speeds. In November, the team finally made it work. “This demonstration was about using commercial, off-the-shelf technology and being able to sustain the transfer of a large data network,” says Tony Celeste, a sales director at Brocade, the company that manufactured the equipment used in the record-breaking test.
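The bottleneck argument above can be made concrete with a short sketch. It assumes the simplest possible model, in which the best-case end-to-end rate equals the capacity of the slowest hop; the hop names and capacities are hypothetical and do not describe ESnet's real topology.

```python
# End-to-end throughput over a multi-hop path is capped by the slowest hop.
# Hop names and capacities are hypothetical, not ESnet's actual equipment.

from typing import Dict


def path_throughput_gbps(hop_capacity_gbps: Dict[str, float]) -> float:
    """Return the best-case end-to-end rate: the minimum capacity on the path."""
    if not hop_capacity_gbps:
        raise ValueError("path must contain at least one hop")
    return min(hop_capacity_gbps.values())


def bottleneck(hop_capacity_gbps: Dict[str, float]) -> str:
    """Return the name of the hop that limits the whole path."""
    if not hop_capacity_gbps:
        raise ValueError("path must contain at least one hop")
    return min(hop_capacity_gbps, key=hop_capacity_gbps.get)


# A hypothetical cross-country path with one under-provisioned device.
example_path = {
    "source switch": 100.0,
    "regional router": 100.0,
    "older midpoint router": 40.0,
    "destination switch": 100.0,
}

print(bottleneck(example_path), path_throughput_gbps(example_path))
```

In this model a single 40 gigabit device drags the whole path down to 40 gigabits per second, which is why every piece of gear along the route has to sustain the full rate.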

Experiments for the Future

Meanwhile, the network is advancing the state of the art in other ways. Researchers have used it to explore virtual network circuits called “OSCARS,” which can be used to create complex networks without complex hardware changes. And they’re working on what are known as network “DMZs,” which can achieve unusually fast speeds by handling security without traditional network firewalls.

These solutions are designed specifically for networks in which a small number of very large transfers take place–as opposed to the commercial internet where lots of small transfers take place. But there’s still plenty for commercial internet companies to learn from ESnet. Telecommunications company XO Communications already has a 100 gigabit backbone, and we can expect more companies to follow suit.

Although we won’t see 10-gigabit connections, let alone 100 gigabit connections, at home any time soon, higher capacity internet backbones will mean less congestion as more and more people stream high-definition video and download ever-larger files. And ESnet isn’t stopping there. Bell says the organization is already working on a 400 gigabit network, and the long-term goal is a terabit per second network, which is about 100,000 times faster than today’s home connections. Now that sounds like science fiction.
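The speed comparisons in this article can be cross-checked with simple arithmetic. The sketch below takes two ratios from the text at face value: 10 gigabits per second being about 1,000 times today's home connections, and the long-term goal being about 100,000 times that same baseline. The variable names are illustrative, and the baseline is only what those ratios imply, not a measured figure.

```python
# Cross-check of the speed ratios quoted in the article.
# All inputs are the article's own round numbers; nothing here is measured.

MBPS = 1_000_000
GBPS = 1_000 * MBPS
TBPS = 1_000 * GBPS

google_goal_bps = 10 * GBPS          # "10 gigabits per second"
record_transfer_bps = 91 * GBPS      # the 91 gigabit cross-country transfer

# "about 1,000 times faster than today's home connections"
implied_home_bps = google_goal_bps // 1_000

# "about 100,000 times faster than today's home connections"
long_term_goal_bps = implied_home_bps * 100_000

print(f"implied home connection: {implied_home_bps / MBPS:.0f} Mbps")
print(f"long-term goal: {long_term_goal_bps / TBPS:.0f} Tbps")
print(f"91 Gbps vs home: {record_transfer_bps // implied_home_bps}x")
```

With these figures the implied home connection is 10 megabits per second, and the long-term target works out to 1 terabit per second.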

Update 13:40 EST 06/17/14: This story has been updated to make it clear that ESnet is run by the Department of Energy.

Update 4:40 PM EST 06/17/14: This story has been updated to avoid confusion between ESnet’s production network and its more experimental test bed network.

Tuesday, July 08. 2014

LaMetric Is A Smart And Hackable Ticker Display

Via TechCrunch

-----

Ever since covering Fliike, a beautifully-designed physical ‘Like’ counter for local businesses, I’ve been thinking about how the idea could be extended, with a fully-programmable, but simple, ticker-style Internet-connected display.

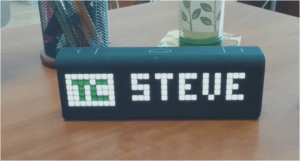

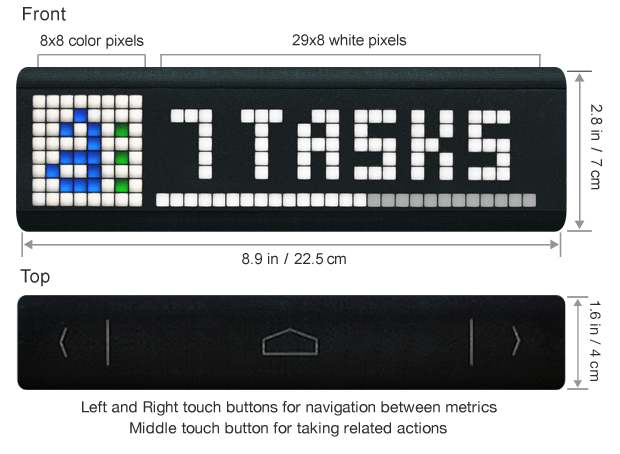

A few products along those lines do already exist, but I’ve yet to find anything that quite matches what I had in mind. That is, until recently, when I was introduced to LaMetric, a smart ticker being developed by UK/Ukraine Internet of Things (IoT) startup Smart Atoms.

Launching its Kickstarter crowdfunding campaign today, the LaMetric is aimed at both consumers and businesses. The idea is you may want to display alerts, notifications and other information from your online “life” via an elegant desktop or wall-mountable and glance-able display. Likewise, businesses that want an Internet-connected ticker, displaying various business information, either publicly for customers or in an office, are also a target market.

The device itself has a retro, 8-bit style desktop clock feel to it, thanks to its ‘blocky’ LED light powered display, which is part of its charm. The display can output one icon and seven numbers, and is scrollable.

But, best of all, the LaMetric is fully programmable (or “hackable”) via the accompanying app and comes with a bunch of off-the-shelf widgets, along with support for RSS and services like IFTTT, Smart Things, Wig Wag, and Ninja Blocks, so you can get it talking to other smart devices or web services. Seriously, this thing goes way beyond what I had in mind (try the simulator for yourself) and, for an IoT junkie like me, is just damn cool.

Examples of the kind of things you can track with the device include time, weather, subject and time left till your next meeting, number of new emails and their subject lines, CrossFit timings and fitness goals, number of to-dos for today, stock quotes, and social network notifications.

Or for businesses, this might include Facebook Likes, website visitors, conversions and other metrics, app store rankings, downloads, and revenue.

In addition to the display, the device has back and forward buttons so you can rotate widgets (though these can be set to automatically rotate), as well as an enter key for programmed responses, such as accepting a calendar invitation.

There’s also a loudspeaker for audio alerts. The LaMetric is powered by micro-USB and also comes as an optional and more expensive battery-powered version.

Early-bird backers on Kickstarter can pick up the LaMetric for as little as $89 (plus shipping) for the battery-less version, with countless other options and perks, increasing in price.

Tuesday, June 24. 2014

New open-source router firmware opens your Wi-Fi network to strangers

ComputedBy - The idea of sharing a WiFi access point is far from new (it is obviously as old as the WiFi access point itself), but previous solutions did not address many issues (including the legal ones) that this proposal finally seems to take seriously. This may really succeed in transforming a ridiculously endless utopia into something tangible!

Now, Internet providers (including mobile networks) may have a word to say about that. Just by changing their terms of service they can make this practice illegal... as business rhymes neither with effectiveness (yes, I know, that is strange!!...) nor with objectivity. It took some time, but geographical boundaries were raised over the Internet (an achievement as impressive as it is ridiculous when you think about it), so I'm pretty sure 'they' can find a workaround to make this idea impossible or get their hands on it.

Via ars technica

-----

We’ve often heard security folks explain their belief that one of the best ways to protect Web privacy and security on one's home turf is to lock down one's private Wi-Fi network with a strong password. But a coalition of advocacy organizations is calling such conventional wisdom into question.

Members of the “Open Wireless Movement,” including the Electronic Frontier Foundation (EFF), Free Press, Mozilla, and Fight for the Future, are advocating that we open up our private Wi-Fi networks (or at least a small slice of our available bandwidth) to strangers. They claim that such a random act of kindness can actually make us safer online while simultaneously facilitating a better allocation of finite broadband resources.

The OpenWireless.org website explains the group’s initiative. “We are aiming to build technologies that would make it easy for Internet subscribers to portion off their wireless networks for guests and the public while maintaining security, protecting privacy, and preserving quality of access," its mission statement reads. "And we are working to debunk myths (and confront truths) about open wireless while creating technologies and legal precedent to ensure it is safe, private, and legal to open your network.”

One such technology, which EFF plans to unveil at the Hackers on Planet Earth (HOPE X) conference next month, is open-sourced router firmware called Open Wireless Router. This firmware would enable individuals to share a portion of their Wi-Fi networks with anyone nearby, password-free, as Adi Kamdar, an EFF activist, told Ars on Friday.

Home network sharing tools are not new, and the EFF has been touting the benefits of open-sourcing Web connections for years, but Kamdar believes this new tool marks the second phase in the open wireless initiative. Unlike previous tools, he claims, EFF’s software will be free for all, will not require any sort of registration, and will actually make surfing the Web safer and more efficient.

Open Wi-Fi initiative members have argued that the act of providing wireless networks to others is a form of “basic politeness… like providing heat and electricity, or a hot cup of tea” to a neighbor, as security expert Bruce Schneier described it.

Walled off

Kamdar said that the new firmware utilizes smart technologies that prioritize the network owner's traffic over others', so good samaritans won't have to wait for Netflix to load because of strangers using their home networks. What's more, he said, "every connection is walled off from all other connections," so as to decrease the risk of unwanted snooping.
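To make the prioritization idea concrete, here is a minimal sketch of one way owner traffic could be favored over guest traffic: a strict-priority scheduler with two queues. This is an illustration only, not EFF's firmware. The article does not say how Open Wireless Router actually schedules traffic, and the names `PriorityScheduler`, `enqueue`, `dequeue`, and `drain` are hypothetical.

```python
from collections import deque


class PriorityScheduler:
    """Strict-priority scheduler (hypothetical): owner packets always leave first."""

    def __init__(self):
        self.owner = deque()  # packets from the network owner's devices
        self.guest = deque()  # packets from strangers on the shared slice

    def enqueue(self, packet, is_owner):
        # Each class keeps its own FIFO queue, so ordering within a class is preserved.
        (self.owner if is_owner else self.guest).append(packet)

    def dequeue(self):
        # Guest traffic is served only when no owner packet is waiting.
        if self.owner:
            return self.owner.popleft()
        if self.guest:
            return self.guest.popleft()
        return None  # nothing queued


def drain(scheduler):
    """Dequeue until empty and return packets in the order they would be sent."""
    sent = []
    while (packet := scheduler.dequeue()) is not None:
        sent.append(packet)
    return sent
```

With packets queued in the order guest, owner, guest, owner, `drain` returns both owner packets before either guest packet. Strict priority can starve guests completely while the owner is busy, so a real router would more plausibly use a weighted or rate-limited scheme.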

Additionally, EFF hopes that opening one’s Wi-Fi network will, in the long run, make it more difficult to tie an IP address to an individual.

“From a legal perspective, we have been trying to tackle this idea that law enforcement and certain bad plaintiffs have been pushing, that your IP address is tied to your identity. Your identity is not your IP address. You shouldn't be targeted by a copyright troll just because they know your IP address," said Kamdar.

This isn’t an abstract problem, either. Consider the case of the Californian who, after allowing a friend access to his home Wi-Fi network, found his home turned inside-out by police officers asking tough questions about child pornography. The man later learned that his houseguest had downloaded illicit materials, thus subjecting the homeowner to police interrogation. Should a critical mass begin to open private networks to strangers, the practice of correlating individuals with IP addresses would prove increasingly difficult and therefore might be reduced.

While the EFF firmware will initially be compatible with only one specific router, the organization would like to eventually make it compatible with other routers and even, perhaps, develop its own router. “We noticed that router software, in general, is pretty insecure and inefficient," Kamdar said. “There are a few major players in the router space. Even though various flaws have been exposed, there have not been many fixes.”

Friday, June 20. 2014

The Fourth Internet

Via TechCrunch

-----

Not very often do you read something online that gives you the chills. Today, I read two such things.

The first came from former Gizmodo, Buzzfeed and now Awl writer John Herrman, who wrote about the brutality of the mobile social (or for the sake of discussion ‘fourth’) Internet:

“Metafilter came from two or three internets ago, when a website’s core audience—people showing up there every day or every week, directly—was its main source of visitors. Google might bless a site with new visitors or take them away.

Either way, it was still possible for a site’s fundamentals to be strong, independent of extremely large outside referrers. What’s so disconcerting now is that the new sources of readership, the apps and sites people check every day and which lead people to new posts and stories, make up a majority of readership, and they’re utterly unpredictable (they’re also bigger, always bigger, every new internet is.)”

This broke my heart. In 2008, two Internets ago, Metafilter was my favorite site. It was where I went to find out what the next Star Wars Kid would be, or to find precious baby animal videos to show my cool boyfriend or even more intellectual fare. And now it’s as endangered as the sneezing pandas I first discovered there.

National Internet treasures like Metafilter (or TechCrunch for that matter) should never die. There should be some Internet Preservation Society filled with individuals like Herrman or Marc Andreessen or Mark Zuckerberg or Andy Baio whose sole purpose is to keep them alive.

But there isn’t. Herrman makes a very good point: useful places to find information, ones that aren’t some strange Pavlovian manipulation of the human desire to click or identify, just aren’t good business these days.

And Herrman should know, he’s worked in every new media outlet under the web, including the one that AP staffers are now so desperate to join that they make mistakes like this.

The fourth Internet is scary like Darwinism, brutal enough to remind me of high school. It’s a game of identity where you either make people feel like members of some exclusive club, like The Information does with a pricy subscription model or all niche tech sites do with their relatively high CPM, or you straight up play up to reader narcissism like Buzzfeed does, slicing and dicing user identity until you end up with “21 Problems Only People With Baby Faces Will Understand.”

Which brings me to the thing I read today which truly scared the shit out of me. Buzzfeed founder Jonah Peretti, though his LinkedIn is completely bereft of it in favor of MIT, was apparently an undergrad at UC Santa Cruz in the late 90s.

Right after graduation in 1996, he wrote a paper about identity and capitalism in post-modern times, which, tl;dr, postulated that neo-capitalism needs to get someone to identify with its ideals before it can sell its wares.

(Aside: If you think you are immune to capitalistic entreaties, because you read Adbusters and are a Culture Jammer, you’re not. Think of it this way: What is actually wrong with being chubby? But how hard do modern ads try to tell you that this — which is arguably the Western norm — is somehow not okay.)

The thesis Peretti put forth in his paper is basically the blueprint for Buzzfeed, which increasingly has made itself All About You. Whether you’re an Armenian immigrant, or an Iggy Azalea fan or a person born in the 2000s, 1990s or 80s, you will identify with Buzzfeed, because its business model (and the entire fourth Internet’s) depends on it.

As Dylan Matthews writes:

“The way this identification will happen is through images and video, through ‘visual culture.’ Presumably, in this late capitalist world, someone who creates a website that can use pictures and GIFs and videos to form hundreds if not thousands of new identities for people to latch onto will become very successful!”

More than anything else in the pantheon of modern writing or as the kids call it, content creation, Buzzfeed aims to be hyper-relatable, through visuals! It hopes it can define your exact identity, because only then will you share its URL on Facebook and Twitter and Tumblr as some sort of badge of your own uniqueness, immortality.

If the first Internet was “Getting information online,” the second was “Getting the information organized” and the third was “Getting everyone connected,” the fourth is definitely “Get mine.” Which is a trap.

Which Cog In The Digital Capitalist Machine Are You?
