Tuesday, January 22. 2013

3D printer to carve out world's first full-size building

Via c|net

-----

A rendering of the "Landscape House" by architect Janjaap Ruijssenaars.

(Credit: Universe Architecture)

Sure, we've heard of 3D-printed iPhone cases, dinosaur bones, and even a human fetus -- but something massive, like a building?

This is exactly what architect Janjaap Ruijssenaars has been working on. The Dutch native is planning to build what he calls a "Landscape House." The structure has two stories and is laid out in a figure-eight shape. The idea is that this form can borrow from nature and also fit seamlessly into the outside world.

Ruijssenaars describes it on his Web site as "one surface folded in an endless mobius band," where "floors transform into ceilings, inside into outside."

The production of the building will be done on a 3D printer called the D-Shape, which was invented by Enrico Dini. The D-Shape uses a stereolithography printing process with sand and a binding agent -- letting builders create structures that are supposedly as strong as concrete.

According to the Los Angeles Times, the printer will lay down thousands of layers of sand to create 20-by-30-foot sections. These blocks will then be used to assemble the building.

The "Landscape House" will be the first 3D-printed building and is estimated to cost between $5 million and $6 million, according to the BBC. Ruijssenaars plans to have it done sometime in 2014.

Wednesday, June 20. 2012

ArduSat: a real satellite mission that you can be a part of

Via DVICE

-----

We've been writing a lot recently about how the private space industry is poised to make space cheaper and more accessible. But in general, this is for outfits such as NASA, not people like you and me.

Today, a company called NanoSatisfi is launching a Kickstarter project to send an Arduino-powered satellite into space, and you can send an experiment along with it.

Whether it's private industry or NASA or the ESA or anyone else, sending stuff into space is expensive. It also tends to take approximately forever to go from having an idea to getting funding to designing the hardware to building it to actually launching something. NanoSatisfi, a tech startup based out of NASA's Ames Research Center here in Silicon Valley, is trying to change all of that (all of it) by designing a satellite made almost entirely of off-the-shelf (or slightly modified) hobby-grade hardware, launching it quickly, and then using Kickstarter to give you a way to get directly involved.

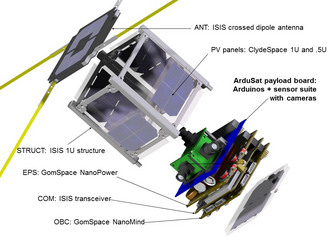

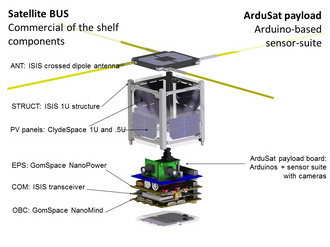

ArduSat is based on the CubeSat platform, a standardized satellite framework that measures about four inches on a side and weighs under three pounds. It's just about as small and cheap as you can get when it comes to launching something into orbit, and while it seems like a very small package, NanoSatisfi is going to cram as much science into that little cube as it possibly can.

Here's the plan: ArduSat, as its name implies, will run on Arduino boards, which are open-source microcontrollers that have become wildly popular with hobbyists. They're inexpensive, reliable, and packed with features. ArduSat will be packing between five and ten individual Arduino boards, but more on that later. Along with the boards, there will be sensors. Lots of sensors, probably 25 (or more), all compatible with the Arduinos and all very tiny and inexpensive. Here's a sampling:

Yeah, so that's a lot of potential for science, but the entire Arduino sensor suite is only going to cost about $1,500. The rest of the satellite (the power system, control system, communications system, solar panels, antennae, etc.) will run about $50,000, with the launch itself costing about $35,000. This is where you come in.

NanoSatisfi is looking for Kickstarter funding to pay for just the launch of the satellite itself: the funding goal is $35,000. Thanks to some outside investment, it's able to cover the rest of the cost itself. And in return for your help, NanoSatisfi is offering you a chance to use ArduSat for your own experiments in space, which has to be one of the coolest Kickstarter rewards ever.

- For a $150 pledge, you can reserve 15 imaging slots on ArduSat. You'll be able to go to a website, see the path that the satellite will be taking over the ground, and then select the targets you want to image. Those commands will be uploaded to ArduSat, and when it's in the right spot in its orbit, it'll point its camera down at Earth and take a picture, which will then be emailed right to you. From space.

- For $300, you can upload your own personal message to ArduSat, where it will be broadcast back to Earth from space for an entire day. ArduSat is in a polar orbit, so over the course of that day, it'll circle the Earth seven times and your message will be broadcast over the entire globe.

- For $500, you can take advantage of the whole point of ArduSat and run your very own experiment for an entire week on a selection of ArduSat's sensors. You know, in space. Just to be clear, it's not like you're just having your experiment run on data that's coming back to Earth from the satellite. Rather, your experiment is uploaded to the satellite itself, and it actually runs on one of the Arduino boards on ArduSat in real time, which is why there are so many identical boards packed in there.
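To put the numbers above side by side, here is a minimal back-of-the-envelope sketch. The dollar figures come from the article; the constant and function names are made up for illustration, and the "pledges needed" figure assumes every backer picks the same tier, which is obviously not how a real campaign goes.

```python
import math

# Figures quoted in the article, in US dollars.
SENSOR_SUITE = 1_500      # the entire Arduino sensor suite
REST_OF_SATELLITE = 50_000  # power, control, comms, solar panels, antennae, etc.
LAUNCH = 35_000           # launch cost, which is also the Kickstarter goal

# Reward tiers from the article: pledge amount -> reward.
TIERS = {
    150: "15 imaging slots",
    300: "one-day message broadcast",
    500: "one-week experiment",
}

def total_cost():
    """Sum of the three cost figures the article gives."""
    return SENSOR_SUITE + REST_OF_SATELLITE + LAUNCH

def pledges_needed(goal, pledge):
    """Smallest number of same-size pledges that reaches the goal."""
    return math.ceil(goal / pledge)
```

Calling `total_cost()` gives the overall bill, and `pledges_needed(LAUNCH, 500)` shows how many experiment-tier backers alone would cover the launch.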

Now, NanoSatisfi itself doesn't really expect to get involved with a lot of the actual experiments that the ArduSat does: rather, it's saying "here's this hardware platform we've got up in space, it's got all these sensors, go do cool stuff." And if the stuff that you can do with the existing sensor package isn't cool enough for you, backers of the project will be able to suggest new sensors and new configurations, even for the very first generation ArduSat.

To make sure you don't brick the satellite with buggy code, NanoSatisfi will have a duplicate satellite in a space-like environment here on Earth that it'll use to test out your experiment first. If everything checks out, your code gets uploaded to the satellite, runs in whatever timeslot you've picked, and then the results get sent back to you after your experiment is completed. Basically, you're renting time and hardware on this satellite up in space, and you can do (almost) whatever you want with that.
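The test-first flow described above can be sketched roughly like this. Everything here is hypothetical: the article doesn't say how NanoSatisfi actually validates or schedules code, so the class names, the `run_check` rule, and the slot labels are stand-ins that only show the order of the steps (ground test, then slot check, then upload).

```python
from dataclasses import dataclass, field

@dataclass
class Experiment:
    owner: str
    code: str   # source the backer wants to run on an onboard Arduino
    slot: str   # label of the timeslot the backer picked

@dataclass
class Satellite:
    name: str
    schedule: dict = field(default_factory=dict)  # slot label -> Experiment

    def run_check(self, experiment):
        # Stand-in for a real test run on the ground duplicate: reject
        # empty code or code containing an invented forbidden marker.
        return bool(experiment.code.strip()) and "FORBIDDEN" not in experiment.code

def submit(experiment, ground_twin, flight_unit):
    """Test on the ground duplicate first; upload only if the test
    passes and the requested slot is still free."""
    if not ground_twin.run_check(experiment):
        return "rejected: failed ground test"
    if experiment.slot in flight_unit.schedule:
        return "rejected: slot taken"
    flight_unit.schedule[experiment.slot] = experiment
    return "uploaded"
```

The point of the design is simply that nothing reaches the flight unit's schedule without passing through the ground twin first.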

ArduSat has a lifetime of anywhere from six months to two years. None of this payload stuff (neither the sensors nor the Arduinos) is specifically space-rated or radiation-hardened or anything like that, and some of it will be exposed directly to space. There will be some backups and redundancy, but partly, this will be a learning experience to see what works and what doesn't. The next generation of ArduSat will take all of this knowledge and put it to good use making a more capable and more reliable satellite.

This, really, is part of the appeal of ArduSat: with a fast, efficient, and (relatively) inexpensive crowd-sourced model, there's a huge potential for improvement and growth. For example, if this Kickstarter goes bananas and NanoSatisfi runs out of room for people to get involved on ArduSat, no problem, it can just build and launch another ArduSat along with the first, jammed full of (say) fifty more Arduinos so that fifty more experiments can be run at the same time. Or it can launch five more ArduSats. Or ten more. From the decision to start developing a new ArduSat to the actual launch of that ArduSat is a period of just a few months. If enough of them get up there at the same time, there's potential for networking multiple ArduSats together up in space and even creating a cheap and accessible global satellite array.

If this sounds like a lot of space junk in the making, don't worry: the ArduSats are set up in orbits that degrade after a year or two, at which point they'll harmlessly burn up in the atmosphere. And you can totally rent the time slot corresponding with this occurrence and measure exactly what happens to the poor little satellite as it fries itself to a crisp.

Longer term, there's also potential for making larger ArduSats with more complex and specialized instrumentation. Take ArduSat's camera: being a little tiny satellite, it only has a little tiny camera, meaning that you won't get much more detail than a few kilometers per pixel. In the future, though, NanoSatisfi hopes to boost that to 50 meters (or better) per pixel using a double or triple-sized satellite that it'll call OptiSat. OptiSat will just have a giant camera or two, and in addition to taking high resolution pictures of Earth, it'll also be able to be turned around to take pictures of other stuff out in space. It's not going to be the next Hubble, but remember, it'll be under your control.

NanoSatisfi's Peter Platzer holds a prototype ArduSat board, including the master controller, sensor suite, and camera. Photo: Evan Ackerman/DVICE

Assuming the Kickstarter campaign goes well, NanoSatisfi hopes to complete construction and integration of ArduSat by about the end of the year, and launch it during the first half of 2013. If you don't manage to get in on the Kickstarter, don't worry: NanoSatisfi hopes that there will be many more ArduSats with many more opportunities for people to participate in the idea. Having said that, you should totally get involved right now: there's no cheaper or better way to start doing a little bit of space exploration of your very own.

Check out the ArduSat Kickstarter video below, and head on through the link to reserve your spot on the satellite.

-----

Thursday, April 12. 2012

Paranoid Shelter - [Implementation Code]

By Computed·By

-----

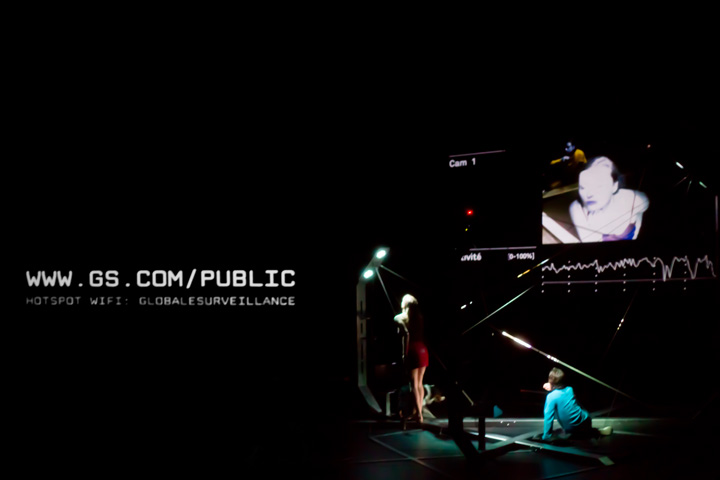

Paranoid Shelter is a recent installation / architectural device that fabric | ch finalized in late 2011 after a six-month residency at the EPFL-ECAL Lab in Renens (Switzerland). It was realized with the support of Pro Helvetia, the OFC, the City of Lausanne and the State of Vaud. It was initiated and first presented as sketches back in 2008 (!), in the context of a colloquium about surveillance at the Palais de Tokyo in Paris.

Created in the context of a theatrical collaboration with French writer and essayist Eric Sadin around his books about contemporary surveillance (Surveillance globale and Globale paranoïa, both published back in 2009), Paranoid Shelter revisits the old figure/myth of the architectural shelter, articulated through the use of surveillance technologies as building blocks.

Additional information on the overall project can be found through the following two links:

Paranoid Shelter - (Globale Surveillance)

(Paranoid Shelter) - Globale Surveillance

A compressed preview and short of the play by NOhista.

-----

In the first technical drawings and sketches of the Paranoid Shelter project, the entire system looked like a (big) mess of wires, sensors and video cameras, all concentrated in a pretty tiny space that humans would have difficulty moving around in. The entire space is consciously organised around tracking methods/systems, and is delimited by 3 [augmented] posts which host a set of sensors, video cameras and microphones. It includes networked [power over ethernet] video cameras, microphones and a set of wireless ambient sensors (able to measure temperature, O2 and CO2 gas concentration, current atmospheric pressure, light, etc.).

Based on a real-time analysis of the main sensor hardware, the system is able to control DMX lights and a set of two displays (one LCD screen and one projector), and to produce sound through a dynamically generated text-to-speech process.

All programs were developed using openFrameworks, enhanced by a set of dedicated in-house C++ libraries that capture the networked cameras' video streams, control any DMX-compatible piece of hardware and collect data from the wireless Libelium sensors. The sound analysis programs, the LCD display program and the main program are all connected to each other via a local network. The main program is in charge of collecting the other programs' data, performing the global analysis of the system's activity, recording the system's raw information to a database and controlling the system's [re]actions (lights, display).

The overall system can act in an [autonomous] way, controlling the entire installation's behavior, or it can be remotely controlled when used on stage in the context of a theater play.

Collecting all the sensors' data streams is one of the basic tasks. Cameras are used to track movements, microphones measure sound activity and sensors collect a set of ambient parameters. Even if data capture consists of some basic network-based tasks, it quickly becomes more complex when every collection has to occur simultaneously, in real time, [without,with] a [limited,acceptable] delay. The major raw data analyses have to occur directly after data acquisition in order to minimize the time-shift in the system's awareness of the space. This first level of data analysis mainly brings out frequency information, quantity of activity and 2D location tracking (from the point of view of each camera).

Every single piece of raw information is systematically recorded in a dedicated database: this reduces the system's memory footprint (by keeping it almost constant) without losing any activity information. From time to time, when required, the system can access this recorded information in its post-analysis process, mainly to add a time-scale dimension to the global activity that occurred in the monitored space. Time-isolated information can be interpreted in a rough and basic way, while composing the same information, or a set of information, over time may bring additional meaning by verifying its consistency over time (of course, this can go either way, confirming or refuting an activity deduced at the first level).

Another level of analysis can be reached by taking into account the spatial distribution of sensors in the overall installation. The system is then able to compute 3D information, gaining an awareness of activities within the space it is monitoring. This generates a second, spatialised level of data analysis that increases the system's global understanding of the captured data.
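As a rough illustration of the "record everything, check consistency over time" idea, here is a minimal Python sketch. It is not the installation's code (which was written in C++ with openFrameworks); the table layout, the function names and the threshold rule are all assumptions made for the example. Raw samples go straight into a database instead of being kept in memory, and a first-level detection is only confirmed if enough samples in a recent time window agree with it.

```python
import sqlite3

def open_store():
    """In-memory SQLite database standing in for the dedicated database."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE samples (t REAL, sensor TEXT, value REAL)")
    return db

def record(db, t, sensor, value):
    """Store one raw sample; nothing is kept in program memory."""
    db.execute("INSERT INTO samples VALUES (?, ?, ?)", (t, sensor, value))

def confirmed_activity(db, sensor, now, window, threshold, min_hits):
    """True if at least min_hits samples from the last `window` seconds
    exceed the threshold, i.e. the activity is consistent over time."""
    (hits,) = db.execute(
        "SELECT COUNT(*) FROM samples "
        "WHERE sensor = ? AND t > ? AND t <= ? AND value > ?",
        (sensor, now - window, now, threshold),
    ).fetchone()
    return hits >= min_hits
```

With this rule a single loud spike on a microphone is refuted, while several loud samples in a row confirm that something is going on.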

Recorded activities are made available to the [audience,visitors] through a wifi access point. Networked cameras can be accessed in real time, letting humans see some of the system's [inputs]. Network activity is therefore also monitored as another sign of human presence, so the system can [detect] activity elsewhere than in its dedicated space.

However numerous the collected data are, the system faces a real problem when it comes to interpreting them without the benefit of a human brain. Events that are quite obvious to humans do not mean anything to computers and software. In order to avoid the use of some artificial neural network simulation (which may still be a good option to explore), I decided to compute a limited set of parameters, all based on previously analysed data and computed late, only when the system may decide to react to perceived activities. These parameters define a kind of global [mood] of the system, based on which it will [decide] either to be aggressive (from a human point of view), by making the global tracking activity [noticeable] to the humans moving through the installation's space, by focusing tracking sensors on a given area or by trying to enhance the analysis of some sensor's information, or to settle into a kind of silent mode.

Moreover, the evolution of these parameters is also studied over time, making the [mood] evolve in a human way, increasing and decreasing [analogically]. The system's [mood] may be wrong or [unjustified,weird] from a human point of view, but that's where [multi-dimensional] software becomes interesting. Beyond a certain complexity, with computation layers added on top of each other, having written every single line of code does not allow the programmer to predict precisely what the system's next [re]action will be.
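One simple way to get a value that rises and falls gradually rather than flipping on and off is exponential smoothing. The sketch below is only a guess at the general shape of such a [mood] parameter, not the installation's actual computation: the `Mood` class, its `rate` and the `aggressive_above` threshold are invented for the example.

```python
class Mood:
    """A single smoothed parameter between 0.0 and 1.0."""

    def __init__(self, rate=0.2, aggressive_above=0.6, level=0.0):
        self.rate = rate                        # how fast the mood follows activity
        self.aggressive_above = aggressive_above  # hypothetical switching threshold
        self.level = level

    def update(self, activity):
        """Move the mood a fraction of the way toward the current
        activity reading (clamped to the 0.0..1.0 range)."""
        activity = min(1.0, max(0.0, activity))
        self.level += self.rate * (activity - self.level)
        return self.level

    def stance(self):
        """Pick between the two behaviours described in the text."""
        return "aggressive" if self.level > self.aggressive_above else "silent"
```

With these made-up settings, sustained activity pushes the mood over the threshold only after several updates, and it drifts back down just as gradually once the space goes quiet.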

Here we reach the limitation of monitoring systems, which is obviously [interpretation,comprehension]. As long as an automatic system cannot correctly [understand] data, humans will need to be in the loop, making all these monitoring systems quite useless [as expert systems], except for producing an enormous quantity of data that still needs to be post-analysed by a human brain. As the system produces a large set of heterogeneous data, a set of rules may suggest some sort of data correlation to the system. These rules should not be too [tight,precise], in order to avoid producing obvious interpretations, while keeping them slightly [out of focus] may allow [smart,astonishing] conclusions to be produced. So there is room here for additional implementations of the data analysis processes that can still completely change the way the entire installation [can,may] behave.

Monday, August 01. 2011

World's first 'printed' plane snaps together and flies

Via CNET

By Eric Mach

-----

English engineers have produced what is believed to be the world's first printed plane. I'm not talking a nice artsy lithograph of the Wright Bros.' first flight. This is a complete, flyable aircraft spit out of a 3D printer.

The SULSA began life in something like an inkjet and wound up in the air. (Credit: University of Southampton)

The SULSA (Southampton University Laser Sintered Aircraft) is an unmanned air vehicle that emerged, layer by layer, from a nylon laser sintering machine that can fabricate plastic or metal objects. In the case of the SULSA, the wings, access hatches, and the rest of the structure of the plane were all printed.

As if that weren't awesome enough, the entire thing snaps together in minutes, no tools or fasteners required. The electric plane has a wingspan of just under 7 feet and a top speed of 100 mph.

Jim Scanlon, one of the project leads at the University of Southampton, explains in a statement that the technology allows products to go from conception to reality much more quickly and cheaply.

"The flexibility of the laser-sintering process allows the design team to revisit historical techniques and ideas that would have been prohibitively expensive using conventional manufacturing," Scanlon says. "One of these ideas involves the use of a Geodetic structure... This form of structure is very stiff and lightweight, but very complex. If it was manufactured conventionally it would require a large number of individually tailored parts that would have to be bonded or fastened at great expense."

So apparently when it comes to 3D printing, the sky is no longer the limit. Let's just make sure someone double-checks the toner levels before we start printing the next international space station.

-----

Personal comments:

Industrial production tools back to people?

Fab@Home project
