Monday, February 26. 2018

WebAssembly

-----

WebAssembly (wasm, WA) is a web standard that defines a binary format and a corresponding assembly-like text format for executable code in Web pages. It is meant to enable executing code nearly as fast as running native machine code. It was envisioned to complement JavaScript to speed up performance-critical parts of web applications and later on to enable web development in other languages than JavaScript.[1][2][3] It is developed at the World Wide Web Consortium (W3C) with engineers from Mozilla, Microsoft, Google and Apple.[4]

It is executed in a sandbox in the web browser after a formal verification step. Programs can be compiled from high-level languages into wasm modules and loaded as libraries from within JavaScript applets.
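To make the "binary format" a little more concrete, here is a minimal Python sketch that checks the first eight bytes of a wasm binary: a four-byte magic number (a zero byte followed by "asm") and a four-byte little-endian version field, currently 1. The function name `parse_wasm_header` is made up for this illustration. It is only a peek at the header, not the validation step a browser performs.

```python
import struct

WASM_MAGIC = b"\x00asm"  # the four bytes that open every wasm binary
WASM_VERSION = 1         # version field of the current binary format


def parse_wasm_header(blob: bytes) -> int:
    """Return the version number of a wasm binary, or raise ValueError."""
    if len(blob) < 8:
        raise ValueError("too short to be a wasm module")
    if blob[:4] != WASM_MAGIC:
        raise ValueError("missing wasm magic number")
    # The version is stored as a little-endian 32-bit unsigned integer.
    (version,) = struct.unpack("<I", blob[4:8])
    return version


# The smallest possible module: magic number plus version, no sections.
empty_module = WASM_MAGIC + struct.pack("<I", WASM_VERSION)
print(parse_wasm_header(empty_module))
```

Everything after those eight bytes is a sequence of sections (types, functions, code and so on), which this sketch does not attempt to read.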

Wednesday, February 21. 2018

Inching closer to a DNA-based file system

Via ars technica

-----

When it comes to data storage, efforts to get faster access grab most of the attention. But long-term archiving of data is equally important, and it generally requires a completely different set of properties. To get a sense of why getting this right is important, just take the recently revived NASA satellite as an example—extracting anything from the satellite's data will rely on the fact that a separate NASA mission had an antiquated tape drive that could read the satellite's communication software.

One of the more unexpected technologies to receive some attention as an archival storage medium is DNA. While it is incredibly slow to store and retrieve data from DNA, we know that information can be pulled out of DNA that's tens of thousands of years old. And there have been some impressive demonstrations of the approach, like an operating system being stored in DNA at a density of 215 Petabytes a gram.

But that method treated DNA as a glob of unorganized bits—you had to sequence all of it in order to get at any of the data. Now, a team of researchers has figured out how to add something like a filesystem to DNA storage, allowing random access to specific data within a large collection of DNA. While doing this, the team also tested a recently developed method for sequencing DNA that can be done using a compact USB device.

Randomization

DNA holds data as a combination of four bases, so storing data in it requires a way of translating bits into this system. Once a bit of data is translated, it's chopped up into smaller pieces (usually 100 to 150 bases long) and inserted in between ends that make it easier to copy and sequence. These ends also contain some information about where the data resides in the overall storage scheme (for example, "these are bytes 197 to 300").

To restore the data, all the DNA has to be sequenced, the locational information read, and the DNA sequence decoded. In fact, the DNA needs to be sequenced several times over, since there are errors and a degree of randomness involved in how often any fragment will end up being sequenced.

Adding random access to data would cut down significantly on the amount of sequencing that would need to be done. Rather than sequencing an entire archive just to get one file out of it, the sequencing could be far more targeted. And, as it turns out, this is pretty simple to do.

Note above where the data is packed between short flanking DNA sequences, which makes it easier to copy and sequence. There are lots of potential sequences that can fit the bill in terms of making DNA easier to work with. The researchers identified thousands of them. Each of these can be used to tag the intervening data as belonging to a specific file, allowing it to be amplified and sequenced separately, even if it's present in a large mixture of DNA from different files. If you want to store more files, you just have to keep different pools of DNA, each containing several thousand files (or multiple terabytes). Keeping these pools physically separated requires about a square millimeter of space.

(It's possible to have many more of these DNA sequencing tags, but the authors selected only those that should produce very consistent amplification results.)
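The tagging idea can be sketched in a few lines of Python. This is a toy model, not the researchers' pipeline: strands are plain tuples rather than base sequences, the tag strings and file names in `FILE_TAGS` are invented, and "amplifying" a file is reduced to filtering on its tag. It shows how a per-file tag plus a byte offset is enough to pull one file out of a shuffled mixture.

```python
import random

# Hypothetical flanking tags; real ones would be vetted primer sequences.
FILE_TAGS = {"photo.jpg": "ACGTTGCA", "notes.txt": "TTGACCGA"}
CHUNK = 4  # payload bytes per strand (tiny, for illustration only)


def store(pool, name, data):
    """Split data into chunks and add one tagged, addressed strand per chunk."""
    tag = FILE_TAGS[name]
    for offset in range(0, len(data), CHUNK):
        pool.append((tag, offset, data[offset:offset + CHUNK]))


def retrieve(pool, name):
    """Keep only strands carrying this file's tag, then reassemble by offset."""
    tag = FILE_TAGS[name]
    chunks = sorted((off, payload) for t, off, payload in pool if t == tag)
    return b"".join(payload for _, payload in chunks)


pool = []
store(pool, "photo.jpg", b"not really a jpeg, just bytes")
store(pool, "notes.txt", b"random access in a test tube")
random.Random(0).shuffle(pool)  # strands in a tube have no particular order

print(retrieve(pool, "notes.txt"))
```

The point of the design is that `retrieve` never has to look at the payload of strands belonging to other files, which is the software analogue of not sequencing them.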

The team also came up with a clever solution to one of the problems of DNA storage. Lots of digital files will have long stretches of the same bits (think of a blue sky or a few seconds of silence in a music track). Unfortunately, DNA sequencing tends to choke when confronted with a long run of identical bases, either producing errors or simply stopping. To avoid this, the researchers created a random sequence and used it to do a bit-flipping operation (XOR) with the sequence being encoded. This would break up long runs of identical bases and poses a minimal risk of creating new ones.
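Here is a small Python sketch of that XOR trick. Two things are assumptions made for illustration: the mapping of two bits to one base (00=A, 01=C, 10=G, 11=T), and the use of Python's seeded `random` module as the source of the random sequence. The article does not say how the researchers generated theirs.

```python
import random

BASES = "ACGT"  # assumed mapping: 2 bits per base (00=A, 01=C, 10=G, 11=T)


def keystream(length, seed):
    """Reproducible pseudo-random bytes; the seed must be kept for decoding."""
    rng = random.Random(seed)
    return bytes(rng.randrange(256) for _ in range(length))


def xor_bytes(data, key):
    """XOR two equally long byte strings; applying it twice undoes it."""
    return bytes(d ^ k for d, k in zip(data, key))


def to_bases(data):
    """Translate each byte into four bases, most significant bits first."""
    out = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            out.append(BASES[(byte >> shift) & 0b11])
    return "".join(out)


def longest_run(seq):
    """Length of the longest stretch of identical characters."""
    best = run = 1
    for prev, cur in zip(seq, seq[1:]):
        run = run + 1 if cur == prev else 1
        best = max(best, run)
    return best


silence = bytes(64)  # 64 zero bytes, like a moment of digital silence
key = keystream(len(silence), seed=2018)
scrambled = xor_bytes(silence, key)

print(longest_run(to_bases(silence)))    # one unbroken run of 256 A's
print(longest_run(to_bases(scrambled)))  # far shorter after the XOR
assert xor_bytes(scrambled, key) == silence  # XOR with the same key restores it
```

Because XOR is its own inverse, decoding only needs the same random sequence again, which here means remembering the seed.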

Long reads

The other bit of news in this publication is the use of a relatively new DNA sequencing technology that involves stuffing strands of DNA through a tiny pore and reading each base as it passes through. The technology for this is compact enough that it's available in a palm-sized USB device. The technology had been pretty error-prone, but it has improved enough that it was recently used to sequence an entire human genome.

While the nanopore technique has issues with errors, it has the advantage of working with much longer stretches of DNA. So the authors rearranged their stored data so it sits on fewer, longer DNA molecules and gave the hardware a test.

It had an astonishingly high error rate—about 12 percent by their measure. This suggests that the system needs to be adapted to work with the DNA samples that the authors prepared. Still, the errors were mostly random, and the team was able to identify and correct them by sequencing enough molecules so that, on average, each DNA sequence was read 36 times.
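Why reading each sequence many times helps can be shown with a simple majority vote. The Python sketch below is a simplification under stated assumptions: it models only substitution errors (real nanopore reads also have insertions and deletions), it treats errors as independent, and it is not the error-correction scheme the authors used. The 12 percent rate and the 36 reads come from the figures above.

```python
import random
from collections import Counter

BASES = "ACGT"


def noisy_read(strand, error_rate, rng):
    """Copy a strand, swapping each base for a different one at error_rate."""
    out = []
    for base in strand:
        if rng.random() < error_rate:
            out.append(rng.choice([b for b in BASES if b != base]))
        else:
            out.append(base)
    return "".join(out)


def consensus(reads):
    """Majority vote at each position across equally long reads."""
    return "".join(Counter(col).most_common(1)[0][0] for col in zip(*reads))


rng = random.Random(36)
original = "".join(rng.choice(BASES) for _ in range(200))
reads = [noisy_read(original, 0.12, rng) for _ in range(36)]

recovered = consensus(reads)
print(recovered == original)
```

With random errors, the correct base at each position shows up in roughly 88 percent of the reads while each wrong base shows up in only a few, so the vote is rarely close.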

So, with something resembling a filesystem and a compact reader, are we moving close to the point where DNA-based storage is practical? Not exactly. The authors point out the issue of capacity. Our ability to synthesize DNA has grown at an astonishing pace, but it started from almost nothing a few decades ago, so it's still relatively small. Assuming a DNA-based drive would be able to read a few KB per second, then the researchers calculate that it would only take about two weeks to read every bit of DNA that we could synthesize annually. Put differently, our ability to synthesize DNA has a long way to go before we can practically store much data.
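A quick back-of-the-envelope check of that claim, as a Python sketch. The article only says "a few KB per second", so the three rates tried here are assumptions, and 1 KB is taken as 1,000 bytes.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def bytes_read(rate_kb_per_s, days):
    """Total bytes read at a steady rate, taking 1 KB as 1,000 bytes."""
    return rate_kb_per_s * 1000 * SECONDS_PER_DAY * days


for rate in (1, 3, 5):  # assumed readings of "a few KB per second"
    total_gb = bytes_read(rate, 14) / 1e9
    print(f"{rate} KB/s for 14 days is about {total_gb:.1f} GB")
```

Under these assumptions, two weeks of reading covers only a few gigabytes, which gives a rough sense of how little DNA storage a year of synthesis would amount to.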

Nature Biotechnology, 2018. DOI: 10.1038/nbt.4079

Monday, April 24. 2017

Replika is a personal device that learns your personality, talks to people FOR you

Via The Real Daily (C.L. Brenton)

-----

We’ve been warned…

Remember the movie Surrogates, where everyone lives at home plugged in to virtual reality screens and robot versions of them run around and do their bidding? Or Her, where Siri’s personality is so attractive and real that it’s possible to fall in love with an AI?

Replika may be the seedling of such a world, and there’s no denying the future implications of that kind of technology.

Replika is a personal chatbot that you can raise through SMS. By chatting with it, you teach it your personality and gain in-app points. Through the app it can chat with your friends and learn enough about you so that maybe one day it will take over some of your responsibilities like social media, or checking in with your mom. It’s in beta right now, so we haven’t gotten our hands on one, and there is little information available about it, but color me fascinated and freaked out based on what we already know.

AI is just that – artificial

I have never been a fan of technological advances that replace human interaction. Social media, in general, seems to replace and enhance traditional communication, which is fine. But robots that hug you when you’re grieving a lost child, or AI personalities that text your girlfriend sweet nothings for you don’t seem like human enhancement, they feel like human loss.

You know that feeling when you get a text message from someone you care about, who cares about you? It’s that little dopamine rush, that little charge that gets your blood pumping, your heart racing. Just one ding, and your day could change. What if a robot’s text could make you feel that way?

It’s a real boy?

Replika began when one of its founders lost her roommate, Roman, to a car accident. Eugenia Kyuda created Luka, a restaurant recommending chatbot, and she realized that with all of her old text messages from Roman, she could create an AI that texts and chats just like him. When she offered the use of his chatbot to his friends and family, she found a new business.

People were communicating with their deceased friend, sibling, and child, like he was still there. They wanted to tell him things, to talk about changes in their lives, to tell him they missed him, to hear what he had to say back.

This is human loss and grieving tempered by an AI version of the dead, and the implications are severe. Your personality could be preserved in servers after you die. Your loved ones could feel like they’re talking to you when you’re six feet under. Doesn’t that make anyone else feel uncomfortable?

Bringing an X-file to life

If you think about your closest loved one dying, talking to them via chatbot may seem like a dream come true. But in the long run, continuing a relationship with a dead person via their AI avatar is dangerous. Maybe it will help you grieve in the short term, but what are we replacing? And is it worth it?

Imagine texting a friend, a parent, a sibling, a spouse instead of Replika. Wouldn’t your interaction with them be more valuable than your conversation with this personality? Because you’re building on a lifetime of friendship, one that has value after the conversation is over. One that can exist in real tangible life. One that can actually help you grieve when the AI replacement just isn’t enough. One that can give you a hug.

“One day it will do things for you,” Kyuda said in an interview with Bloomberg, “including keeping you alive. You talk to it, and it becomes you.”

Replacing you is so easy

This kind of rhetoric from Replika’s founder has to make you wonder if this app was intended as a sort of technological fountain of youth. You never have to “die” as long as your personality sticks around to comfort your loved ones after you pass. I could even see myself trying to cope with a terminal diagnosis by creating my own Replika to assist family members after I’m gone.

But it’s wrong isn’t it? Isn’t it? Psychologically and socially wrong?

It all starts with a chatbot. That replicates your personality. It begins with a woman who was just trying to grieve. This is a taste of the future, and a scary one too. One of clones, downloaded personalities, and creating a life that sticks around after you’re gone.

Monday, April 10. 2017

A second life for open-world games as self-driving car training software

Via Tech Crunch

-----

A lot of top-tier video games enjoy lengthy long-tail lives with remasters and re-releases on different platforms, but the effort put into some games could pay dividends in a whole new way: companies training autonomous cars, delivery drones and other robots are looking to rich, detailed virtual worlds to provide simulated training environments that mimic the real world.

Just as companies including Boom can now build supersonic jets with a small team and limited funds, thanks to advances made possible in simulation, startups like NIO (formerly NextEV) can now keep pace with larger tech concerns with ample funding in developing self-driving software, using simulations of real-world environments including those derived from games like Grand Theft Auto V. Bloomberg reports that the approach is increasingly popular among companies that are looking to supplement real-world driving experience, including Waymo and Toyota’s Research Institute.

There are some drawbacks, of course: Anyone will tell you that regardless of the industry, simulation can do a lot, but it can’t yet fully replace real-world testing, which always diverges in some ways from what you’d find in even the most advanced simulations. Also, miles driven in simulation don’t count toward the autonomous-miles totals that most regulatory bodies actually care about when determining the roadworthiness of self-driving systems.

The more surprising takeaway here is that GTA V in this instance isn’t a second-rate alternative to simulation software created for the purpose of testing autonomous driving software – it proves an incredibly advanced testing platform, because of the care taken in its open-world game design. That means there’s no reason these two market uses can’t be more aligned in future: Better, more comprehensive open-world game design means better play experiences for users looking for that truly immersive quality, and better simulation results for researchers who then leverage the same platforms as a supplement to real-world testing.

Monday, March 20. 2017

How Uber Deceives the Authorities Worldwide

Via New York Times (Mike Isaac)

-----

SAN FRANCISCO — Uber has for years engaged in a worldwide program to deceive the authorities in markets where its low-cost ride-hailing service was resisted by law enforcement or, in some instances, had been banned.

The program, involving a tool called Greyball, uses data collected from the Uber app and other techniques to identify and circumvent officials who were trying to clamp down on the ride-hailing service. Uber used these methods to evade the authorities in cities like Boston, Paris and Las Vegas, and in countries like Australia, China and South Korea.

Greyball was part of a program called VTOS, short for “violation of terms of service,” which Uber created to root out people it thought were using or targeting its service improperly. The program, including Greyball, began as early as 2014 and remains in use, predominantly outside the United States. Greyball was approved by Uber’s legal team.

Greyball and the VTOS program were described to The New York Times by four current and former Uber employees, who also provided documents. The four spoke on the condition of anonymity because the tools and their use are confidential and because of fear of retaliation by Uber.

Uber’s use of Greyball was recorded on video in late 2014, when Erich England, a code enforcement inspector in Portland, Ore., tried to hail an Uber car downtown in a sting operation against the company.

At the time, Uber had just started its ride-hailing service in Portland without seeking permission from the city, which later declared the service illegal. To build a case against the company, officers like Mr. England posed as riders, opening the Uber app to hail a car and watching as miniature vehicles on the screen made their way toward the potential fares.

But unknown to Mr. England and other authorities, some of the digital cars they saw in the app did not represent actual vehicles. And the Uber drivers they were able to hail also quickly canceled. That was because Uber had tagged Mr. England and his colleagues — essentially Greyballing them as city officials — based on data collected from the app and in other ways. The company then served up a fake version of the app, populated with ghost cars, to evade capture.

At a time when Uber is already under scrutiny for its boundary-pushing workplace culture, its use of the Greyball tool underscores the lengths to which the company will go to dominate its market. Uber has long flouted laws and regulations to gain an edge against entrenched transportation providers, a modus operandi that has helped propel it into more than 70 countries and to a valuation close to $70 billion.

Yet using its app to identify and sidestep the authorities where regulators said Uber was breaking the law goes further toward skirting ethical lines — and, potentially, legal ones. Some at Uber who knew of the VTOS program and how the Greyball tool was being used were troubled by it.

In a statement, Uber said, “This program denies ride requests to users who are violating our terms of service — whether that’s people aiming to physically harm drivers, competitors looking to disrupt our operations, or opponents who collude with officials on secret ‘stings’ meant to entrap drivers.”

The mayor of Portland, Ted Wheeler, said in a statement, “I am very concerned that Uber may have purposefully worked to thwart the city’s job to protect the public.”

Uber, which lets people hail rides using a smartphone app, operates multiple types of services, including a luxury Black Car offering in which drivers are commercially licensed. But an Uber service that many regulators have had problems with is the lower-cost version, known in the United States as UberX.

UberX essentially lets people who have passed a background check and vehicle inspection become Uber drivers quickly. In the past, many cities have banned the service and declared it illegal.

That is because the ability to summon a noncommercial driver — which is how UberX drivers using private vehicles are typically categorized — was often unregulated. In barreling into new markets, Uber capitalized on this lack of regulation to quickly enlist UberX drivers and put them to work before local regulators could stop them.

After the authorities caught on to what was happening, Uber and local officials often clashed. Uber has encountered legal problems over UberX in cities including Austin, Tex., Philadelphia and Tampa, Fla., as well as internationally. Eventually, agreements were reached under which regulators developed a legal framework for the low-cost service.

That approach has been costly. Law enforcement officials in some cities have impounded vehicles or issued tickets to UberX drivers, with Uber generally picking up those costs on the drivers’ behalf. The company has estimated thousands of dollars in lost revenue for every vehicle impounded and ticket received.

This is where the VTOS program and the use of the Greyball tool came in. When Uber moved into a new city, it appointed a general manager to lead the charge. This person, using various technologies and techniques, would try to spot enforcement officers.

One technique involved drawing a digital perimeter, or “geofence,” around the government offices on a digital map of a city that Uber was monitoring. The company watched which people were frequently opening and closing the app — a process known internally as eyeballing — near such locations as evidence that the users might be associated with city agencies.
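For readers unfamiliar with the term, a geofence is nothing more than a distance test against a point on the map. The Python sketch below shows the generic calculation using the haversine formula. The coordinates and the 500-metre radius are arbitrary, and nothing here reflects Uber's actual code.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def inside_geofence(point, center, radius_m):
    """True if point lies within radius_m of the fence's center."""
    return haversine_m(*point, *center) <= radius_m


center = (45.0, -122.0)  # arbitrary example coordinates
print(inside_geofence((45.001, -122.0), center, 500))  # roughly 111 m away
print(inside_geofence((45.1, -122.0), center, 500))    # roughly 11 km away
```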

Other techniques included looking at a user’s credit card information and determining whether the card was tied directly to an institution like a police credit union.

Enforcement officials involved in large-scale sting operations meant to catch Uber drivers would sometimes buy dozens of cellphones to create different accounts. To circumvent that tactic, Uber employees would go to local electronics stores to look up device numbers of the cheapest mobile phones for sale, which were often the ones bought by city officials working with budgets that were not large.

In all, there were at least a dozen or so signifiers in the VTOS program that Uber employees could use to assess whether users were regular new riders or probably city officials.

If such clues did not confirm a user’s identity, Uber employees would search social media profiles and other information available online. If users were identified as being linked to law enforcement, Uber Greyballed them by tagging them with a small piece of code that read “Greyball” followed by a string of numbers.

When someone tagged this way called a car, Uber could scramble a set of ghost cars in a fake version of the app for that person to see, or show that no cars were available. Occasionally, if a driver accidentally picked up someone tagged as an officer, Uber called the driver with instructions to end the ride.

Uber employees said the practices and tools were born in part out of safety measures meant to protect drivers in some countries. In France, India and Kenya, for instance, taxi companies and workers targeted and attacked new Uber drivers.

“They’re beating the cars with metal bats,” the singer Courtney Love posted on Twitter from an Uber car in Paris at a time of clashes between the company and taxi drivers in 2015. Ms. Love said that protesters had ambushed her Uber ride and had held her driver hostage. “This is France? I’m safer in Baghdad.”

Uber has said it was also at risk from tactics used by taxi and limousine companies in some markets. In Tampa, for instance, Uber cited collusion between the local transportation authority and taxi companies in fighting ride-hailing services.

In those areas, Greyballing started as a way to scramble the locations of UberX drivers to prevent competitors from finding them. Uber said that was still the tool’s primary use.

But as Uber moved into new markets, its engineers saw that the same methods could be used to evade law enforcement. Once the Greyball tool was put in place and tested, Uber engineers created a playbook with a list of tactics and distributed it to general managers in more than a dozen countries on five continents.

At least 50 people inside Uber knew about Greyball, and some had qualms about whether it was ethical or legal. Greyball was approved by Uber’s legal team, led by Salle Yoo, the company’s general counsel. Ryan Graves, an early hire who became senior vice president of global operations and a board member, was also aware of the program.

Ms. Yoo and Mr. Graves did not respond to requests for comment.

Outside legal specialists said they were uncertain about the legality of the program. Greyball could be considered a violation of the federal Computer Fraud and Abuse Act, or possibly intentional obstruction of justice, depending on local laws and jurisdictions, said Peter Henning, a law professor at Wayne State University who also writes for The New York Times.

“With any type of systematic thwarting of the law, you’re flirting with disaster,” Professor Henning said. “We all take our foot off the gas when we see the police car at the intersection up ahead, and there’s nothing wrong with that. But this goes far beyond avoiding a speed trap.”

On Friday, Marietje Schaake, a member of the European Parliament for the Dutch Democratic Party in the Netherlands, wrote that she had written to the European Commission asking, among other things, if it planned to investigate the legality of Greyball.

To date, Greyballing has been effective. In Portland on that day in late 2014, Mr. England, the enforcement officer, did not catch an Uber, according to local reports.

And two weeks after Uber began dispatching drivers in Portland, the company reached an agreement with local officials that said that after a three-month suspension, UberX would eventually be legally available in the city.

Monday, January 30. 2017

Hungry penguins help keep car code safe

Via technewsbase

-----

Hungry penguins have inspired a novel way of making sure computer code in smart cars does not crash.

Tools based on the way the birds co-operatively hunt for fish are being developed to test different ways of organising in-car software.

The tools look for safe ways to organise code in the same way that penguins seek food sources in the open ocean.

Experts said such testing systems would be vital as cars get more connected.

Engineers have often turned to nature for good solutions to tricky problems, said Prof Yiannis Papadopoulos, a computer scientist at the University of Hull who developed the penguin-inspired testing system.

The way ants pass messages among nest-mates has helped telecoms firms keep telephone networks running, and many robots get around using methods of locomotion based on the ways animals move.

‘Big society’

Penguins were another candidate, said Prof Papadopoulos, because millions of years of evolution have helped them develop very efficient hunting strategies.

This was useful behaviour to copy, he said, because it showed that penguins had solved a tricky optimisation problem – how to ensure as many penguins as possible get enough to eat.

“Penguins are social birds and we know they live in colonies that are often very large and can include hundreds of thousands of birds. This raises the question of how can they sustain this kind of big society given that together they need a vast amount of food.

“There must be something special about their hunting strategy,” he said, adding that an inefficient strategy would mean many birds starved.

Prof Papadopoulos said many problems in software engineering could be framed as a search among all hypothetical solutions for the one that produces the best results. Evolution, through penguins and many other creatures, has already searched through and discarded a lot of bad solutions.

Studies of hunting penguins have hinted at how they organised themselves.

“They forage in groups and have been observed to synchronise their dives to get fish,” said Prof Papadopoulos. “They also have the ability to communicate using vocalisations and possibly convey information about food resources.”

The communal, co-ordinated action helps the penguins get the most out of a hunting expedition. Groups of birds are regularly reconfigured to match the shoals of fish and squid they find. It helps the colony as a whole optimise the amount of energy they have to expend to catch food.

“This solution has generic elements which can be abstracted and be used to solve other problems,” he said, “such as determining the integrity of software components needed to reach the high safety requirements of a modern car.”

Integrity in this sense means ensuring the software does what is intended, handles data well, and does not introduce errors or crash.

By mimicking penguin behaviour in a testing system which seeks the safest ways to arrange code instead of shoals of fish, it becomes possible to slowly zero in on the best way for that software to be structured.

The Hull researchers, in conjunction with Dr Youcef Gheraibia, a postdoctoral researcher from Algeria, turned to search tools based on the collaborative foraging behaviour of penguins.

The foraging-based system helped to quickly search through the many possible ways software can be specified to home in on the best solutions in terms of safety and cost.

Currently, complex software was put together and tested manually, with only experience and engineering judgement to guide it, said Prof Papadopoulos. While this could produce decent results it could consider only a small fraction of all possible good solutions.

The penguin-based system could crank through more solutions and do a better job of assessing which was best, he said.
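To give a flavour of what such a search looks like, here is a toy Python sketch. It is not the Hull team's algorithm, whose details the article does not give. It is a generic population-based search loosely modelled on the description above: groups share their best find, and the least successful group regroups around the best spot found so far. The cost table, the five integrity levels and the "levels must add up to at least 8" safety rule are all invented for illustration.

```python
import random

# Hypothetical cost of building each component at integrity level 0..4.
COSTS = [
    [0, 10, 20, 40, 50],
    [0, 5, 15, 20, 60],
    [0, 8, 12, 30, 45],
    [0, 20, 25, 35, 40],
]
REQUIRED = 8  # toy safety rule: the levels must add up to at least this


def cost(levels):
    """Build cost of a design, with a heavy penalty if it is unsafe."""
    total = sum(COSTS[i][lvl] for i, lvl in enumerate(levels))
    shortfall = max(0, REQUIRED - sum(levels))
    return total + 1000 * shortfall


def neighbour(levels, rng):
    """Change one component's level by one step, staying within 0..4."""
    out = list(levels)
    i = rng.randrange(len(out))
    out[i] = min(4, max(0, out[i] + rng.choice((-1, 1))))
    return tuple(out)


def forage(groups=4, members=5, dives=60, seed=1):
    rng = random.Random(seed)
    flock = [[tuple(rng.randrange(5) for _ in COSTS) for _ in range(members)]
             for _ in range(groups)]
    best = min((p for g in flock for p in g), key=cost)
    for _ in range(dives):
        for g in flock:
            leader = min(g, key=cost)  # the group shares its best find
            for k, p in enumerate(g):
                # Each member explores near its own spot or the leader's.
                start = leader if rng.random() < 0.5 else p
                cand = neighbour(start, rng)
                if cost(cand) < cost(p):
                    g[k] = cand
            best = min([best, *g], key=cost)
        # The least successful group regroups around the best spot so far.
        worst = max(flock, key=lambda g: min(cost(p) for p in g))
        worst[:] = [neighbour(best, rng) for _ in worst]
    return best


best = forage()
print(best, cost(best))
```

With only four components an exhaustive check would be trivial. The appeal of this kind of search is that it keeps working when the number of possible designs is far too large to enumerate.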

Under pressure

Mike Ahmadi, global director of critical systems security at Synopsys, which helps vehicle-makers secure code, said modern car manufacturing methods made optimisation necessary.

“When you look at a car today, it’s essentially something that’s put together from a vast and extended supply chain,” he said.

Building a car was about getting sub-systems made by different manufacturers to work together well, rather than being something made wholly in one place.

That was a tricky task given how much code was present in modern cars, he added.

“There’s about a million lines of code in the average car today and there’s far more in connected cars.”

Carmakers were under pressure, said Mr Ahmadi, to adapt cars quickly so they could interface with smartphones and act as mobile entertainment hubs, as well as make them more autonomous.

“From a performance point of view carmakers have gone as far as they can,” he said. “What they have discovered is that the way to offer features now is through software.”

Security would become a priority as cars got smarter and started taking in and using data from other cars, traffic lights and online sources, said Nick Cook from software firm Intercede, which is working with carmakers on safe in-car software.

“If somebody wants to interfere with a car today then generally they have to go to the car itself,” he said. “But as soon as it’s connected they can be anywhere in the world.

“Your threat landscape is quite significantly different and the opportunity for a hack is much higher.”

Tuesday, January 10. 2017

The mind-blowing AI announcement from Google that you probably missed.

Via freeCodeCamp

-----

In the closing weeks of 2016, Google published an article that quietly sailed under most people’s radars. Which is a shame, because it may just be the most astonishing article about machine learning that I read last year.

Don’t feel bad if you missed it. Not only was the article competing with the pre-Christmas rush that most of us were navigating — it was also tucked away on Google’s Research Blog, beneath the geektastic headline Zero-Shot Translation with Google’s Multilingual Neural Machine Translation System.

This doesn’t exactly scream must read, does it? Especially when you’ve got projects to wind up, gifts to buy, and family feuds to be resolved — all while the advent calendar relentlessly counts down the days until Christmas like some kind of chocolate-filled Yuletide doomsday clock.

Luckily, I’m here to bring you up to speed. Here’s the deal.

Up until September of last year, Google Translate used phrase-based translation. It basically did the same thing you and I do when we look up key words and phrases in our Lonely Planet language guides. It’s effective enough, and blisteringly fast compared to awkwardly thumbing your way through a bunch of pages looking for the French equivalent of “please bring me all of your cheese and don’t stop until I fall over.” But it lacks nuance.

Phrase-based translation is a blunt instrument. It does the job well enough to get by. But mapping roughly equivalent words and phrases without an understanding of linguistic structures can only produce crude results.

This approach is also limited by the extent of an available vocabulary. Phrase-based translation has no capacity to make educated guesses at words it doesn’t recognize, and can’t learn from new input.

All that changed in September, when Google gave their translation tool a new engine: the Google Neural Machine Translation system (GNMT). This new engine comes fully loaded with all the hot 2016 buzzwords, like neural network and machine learning.

The short version is that Google Translate got smart. It developed the ability to learn from the people who used it. It learned how to make educated guesses about the content, tone, and meaning of phrases based on the context of other words and phrases around them. And — here’s the bit that should make your brain explode — it got creative.

Google Translate invented its own language to help it translate more effectively.

What’s more, nobody told it to. It didn’t develop a language (or interlingua, as Google call it) because it was coded to. It developed a new language because the software determined over time that this was the most efficient way to solve the problem of translation.

Stop and think about that for a moment. Let it sink in. A neural computing system designed to translate content from one human language into another developed its own internal language to make the task more efficient. Without being told to do so. In a matter of weeks.

To understand what’s going on, we need to understand what zero-shot translation capability is. Here’s Google’s Mike Schuster, Nikhil Thorat, and Melvin Johnson from the original blog post:

Let’s say we train a multilingual system with Japanese⇄English and Korean⇄English examples. Our multilingual system, with the same size as a single GNMT system, shares its parameters to translate between these four different language pairs. This sharing enables the system to transfer the “translation knowledge” from one language pair to the others. This transfer learning and the need to translate between multiple languages forces the system to better use its modeling power.

This inspired us to ask the following question: Can we translate between a language pair which the system has never seen before? An example of this would be translations between Korean and Japanese where Korean⇄Japanese examples were not shown to the system. Impressively, the answer is yes — it can generate reasonable Korean⇄Japanese translations, even though it has never been taught to do so.

Here you can see an advantage of Google’s new neural machine over the old phrase-based approach. GNMT is able to learn how to translate between two languages without being explicitly taught. This wouldn’t be possible in a phrase-based model, where translation is dependent upon an explicit dictionary to map words and phrases between each pair of languages being translated.
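To make the "never seen before" part concrete, here is a minimal sketch of the bookkeeping only, with no actual model. It assumes the multilingual setup marks the desired output language with a token prepended to the input; the `<2xx>` token format, the function names, and the language codes are illustrative assumptions, not something the quoted post spells out.

```python
from itertools import permutations


def tag_source(sentence: str, target_lang: str) -> str:
    """Prepend a (hypothetical) target-language token to the input sentence."""
    return f"<2{target_lang}> {sentence}"


# Language pairs with parallel training examples, as in the quoted scenario.
TRAINED_PAIRS = [("ja", "en"), ("ko", "en")]


def trained_directions(pairs):
    """Each pair is trained in both directions, so two pairs give four directions."""
    return {(a, b) for a, b in pairs} | {(b, a) for a, b in pairs}


def zero_shot_directions(pairs):
    """Directions between known languages that have no training examples."""
    languages = {lang for pair in pairs for lang in pair}
    return set(permutations(languages, 2)) - trained_directions(pairs)


if __name__ == "__main__":
    print(sorted(trained_directions(TRAINED_PAIRS)))
    print(sorted(zero_shot_directions(TRAINED_PAIRS)))
    # A zero-shot request is formed exactly like a trained one.
    print(tag_source("안녕하세요", "ja"))
```

The point of the sketch is that a Korean-to-Japanese request looks no different from a trained request; whether the output is any good depends entirely on what the shared model has learned.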

And this leads the Google engineers onto that truly astonishing discovery of creation:

The success of the zero-shot translation raises another important question: Is the system learning a common representation in which sentences with the same meaning are represented in similar ways regardless of language — i.e. an “interlingua”? Using a 3-dimensional representation of internal network data, we were able to take a peek into the system as it translates a set of sentences between all possible pairs of the Japanese, Korean, and English languages.

Within a single group, we see a sentence with the same meaning but from three different languages. This means the network must be encoding something about the semantics of the sentence rather than simply memorizing phrase-to-phrase translations. We interpret this as a sign of existence of an interlingua in the network.
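A rough way to picture that grouping is to compare sentence vectors by cosine similarity. The sketch below uses toy 3-dimensional vectors that are invented purely for illustration; they are not GNMT data, and the labels and the `cosine` helper are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Made-up vectors: same meaning sits close together, whatever the language.
TOY_VECTORS = {
    ("en", "hello"): (0.90, 0.10, 0.00),
    ("ja", "hello"): (0.85, 0.15, 0.05),
    ("ko", "hello"): (0.88, 0.12, 0.02),
    ("en", "thanks"): (0.10, 0.90, 0.10),
    ("ja", "thanks"): (0.12, 0.85, 0.15),
}

if __name__ == "__main__":
    same_meaning = cosine(TOY_VECTORS[("en", "hello")], TOY_VECTORS[("ja", "hello")])
    same_language = cosine(TOY_VECTORS[("en", "hello")], TOY_VECTORS[("en", "thanks")])
    print(f"same meaning, different language: {same_meaning:.3f}")
    print(f"same language, different meaning: {same_language:.3f}")
```

If a network's internal vectors behaved like these toy ones, clustering them would group sentences by meaning rather than by language, which is the pattern the engineers describe.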

So there you have it. In the last weeks of 2016, as journos around the world started penning their “was this the worst year in living memory” thinkpieces, Google engineers were quietly documenting a genuinely astonishing breakthrough in software engineering and linguistics.

I just thought maybe you’d want to know.

Ok, to really understand what’s going on we probably need multiple computer science and linguistics degrees. I’m just barely scraping the surface here. If you’ve got time to get a few degrees (or if you’ve already got them) please drop me a line and explain it all to me. Slowly.

Update 1: in my excitement, it’s fair to say that I’ve exaggerated the idea of this as an ‘intelligent’ system — at least so far as we would think about human intelligence and decision making. Make sure you read Chris McDonald’s comment after the article for a more sober perspective.

Update 2: Nafrondel’s excellent, detailed reply is also a must read for an expert explanation of how neural networks function.

Monday, January 18. 2016

MIT Researchers Train An Algorithm To Predict How Boring Your Selfie Is

Via TechCrunch

-----

Researchers at MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) have created an algorithm they claim can predict how memorable or forgettable an image is almost as accurately as a human — which is to say that their tech can predict how likely a person would be to remember or forget a particular photo.

The algorithm performed 30 per cent better than existing algorithms and was within a few percentage points of the average human performance, according to the researchers.

The team has put a demo of their tool online here, where you can upload your selfie to get a memorability score and view a heat map showing areas the algorithm considers more or less memorable. They have also published a paper on the research which can be found here.

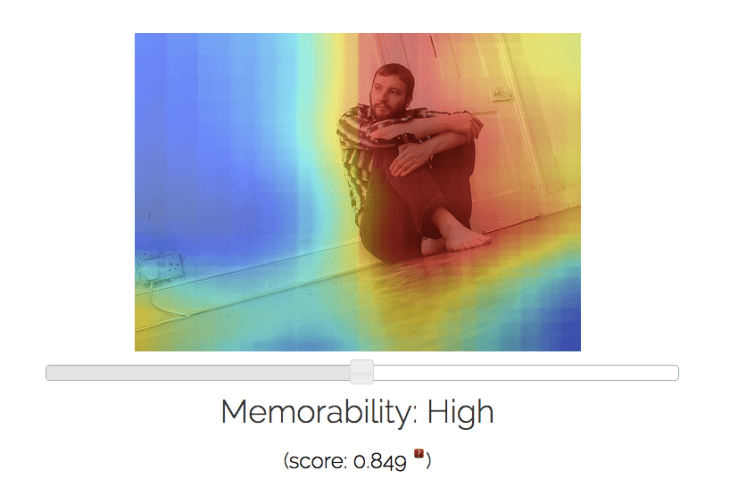

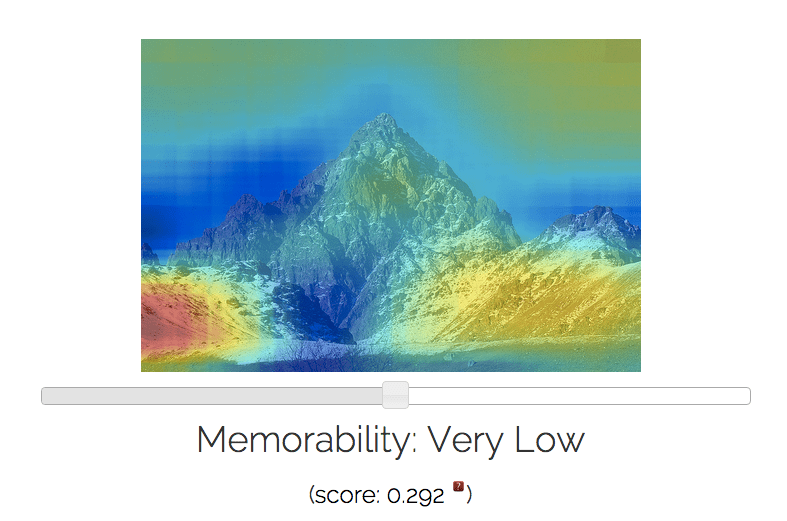

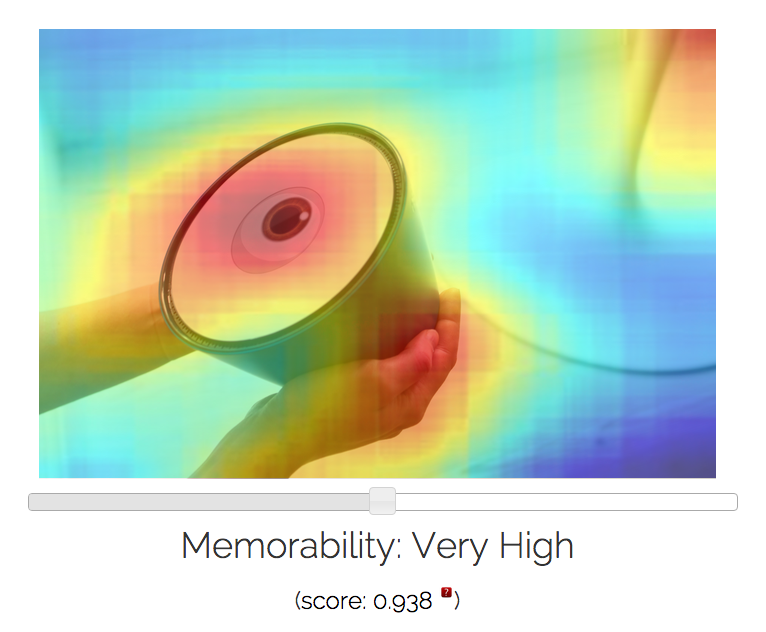

Here are some examples of images I ran through their MemNet algorithm, with resulting memorability scores and most and least forgettable areas depicted via heat map:

Potential applications for the algorithm are very broad indeed when you consider how photos and photo-sharing remain the currency of the social web. Anything that helps improve understanding of how people process visual information and the impact of that information on memory has clear utility.

The team says it plans to release an app in future to allow users to tweak images to improve their impact. So the research could be used to underpin future photo filters that do more than airbrush facial features to make a shot more photogenic — but maybe tweak some of the elements to make the image more memorable too.

Beyond helping people create a more lasting impression with their selfies, the team envisages applications for the algorithm to enhance ad/marketing content, improve teaching resources and even power health-related applications aimed at improving a person’s capacity to remember or even as a way to diagnose errors in memory and perhaps identify particular medical conditions.

The MemNet algorithm was created using deep learning AI techniques, and specifically trained on tens of thousands of tagged images from several different datasets all developed at CSAIL — including LaMem, which contains 60,000 images each annotated with detailed metadata about qualities such as popularity and emotional impact.

Publishing the LaMem database alongside their paper is part of the team’s effort to encourage further research into what they say has often been an under-studied topic in computer vision.

Asked to explain what kind of patterns the deep-learning algorithm is trying to identify in order to predict memorability/forgettability, PhD candidate at MIT CSAIL, Aditya Khosla, who was lead author on a related paper, tells TechCrunch: “This is a very difficult question and active area of research. While the deep learning algorithms are extremely powerful and are able to identify patterns in images that make them more or less memorable, it is rather challenging to look under the hood to identify the precise characteristics the algorithm is identifying.

“In general, the algorithm makes use of the objects and scenes in the image but exactly how it does so is difficult to explain. Some initial analysis shows that (exposed) body parts and faces tend to be highly memorable while images showing outdoor scenes such as beaches or the horizon tend to be rather forgettable.”

The research involved showing people images, one after another, and asking them to press a key when they encounter an image they had seen before to create a memorability score for images used to train the algorithm. The team had about 5,000 people from the Amazon Mechanical Turk crowdsourcing platform view a subset of its images, with each image in their LaMem dataset viewed on average by 80 unique individuals, according to Khosla.
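As a rough illustration of how such key presses could become a number, the sketch below treats an image's score as the fraction of viewers who correctly flagged it on its repeat showing. That formula is an assumption made for this example; the article does not say exactly how the researchers computed their scores, and `memorability_score` is a hypothetical name.

```python
def memorability_score(responses):
    """Fraction of viewers who flagged the repeated image as seen before.

    `responses` holds one boolean per viewer: True if they pressed the key
    when the image was shown the second time.
    """
    if not responses:
        raise ValueError("need at least one viewer response")
    return sum(responses) / len(responses)


if __name__ == "__main__":
    # 80 viewers, matching the average quoted above; 60 recognised the image.
    viewers = [True] * 60 + [False] * 20
    print(memorability_score(viewers))
```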

In terms of shortcomings, the algorithm does less well on types of images it has not been trained on so far, as you’d expect — so it’s better on natural images and less good on logos or line drawings right now.

“It has not seen how variations in colors, fonts, etc affect the memorability of logos, so it would have a limited understanding of these,” says Khosla. “But addressing this is a matter of capturing such data, and this is something we hope to explore in the near future — capturing specialized data for specific domains in order to better understand them and potentially allow for commercial applications there. One of those domains we’re focusing on at the moment is faces.”

The team has previously developed a similar algorithm for face memorability.

Discussing how the planned MemNet app might work, Khosla says there are various options for how images could be tweaked based on algorithmic input, although ensuring a pleasing end photo is part of the challenge here. “The simple approach would be to use the heat map to blur out regions that are not memorable to emphasize the regions of high memorability, or simply applying an Instagram-like filter or cropping the image a particular way,” he notes.

“The complex approach would involve adding or removing objects from images automatically to change the memorability of the image — but as you can imagine, this is pretty hard — we would have to ensure that the object size, shape, pose and so on match the scene they are being added to, to avoid looking like a photoshop job gone bad.”
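The "simple approach" Khosla mentions can be sketched in a few lines. This is only an illustration under stated assumptions: the image is a small grayscale grid, the heat map has the same shape with values between 0 and 1, and the 0.5 threshold and 3x3 box blur are arbitrary choices. None of the names below come from MemNet.

```python
def box_blur_at(image, r, c):
    """Mean of the 3x3 neighbourhood around (r, c), clipped at the borders."""
    rows, cols = len(image), len(image[0])
    values = [
        image[i][j]
        for i in range(max(0, r - 1), min(rows, r + 2))
        for j in range(max(0, c - 1), min(cols, c + 2))
    ]
    return sum(values) / len(values)


def blur_forgettable(image, heat_map, threshold=0.5):
    """Keep memorable pixels as they are and blur the ones below the threshold."""
    return [
        [
            image[r][c] if heat_map[r][c] >= threshold else box_blur_at(image, r, c)
            for c in range(len(image[0]))
        ]
        for r in range(len(image))
    ]


if __name__ == "__main__":
    image = [[0, 100, 0], [100, 0, 100], [0, 100, 0]]
    heat_map = [[0.9, 0.9, 0.9], [0.9, 0.1, 0.9], [0.9, 0.9, 0.9]]
    for row in blur_forgettable(image, heat_map):
        print(row)
```

Only the centre pixel falls below the threshold here, so it is replaced by the average of its neighbourhood while everything else is left untouched.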

Looking ahead, the next step for the researchers will be to try to update their system to be able to predict the memory of a specific person. They also want to be able to better tailor it for individual “expert industries” such as retail clothing and logo-design.

How many training images they’d need to show an individual person before being able to algorithmically predict their capacity to remember images in future is not yet clear. “This is something we are still investigating,” says Khosla.

Wednesday, January 06. 2016

New internet error code identifies censored websites

Via SlashGear

-----

Everyone on the internet has come across at least a couple of error codes, the most well-known being 404, for page not found, while other common ones include 500, for internal server error, and 403, for a "forbidden" page. However, with the latter, there's the growing issue of why a certain webpage has become forbidden, or who made it so. In an effort to address things like censorship or "legal obstacles," a new code has been published, to be used when legal demands require access to a page be blocked: error 451.

The number is a knowing reference to Fahrenheit 451, the novel by Ray Bradbury that depicted a dystopian future where books are banned for spreading dissenting ideas, and are burned as a way to censor the spread of information. The code itself was approved for use by the Internet Engineering Steering Group (IESG), which helps maintain internet standards.

The idea for code 451 originally came about around 3 years ago, when a UK court ruling required some websites to block The Pirate Bay. Most sites in turn used the 403 "forbidden" code, making it unclear to users what the issue was. The goal of 451 is to eliminate some of the confusion around why sites may be blocked.

The use of the code is completely voluntary, however, and requires developers to begin adopting it. But if widely implemented, it should be able to communicate to users that some information has been taken down because of a legal demand, or is being censored by a national government.
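For developers wondering what adoption might look like, here is a minimal sketch using Python's standard `http.HTTPStatus` enum, which includes `UNAVAILABLE_FOR_LEGAL_REASONS` (451) from Python 3.8 onward. The block lists, paths, and function names are hypothetical and exist only for this example.

```python
from http import HTTPStatus

# Hypothetical block lists, invented for this example.
LEGALLY_BLOCKED = {"/torrents"}
FORBIDDEN = {"/admin"}


def status_for(path: str) -> HTTPStatus:
    """Pick 451 for legally blocked pages instead of a generic 403."""
    if path in LEGALLY_BLOCKED:
        return HTTPStatus.UNAVAILABLE_FOR_LEGAL_REASONS  # 451
    if path in FORBIDDEN:
        return HTTPStatus.FORBIDDEN  # 403
    return HTTPStatus.OK


def status_line(path: str) -> str:
    """Format the first line of an HTTP/1.1 response for the given path."""
    status = status_for(path)
    return f"HTTP/1.1 {status.value} {status.phrase}"


if __name__ == "__main__":
    for path in ("/torrents", "/admin", "/index.html"):
        print(path, "->", status_line(path))
```

The distinction is the whole point: a client that receives 451 rather than 403 can tell the user that the page is unavailable because of a legal demand, not because of a permissions problem.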

Monday, January 04. 2016

Your Algorithmic Self Meets Super-Intelligent AI

Via TechCrunch

-----

As humanity debates the threats and opportunities of advanced artificial intelligence, we are simultaneously enabling that technology through the increasing use of personalization that is understanding and anticipating our needs through sophisticated machine learning solutions.

In effect, while using personalization technologies in our everyday lives, we are contributing in a real way to the development of the intelligent systems we purport to fear.

Perhaps uncovering the currently inaccessible personalization systems is crucial for creating a sustainable relationship between humans and super-intelligent machines?

From Machines Learning About You…

Industry giants are currently racing to develop more intelligent and lucrative AI solutions. Google is extending the ways machine learning can be applied in search, and beyond. Facebook’s messenger assistant M is combining deep learning and human curators to achieve the next level in personalization.

With your iPhone you’re carrying Apple’s digital assistant Siri with you everywhere; Microsoft’s counterpart Cortana can live in your smartphone, too. IBM’s Watson has highlighted its diverse features, varying from computer vision and natural language processing to cooking skills and business analytics.

At the same time, your data and personalized experiences are used to develop and train the machine learning systems that are powering the Siris, Watsons, Ms and Cortanas. Be it a speech recognition solution or a recommendation algorithm, your actions and personal data affect how these sophisticated systems learn more about you and the world around you.

The less explicit fact is that your diverse interactions (your likes, photos, locations, tags, videos, comments, route selections, recommendations and ratings) feed learning systems that could someday transform into super-intelligent AIs with unpredictable consequences.

As of today, you can’t directly affect how your personal data is used in these systems.

In these times, when we’re starting to use serious resources to contemplate the creation of ethical frameworks for super-intelligent AIs-to-be, we also should focus on creating ethical terms for the use of personal data and the personalization technologies that are powering the development of such systems.

To make sure that you as an individual continue to have a meaningful agency in the emerging algorithmic reality, we need learning algorithms that are on your side and solutions that augment and extend your abilities. How could this happen?

…To Machines That Learn For You

Smart devices extend and augment your memory (no forgotten birthdays) and brain processing power (no calculating in your head anymore). And they augment your senses by letting you experience things beyond your immediate environment (think AR and VR).

The web itself gives you access to a huge amount of diverse information and collective knowledge. The next step would be that smart devices and systems enhance and expand your abilities even more. What is required for that to happen in a human-centric way?

Data Awareness And Algorithmic Accountability

Algorithmic systems and personal data are too often seen as something abstract, incomprehensible and uncontrollable. Concretely, how many really stopped using Facebook or Google after PRISM came out in the open? Or after we learned that we are exposed to continuous A/B testing that is used to develop even more powerful algorithms?

More and more people are getting interested in data ethics and algorithmic accountability. Academics are already analyzing the effects of current data policies and algorithmic systems. Educational organizations are starting to emphasize the importance of coding and digital literacy.

Initiatives such as VRM, Indie Web and MyData are raising awareness on alternative data ecosystems and data management practices. Big companies like Apple and various upcoming startups are bringing personal data issues to the mainstream discussion.

Yet we still need new tools and techniques to become more data aware and to see how algorithms can be more beneficial for us as unique individuals. We need apps and data visualizations with great user experience to illuminate the possibilities of more human-centric personalization.

It’s time to create systems that evaluate algorithmic biases and keep them in check. More accessible algorithms and transparent data policies are created only through wider collaboration that brings together companies, developers, designers, users and scientists alike.

Personal Machine Learning Systems

Personalization technologies are already augmenting your decision making and future thinking by learning from you and recommending to you what to see and do next. However, not on your own terms. Rather than letting someone else and their motives and values dictate how the algorithms work and affect your life, it’s time to create solutions, such as algorithmic angels, that let you develop and customize your own algorithms and choose how they use your data.

When you’re in control, you can let your personal learning system access previously hidden data and surface intimate insights about your own behavior, thus increasing your self-awareness in an actionable way.

Personal learners could help you develop skills related to work or personal life, augmenting and expanding your abilities, for example in learning languages, writing or playing new games. Fitness or meditation apps powered by your personal algorithms would know you better than any personal trainer.

Google’s experiments with deep learning and image manipulation showed us how machine learning could be used to augment creative output. Systems capable of combining your data with different materials like images, text, sound and video could expand your abilities to see and utilize new and unexpected connections around you.

In effect, your personal algorithm can take a mind-expanding “trip” on your behalf, letting you see music or sense other dimensions beyond normal human abilities. By knowing you, personal algorithms can expose you to new diverse information, thus breaking your existing filter bubbles.

Additionally, people tinkering with their personal algorithms would create more “citizen algorithm experts,” like “citizen scientists,” coming up with new ideas, solutions and observations, stemming from real-life situations and experiences.

However, personally adjustable algorithms for the general public are not happening overnight, even though Google recently open-sourced parts of its machine learning framework. But it’s possible to see how today’s personalization experiences can someday evolve into customizable algorithms that strengthen your agency and capacity to deal with other algorithmic systems.

Algorithmic Self

The next step is that your personal algorithms become a more concrete part of you, continuously evolving with you by learning from your interactions both in digital and physical environments. Your algorithmic self combines your personal abilities and knowledge with machine learning systems that adapt to you and work for you. Be it your smartwatch, self-driving car or an intelligent home system, they can all be spirited by your algorithmic self.

Your algorithmic self also can connect with other algorithmic selves, thus empowering you with the accumulating collective knowledge and intelligence. To expand your existing skills and faculties, your algorithmic self also starts to learn and act on its own, filtering information, making online transactions and comparing best options on your behalf. It makes you more resourceful, and even a better person, when you can concentrate on things that really require your human presence and attention.

Partly algorithmic humans are not bound by existing human capabilities; new skills and abilities emerge when human intelligence is extended with algorithmic selves. For example, your algorithmic self can multiply to execute different actions simultaneously. Algorithmic selves could also create simple simulations by playing out different scenarios involving your real-life choices and their consequences, helping you to make better decisions in the future.

Algorithmic selves, tuned by your data and personal learners, also could be the key when creating invasive human-computer interfaces that connect digital systems directly to your brain, expanding the human brain concretely beyond the “wetware.”

But to ensure that your algorithmic self works for your benefit, could you trust someone building that for you without you participating in the process?

Machine learning expert Pedro Domingos says in his new book “The Master Algorithm” that “[m]achine learning will not single-handedly determine the future… it’s what we decide to do with it that counts.”

Machines are still far from human intelligence. No one knows exactly when super-intelligent AIs will become concrete reality. But developing personal machine learning systems could enable us to interact with any algorithmic entities, be it an obtrusive recommendation algorithm or a super-intelligent AI.

In general, being more transparent on how learning algorithms work and use our data could be crucial for creating ethical and sustainable artificial intelligence. And potentially, maybe we wouldn’t need to fear being overpowered by our own creations.
