Tuesday, January 31. 2012

Build Up Your Phone’s Defenses Against Hackers

-----

Chuck Bokath would be terrifying if he were not such a nice guy. A jovial senior engineer at the Georgia Tech Research Institute in Atlanta, Mr. Bokath can hack into your cellphone just by dialing the number. He can remotely listen to your calls, read your text messages, snap pictures with your phone’s camera and track your movements around town — not to mention access the password to your online bank account.

And while Mr. Bokath’s job is to expose security flaws in wireless devices, he said it was “trivial” to hack into a cellphone. Indeed, the instructions on how to do it are available online (the link most certainly will not be provided here). “It’s actually quite frightening,” said Mr. Bokath. “Most people have no idea how vulnerable they are when they use their cellphones.”

Technology experts expect breached, infiltrated or otherwise compromised cellphones to be the scourge of 2012. The smartphone security company Lookout Inc. estimates that more than a million phones worldwide have already been affected. But there are ways to reduce the likelihood of getting hacked — whether by a jealous ex or Russian crime syndicate — or at least minimize the damage should you fall prey.

As cellphones have gotten smarter, they have become less like phones and more like computers, and thus susceptible to hacking. But unlike desktop or even most laptop computers, cellphones are almost always on hand, and are often loaded with even more personal information. So an undefended or carelessly operated phone can result in a breathtaking invasion of individual privacy as well as the potential for data corruption and outright theft.

“Individuals can have a significant impact in protecting themselves from the kind of fraud and cybercrimes we’re starting to see in the mobile space,” said Paul N. Smocer, the president of Bits, the technology policy division of the Financial Services Roundtable, an industry association of more than 100 financial institutions.

Cellphones can be hacked in several ways. A so-called man-in-the-middle attack, Mr. Bokath’s specialty, is when someone hacks into a phone’s operating system and reroutes data to make a pit stop at a snooping third party before sending it on to its destination.

That means the hacker can listen to your calls, read your text messages, follow your Internet browsing activity and keystrokes and pinpoint your geographical location. A sophisticated perpetrator of a man-in-the-middle attack can even instruct your phone to transmit audio and video when your phone is turned off so intimate encounters and sensitive business negotiations essentially become broadcast news.

How do you protect yourself? Yanking out your phone’s battery is about the only way to interrupt the flow of information if you suspect you are already under surveillance. As for prevention, a common ruse for making a man-in-the-middle attack is to send the target a text message that claims to be from his or her cell service provider asking for permission to “reprovision” or otherwise reconfigure the phone’s settings due to a network outage or other problem. Don’t click “O.K.” Call your carrier to see if the message is bogus.

For added security, Mr. Bokath uses a prepaid subscriber identity module, or SIM, card, which he throws away after using up the line of credit. A SIM card digitally identifies the cellphone’s user, not only to the cellphone provider but also to hackers. It can take several months for the cellphone registry to associate you with a new SIM. So regularly changing the SIM card, even if you have a contract, will make you harder to target. They are not expensive (about $25 for 50 of them on eBay). This tactic works only if your phone is from AT&T or T-Mobile, which support SIM cards. Verizon and Sprint do not.

Another way hackers can take over your phone is by embedding malware, or malicious software, in an app. When you download the app, the malware gets to work corrupting your system and stealing your data. Or the app might just be poorly designed, allowing hackers to exploit a security deficiency and insert malware on your phone when you visit a dodgy Web site or perhaps click on nefarious attachments or links in e-mails. Again, treat your cellphone as you would a computer. If it’s unlikely Aunt Beatrice texted or e-mailed you a link to “Great deals on Viagra!”, don’t click on it.

Since apps are a likely vector for malware transmission on smartphones, Roman Schlegel, a computer scientist at City University of Hong Kong who specializes in mobile security threats, advised, “Only buy apps from a well-known vendor like Google or Apple, not some lonely developer.”

It’s also a good idea to read the “permissions” that apps require before downloading them. “Be sure the permissions requested make sense,” Mr. Schlegel said. “Does it make sense for an alarm clock app to want permission to record audio? Probably not.” Be especially wary of apps that want permission to make phone calls, connect to the Internet or reveal your identity and location.
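The "does this permission make sense" test can be written down as a small check. What follows is only a sketch: the permission strings are standard Android permission names, but the list of what an alarm clock "should" need and the set treated as sensitive are assumptions made for illustration, not something the article or any app store defines.

```python
# Permissions matching the article's warning list (calls, Internet,
# identity, location) plus audio recording. The grouping is an assumption.
SENSITIVE = {
    "android.permission.RECORD_AUDIO",
    "android.permission.CALL_PHONE",
    "android.permission.INTERNET",
    "android.permission.READ_PHONE_STATE",
    "android.permission.ACCESS_FINE_LOCATION",
}


def review_permissions(requested, expected):
    """Compare what an app requests with what its purpose plausibly needs.

    Returns the requests that were not expected, and the subset of those
    that fall in the SENSITIVE set above.
    """
    unexpected = sorted(set(requested) - set(expected))
    sensitive = [p for p in unexpected if p in SENSITIVE]
    return {"unexpected": unexpected, "sensitive": sensitive}


# Hypothetical alarm clock app: waking the phone and vibrating are plausible,
# recording audio and going online are not.
alarm_clock_expected = {
    "android.permission.WAKE_LOCK",
    "android.permission.VIBRATE",
}
alarm_clock_requested = [
    "android.permission.WAKE_LOCK",
    "android.permission.VIBRATE",
    "android.permission.RECORD_AUDIO",
    "android.permission.INTERNET",
]
report = review_permissions(alarm_clock_requested, alarm_clock_expected)
```

An empty "sensitive" list does not prove an app is safe; the check only makes the mismatch Mr. Schlegel describes easy to spot.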

The Google Android Market, Microsoft Windows Phone Marketplace, Research in Motion BlackBerry App World and Appstore for Android on Amazon.com all disclose the permissions of apps they sell. The Apple iTunes App Store does not, because Apple says it vets all the apps in its store.

Also avoid free unofficial versions of popular apps, say, Angry Birds or Fruit Ninja. They often have malware hidden in the code. Do, however, download an antivirus app like Lookout, Norton or AVG. Some are free. Just know that security apps screen only for viruses, worms, Trojans and other malware that are already in circulation. They are always playing catch-up to hackers who are continually developing new kinds of malware. That’s why it’s important to promptly download security updates, not only from app developers but also from your cellphone provider.

Clues that you might have already been infected include delayed receipt of e-mails and texts, sluggish performance while surfing the Internet and shorter battery life. Also look for unexplained charges on your cellphone bill.

As a general rule it is safer to use a 3G network than public Wi-Fi. Using Wi-Fi in a Starbucks or airport, for example, leaves you open to hackers shooting the equivalent of “gossamer threads into your phone, which they use to reel in your data,” said Martin H. Singer, chief executive of Pctel, a company in Bloomingdale, Ill., that provides wireless security services to government and industry.

If that creepy image tips you into the realm of paranoia, there are supersecure smartphones like the Sectéra Edge by General Dynamics, which was commissioned by the Defense Department for use by soldiers and spies. Today, the phone is available for $3,000 only to those working for government-sponsored entities, but it’s rumored that the company is working to provide something similar to the public in the near future. General Dynamics did not wish to comment.

Georgia Tech Research Institute is taking a different tack by developing software add-on solutions to make commercially available phones as locked-down as those used by government agents.

Michael Pearce, a mobile security consultant with Neohapsis in Chicago, said you probably did not need to go as far as buying a spy phone, but you should take precautions. “It’s like any arms race,” he said. “No one wins, but you have to go ahead and fight anyway.”

Monday, January 30. 2012

What happened to innovative games?

Via #AltDevBlogADay

-----

Indie developer Nimblebit dropped a PR bomb on Zynga yesterday with its letter addressing the similarities between their hit iPhone game Tiny Tower and Zynga’s upcoming release, Dream Heights. This galvanized the gaming community, with thousands of people, from prominent bloggers to gamers on Reddit, criticizing the company.

However, just after the new year, Atari ordered the removal of Black Powder Media’s Vector Tanks, a game strongly inspired by Atari’s Battlezone. This galvanized the community in a similar way, except this time, gamers were furious that Atari shut down an indie game company that made an extremely similar game.

Unfortunately, the line between inspiration and copying is incredibly blurry at best. The one thing that’s certain is that copying is here to stay. Copying has been present in some form since the dawn of capitalism (if you need proof, just go to the toothpaste aisle of your local supermarket). The game industry is no stranger to this trend: game companies have been copying each other for years. Given its repeated success, there’s little reason to think that this practice will stop. Indie flash game studio XGEN Studios posted a response to Nimblebit, showing that their hit games were also copied:

Some would even argue that the incredibly successful iOS game Angry Birds was a copy of the popular Armor Games flash game, Crush the Castle, but then Crush the Castle was inspired by others that came before it. Social games even borrow many of their game mechanics from slot machines to increase retention. So what is copying, or more importantly, which parts of it are moral and immoral? Everyone seems to have a different answer, but it’s safe to say that people always copy the most successful ideas. The one thing that those in the Zynga-Nimblebit conversation seem to have overlooked is that everyone copies others in some way.

Of course, while imitation may be the sincerest form of flattery, it doesn’t feel good to be imitated when a competitor comes after your users. In this case, people may question Zynga’s authenticity and make a distinction between inspiration and outright duplication. But at the same time, Zynga’s continued success with the “watch, then replicate” model shows that marketing, analytics, and operations can improve on an existing game concept. Or just give them the firepower to beat out the original game, depending on how you look at it.

Friday, January 27. 2012

A New Mathematics for Computing

Via Input Output

-----

We live in a world where digital technology is so advanced that even what used to be science fiction looks quaint. (Really, Kirk, can you download Klingon soap operas on that communicator?) Yet underlying it all is a mathematical theory that dates back to 1948.

That year, Claude Shannon published the foundational information theory paper, A Mathematical Theory of Communication (PDF). Shannon’s work underlies communications channels as diverse as proprietary wireless networks and leads on printed circuits.

Yet in Shannon’s time, as we all know, there were no personal computers, and the only guy communicating without a desk telephone was Dick Tracy. In our current era, the number of transistors on chips has gotten so dense that the potential limits of Moore’s Law are routinely discussed. Multi-core processors, once beyond the dreams of even supercomputers, are now standard on consumer laptops. Is it any wonder then, that even Shannon’s foresighted genius may have reached its limits?

From the Internet to a child’s game of telephone, the challenge in communications is to relay a message accurately, without losing any of it to noise. Prior to Shannon, the answer was a cumbersome redundancy. Imagine a communications channel as a conveyer belt, with every message as a pile of loose diamonds: If you send hundreds of piles down the belt, some individual diamonds might fall off, but at least you get most of the diamonds through quickly. If you wrap every single diamond in bubble wrap, you won’t lose any individual diamonds, but now you can’t send as many in the same amount of time.
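One way to read the "bubble wrap every diamond" picture is as a repetition code, where each bit is sent several times and the receiver takes a majority vote. The sketch below is an illustration chosen for this note, not something the article names; the function names and the choice of three copies are assumptions.

```python
def encode_repetition(bits, copies=3):
    """Send every bit `copies` times (each diamond gets its own wrapping)."""
    return [b for b in bits for _ in range(copies)]


def decode_repetition(coded, copies=3):
    """Recover each bit by majority vote over its group of copies."""
    decoded = []
    for i in range(0, len(coded), copies):
        group = coded[i:i + copies]
        decoded.append(1 if sum(group) * 2 > len(group) else 0)
    return decoded


message = [1, 0, 1]
sent = encode_repetition(message)   # three times as many symbols on the belt
noisy = list(sent)
noisy[1] ^= 1                       # noise flips one copy of the first bit
recovered = decode_repetition(noisy)
```

The single flipped copy is outvoted and the message survives, but the channel now carries three symbols for every bit of message, which is the slowdown the analogy describes.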

Shannon’s conceptual breakthrough was to realize you could wrap the entire pile in bubble wrap and save the correction for the very end. “It was a complete revolution,” says Schulman, “You could get better and better reliability without actually slowing down the communications.”

In its simplest form, Shannon’s idea of global error correction is what we know as a “parity check” or “check sum,” in which a section of end digits should be an accurate summation of all the preceding digits. (In a more technical scheme: The message is a string n bits long. It is mapped to another, longer string, a code word. If you know what the Hamming distance between code words, that is, the number of positions in which they differ, should be, you can determine reliability.)
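A minimal sketch of the two ideas in this paragraph, a single parity bit appended to a message and the Hamming distance between two equal-length words. The function names are chosen here for illustration; real codes use far more elaborate checks than one parity bit.

```python
def add_parity(bits):
    """Append one bit so that the total number of 1s is even."""
    return bits + [sum(bits) % 2]


def passes_parity(word):
    """A received word is accepted only if its count of 1s is even."""
    return sum(word) % 2 == 0


def hamming_distance(a, b):
    """Number of positions at which two equal-length words differ."""
    if len(a) != len(b):
        raise ValueError("words must have the same length")
    return sum(x != y for x, y in zip(a, b))


message = [1, 0, 1, 1]
sent = add_parity(message)          # [1, 0, 1, 1, 1]
received = list(sent)
received[2] ^= 1                    # one bit flipped in transit
detected = not passes_parity(received)
distance = hamming_distance(sent, received)
```

A single parity bit notices any odd number of flipped bits but cannot say which bit was flipped; codes whose valid words sit farther apart in Hamming distance can also correct errors.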

Starting back as far as the late 1970s, researchers in the emerging field of communication complexity were beginning to ask themselves: Could you do what Shannon did, if your communication were more of a dialogue than a monologue?

What was a theoretical question then is becoming a real-world problem today. With faster chips and multi-core processors, communications channels are becoming two-way, with small messages going back and forth. Yet error correction still assumes big messages going in one direction. “How on earth are you going to bubble wrap all these things together, if what you say depends on what I said to you?” says Schulman.

The answer is to develop an “interactive” form of error correction. In essence, a message sent using classic Shannon error correction is like a list of commands, “Go Left, Go Right, Go Left again, Go Right…” Like a suffering dinner date, the receiver only gets a chance to reply at the end of the string.

By contrast, an interactive code sounds like a dialog between sender and receiver:

“Going left.”

“Going left too.”

“Going right.”

“Going left instead.”

“Gzzing zzzztt.”

“Huh?”

“Going left too.”

The underlying mathematics for making this conversation highly redundant and reliable is a “tree code.” To the lay person, tree codes resemble a branching graph. To a computer scientist they are “a set of structured binary strings, in which the metric space looks like a tree,” says Schulman, who wrote the original paper laying out their design in 1993.

“Consider a graph that describes every possible sequence of words you could say in your lifetime. For every word you say, there’s thousands of possibilities for the next word,” explains Amit Sahai, “A tree code describes a way of labeling this graph, where each label is an instruction. The ways to label the graph are infinite, but remarkably Leonard showed there is actually one out there that’s useful.”

Tree codes can branch to infinity, a dendritic structure which allows for error correction at multiple points. It also permits tree codes to remember entire sequences; how the next reply is encoded depends on the entire history of the conversation. As a result, a tree code can perform mid-course corrections. It’s as if you think you’re on your way to London, but increasingly everyone around you is speaking French, and then you realize, “Oops, I got on at the wrong Chunnel station.”

The result is that instructions are not only conveyed faster; they’re acted on faster as well, with real-time error correction. There’s no waiting to discover too late that an entire string requires re-sending. In that sense, tree codes could help support the parallel processing needs of multi-core machines. They could also help in the increasingly noisy environments of densely packed chips. “We already have chip-level error correction, using parity checks,” says Schulman. But once again, there’s the problem of one-way transmission, in which an error is only discovered at the end. By contrast, a tree code, “gives you a check sum at every stage of a long interaction,” says Schulman.
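The idea of a check at every stage that depends on the whole conversation so far can be imitated, very loosely, with a running hash of the transcript. To be clear, this is not a tree code and not Schulman's construction; it is a hash-based stand-in, with the `Party` class and `tag` function invented for this sketch, meant only to show an error being noticed at the step where it happens rather than at the end.

```python
import hashlib


def tag(history):
    """Short fingerprint of the entire conversation so far."""
    joined = "\n".join(history).encode("utf-8")
    return hashlib.sha256(joined).hexdigest()[:16]


class Party:
    """One side of a dialogue that keeps the shared transcript."""

    def __init__(self):
        self.history = []

    def send(self, text):
        # Record the message, then fingerprint everything said up to now.
        self.history.append(text)
        return text, tag(self.history)

    def receive(self, text, received_tag):
        # Accept the message only if it fits the history we already hold.
        candidate = self.history + [text]
        if tag(candidate) != received_tag:
            return False  # mismatch noticed at this step: ask for a resend
        self.history = candidate
        return True


alice, bob = Party(), Party()
text, t = alice.send("Going left.")
first_ok = bob.receive(text, t)

text, t = bob.send("Going left too.")
second_ok = alice.receive(text, t)

text, t = alice.send("Going right.")
garbled_ok = bob.receive("Gzzing zzzztt.", t)   # noise corrupted the text
resend_ok = bob.receive(text, t)                # clean resend is accepted
```

Unlike a tree code, this sketch assumes the tag itself arrives intact and can only detect a problem, not correct it; it shows the "remember the whole history" aspect and nothing more.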

Unfortunately, those very intricacies are why interactive communications aren’t coming to a computer near you anytime soon. Currently, tree codes are in the proof of concept phase. “We can start from that math and show they exist, without being able to say: here is one,” says Schulman. One of the primary research challenges is to make the error correction as robust as possible.

One potential alternative that may work much sooner was just published by Sahai and his colleagues. They modified Schulman’s work to create a code that, while not quite the full theoretical ideal of a tree code, may actually perform nearly up to that ideal in real-world applications. “It’s not perfect, but for most purposes, if the technology got to where you needed to use it, you could go ahead with that,” says Schulman, “It’s a really nice piece of progress.”

Sahai, Schulman, and other researchers are still working to create perfect tree codes. “As a mathematician, there’s something that gnaws at us,” says Sahai, “We do want to find the real truth—what is actually needed for this to work.” They look to Shannon as their inspiration. “Where we are now is exactly analogous to what Shannon did in that first paper,” Schulman says, “He proved that good error correcting codes could exist, but it wasn’t until the late 1960s that people figured out how to use algebraic methods to do them, and then the field took off dramatically.”

Wednesday, January 25. 2012

Google’s Broken Promise: The End of "Don’t Be Evil"

Via Gizmodo

-----

In a privacy policy shift, Google announced today that it will begin tracking users universally across all its services—Gmail, Search, YouTube and more—and sharing data on user activity across all of them. So much for the Google we signed up for.

The change was announced in a blog post today, and will go into effect March 1. After that, if you are signed into your Google Account to use any service at all, the company can use that information on other services as well. As Google puts it:

Our new Privacy Policy makes clear that, if you're signed in, we may combine information you've provided from one service with information from other services. In short, we'll treat you as a single user across all our products, which will mean a simpler, more intuitive Google experience.

This has been a long time coming. Google's privacy policies have been shifting towards sharing data across services, and away from data compartmentalization for some time. It's been consistently de-anonymizing you, initially requiring real names with Plus, for example, and then tying your Plus account to your Gmail account. But this is an entirely new level of sharing. And given all of the negative feedback that it had with Google+ privacy issues, it's especially troubling that it would take actions that further erode users' privacy.

What this means for you is that data from the things you search for, the emails you send, the places you look up on Google Maps, the videos you watch in YouTube, the discussions you have on Google+ will all be collected in one place. It seems like it will particularly affect Android users, whose real-time location (if they are Latitude users), Google Wallet data and much more will be up for grabs. And if you have signed up for Google+, odds are the company even knows your real name, as it still places hurdles in front of using a pseudonym (although it no longer explicitly requires users to go by their real names).

All of that data history will now be explicitly cross-referenced. Although it refers to providing users a better experience (read: more highly tailored results), presumably it is so that Google can deliver more highly targeted ads. (There has, incidentally, never been a better time to familiarize yourself with Google's Ad Preferences.)

So why are we calling this evil? Because Google changed the rules that it defined itself. Google built its reputation, and its multi-billion dollar business, on the promise of its "don't be evil" philosophy. That's been largely interpreted as meaning that Google will always put its users first, an interpretation that Google has cultivated and encouraged. Google has built a very lucrative company on the reputation of user respect. It has made billions of dollars in that effort to get us all under its feel-good tent. And now it's pulling the stakes out, collapsing it. It gives you a few weeks to pull your data out, using its data-liberation service, but if you want to use Google services, you have to agree to these rules.

Google's philosophy speaks directly to making money without doing evil. And it is very explicit in calling out advertising in the section on "evil." But while it emphasizes that ads should be relevant, obvious, and "not flashy," what seems to have been forgotten is a respect for its users' privacy, and established practices.

Among its privacy principles, number four notes:

People have different privacy concerns and needs. To best serve the full range of our users, Google strives to offer them meaningful and fine-grained choices over the use of their personal information. We believe personal information should not be held hostage and we are committed to building products that let users export their personal information to other services. We don't sell users' personal information.

This crosses that line. It eliminates that fine-grained control, and means that things you could do in relative anonymity today will be explicitly associated with your name, your face, your phone number come March 1st. If you use Google's services, you have to agree to this new privacy policy. Yet genuine regard for users' differing privacy concerns would recognize that I might not want Google associating two pieces of personal information.

And much worse, it is an explicit reversal of its previous policies. As Google noted in 2009:

Previously, we only offered Personalized Search for signed-in users, and only when they had Web History enabled on their Google Accounts. What we're doing today is expanding Personalized Search so that we can provide it to signed-out users as well. This addition enables us to customize search results for you based upon 180 days of search activity linked to an anonymous cookie in your browser. It's completely separate from your Google Account and Web History (which are only available to signed-in users). You'll know when we customize results because a "View customizations" link will appear on the top right of the search results page. Clicking the link will let you see how we've customized your results and also let you turn off this type of customization.

The changes come shortly after Google revamped its search results to include social results it called Search plus Your World. Although that move has drawn heavy criticism from all over the Web, at least it gives users the option to not participate.

Tuesday, January 24. 2012

A tale of Apple, the iPhone, and overseas manufacturing

Via CNET

-----

Workers assemble and perform quality control checks on MacBook Pro display enclosures at an Apple supplier facility in Shanghai.

(Credit: Apple)

A new report on Apple offers up an interesting detail about the evolution of the iPhone and gives a fascinating--and unsettling--look at the practice of overseas manufacturing.

The article, an in-depth report by Charles Duhigg and Keith Bradsher of The New York Times, is based on interviews with, among others, "more than three dozen current and former Apple employees and contractors--many of whom requested anonymity to protect their jobs."

The piece uses Apple and its recent history to look at why the success of some U.S. firms hasn't led to more U.S. jobs--and to examine issues regarding the relationship between corporate America and Americans (as well as people overseas). One of the questions it asks is: Why isn't more manufacturing taking place in the U.S.? And Apple's answer--and the answer one might get from many U.S. companies--appears to be that it's simply no longer possible to compete by relying on domestic factories and the ecosystem that surrounds them.

The iPhone detail crops up relatively early in the story, in an anecdote about then-Apple CEO Steve Jobs. And it leads directly into questions about offshore labor practices:

In 2007, a little over a month before the iPhone was scheduled to appear in stores, Mr. Jobs beckoned a handful of lieutenants into an office. For weeks, he had been carrying a prototype of the device in his pocket.

Mr. Jobs angrily held up his iPhone, angling it so everyone could see the dozens of tiny scratches marring its plastic screen, according to someone who attended the meeting. He then pulled his keys from his jeans.

People will carry this phone in their pocket, he said. People also carry their keys in their pocket. "I won't sell a product that gets scratched," he said tensely. The only solution was using unscratchable glass instead. "I want a glass screen, and I want it perfect in six weeks."

A tall order. And another anecdote suggests that Jobs' staff went overseas to fill it--along with other requirements for the top-secret phone project (code-named, the Times says, "Purple 2"):

One former executive described how the company relied upon a Chinese factory to revamp iPhone manufacturing just weeks before the device was due on shelves. Apple had redesigned the iPhone's screen at the last minute, forcing an assembly line overhaul. New screens began arriving at the plant near midnight.

A foreman immediately roused 8,000 workers inside the company's dormitories, according to the executive. Each employee was given a biscuit and a cup of tea, guided to a workstation and within half an hour started a 12-hour shift fitting glass screens into beveled frames. Within 96 hours, the plant was producing more than 10,000 iPhones a day.

"The speed and flexibility is breathtaking," the executive said. "There's no American plant that can match that."

That last quote there, like several others in the story, leaves one feeling almost impressed by the no-holds-barred capabilities of these manufacturing plants--impressed and queasy at the same time. Here's another quote, from Jennifer Rigoni, Apple's worldwide supply demand manager until 2010: "They could hire 3,000 people overnight," she says, speaking of Foxconn City, Foxconn Technology's complex of factories in China. "What U.S. plant can find 3,000 people overnight and convince them to live in dorms?"

The article says that cheap and willing labor was indeed a factor in Apple's decision, in the early 2000s, to follow most other electronics companies in moving manufacturing overseas. But, it says, supply chain management, production speed, and flexibility were bigger incentives.

"The entire supply chain is in China now," the article quotes a former high-ranking Apple executive as saying. "You need a thousand rubber gaskets? That's the factory next door. You need a million screws? That factory is a block away. You need that screw made a little bit different? It will take three hours."

It also makes the point that other factors come into play. Apple analysts, the Times piece reports, had estimated that in the U.S., it would take the company as long as nine months to find the 8,700 industrial engineers it would need to oversee workers assembling the iPhone. In China it wound up taking 15 days.

The article and its sources paint a vivid picture of how much easier it is for companies to get things made overseas (which is why so many U.S. firms go that route--Apple is by no means alone in this). But the underlying humanitarian issues nag at the reader.

Perhaps there's hope--at least for overseas workers--in last week's news that Apple has joined the Fair Labor Association, and that it will be providing more transparency when it comes to the making of its products.

As for manufacturing returning to the U.S.? The Times piece cites an unnamed guest at President Obama's 2011 dinner with Silicon Valley bigwigs. Obama had asked Steve Jobs what it would take to produce the iPhone in the states, why that work couldn't return. The Times' source quotes Jobs as having said, in no uncertain terms, "Those jobs aren't coming back."

Apple, by the way, would not provide a comment to the Times about the article. And Foxconn disputed the story about employees being awakened at midnight to work on the iPhone, saying strict regulations about working hours would have made such a thing impossible.

Monday, January 23. 2012

Quantum physics enables perfectly secure cloud computing

Via eurekalert

-----

Researchers have succeeded in combining the power of quantum computing with the security of quantum cryptography and have shown that perfectly secure cloud computing can be achieved using the principles of quantum mechanics. They have performed an experimental demonstration of quantum computation in which the input, the data processing, and the output remain unknown to the quantum computer. The international team of scientists will publish the results of the experiment, carried out at the Vienna Center for Quantum Science and Technology (VCQ) at the University of Vienna and the Institute for Quantum Optics and Quantum Information (IQOQI), in the forthcoming issue of Science.

Quantum computers are expected to play an important role in future information processing since they can outperform classical computers at many tasks. Considering the challenges inherent in building quantum devices, it is conceivable that future quantum computing capabilities will exist only in a few specialized facilities around the world – much like today's supercomputers. Users would then interact with those specialized facilities in order to outsource their quantum computations. The scenario follows the current trend of cloud computing: central remote servers are used to store and process data – everything is done in the "cloud." The obvious challenge is to make globalized computing safe and ensure that users' data stays private.

The latest research, to appear in Science, reveals that quantum computers can provide an answer to that challenge. "Quantum physics solves one of the key challenges in distributed computing. It can preserve data privacy when users interact with remote computing centers," says Stefanie Barz, lead author of the study. This newly established fundamental advantage of quantum computers enables the delegation of a quantum computation from a user who does not hold any quantum computational power to a quantum server, while guaranteeing that the user's data remain perfectly private. The quantum server performs calculations, but has no means to find out what it is doing – a functionality not known to be achievable in the classical world.

The scientists in the Vienna research group have demonstrated the concept of "blind quantum computing" in an experiment: they performed the first known quantum computation during which the user's data stayed perfectly encrypted. The experimental demonstration uses photons, or "light particles" to encode the data. Photonic systems are well-suited to the task because quantum computation operations can be performed on them, and they can be transmitted over long distances.

The process works in the following manner. The user prepares qubits – the fundamental units of quantum computers – in a state known only to himself and sends these qubits to the quantum computer. The quantum computer entangles the qubits according to a standard scheme. The actual computation is measurement-based: the processing of quantum information is implemented by simple measurements on qubits. The user tailors measurement instructions to the particular state of each qubit and sends them to the quantum server. Finally, the results of the computation are sent back to the user who can interpret and utilize the results of the computation. Even if the quantum computer or an eavesdropper tries to read the qubits, they gain no useful information, without knowing the initial state; they are "blind."
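The way tailored measurement instructions can keep the server "blind" can be sketched for a single qubit. Everything specific here is an assumption layered on the description above: the article gives no formulas, so the angle set (multiples of π/4), the masking rule (true angle plus secret preparation angle plus a random half turn) and all function names follow a common textbook presentation of blind quantum computing and may differ from the experiment. The entangling step and multi-qubit corrections are left out entirely, and probabilities are computed in closed form rather than by simulating photons.

```python
import math
from collections import Counter

STEP = math.pi / 4  # angles are k * pi/4 for k = 0..7 (assumed angle set)


def masked_instruction(phi_k, theta_k, r):
    """Angle index the user sends: the true angle phi, hidden by the secret
    preparation angle theta and a secret bit r (a half turn when r == 1)."""
    return (phi_k + theta_k + 4 * r) % 8


def prob_server_reports_zero(theta_k, delta_k):
    """Chance of outcome 0 when a qubit prepared at angle theta is
    measured at angle delta: cos^2((theta - delta) / 2)."""
    return math.cos((theta_k - delta_k) * STEP / 2) ** 2


def prob_user_decodes_zero(phi_k, theta_k, r):
    """Chance the user ends up with 0 after undoing the secret bit flip."""
    delta_k = masked_instruction(phi_k, theta_k, r)
    p0 = prob_server_reports_zero(theta_k, delta_k)
    return p0 if r == 0 else 1.0 - p0


def instruction_histogram(phi_k):
    """How often each instruction occurs over all secrets (theta, r)."""
    return Counter(
        masked_instruction(phi_k, theta_k, r)
        for theta_k in range(8)
        for r in (0, 1)
    )
```

Under these assumptions, `prob_user_decodes_zero` equals cos²(φ/2) for every choice of the secrets, so the user gets the statistics of the measurement actually wanted, while `instruction_histogram` is the same for every φ, so the instruction the server receives carries no information about the intended angle.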

###

The research at the Vienna Center for Quantum Science and Technology (VCQ) at the University of Vienna and at the Institute for Quantum Optics and Quantum Information (IQOQI) of the Austrian Academy of Sciences was undertaken in collaboration with the scientists who originally invented the protocol, based at the University of Edinburgh, the Institute for Quantum Computing (University of Waterloo), the Centre for Quantum Technologies (National University of Singapore), and University College Dublin.

Publication: "Demonstration of Blind Quantum Computing" Stefanie Barz, Elham Kashefi, Anne Broadbent, Joseph Fitzsimons, Anton Zeilinger, Philip Walther. DOI: 10.1126/science.1214707

Friday, January 20. 2012

Did Google ever have a plan to curb Android fragmentation?

Via ZDNet

-----

Another day, another set of Android fragmentation stories. And while there’s no doubt that there is wide fragmentation within the platform, and there’s no real solution in sight, I’m starting to wonder if Google ever had a plan to prevent the platform from becoming a fragmented mess.

How bad’s the problem? Jon Evans over on TechCrunch tells it like it is:

OS fragmentation, though, is an utter disaster. Ice Cream Sandwich is by all accounts very nice; but what good does that do app developers, when according to Google’s own stats, 30% of all Android devices are still running an OS that is 20 months old?

…

More than two-thirds of iOS users had upgraded to iOS 5 a mere three months after its release. Anyone out there think that Ice Cream Sandwich will crack the 20% mark on Google’s platform pie chart by March?

He then goes on to deliver the killer blow:

OS fragmentation is the single greatest problem Android faces, and it’s only going to get worse. Android’s massive success over the last year mean that there are now tens if not hundreds of millions of users whose handset manufacturers and carriers may or may not allow them to upgrade their OS someday; and the larger that number grows, the more loath app developers will become to turn their back on them. That unwillingness to use new features means Android apps will fall further and further behind their iOS equivalents, unless Google manages – via carrot, stick, or both – to coerce Android carriers and manufacturers to prioritize OS upgrades.

And that’s the core problem with Android. While there’s no doubt that consumers who’ve bought Android devices are being screwed out of updates that they deserve (the take-up of Android 4.0 ‘Ice Cream Sandwich’ is pretty poor so far), the biggest risk from fragmentation is that developers will ignore new Android features and instead focus on supporting older but more mainstream feature sets. After all, developers want to hit the masses, not the fringes. Also, the more platforms developers have to support, the more testing work there is.

OK, so Android is fragmented, and it’s a problem that Google doesn’t seem willing to tackle. But the more I look at the Android platform and the associated ecosystem, the more I wonder if Google ever had any plan (or for that matter intention) to control platform fragmentation.

But could Google have done anything to control fragmentation? Former Microsoftie (and now investor) Charlie Kindel thinks there’s no hope to curb fragmentation. In fact, he believes that most things will make it worse. I disagree with Kindel on this matter. He also believes that Google’s current strategy amounts to little more than wishing that everyone will upgrade. On this point we are in total agreement.

I disagree with Kindel that there’s nothing that Google can do to at least try to discourage fragmentation. I believe that one of Google’s strongest cards is Android users themselves. Look at how enthusiastic iPhone and iPad owners are about iOS updates. They’re enthusiastic because Apple tells them why they should be enthusiastic about new updates. Compare this to Google’s approach to Android customers. Google (or anyone else in the chain for that matter) doesn’t seem to be doing much to get people fired up and enthusiastic about Android. In fact, it seems to me the only message being given to Android customers is ‘buy another Android handset.’

I understand that Google isn’t Apple and can’t seem to sway the crowds in the same way, but it might start to help if the search giant seemed to care about the OS. The absence of enthusiasm makes the company seem Sphinx-like and uncaring. Why should anyone care about new Android updates when Google itself doesn’t really seem all that excited? If Google created a real demand for Android updates from the end users, this would put pressure on the handset makers and the carriers to get updates to users in a timely fashion.

Make the users care about updates, and the people standing in the way of those updates will sit up and pay attention to things.

Personal comment:

Google with Android is now in a similar place to Microsoft with Windows, and blaming Google for this disparity of OS versions would be the same as blaming Microsoft for the fact that Windows XP, Windows Vista and Windows 7 still co-exist today. One reason Android got so 'fragged' is that it has had to face a rapid evolution of hardware and new kinds of devices in a very short time, making Google's experience with its Android creature somewhat Frankenstein-like. Many distinct hardware manufacturers adopt Android and develop their own GUI layer on top of it, which makes it nearly impossible for Google to directly control the spread of new Android versions, as each manufacturer may need to update its own code before offering a new version of Android on its devices.

The direct comparison with iOS is somewhat unfair, as Apple achieves a rapid update cycle by controlling every part of the overall mechanism: regular SDK updates push developers to adopt new features and forget about old iOS versions, and new apps require end users to upgrade to the latest iOS version before they can be installed. Meanwhile, Apple also controls hardware design, production and evolution, making the propagation of new iOS versions much easier and much faster than it is for Google with Android.

Then, mobile devices (smartphones or tablets) have a short lifespan, and this was already true before Google and Apple entered this market. So whether your name is Google or Apple, not offering the very latest version of your OS on so-called 'old' or obsolete hardware is a fairly obvious decision. It is not even a 'choice' so much as a direct consequence of how fast technology is evolving nowadays.

Now, smartphone and tablet hardware capabilities will reach a 'standard' level as these become 'mature' products (all smartphones and tablets have cameras, video capabilities, editing capabilities, etc.), which may make it easier for a single Android version to spread across all devices while hardware evolution pauses. Apple's latest innovations are already more about software than real hardware (r)evolution, so Android may benefit from this to close the gap.

Thursday, January 19. 2012

The faster-than-fast Fourier transform

Via PhysOrg

-----

The Fourier transform is one of the most fundamental concepts in the information sciences. It’s a method for representing an irregular signal — such as the voltage fluctuations in the wire that connects an MP3 player to a loudspeaker — as a combination of pure frequencies. It’s universal in signal processing, but it can also be used to compress image and audio files, solve differential equations and price stock options, among other things.

The reason the Fourier transform is so prevalent is an algorithm called the fast Fourier transform (FFT), devised in the mid-1960s, which made it practical to calculate Fourier transforms on the fly. Ever since the FFT was proposed, however, people have wondered whether an even faster algorithm could be found.

At the Association for Computing Machinery’s Symposium on Discrete Algorithms (SODA) this week, a group of MIT researchers will present a new algorithm that, in a large range of practically important cases, improves on the fast Fourier transform. Under some circumstances, the improvement can be dramatic — a tenfold increase in speed. The new algorithm could be particularly useful for image compression, enabling, say, smartphones to wirelessly transmit large video files without draining their batteries or consuming their monthly bandwidth allotments.

Like the FFT, the new algorithm works on digital signals. A digital signal is just a series of numbers — discrete samples of an analog signal, such as the sound of a musical instrument. The FFT takes a digital signal containing a certain number of samples and expresses it as the weighted sum of an equivalent number of frequencies.
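To make "weighted sum of frequencies" concrete, here is a minimal sketch using only the Python standard library. It computes the transform the slow, direct way (the FFT produces the same weights, just faster), and the 16-sample test signal is hypothetical data made up for the illustration.

```python
import cmath
import math

def dft(samples):
    """Direct O(n^2) discrete Fourier transform: one weight per frequency."""
    n = len(samples)
    return [sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def inverse_dft(weights):
    """Rebuild the samples as the weighted sum of all n frequencies."""
    n = len(weights)
    return [sum(weights[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

# Hypothetical signal: two pure tones, at frequency 2 and frequency 5.
N = 16
signal = [3 * math.cos(2 * math.pi * 2 * t / N) + math.sin(2 * math.pi * 5 * t / N)
          for t in range(N)]

weights = dft(signal)
# Real-valued tones show up in mirrored pairs: k and N - k.
heavy = [k for k, w in enumerate(weights) if abs(w) > 1e-6]
round_trip_error = max(abs(a - b) for a, b in zip(signal, inverse_dft(weights)))
```

Sixteen samples go in and sixteen weights come out, but only four of them are non-negligible, which is the property the rest of the article builds on.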

“Weighted” means that some of those frequencies count more toward the total than others. Indeed, many of the frequencies may have such low weights that they can be safely disregarded. That’s why the Fourier transform is useful for compression. An eight-by-eight block of pixels can be thought of as a 64-sample signal, and thus as the sum of 64 different frequencies. But as the researchers point out in their new paper, empirical studies show that on average, 57 of those frequencies can be discarded with minimal loss of image quality.

Heavyweight division

Signals whose Fourier transforms include a relatively small number of heavily weighted frequencies are called “sparse.” The new algorithm determines the weights of a signal’s most heavily weighted frequencies; the sparser the signal, the greater the speedup the algorithm provides. Indeed, if the signal is sparse enough, the algorithm can simply sample it randomly rather than reading it in its entirety.
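The compression idea from the previous section, keeping only the heavily weighted frequencies, can be sketched as follows. This is not the new MIT algorithm: it computes every weight with a direct transform and then throws most of them away, which is exactly the work a sparse transform tries to avoid. The 64-sample "block" is synthetic and chosen to be almost exactly 7-sparse; real image blocks are only approximately sparse.

```python
import cmath
import math

def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def keep_heaviest(weights, k):
    """Zero every weight except the k with the largest magnitude."""
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:k])
    return [w if i in keep else 0 for i, w in enumerate(weights)]

# Synthetic 64-sample signal: a constant, three strong tones, one very faint tone.
N = 64
block = [10
         + 4 * math.cos(2 * math.pi * 1 * t / N)
         + 2 * math.cos(2 * math.pi * 3 * t / N)
         + math.cos(2 * math.pi * 7 * t / N)
         + 0.01 * math.cos(2 * math.pi * 20 * t / N)
         for t in range(N)]

compressed = keep_heaviest(dft(block), 7)
discarded = sum(1 for w in compressed if w == 0)
rebuilt = [v.real for v in idft(compressed)]
max_error = max(abs(a - b) for a, b in zip(block, rebuilt))
```

With 57 of the 64 weights dropped, the rebuilt signal differs from the original only by the faint tone that was discarded.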

“In nature, most of the normal signals are sparse,” says Dina Katabi, one of the developers of the new algorithm. Consider, for instance, a recording of a piece of chamber music: The composite signal consists of only a few instruments each playing only one note at a time. A recording, on the other hand, of all possible instruments each playing all possible notes at once wouldn’t be sparse — but neither would it be a signal that anyone cares about.

The new algorithm — which associate professor Katabi and professor Piotr Indyk, both of MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), developed together with their students Eric Price and Haitham Hassanieh — relies on two key ideas. The first is to divide a signal into narrower slices of bandwidth, sized so that a slice will generally contain only one frequency with a heavy weight.

In signal processing, the basic tool for isolating particular frequencies is a filter. But filters tend to have blurry boundaries: One range of frequencies will pass through the filter more or less intact; frequencies just outside that range will be somewhat attenuated; frequencies outside that range will be attenuated still more; and so on, until you reach the frequencies that are filtered out almost perfectly.

If it so happens that the one frequency with a heavy weight is at the edge of the filter, however, it could end up so attenuated that it can’t be identified. So the researchers’ first contribution was to find a computationally efficient way to combine filters so that they overlap, ensuring that no frequencies inside the target range will be unduly attenuated, but that the boundaries between slices of spectrum are still fairly sharp.

Zeroing in

Once they’ve isolated a slice of spectrum, however, the researchers still have to identify the most heavily weighted frequency in that slice. In the SODA paper, they do this by repeatedly cutting the slice of spectrum into smaller pieces and keeping only those in which most of the signal power is concentrated. But in an as-yet-unpublished paper, they describe a much more efficient technique, which borrows a signal-processing strategy from 4G cellular networks. Frequencies are generally represented as up-and-down squiggles, but they can also be thought of as oscillations; by sampling the same slice of bandwidth at different times, the researchers can determine where the dominant frequency is in its oscillatory cycle.
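The "where is it in its cycle" idea can be illustrated with a textbook phase trick. The sketch below is an assumption about the general principle, not the technique from the unpublished paper: it takes a slice containing a single, noiseless complex tone, looks at two samples one time step apart, and reads the frequency off the amount the phase has advanced. The tone's frequency, amplitude and starting phase are hypothetical values.

```python
import cmath
import math

def estimate_frequency(sample_now, sample_next, n):
    """Frequency index of a lone tone, from its phase advance over one time step."""
    phase_step = cmath.phase(sample_next / sample_now)  # radians, in (-pi, pi]
    return round(phase_step * n / (2 * math.pi)) % n

def tone(t, freq, n, amplitude=2.5, start_phase=0.7):
    """One complex oscillation; amplitude and start phase cancel out in the ratio."""
    return amplitude * cmath.exp(1j * (2 * math.pi * freq * t / n + start_phase))

N = 1024
low = estimate_frequency(tone(0, 389, N), tone(1, 389, N), N)
high = estimate_frequency(tone(0, 900, N), tone(1, 900, N), N)
```

Two samples are enough here because the slice is assumed to hold exactly one heavy frequency; noise or a second strong tone in the same slice would break the estimate.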

Two University of Michigan researchers — Anna Gilbert, a professor of mathematics, and Martin Strauss, an associate professor of mathematics and of electrical engineering and computer science — had previously proposed an algorithm that improved on the FFT for very sparse signals. “Some of the previous work, including my own with Anna Gilbert and so on, would improve upon the fast Fourier transform algorithm, but only if the sparsity k” — the number of heavily weighted frequencies — “was considerably smaller than the input size n,” Strauss says. The MIT researchers’ algorithm, however, “greatly expands the number of circumstances where one can beat the traditional FFT,” Strauss says. “Even if that number k is starting to get close to n — to all of them being important — this algorithm still gives some improvement over FFT.”

Wednesday, January 18. 2012

SOPA is a Red Herring

-----

As usual, the bought and paid for self-fulfilling tech press is missing the elephant in the room.

The blogosphere discussion surrounds a self-imposed 'blackout' of "key" websites and services that we apparently can't live without, scheduled for this Wednesday. All in protest of proposed legislation in the House and Senate.

I submit this is a big fat red herring.

Actually there are 3 pieces of legislation: the Stop Online Piracy Act (SOPA), the Protect Intellectual Property Act (PIPA) and the Online Protection and Enforcement of Digital Trade Act (OPEN), which is currently in draft form, initially proposed by Darrell Issa (R), who will be holding a hearing on Wednesday regarding the strong opposition to DNS 'tampering' as a punitive measure against foreign registered websites infringing on intellectual property and trademarks of US companies within the borders of the United States.

I have read all three pieces of legislation (it's a hobby) and can confidently say that they are pretty much identical in scope. The key difference is that only SOPA proposes the DNS 'tampering', which would allow US officials to remove an infringing website's DNS records from the root servers if it is deemed to be operating in defiance of Intellectual Property and Trademark law, effectively rendering it unfindable when you type in the corresponding domain name.

The boundaries of what is legal and what is not are not actually contained in any of the bills, as they all refer mainly to the Lanham Act. All of it tried and true legislation. Nothing new there.

All three bills further provide language that will allow justice to forbid US based financial transaction providers, search engines and advertising companies from doing business with a 'website' that is found to be guilty of infringement.

Of the three proposals, OPEN appears most fair to all parties in any dispute, by requiring a complainant to post a bond when requesting an investigation of infringement in order to combat frivolous use of the provisions available.

The outrage over SOPA's DNS provisions is justified, but misdirected. Congress is already backpedaling on including it in any final legislation, and even the Administration's own response to the "We the People Petitions on SOPA" included a firm stance against measures that would affect the DNS infrastructure:

We must avoid creating new cybersecurity risks or disrupting the underlying architecture of the Internet. Proposed laws must not tamper with the technical architecture of the Internet through manipulation of the Domain Name System (DNS), a foundation of Internet security. Our analysis of the DNS filtering provisions in some proposed legislation suggests that they pose a real risk to cybersecurity and yet leave contraband goods and services accessible online. We must avoid legislation that drives users to dangerous, unreliable DNS servers and puts next-generation security policies, such as the deployment of DNSSEC, at risk.

Without the DNS clause, it would appear perfectly logical that the government pursue action against websites that attempt to cash in on fake products and stolen intellectual property of its people.

The entire reason for even trying to get a DNS provision into law is because it is nearly impossible to track down the owner of a website, or domain name, through today's registration tools.

A whois lookup on a domain name merely provides whatever information is given at time of registration, and there is no verification of the registrant.
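For readers who have never run one, a whois lookup is a very simple exchange: open a TCP connection to port 43, send the domain name followed by a line break, and read back free-form text. The sketch below uses only Python's standard library; `whois.iana.org` is used as a default starting server, and the helper names and the "key: value" parsing are illustrative assumptions, since response layouts differ from registry to registry.

```python
import socket

def build_query(domain):
    """A WHOIS request is just the domain name followed by CRLF."""
    return domain.strip().encode("ascii") + b"\r\n"

def parse_fields(response_text):
    """Collect 'key: value' lines. The values are whatever was typed in at registration."""
    fields = {}
    for line in response_text.splitlines():
        if line.lstrip().startswith(("%", "#")):  # skip comment lines
            continue
        key, sep, value = line.partition(":")
        if sep and value.strip():
            fields.setdefault(key.strip().lower(), []).append(value.strip())
    return fields

def whois(domain, server="whois.iana.org", port=43, timeout=10):
    """Send one query and return the server's raw text reply (needs network access)."""
    with socket.create_connection((server, port), timeout=timeout) as conn:
        conn.sendall(build_query(domain))
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

Calling `parse_fields(whois("example.com"))` would return whatever fields the server chooses to publish; nothing in the exchange checks that the registrant details are true.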

So, here's what the press has missed:

During all the shouting about SOPA and proposed blackouts to 'protest', ICANN, the organization that actually runs the DNS root servers, the backbone of the web, has been quite busy in plain view changing the game in favor of the government.

It's been highly underreported that ICANN is now accepting submissions for new gTLDs, or 'generic top level domains'.

Without getting into all the details of what that means, other than possibly hundreds if not thousands of new domains like .shop .dork .shill and .drone that you will be able to register vanity domain names under, ICANN has come up with a new requirement upon registration:

You must verify who you are when you register a new domain name, even an international one.

So, if I pay GoDaddy or any other outfit my $9 for curry.blog and have it point to my server at blog.curry.com, I will have to prove my identity upon registration. Presumably with some form of government approved ID.

This way, when OPEN or perhaps a non-DNS version of SOPA is passed, if you break the rules, you will be hunted down, regardless of where you live or operate, since this also includes international domain names.

The Administration likes this approach as well. Just read the language from the International Strategy For Cyberspace document [pdf]:

In this future, individuals and businesses can quickly and easily obtain the tools necessary to set up their own presence online; domain names and addresses are available, secure, and properly maintained, without onerous licenses or unreasonable disclosures of personal information.

The mention of "onerous licenses" and "unreasonable disclosures of personal information" clearly indicates you will have to provide verification of your identity, which in today's world is not a requirement.

"Hey Citizen, if you have nothing to hide, what are you worried about?" Just follow the rules and all will be fine. I don't think I need to explain the implications of this massive change in internet domain name policy for your privacy.

The term for this new type of registration is Thick Whois and you'll be hearing about it eventually, when the so called 'tech press' stops their circle jerking around the latest facebook/google/twitter cat fights and actually starts reporting on things that matter.

Until then, feel free to make your google+ facebook and twitter icons all black, as your faux protest is futile. The real change, that of your privacy online, is being made in plain sight by former Director of the National Cyber Security Center of the Department of Homeland Security Rod Beckstrom, current CEO of ICANN. Shill anyone?

This topic was originally discussed on the No Agenda Podcast of January 15th 2012.

Disclaimers: I am not a lawyer, nor am I a journalist. I am not distracted by shiny gadgets.

[related post: SOPA: Follow the Money]

The rise and fall of personal computing

Via asymco

-----

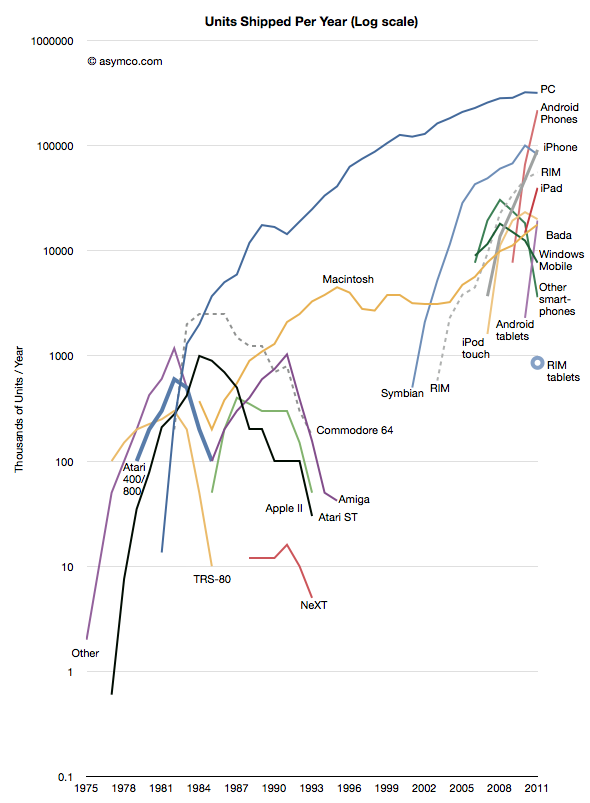

Thanks to Jeremy Reimer I was able to create the following view into the history of computer platforms.

I added data from the smartphone industry, Apple and updated the PC industry figures with those from Gartner. Note the log scale.

The same information is available as an animation in the following video (Music by Nora Tagle):

This data combines several “categories” of products and is not complete in that not all mobile phone platforms are represented. However, zooming out offers several possible observations into the state of the “personal computing” world as of today.

- We cannot consider the iPad as a “niche”. The absolute volume of units sold after less than two years is enough to place it within an order of magnitude of all PCs sold. We can also observe that it has a higher trajectory than the iPhone which became a disruptive force in itself. Compare these challengers to NeXT in 1991.

- The “entrants” into personal computing, the iPad, iPhone and Android, have a combined volume that is higher than the PCs sold in the same period (358 million estimated iOS+Android vs. 336 million PCs excluding Macs in 2011.) The growth rate and the scale itself combine to make the entrants impossible to ignore.

- There is a distinct grouping of platform options into three phases or eras. The first, lasting from 1975 to 1991, was an era of rapid growth but also of multiple standards and experiments. It was typical of an industry in emergence. The personalization of computing brought about a new set of entrants. The second phase lasted between 1991 and 2007 and was characterized by a near monopoly of Microsoft, but, crucially, one alternative platform did survive. The third phase can be seen as starting five years ago with the emergence of the iPhone and its derivatives. It has similarities to the first phase.

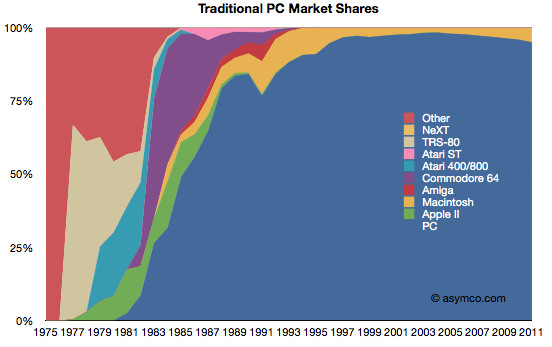

We can also look at the data through a slightly different view: market share. Share is a bit more subjective because we need to combine products in ways that are considered comparable (or competing).

First, this is a “traditionalist” view of the PC market as defined by Gartner and IDC, and excluding tablets and smartphones.

This view would imply that the PC market is not changing in any substantial way. Although the Mac platform is gaining share, there is no significant erosion in the power of the incumbent.

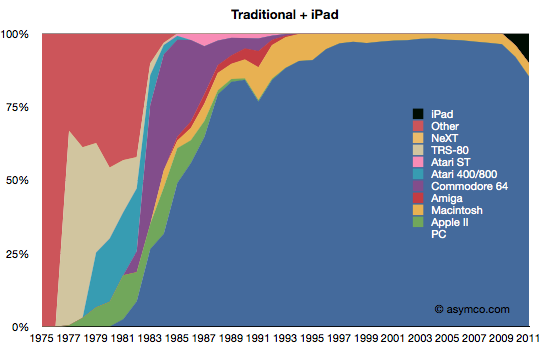

Second is a view where the iPad is added to the traditionalist view.

This view is more alarming. Given the first chart, in order for the iPad to be significant, it would need to be “visible” for a market that already ships over 350 million units. And there it is. If counted, the iPad begins to show the first disruption in the status quo since 1991.

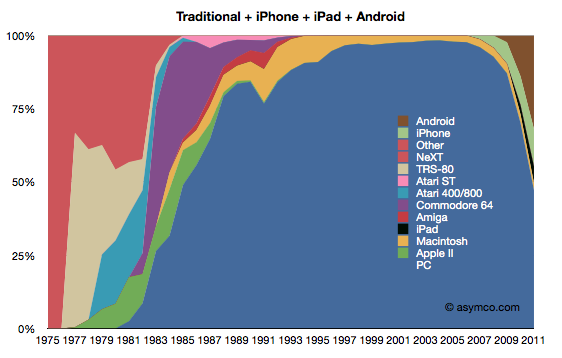

The third view is with the addition of iPhone and Android.

This last view corresponds to the data in the first graph (line chart). If iOS and Android are added as potential substitutions for personal computing, the share of PCs suddenly collapses to less than 50%. It also suggests much more collapse to come.
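As a rough check of the "less than 50%" figure, the share can be recomputed from the two 2011 estimates quoted in the list of observations earlier in the post. This is only a back-of-the-envelope sketch: it uses those two numbers alone, so Macs and any other platforms shown in the chart are left out and the chart's exact value will differ.

```python
# 2011 unit estimates quoted earlier in the post, in millions.
ENTRANTS = 358  # iOS + Android (estimated)
PCS = 336       # PCs excluding Macs

def share(part, total):
    """Share of the total, as a percentage."""
    return 100.0 * part / total

total = ENTRANTS + PCS
pc_share = share(PCS, total)
entrant_share = share(ENTRANTS, total)

print(f"PCs: {pc_share:.1f}%  iOS+Android: {entrant_share:.1f}%")
```

On these two figures alone, PCs come out at roughly 48% of the combined volume, consistent with the claim that their share drops below half once phones and tablets are counted.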

I will concede that this last view is extremist. It does not reflect a competition that exists in real life. However, I put this data together to show a historic pattern. Sometimes extremism is a better point of view than conservatism. Ignoring this view is very harmful as these not-good-enough computers will surely get better. A competitor that has no strategy to deal with this shift is likely to suffer the fate of those companies on the left side of the chart. Treating the first share chart as reality is surely much more dangerous than contemplating the third.

I’ve used anecdotes in the past to tell the story of the disruptive shift in the fortunes of computing incumbents and entrants. I’ve also shown how the entry of smart devices has disrupted the telecom world and caused a transfer of wealth away from the old guard.

The data shown here frames these anecdotes. The data is not the whole story but it solidifies what should be an intuition.
