Saturday, November 29. 2014

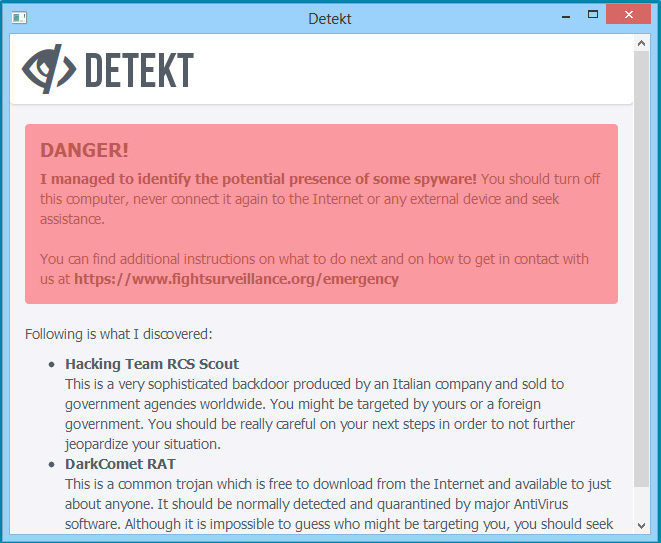

Human rights organizations launch free tool to detect surveillance software

Via Mashable

-----

More and more, governments are using powerful spying software to target human rights activists and journalists, often the forgotten victims of cyberwar. Now, these victims have a new tool to protect themselves.

Called Detekt, it scans a person's computer for traces of surveillance software, or spyware. A coalition of human rights organizations, including Amnesty International and the Electronic Frontier Foundation launched Detekt on Wednesday, with the goal of equipping activists and journalists with a free tool to discover if they've been hacked.

"Our ultimate aim is for human rights defenders, journalists and civil society groups to be able to carry out their legitimate work without fear of surveillance, harassment, intimidation, arrest or torture," Amnesty wroteThursday in a statement.

The open-source tool was developed by security researcher Claudio Guarnieri, a security researcher who has been investigating government abuse of spyware for years. He often collaborates with other researchers at University of Toronto's Citizen Lab.

During their investigations, Guarnieri and his colleagues discovered, for example, that the Bahraini government used software created by German company FinFisher to spy on human rights activists. They also found out that the Ethiopian government spied on journalists in the U.S. and Europe, using software developed by Hacking Team, another company that sells off-the-shelf surveillance tools.

--------------------------

Guarnieri developed Detekt from software he and the other researchers used during those investigations.

--------------------------

"I decided to release it to the public because keeping it private made no sense," he told Mashable. "It's better to give more people as possible the chance to test and identify the problem as quickly as possible, rather than keeping this knowledge private and let it rot."

Detekt only works with Windows, and it's designed to discover malware developed both by commercial firms, as well as popular spyware used by cybercriminals, such as BlackShades RAT (Remote Access Tool) and Gh0st RAT.

The tool has some limitations, though: It's only a scanner, and doesn't remove the malware infection, which is why Detekt's official site warns that if there are traces of malware on your computer, you should stop using it "immediately," and and look for help. It also might not detect newer versions of the spyware developed by FinFisher, Hacking Team and similar companies.

"If Detekt does not find anything, this unfortunately cannot be considered a clean bill of health," the software's "readme" file warns.

For some, given these limitations, Detekt won't help much.

"The tool appears to be a simple signature-based black list that does not promise it knows all the bad files, and admits that it can be fooled," John Prisco, president and CEO of security firm Triumfant, said. "Given that, it seems worthless to me, but that’s probably why it can be offered for free."

Joanna Rutkowska, a researcher who develops the security-minded operating system Qubes, said computers with traditional operating systems are inherently insecure, and that tools like Detekt can't help with that.

"Releasing yet another malware scanner does nothing to address the primary problem," she told Mashable. "Yet, it might create a false sense of security for users."

But Guarnieri disagrees, saying that Detekt is not a silver-bullet solution intended to be used in place of commercial anti-virus software or other security tools.

"Telling activists and journalists to spend 50 euros a year for some antivirus license in emergency situations isn't very helpful," he said, adding that Detekt is not "just a tool," but also an initiative to spark discussion around the government use of intrusive spyware, which is extremely unregulated.

For Mikko Hypponen, a renowned security expert and chief research officer for anti-virus vendor F-Secure, Detekt is a good project because its target audience — activists and journalists — don't often have access to expensive commercial tools.

“Since Detekt only focuses on detecting a handful of spy tools — but detecting them very well — it might actually outperform traditional antivirus products in this particular area,” he told Mashable.

NSA partners with Apache to release open-source data traffic program

Via zdnet

-----

Many of you probably think that the National Security Agency (NSA) and open-source software get along like a house on fire. That's to say, flaming destruction. You would be wrong.

In partnership with the Apache Software Foundation, the NSA announced on Tuesday that it is releasing the source code for Niagarafiles (Nifi). The spy agency said that Nifi "automates data flows among multiple computer networks, even when data formats and protocols differ".

Details on how Nifi does this are scant at this point, while the ASF continues to set up the site where Nifi's code will reside.

In a statement, Nifi's lead developer Joseph L Witt said the software "provides a way to prioritize data flows more effectively and get rid of artificial delays in identifying and transmitting critical information".

The NSA is making this move because, according to the director of the NSA's Technology Transfer Program (TPP) Linda L Burger, the agency's research projects "often have broad, commercial applications".

"We use open-source releases to move technology from the lab to the marketplace, making state-of-the-art technology more widely available and aiming to accelerate U.S. economic growth," she added.

The NSA has long worked hand-in-glove with open-source projects. Indeed, Security-Enhanced Linux (SELinux), which is used for top-level security in all enterprise Linux distributions — Red Hat Enterprise Linux, SUSE Linux Enterprise Server, and Debian Linux included — began as an NSA project.

More recently, the NSA created Accumulo, a NoSQL database store that's now supervised by the ASF.

More NSA technologies are expected to be open sourced soon. After all, as the NSA pointed out: "Global reviews and critiques that stem from open-source releases can broaden a technology's applications for the US private sector and for the good of the nation at large."

Friday, November 21. 2014

Code Watch: Can we code conscious programs?

Via SD Times

-----

A few months ago, I pondered the growing gap between the way we solve problems in our day-to-day programming and the way problems are being solved by new techniques involving Big Data and statistical machine-learning techniques. The ImageNet Large Scale Visual Recognition Challenge has seen a leap in performance with the widespread adoption of convolutional neural nets, to the point where “It is clear that humans will soon only be able to outperform state-of-the-art image classification models by use of significant effort, expertise, and time,” according to Andrej Karpathy when discussing his own results of the test.

Is it reasonable to think or suggest that artificial neural nets are, in any way, conscious?

Integrated Information Theory (IIT), developed by the neuroscientist and psychiatrist Giulio Tononi and expanded by Adam Barrett, suggests that it is not absurd. According to this theory, the spark of consciousness is the integration of information in a system with feedback loops. (Roughly: the theory has complexities relating to systems and subsystems. And—spoiler alert—Tononi has recently cast doubt on the ability of current computer systems to create the necessary “maximally irreducible” integrated system from which consciousness springs.)

It cannot be overstated how slippery consciousness is and how misleading our intuitive sense of the world is. We all know that our eyes are constantly darting from one thing to another, have a blind spot the size of the full moon, and transmit largely black-and-white data except for the 55 degrees or so in the center of our field of vision. And yet our vision seems stable, continuous, and fully imbued with qualities of color and shape. I remember my wonder when, as a child, I realized that I saw cartoons the same way whether I was sitting up or, more likely, lying down on the coach. How could that be?

Some people deny that subjective experience is a problem at all. Daniel Dennett is the clearest advocate of this view. His book “Consciousness Explained” hammers on the slipperiness of “qualia” (the “redness” of 650 nanometer electromagnetic waves, the “warmth” of 40 degrees Celsius). Dennett’s arguments are extremely compelling, but have never sufficed to convince me that there is not what David Chalmers labeled “the Hard Problem”—the problem of experience.

IIT is, at the very least, a bold attempt at a framework for consciousness that is quantitative and verifiable. A surprising (but welcome) aspect is that it allows that almost trivially simple machines could have a minimal consciousness. Such machines would not have the same qualia as us—their internal stories would not be the same as our stories, writ in a smaller font. Rather, a minimally conscious machine, whether attached to a photodiode or a thermistor, would experience a qualia of one bit of information: the difference between “is” and “not is.” I think of this as the experience of “!” (a “bang” of experience, if you will).

Such an experience is as far removed from human consciousness as the spark made from banging two rocks together is from the roiling photosphere of the sun. Your cerebral cortex has 20 billion or so deeply interconnected neurons, and on top of the inherent experiences enabled by that we’ve layered the profoundly complicating matter of language.

Tononi’s book “Phi: A Voyage from the Brain to the Soul” presents his theory in chapters featuring metaphorical dialogues between Galileo and various scientists and philosophers. In this way, it echoes portions of Douglas Hofstadter’s classic, “Gödel, Escher, Bach,” which also has positive feedback and recursion as essential ingredients of consciousness and intelligence (a theme elaborated by Hofstadter in his equally important, but less popular, “I Am a Strange Loop”).

Another approachable book on IIT, and which I actually prefer to Tononi’s, is Christof Koch’s “Consciousness: Confessions of a Romantic Reductionist.”

“Phi” is Tononi’s proposed measure for integrated information, but he does not define it rigorously in his book. His paper “From the Phenomenology to the Mechanisms of Consciousness: Integrated Information Theory 3.0? is much more substantial. His “Consciousness: Here, There but Not Everywhere” paper is in between. It is in this paper that he explicitly casts doubt on a simulation as likely to have the necessary “real causal power” of a neuron to create a “maximally irreducible” integrated system, “For the simple reason that the brain is real, but a simulation of brain is virtual.” It’s not clear to me why there would be a difference; a simulated phi seems to have simply shifted abstraction layers, not the arguments for or against its phenomenal reality.

If IIT is true, consciousness has causal power (that is, it affects the future calculations of its substrate, whether that be a brain or a machine), and our everyday programming models are fundamentally incapable of bootstrapping consciousness.

More tentatively, it may be that today’s machine architectures are incapable. If so, the recent leaps in machine learning and artificial intelligence may not continue for much longer. Perhaps another AI Winter is coming.

A few months ago, I pondered the growing gap between the way we solve problems in our day-to-day programming and the way problems are being solved by new techniques involving Big Data and statistical machine-learning techniques. The ImageNet Large Scale Visual Recognition Challenge has seen a leap in performance with the widespread adoption of convolutional neural nets, to the point where “It is clear that humans will soon only be able to outperform state-of-the-art image classification models by use of significant effort, expertise, and time,” according to Andrej Karpathy when discussing his own results of the test.

Is it reasonable to think or suggest that artificial neural nets are, in any way, conscious?

Integrated Information Theory (IIT), developed by the neuroscientist and psychiatrist Giulio Tononi and expanded by Adam Barrett, suggests that it is not absurd. According to this theory, the spark of consciousness is the integration of information in a system with feedback loops. (Roughly: the theory has complexities relating to systems and subsystems. And—spoiler alert—Tononi has recently cast doubt on the ability of current computer systems to create the necessary “maximally irreducible” integrated system from which consciousness springs.)

It cannot be overstated how slippery consciousness is and how misleading our intuitive sense of the world is. We all know that our eyes are constantly darting from one thing to another, have a blind spot the size of the full moon, and transmit largely black-and-white data except for the 55 degrees or so in the center of our field of vision. And yet our vision seems stable, continuous, and fully imbued with qualities of color and shape. I remember my wonder when, as a child, I realized that I saw cartoons the same way whether I was sitting up or, more likely, lying down on the coach. How could that be?

Some people deny that subjective experience is a problem at all. Daniel Dennett is the clearest advocate of this view. His book “Consciousness Explained” hammers on the slipperiness of “qualia” (the “redness” of 650 nanometer electromagnetic waves, the “warmth” of 40 degrees Celsius). Dennett’s arguments are extremely compelling, but have never sufficed to convince me that there is not what David Chalmers labeled “the Hard Problem”—the problem of experience.

IIT is, at the very least, a bold attempt at a framework for consciousness that is quantitative and verifiable. A surprising (but welcome) aspect is that it allows that almost trivially simple machines could have a minimal consciousness. Such machines would not have the same qualia as us—their internal stories would not be the same as our stories, writ in a smaller font. Rather, a minimally conscious machine, whether attached to a photodiode or a thermistor, would experience a qualia of one bit of information: the difference between “is” and “not is.” I think of this as the experience of “!” (a “bang” of experience, if you will).

Such an experience is as far removed from human consciousness as the spark made from banging two rocks together is from the roiling photosphere of the sun. Your cerebral cortex has 20 billion or so deeply interconnected neurons, and on top of the inherent experiences enabled by that we’ve layered the profoundly complicating matter of language.

Tononi’s book “Phi: A Voyage from the Brain to the Soul” presents his theory in chapters featuring metaphorical dialogues between Galileo and various scientists and philosophers. In this way, it echoes portions of Douglas Hofstadter’s classic, “Gödel, Escher, Bach,” which also has positive feedback and recursion as essential ingredients of consciousness and intelligence (a theme elaborated by Hofstadter in his equally important, but less popular, “I Am a Strange Loop”).

- See more at: http://sdtimes.com/code-watch-can-code-conscious-programs/#sthash.5DyMnDPN.dpuf

A few months ago, I pondered the growing gap between the way we solve problems in our day-to-day programming and the way problems are being solved by new techniques involving Big Data and statistical machine-learning techniques. The ImageNet Large Scale Visual Recognition Challenge has seen a leap in performance with the widespread adoption of convolutional neural nets, to the point where “It is clear that humans will soon only be able to outperform state-of-the-art image classification models by use of significant effort, expertise, and time,” according to Andrej Karpathy when discussing his own results of the test.

Is it reasonable to think or suggest that artificial neural nets are, in any way, conscious?

Integrated Information Theory (IIT), developed by the neuroscientist and psychiatrist Giulio Tononi and expanded by Adam Barrett, suggests that it is not absurd. According to this theory, the spark of consciousness is the integration of information in a system with feedback loops. (Roughly: the theory has complexities relating to systems and subsystems. And—spoiler alert—Tononi has recently cast doubt on the ability of current computer systems to create the necessary “maximally irreducible” integrated system from which consciousness springs.)

It cannot be overstated how slippery consciousness is and how misleading our intuitive sense of the world is. We all know that our eyes are constantly darting from one thing to another, have a blind spot the size of the full moon, and transmit largely black-and-white data except for the 55 degrees or so in the center of our field of vision. And yet our vision seems stable, continuous, and fully imbued with qualities of color and shape. I remember my wonder when, as a child, I realized that I saw cartoons the same way whether I was sitting up or, more likely, lying down on the coach. How could that be?

Some people deny that subjective experience is a problem at all. Daniel Dennett is the clearest advocate of this view. His book “Consciousness Explained” hammers on the slipperiness of “qualia” (the “redness” of 650 nanometer electromagnetic waves, the “warmth” of 40 degrees Celsius). Dennett’s arguments are extremely compelling, but have never sufficed to convince me that there is not what David Chalmers labeled “the Hard Problem”—the problem of experience.

IIT is, at the very least, a bold attempt at a framework for consciousness that is quantitative and verifiable. A surprising (but welcome) aspect is that it allows that almost trivially simple machines could have a minimal consciousness. Such machines would not have the same qualia as us—their internal stories would not be the same as our stories, writ in a smaller font. Rather, a minimally conscious machine, whether attached to a photodiode or a thermistor, would experience a qualia of one bit of information: the difference between “is” and “not is.” I think of this as the experience of “!” (a “bang” of experience, if you will).

Such an experience is as far removed from human consciousness as the spark made from banging two rocks together is from the roiling photosphere of the sun. Your cerebral cortex has 20 billion or so deeply interconnected neurons, and on top of the inherent experiences enabled by that we’ve layered the profoundly complicating matter of language.

Tononi’s book “Phi: A Voyage from the Brain to the Soul” presents his theory in chapters featuring metaphorical dialogues between Galileo and various scientists and philosophers. In this way, it echoes portions of Douglas Hofstadter’s classic, “Gödel, Escher, Bach,” which also has positive feedback and recursion as essential ingredients of consciousness and intelligence (a theme elaborated by Hofstadter in his equally important, but less popular, “I Am a Strange Loop”).

- See more at: http://sdtimes.com/code-watch-can-code-conscious-programs/#sthash.5DyMnDPN.dpuf

A few months ago, I pondered the growing gap between the way we solve problems in our day-to-day programming and the way problems are being solved by new techniques involving Big Data and statistical machine-learning techniques. The ImageNet Large Scale Visual Recognition Challenge has seen a leap in performance with the widespread adoption of convolutional neural nets, to the point where “It is clear that humans will soon only be able to outperform state-of-the-art image classification models by use of significant effort, expertise, and time,” according to Andrej Karpathy when discussing his own results of the test.

Is it reasonable to think or suggest that artificial neural nets are, in any way, conscious?

Integrated Information Theory (IIT), developed by the neuroscientist and psychiatrist Giulio Tononi and expanded by Adam Barrett, suggests that it is not absurd. According to this theory, the spark of consciousness is the integration of information in a system with feedback loops. (Roughly: the theory has complexities relating to systems and subsystems. And—spoiler alert—Tononi has recently cast doubt on the ability of current computer systems to create the necessary “maximally irreducible” integrated system from which consciousness springs.)

It cannot be overstated how slippery consciousness is and how misleading our intuitive sense of the world is. We all know that our eyes are constantly darting from one thing to another, have a blind spot the size of the full moon, and transmit largely black-and-white data except for the 55 degrees or so in the center of our field of vision. And yet our vision seems stable, continuous, and fully imbued with qualities of color and shape. I remember my wonder when, as a child, I realized that I saw cartoons the same way whether I was sitting up or, more likely, lying down on the coach. How could that be?

Some people deny that subjective experience is a problem at all. Daniel Dennett is the clearest advocate of this view. His book “Consciousness Explained” hammers on the slipperiness of “qualia” (the “redness” of 650 nanometer electromagnetic waves, the “warmth” of 40 degrees Celsius). Dennett’s arguments are extremely compelling, but have never sufficed to convince me that there is not what David Chalmers labeled “the Hard Problem”—the problem of experience.

IIT is, at the very least, a bold attempt at a framework for consciousness that is quantitative and verifiable. A surprising (but welcome) aspect is that it allows that almost trivially simple machines could have a minimal consciousness. Such machines would not have the same qualia as us—their internal stories would not be the same as our stories, writ in a smaller font. Rather, a minimally conscious machine, whether attached to a photodiode or a thermistor, would experience a qualia of one bit of information: the difference between “is” and “not is.” I think of this as the experience of “!” (a “bang” of experience, if you will).

Such an experience is as far removed from human consciousness as the spark made from banging two rocks together is from the roiling photosphere of the sun. Your cerebral cortex has 20 billion or so deeply interconnected neurons, and on top of the inherent experiences enabled by that we’ve layered the profoundly complicating matter of language.

Tononi’s book “Phi: A Voyage from the Brain to the Soul” presents his theory in chapters featuring metaphorical dialogues between Galileo and various scientists and philosophers. In this way, it echoes portions of Douglas Hofstadter’s classic, “Gödel, Escher, Bach,” which also has positive feedback and recursion as essential ingredients of consciousness and intelligence (a theme elaborated by Hofstadter in his equally important, but less popular, “I Am a Strange Loop”).

- See more at: http://sdtimes.com/code-watch-can-code-conscious-programs/#sthash.5DyMnDPN.dpuf

A few months ago, I pondered the growing gap between the way we solve problems in our day-to-day programming and the way problems are being solved by new techniques involving Big Data and statistical machine-learning techniques. The ImageNet Large Scale Visual Recognition Challenge has seen a leap in performance with the widespread adoption of convolutional neural nets, to the point where “It is clear that humans will soon only be able to outperform state-of-the-art image classification models by use of significant effort, expertise, and time,” according to Andrej Karpathy when discussing his own results of the test.

Is it reasonable to think or suggest that artificial neural nets are, in any way, conscious?

Integrated Information Theory (IIT), developed by the neuroscientist and psychiatrist Giulio Tononi and expanded by Adam Barrett, suggests that it is not absurd. According to this theory, the spark of consciousness is the integration of information in a system with feedback loops. (Roughly: the theory has complexities relating to systems and subsystems. And—spoiler alert—Tononi has recently cast doubt on the ability of current computer systems to create the necessary “maximally irreducible” integrated system from which consciousness springs.)

It cannot be overstated how slippery consciousness is and how misleading our intuitive sense of the world is. We all know that our eyes are constantly darting from one thing to another, have a blind spot the size of the full moon, and transmit largely black-and-white data except for the 55 degrees or so in the center of our field of vision. And yet our vision seems stable, continuous, and fully imbued with qualities of color and shape. I remember my wonder when, as a child, I realized that I saw cartoons the same way whether I was sitting up or, more likely, lying down on the coach. How could that be?

Some people deny that subjective experience is a problem at all. Daniel Dennett is the clearest advocate of this view. His book “Consciousness Explained” hammers on the slipperiness of “qualia” (the “redness” of 650 nanometer electromagnetic waves, the “warmth” of 40 degrees Celsius). Dennett’s arguments are extremely compelling, but have never sufficed to convince me that there is not what David Chalmers labeled “the Hard Problem”—the problem of experience.

IIT is, at the very least, a bold attempt at a framework for consciousness that is quantitative and verifiable. A surprising (but welcome) aspect is that it allows that almost trivially simple machines could have a minimal consciousness. Such machines would not have the same qualia as us—their internal stories would not be the same as our stories, writ in a smaller font. Rather, a minimally conscious machine, whether attached to a photodiode or a thermistor, would experience a qualia of one bit of information: the difference between “is” and “not is.” I think of this as the experience of “!” (a “bang” of experience, if you will).

Such an experience is as far removed from human consciousness as the spark made from banging two rocks together is from the roiling photosphere of the sun. Your cerebral cortex has 20 billion or so deeply interconnected neurons, and on top of the inherent experiences enabled by that we’ve layered the profoundly complicating matter of language.

Tononi’s book “Phi: A Voyage from the Brain to the Soul” presents his theory in chapters featuring metaphorical dialogues between Galileo and various scientists and philosophers. In this way, it echoes portions of Douglas Hofstadter’s classic, “Gödel, Escher, Bach,” which also has positive feedback and recursion as essential ingredients of consciousness and intelligence (a theme elaborated by Hofstadter in his equally important, but less popular, “I Am a Strange Loop”).

- See more at: http://sdtimes.com/code-watch-can-code-conscious-programs/#sthash.5DyMnDPN.dpufThursday, November 20. 2014

MAME Arcade Game Emulator online

![[screenshot]](https://archive.org/serve/arcade_mpatrol/mpatrol_screenshot.gif)

Mame (standing for Multiple Arcade Machine Emulator) is an emulator able to load and run classic arcade games on your computer. In the late 90s, one was able to download arcade game's board numerical images (like a backup of the game program, called 'Rom files') from a set of websites. Arcade game lovers were trying to find as much as possible old game's boards in order to extract ROM programs from the physical board and make them available within the MAME emulator (which drives the emulator to support more and more games, by adding more and more emulated environments, as running a game within MAME is like switching on the original game, you have to go through ROM and hardware check like if your were in front of the original game hardware). One of these well none web sites was mame.dk.

While, for years, no one seems to care about those forgotten games brought back to life for free, classic arcade game's owners start to re-distribute their forgotten games on console like XBox or PS (re-discovering that one may be able to make money with those old games), and start to shutdown MAME based web sites, suing them for illegal distribution of games (obvious violation of copyright laws when freely distributing game's ROM files). Nevertheless this copyright infringement issue, MAME community has performed an impressive work of archive, saving thousand of vintage games that would have disappeared otherwise.

So it is quite a good news that many of those old games seem to be available again through this javascript version of the MAME emulator, being able to run and play those cute old games from within a simple web browser.

-----

Welcome to Internet Arcade

The Internet Arcade is a web-based library of arcade (coin-operated) video games from the 1970s through to the 1990s, emulated in JSMAME, part of the JSMESS software package. Containing hundreds of games ranging through many different genres and styles, the Arcade provides research, comparison, and entertainment in the realm of the Video Game Arcade.

The game collection ranges from early "bronze-age" videogames, with black and white screens and simple sounds, through to large-scale games containing digitized voices, images and music. Most games are playable in s ome form, although some are useful more for verification of behavior or programming due to the intensity and requirements of their systems.

Many games have a "boot-up" sequence when first turned on, where the systems run through a check and analysis, making sure all systems are go. In some cases, odd controllers make proper playing of the systems on a keyboard or joypad a pale imitation of the original experience. Please report any issues to the Internet Arcade Operator, Jason Scott.

If you are encountering issues with control, sound, or other technical problems, read this entry of some common solutions.

Also, Armchair Arcade (a video game review site) has written an excellent guide to playing on the Internet Arcade as well.

Wednesday, November 19. 2014

Brain decoder can eavesdrop on your inner voice

Via New Scientist

-----

As you read this, your neurons are firing – that brain activity can now be decoded to reveal the silent words in your head

TALKING to yourself used to be a strictly private pastime. That's no longer the case – researchers have eavesdropped on our internal monologue for the first time. The achievement is a step towards helping people who cannot physically speak communicate with the outside world.

"If you're reading text in a newspaper or a book, you hear a voice in your own head," says Brian Pasley at the University of California, Berkeley. "We're trying to decode the brain activity related to that voice to create a medical prosthesis that can allow someone who is paralysed or locked in to speak."

When you hear someone speak, sound waves activate sensory neurons in your inner ear. These neurons pass information to areas of the brain where different aspects of the sound are extracted and interpreted as words.

In a previous study, Pasley and his

colleagues recorded brain activity in people who already had electrodes

implanted in their brain to treat epilepsy, while they listened to

speech. The team found that certain neurons in the brain's temporal lobe

were only active in response to certain aspects of sound, such as a

specific frequency. One set of neurons might only react to sound waves

that had a frequency of 1000 hertz, for example, while another set only

cares about those at 2000 hertz. Armed with this knowledge, the team

built an algorithm that could decode the words heard based on neural activity alone (PLoS Biology, doi.org/fzv269).

(PLoS Biology, doi.org/fzv269).

The team hypothesised that hearing speech and thinking to oneself might spark some of the same neural signatures in the brain. They supposed that an algorithm trained to identify speech heard out loud might also be able to identify words that are thought.

Mind-reading

To test the idea, they recorded brain activity in another seven people undergoing epilepsy surgery, while they looked at a screen that displayed text from either the Gettysburg Address, John F. Kennedy's inaugural address or the nursery rhyme Humpty Dumpty.

Each participant was asked to read the text aloud, read it silently in their head and then do nothing. While they read the text out loud, the team worked out which neurons were reacting to what aspects of speech and generated a personalised decoder to interpret this information. The decoder was used to create a spectrogram – a visual representation of the different frequencies of sound waves heard over time. As each frequency correlates to specific sounds in each word spoken, the spectrogram can be used to recreate what had been said. They then applied the decoder to the brain activity that occurred while the participants read the passages silently to themselves (see diagram).

Despite the neural activity from imagined or actual speech differing slightly, the decoder was able to reconstruct which words several of the volunteers were thinking, using neural activity alone (Frontiers in Neuroengineering, doi.org/whb).

The algorithm isn't perfect, says Stephanie Martin, who worked on the study with Pasley. "We got significant results but it's not good enough yet to build a device."

In practice, if the decoder is to be used by people who are unable to speak it would have to be trained on what they hear rather than their own speech. "We don't think it would be an issue to train the decoder on heard speech because they share overlapping brain areas," says Martin.

The team is now fine-tuning their algorithms, by looking at the neural activity associated with speaking rate and different pronunciations of the same word, for example. "The bar is very high," says Pasley. "Its preliminary data, and we're still working on making it better."

The team have also turned their hand to predicting what songs a person is listening to by playing lots of Pink Floyd to volunteers, and then working out which neurons respond to what aspects of the music. "Sound is sound," says Pasley. "It all helps us understand different aspects of how the brain processes it."

"Ultimately, if we understand covert speech well enough, we'll be able to create a medical prosthesis that could help someone who is paralysed, or locked in and can't speak," he says.

Several other researchers are also investigating ways to read the human mind. Some can tell what pictures a person is looking at, others have worked out what neural activity represents certain concepts in the brain, and one team has even produced crude reproductions of movie clips that someone is watching just by analysing their brain activity. So is it possible to put it all together to create one multisensory mind-reading device?

In theory, yes, says Martin, but it would be extraordinarily complicated. She says you would need a huge amount of data for each thing you are trying to predict. "It would be really interesting to look into. It would allow us to predict what people are doing or thinking," she says. "But we need individual decoders that work really well before combining different senses."

Monday, November 10. 2014

Google is working on “copresence,” which might let Chrome, Android and iOS devices communicate directly

Via GIGAOM

-----

Google is working on new APIs to support something called “copresence,” but what it might be isn’t yet clear. A tip from the blog TechAeris suggests a new cross-platform messaging system between Android and iOS, which is certainly plausible. I did a little digging in the Chromium bug tracker and found 18 mentions of copresence there, along with a pair of related APIs available on the Chrome dev channel.

Here are all of the bug listings, all of which were at least updated this month, meaning that Google has been pretty active of late on copresence. Scanning though these I saw one mention of GCM, which is the Google Cloud Messaging service that’s already available to Android app makers. Developers can deliver files or data from a server to their respective Android app through GCM.

But the TechAeris report suggests copresence will do more than what GCM does today. Based on a source that scanned the latest Google Play Services build, numerous references to copresence were found along with images alluding to peer-to-peer file transfers, similar to how Apple’s AirDrop works between iOS and OS X. One of the images in the software appears to be song sharing between an iPhone and an Android device.

Copresence could even be location-based, where the appropriate app could tell you when other friends are nearby and in range for transfers or direct communications. Obviously, without an official Google announcement we don’t know what this project is about. All of the evidence so far suggests, however, that a new cross-platform communication method is in the works.

Update: In a review of the Google Play Services software last month, Android Police noticed the copresence mentions that may correspond to a Google service called Nearby.

Friday, November 07. 2014

Predicting the next decade of tech: From the cloud to disappearing computers and the rise of robots

Via ZDNet

-----

For an industry run according to logic and rationality, at least outwardly, the tech world seems to have a surprising weakness for hype and the 'next big thing'.

Perhaps that's because, unlike — say — in sales or HR, where innovation is defined by new management strategies, tech investment is very product driven. Buying a new piece of hardware or software often carries the potential for a 'disruptive' breakthrough in productivity or some other essential business metric. Tech suppliers therefore have a vested interest in promoting their products as vigorously as possible: the level of spending on marketing and customer acquisition by some fast-growing tech companies would turn many consumer brands green with envy.

As a result, CIOs are tempted by an ever-changing array of tech buzzwords (cloud, wearables and the Internet of Things [IoT] are prominent in the recent crop) through which they must sift in order to find the concepts that are a good fit for their organisations, and that match their budgets, timescales and appetite for risk. Short-term decisions are relatively straightforward, but the further you look ahead, the harder it becomes to predict the winners.

Tech innovation in a one-to-three year timeframe

Despite all the temptations, the technologies that CIOs are looking at deploying in the near future are relatively uncontroversial — pretty safe bets, in fact. According to TechRepublic's own research, top CIO investment priorities over the next three years include security, mobile, big data and cloud. Fashionable technologies like 3D printing and wearables find themselves at the bottom of the list.

A separate survey from Deloitte reported similar findings: many of the technologies that CIOs are piloting and planning to implement in the near future are ones that have been around for quite some time — business analytics, mobile apps, social media and big data tools, for example. Augmented reality and gamification were seen as low-priority technologies.

This reflects the priorities of most CIOs, who tend to focus on reliability over disruption: in TechRepublic's research, 'protecting/securing networks and data' trumps 'changing business requirements' for understandably risk-wary tech chiefs.

Another major factor here is money: few CIOs have a big budget for bets on blue-skies innovation projects, even if they wanted to. (And many no doubt remember the excesses of the dotcom years, and are keen to avoid making that mistake again.)

According to the research by Deloitte, less than 10 percent of the tech budget is ring-fenced for technology innovation (and CIOs that do spend more on innovation tend to be in smaller, less conservative, companies). There's another complication in that CIOs increasingly don't control the budget dedicated to innovation, as this is handed onto other business units (such as marketing or digital) that are considered to have a more entrepreneurial outlook.

CIOs tend to blame their boss's conservative attitude to risk as the biggest constraint in making riskier IT investments for innovation and growth. Although CIOs claim to be willing to take risks with IT investments, this attitude does not appear to match up with their current project portfolios.

Another part of the problem is that it's very hard to measure the return on some of these technologies. Managers have been used to measuring the benefits of new technologies using a standard return-on-investment measure that tracks some very obvious costs — headcount or spending on new hardware, for example. But defining the return on a social media project or an IoT trial is much more slippery.

Tech investment: A medium-term view

If CIO investment plans remain conservative and hobbled by a limited budget in the short term, you have to look a little further out to see where the next big thing in tech might come from.

One place to look is in what's probably the best-known set of predictions about the future of IT: Gartner's Hype Cycle for Emerging Technologies, which tries to assess the potential of new technologies while taking into account the expectations surrounding them.

The chart grades technologies not only by how far they are from mainstream adoption, but also on the level of hype surrounding them, and as such it demonstrates what the analysts argue is a fundamental truth: that we can't help getting excited about new technology, but that we also rapidly get turned off when we realize how hard it can be to deploy successfully. The exotically-named Peak of Inflated Expectations is commonly followed by the Trough of Disillusionment, before technologies finally make it up the Slope of Enlightenment to the Plateau of Productivity.

"It was a pattern we were seeing with pretty much all technologies — that up-and-down of expectations, disillusionment and eventual productivity," says Jackie Fenn, vice-president and Gartner fellow, who has been working on the project since the first hype cycle was published 20 years ago, which she says is an example of the human reaction to any novelty.

"It's not really about the technologies themselves, it's about how we respond to anything new. You see it with management trends, you see it with projects. I've had people tell me it applies to their personal lives — that pattern of the initial wave of enthusiasm, then the realisation that this is much harder than we thought, and then eventually coming to terms with what it takes to make something work."

According to Gartner's 2014 list, the technologies expected to reach the Plateau of Productivity, (where they become widely adopted) within the next two years include speech recognition and in-memory analytics.

Technologies that might take two to five years until mainstream adoption include 3D scanners, NFC and cloud computing. Cloud is currently entering Gartner's trough of disillusionment, where early enthusiasm is overtaken by the grim reality of making this stuff work: "there are many signs of fatigue, rampant cloudwashing and disillusionment (for example, highly visible failures)," Gartner notes.

When you look at a 5-10-year horizon, the predictions include virtual reality, cryptocurrencies and wearable user interfaces.

Working out when the technologies will make the grade, and thus how CIOs should time their investments, seems to be the biggest challenge. Several of the technologies on Gartner's first-ever hype curve back in 1995 — including speech recognition and virtual reality — are still on the 2014 hype curve without making it to primetime yet.

These sorts of user interface technologies have taken a long time to mature, says Fenn. For example, voice recognition started to appear in very structured call centre applications, while the latest incarnation is something like Siri — "but it's still not a completely mainstream interface," she says.

Nearly all technologies go through the same rollercoaster ride, because our response to new concepts remains the same, says Fenn. "It's an innate psychological reaction — we get excited when there's something new. Partly it's the wiring of our brains that attracts us — we want to keep going around the first part of the cycle where new technologies are interesting and engaging; the second half tends to be the hard work, so it's easier to get distracted."

But even if they can't escape the hype cycle, CIOs can use concepts like this to manage their own impulses: if a company's investment strategy means it's consistently adopting new technologies when they are most hyped (remember a few years back when every CEO had to blog?) then it may be time to reassess, even if the CIO peer-pressure makes it difficult.

Says Fenn: "There is that pressure, that if you're not doing it you just don't get it — and it's a very real pressure. Look at where [new technology] adds value and if it really doesn't, then sometimes it's fine to be a later adopter and let others learn the hard lessons if it's something that's really not critical to you."

The trick, she says, is not to force-fit innovation, but to continually experiment and not always expect to be right.

Looking further out, the technologies labelled 'more than 10 years' to mainstream adoption on Gartner's hype cycle are the rather sci-fi-inflected ones: holographic displays, quantum computing and human augmentation. As such, it's a surprisingly entertaining romp through the relatively near future of technology, from the rather mundane to the completely exotic. "Employers will need to weigh the value of human augmentation against the growing capabilities of robot workers, particularly as robots may involve fewer ethical and legal minefields than augmentation," notes Gartner.

Where the futurists roam

Beyond the 10-year horizon, you're very much into the realm where the tech futurists roam.

Steve Brown, a futurist at chip-maker Intel argues that three mega-trends will shape the future of computing over the next decade. "They are really simple — it's small, big and natural," he says.

'Small' is the consequence of Moore's Law, which will continue the trend towards small, low-power devices, making the rise of wearables and the IoT more likely. 'Big' refers to the ongoing growth in raw computing power, while 'natural' is the process by which everyday objects are imbued with some level of computing power.

"Computing was a destination: you had to go somewhere to compute — a room that had a giant whirring computer in it that you worshipped, and you were lucky to get in there. Then you had the era where you could carry computing with you," says Brown.

"The next era is where the computing just blends into the world around us, and once you can do that, and instrument the world, you can essentially make everything smart — you can turn anything into a computer. Once you do that, profoundly interesting things happen," argues Brown.

With this level of computing power comes a new set of problems for executives, says Brown. The challenge for CIOs and enterprise architects is that once they can make everything smart, what do they want to use it for? "In the future you have all these big philosophical questions that you have to answer before you make a deployment," he says.

Brown envisages a world of ubiquitous processing power, where robots are able to see and understand the world around them.

"Autonomous machines are going to change everything," he claims. "The challenge for enterprise is how humans will work alongside machines — whether that's a physical machine or an algorithm — and what's the best way to take a task and split it into the innately human piece and the bit that can be optimized in some way by being automated."

The pace of technological development is accelerating: where we used to have a decade to make these decisions, these things are going to hit us faster and faster, argues Brown. All of which means we need to make better decisions about how to use new technology — and will face harder questions about privacy and security.

"If we use this technology, will it make us better humans? Which means we all have to decide ahead of time what do we consider to be better humans? At the enterprise level, what do we stand for? How do we want to do business?".

Not just about the hardware and software

For many organizations there's a big stumbling block in the way of this bright future — their own staff and their ways of working. Figuring out what to invest in may be a lot easier than persuading staff, and whole organisations, to change how they operate.

"What we really need to figure out is the relationship between humans and technology, because right now humans get technology massively wrong," says Dave Coplin, chief envisioning officer for Microsoft (a firmly tongue-in-cheek job title, he assures me).

Coplin argues that most of us tend to use new technology to do things the way we've always been doing them for years, when the point of new technology is to enable us to do things fundamentally differently. The concept of productivity is a classic example: "We've got to pick apart what productivity means. Unfortunately most people think process is productivity — the better I can do the processes, the more productive I am. That leads us to focus on the wrong point, because actually productivity is about leading to better outcomes." Three-quarters of workers think a productive day in the office is clearing their inbox, he notes.

Developing a better relationship with technology is necessary because of the huge changes ahead, argues Coplin: "What happens when technology starts to disappear into the background; what happens when every surface has the capability to have contextual information displayed on it based on what's happening around it, and who is looking at it? This is the kind of world we're heading into — a world of predictive data that will throw up all sorts of ethical issues. If we don't get the humans ready for that change we'll never be able to make the most of it."

Nicola Millard, a futurologist at telecoms giant BT, echoes these ideas, arguing that CIOs have to consider not just changes to the technology ahead of them, but also changes to the workers: a longer working life requires workplace technologies that appeal to new recruits as well as staff into their 70s and older. It also means rethinking the workplace: "The open-plan office is a distraction machine," she says — but can you be innovative in a grey cubicle? Workers using tablets might prefer 'perch points' to desks, those using gesture control may need more space. Even the role of the manager itself may change — becoming less about traditional command and control, and more about being a 'party host', finding the right mix of skills to get the job done.

In the longer term, not only will the technology change profoundly, but the workers and managers themselves will also need to upgrade their thinking.

Thursday, November 06. 2014

Google is working on “copresence,” which might let Chrome, Android and iOS devices communicate directly

Via GIGAOM

-----

Google is working on new APIs to support something called “copresence,” but what it might be isn’t yet clear. A tip from the blog TechAeris suggests a new cross-platform messaging system between Android and iOS, which is certainly plausible. I did a little digging in the Chromium bug tracker and found 18 mentions of copresence there, along with a pair of related APIs available on the Chrome dev channel.

Here are all of the bug listings, all of which were at least updated this month, meaning that Google has been pretty active of late on copresence. Scanning though these I saw one mention of GCM, which is the Google Cloud Messaging service that’s already available to Android app makers. Developers can deliver files or data from a server to their respective Android app through GCM.

But the TechAeris report suggests copresence will do more than what GCM does today. Based on a source that scanned the latest Google Play Services build, numerous references to copresence were found along with images alluding to peer-to-peer file transfers, similar to how Apple’s AirDrop works between iOS and OS X. One of the images in the software appears to be song sharing between an iPhone and an Android device.

Copresence could even be location-based, where the appropriate app could tell you when other friends are nearby and in range for transfers or direct communications. Obviously, without an official Google announcement we don’t know what this project is about. All of the evidence so far suggests, however, that a new cross-platform communication method is in the works.

Update: In a review of the Google Play Services software last month, Android Police noticed the copresence mentions that may correspond to a Google service called Nearby.

9 cutting-edge programming languages worth learning now

Via IT World

-----

The big languages are popular for a reason: They offer a huge foundation of open source code, libraries, and frameworks that make finishing the job easier. This is the result of years of momentum in which they are chosen time and again for new projects, and expertise in their nuances grow worthwhile and plentiful.

Sometimes the vast resources of the popular, mainstream programming languages aren’t enough to solve your particular problem. Sometimes you have to look beyond the obvious to find the right language, where the right structure makes the difference while offering that extra feature to help your code run significantly faster without endless tweaking and optimizing. This language produces vastly more stable and accurate code because it prevents you from programming sloppy or wrong code.

The world is filled with thousands of clever languages that aren't C#, Java, or JavaScript. Some are treasured by only a few, but many have flourishing communities connected by a common love for the language's facility in solving certain problems. There may not be tens of millions of programmers who know the syntax, but sometimes there is value in doing things a little different, as experimenting with any new language can pay significant dividends on future projects.

The following nine languages should be on every programmer’s radar. They may not be the best for every job -- many are aimed at specialized tasks. But they all offer upsides that are worth investigating and investing in. There may be a day when one of these languages proves to be exactly what your project -- or boss -- needs.

Erlang: Functional programming for real-time systems

Erlang began deep inside the spooky realms of telephone switches at Ericsson, the Swedish telco. When Ericsson programmers began bragging about its "nine 9s" performance, by delivering 99.9999999 percent of the data with Erlang, the developers outside Ericsson started taking notice.

Erlang’s secret is the functional paradigm. Most of the code is forced to operate in its own little world where it can't corrupt the rest of the system through side effects. The functions do all their work internally, running in little "processes" that act like sandboxes and only talk to each other through mail messages. You can't merely grab a pointer and make a quick change to the state anywhere in the stack. You have to stay inside the call hierarchy. It may require a bit more thought, but mistakes are less likely to propagate.

The model also makes it simpler for runtime code to determine what can run at the same time. With concurrency so easy to detect, the runtime scheduler can take advantage of the very low overhead in setting up and ripping down a process. Erlang fans like to brag about running 20 million "processes" at the same time on a Web server.

If you're building a real-time system with no room for dropped data, such as a billing system for a mobile phone switch, then check out Erlang.

Go: Simple and dynamic

Google wasn’t the first organization to survey the collection of languages, only to find them cluttered, complex, and often slow. In 2009, the company released its solution: a statically typed language that looks like C but includes background intelligence to save programmers from having to specify types and juggle malloc calls. With Go, programmers can have the terseness and structure of compiled C, along with the ease of using a dynamic script language.

While Sun and Apple followed a similar path in creating Java and Swift, respectively, Google made one significantly different decision with Go: The language’s creators wanted to keep Go "simple enough to hold in one programmer's head." Rob Pike, one of Go’s creators, famously told Ars Technica that "sometimes you can get more in the long run by taking things away." Thus, there are few zippy extras like generics, type inheritance, or assertions, only clean, simple blocks of if-then-else code manipulating strings, arrays, and hash tables.

The language is reportedly well-established inside of Google's vast empire and is gaining acceptance in other places where dynamic-language lovers of Python and Ruby can be coaxed into accepting some of the rigor that comes from a compiled language.

If you're a startup trying to catch Google's eye and need to build some server-side business logic, Go is a great place to start.

Groovy: Scripting goodness for Java

The Java world is surprisingly flexible. Say what you will about its belts-and-suspenders approach, like specifying the type for every variable, ending every line with a semicolon, and writing access methods for classes that simply return the value. But it looked at the dynamic languages gaining traction and built its own version that's tightly integrated with Java.

Groovy offers programmers the ability to toss aside all the humdrum conventions of brackets and semicolons, to write simpler programs that can leverage all that existing Java code. Everything runs on the JVM. Not only that, everything links tightly to Java JARs, so you can enjoy your existing code. The Groovy code runs like a dynamically typed scripting language with full access to the data in statically typed Java objects.

Groovy programmers think they have the best of both worlds. There's all of the immense power of the Java code base with all of the fun of using closures, operator overloading, and polymorphic iteration -- not to mention the simplicity of using the question mark to indicate a check for null pointers. It's so much simpler than typing another if-then statement to test nullity. Naturally, all of this flexibility tends to create as much logic with a tiny fraction of the keystrokes. Who can't love that?

Finally, all of the Java programmers who've envied the simplicity of dynamic languages can join the party without leaving the realm of Java.

OCaml: Complex data hierarchy juggler

Some programmers don't want to specify the types of their variables, and for them we've built the dynamic languages. Others enjoy the certainty of specifying whether a variable holds an integer, string, or maybe an object. For them, many of the compiled languages offer all the support they want.

Then there are those who dream of elaborate type hierarchies and even speak of creating "algebras" of types. They imagine lists and tables of heterogeneous types that are brought together to express complex, multileveled data extravaganzas. They speak of polymorphism, pattern-matching primitives, and data encapsulation. This is just the beginning of the complex, highly structured world of types, metatypes, and metametatypes they desire.

For them, there is OCaml, a serious effort by the programming language community to popularize many of the aforementioned ideas. There's object support, automatic memory management, and device portability. There are even OCaml apps available from Apple’s App Store.

An ideal project for OCaml might be building a symbolic math website to teach algebra.

CoffeeScript: JavaScript made clean and simple

Technically, CoffeeScript isn't a language. It's a preprocessor that converts what you write into JavaScript. But it looks different because it's missing plenty of the punctuation. You might think it is Ruby or Python, though the guts behave like JavaScript.

CoffeeScript began when semicolon haters were forced to program in JavaScript because that was what Web browsers spoke. Changing the way the Web works would have been an insurmountable task, so they wrote their own preprocessor instead. The result? Programmers can write cleaner code and let CoffeeScript turn it back into the punctuation-heavy JavaScript Web browsers demand.

Missing semicolons are only the beginning. With CoffeeScript, you can create a variable without typing var. You can define a function without typing function or wrapping it in curly brackets. In fact, curly brackets are pretty much nonexistent in CoffeeScript. The code is so much more concise that it looks like a modernist building compared to a Gothic cathedral. This is why many of the newest JavaScript frameworks are often written in CoffeeScript and compiled.

Scala: Functional programming on the JVM

If you need the code simplicity of object-oriented hierarchies for your project but love the functional paradigm, you have several choices. If Java is your realm, Scala is the choice for you.

Scala runs on the JVM, bringing all the clean design strictures of functional programming to the Java world by delivering code that fits with the Java class specifications and links with other JAR files. If those other JAR files have side effects and other imperative nasty headaches, so be it. Your code will be clean.

The type mechanism is strongly static and the compiler does all the work to infer types. There's no distinction between primitive types and object types because Scala wants everything to descend from one ur-object call Any. The syntax is much simpler and cleaner than Java; Scala folks call it "low ceremony." You can leave your paragraph-long CamelCase variable names back in Java Land.

Scala offers many of the features expected of functional languages, such as lazy evaluation, tail recursion, and immutable variables, but have been modified to work with the JVM. The basic metatypes or collection variables, like linked lists or hash tables, can be either mutable or immutable. Tail recursion works with simpler examples, but not with elaborate, mutually recursive examples. The ideas are all there, even if the implementation may be limited by the JVM. Then again, it also comes with all the ubiquity of the Java platform and the deep collection of existing Java code written by the open source community. That's not a bad trade-off for many practical problems.

If you must juggle the data in a thousand-processor cluster and have a pile of legacy Java code, Scala is a great solution.

Dart: JavaScript without the JavaScript

Being popular is not all it's cracked up to be. JavaScript may be used in more stacks than ever, but familiarity leads to contempt -- and contempt leads to people looking for replacements. Dart is a new programming language for Web browsers from Google.

Dart isn't much of a departure from the basic idea of JavaScript. It works in the background to animate all the DIVs and Web form objects that we see. The designers simply wanted to clean up the nastier, annoying parts of JavaScript while making it simpler. They couldn't depart too far from the underlying architecture because they wanted to compile Dart down to JavaScript to help speed adoption.

The highlight may be the extra functions that fold in many de facto JavaScript libraries. You don't need JQuery or any of the other common libraries to modify some part of the HTML page. It's all there with a reasonably clean syntax. Also, more sophisticated data types and syntactic shorthand tricks will save a few keystrokes. Google is pushing hard by offering open source development tools for all of the major platforms.

If you are building a dynamic Web app and are tired of JavaScript, Dart offers a clean syntax for creating multiple dancing DIVs filled with data from various Web sources.

Haskell: Functional programming, pure and simple

For more than 20 years, the academics working on functional programming have been actively developing Haskell, a language designed to encapsulate their ideas about the evils of side effects. It is one of the purer expressions of the functional programming ideal, with a careful mechanism for handling I/O channels and other unavoidable side effects. The rest of the code, though, should be perfectly functional.

The community is very active, with more than a dozen variants of Haskell waiting for you to explore. Some are stand-alone, and others are integrated with more mainstream efforts like Java (Jaskell, Frege) or Python (Scotch). Most of the names seem to be references to Scotland, a hotbed of Haskell research, or philosopher/logicians who form the intellectual provenance for many of the ideas expressed in Haskell. If you believe that your data structures will be complex and full of many types, Haskell will help you keep them straight.

Julia: Bringing speed to Python land

The world of scientific programming is filled with Python lovers who enjoy the simple syntax and the freedom to avoid thinking of gnarly details like pointers and bytes. For all its strengths, however, Python is often maddeningly slow, which can be a problem if you're crunching large data sets as is common in the world of scientific computing. To speed up matters, many scientists turn to writing the most important routines at the core in C, which is much faster. But that saddles them with software written in two languages and is thus much harder to revise, fix, or extend.

Julia is a solution to this complexity. Its creators took the clean syntax adored by Python programmers and tweaked it so that the code can be compiled in the background. That way, you can set up a notebook or an interactive session like with Python, but any code you create will be compiled immediately.

The guts of Julia are fascinating. They provide a powerful type inference engine that can help ensure faster code. If you enjoy metaprogramming, the language is flexible enough to be extended. The most valuable additions, however, may be Julia’s simple mechanisms for distributing parallel algorithms across a cluster. A number of serious libraries already tackle many of the most common numerical algorithms for data analysis.

The best news, though, may be the high speeds. Many basic benchmarks run 30 times faster than Python and often run a bit faster than C code. If you have too much data but enjoy Python’s syntax, Julia is the next language to learn.

Wednesday, November 05. 2014

Researchers bridge air gap by turning monitors into FM radios

Via ars technica

-----

A two-stage attack could allow spies to sneak secrets out of the most sensitive buildings, even when the targeted computer system is not connected to any network, researchers from Ben-Gurion University of the Negev in Israel stated in an academic paper describing the refinement of an existing attack.

The technique, called AirHopper, assumes that an attacker has already compromised the targeted system and desires to occasionally sneak out sensitive or classified data. Known as exfiltration, such occasional communication is difficult to maintain, because government technologists frequently separate the most sensitive systems from the public Internet for security. Known as an air gap, such a defensive measure makes it much more difficult for attackers to compromise systems or communicate with infected systems.

Yet, by using a program to create a radio signal using a computer’s video card—a technique known for more than a decade—and a smartphone capable of receiving FM signals, an attacker could collect data from air-gapped devices, a group of four researchers wrote in a paper presented last week at the IEEE 9th International Conference on Malicious and Unwanted Software (MALCON).

“Such technique can be used potentially by people and organizations with malicious intentions and we want to start a discussion on how to mitigate this newly presented risk,” Dudu Mimran, chief technology officer for the cyber security labs at Ben-Gurion University, said in a statement.

For the most part, the attack is a refinement of existing techniques. Intelligence agencies have long known—since at least 1985—that electromagnetic signals could be intercepted from computer monitors to reconstitute the information being displayed. Open-source projects have turned monitors into radio-frequency transmitters. And, from the information leaked by former contractor Edward Snowden, the National Security Agency appears to use radio-frequency devices implanted in various computer-system components to transmit information and exfiltrate data.

AirHopper uses off-the-shelf components, however, to achieve the same result. By using a smartphone with an FM receiver, the exfiltration technique can grab data from nearby systems and send it to a waiting attacker once the smartphone is again connected to a public network.

“This is the first time that a mobile phone is considered in an attack model as the intended receiver of maliciously crafted radio signals emitted from the screen of the isolated computer,” the group said in its statement on the research.

The technique works at a distance of 1 to 7 meters, but can only send data at very slow rates—less than 60 bytes per second, according to the researchers.

Quicksearch

Popular Entries

- The great Ars Android interface shootout (131044)

- Norton cyber crime study offers striking revenue loss statistics (101656)

- MeCam $49 flying camera concept follows you around, streams video to your phone (100056)

- Norton cyber crime study offers striking revenue loss statistics (57885)

- The PC inside your phone: A guide to the system-on-a-chip (57445)