Via TechCrunch

-----

Researchers at MIT’s Computer Science and Artificial Intelligence Lab

(CSAIL) have created an algorithm they claim can predict how memorable

or forgettable an image is almost as accurately as a human — which is to

say that their tech can predict how likely a person would be to

remember or forget a particular photo.

The algorithm performed 30 per cent better than existing algorithms

and was within a few percentage points of the average human performance,

according to the researchers.

The team has put a demo of their tool online,

where you can upload your selfie to get a memorability score and view a

heat map showing areas the algorithm considers more or less memorable.

They have also published a paper on the research.

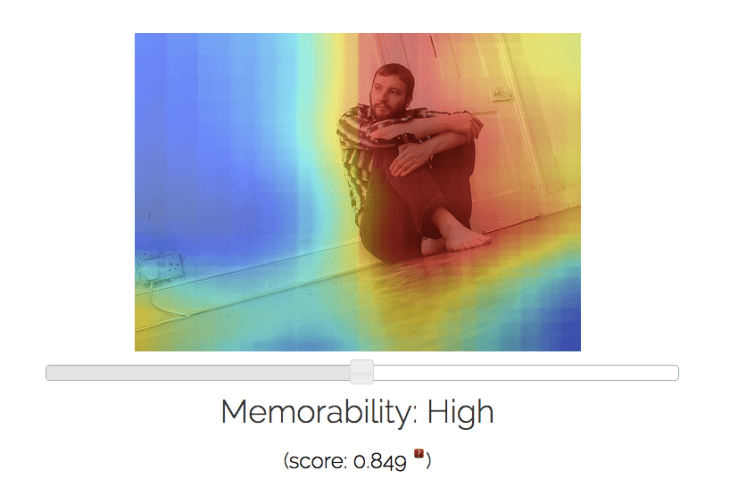

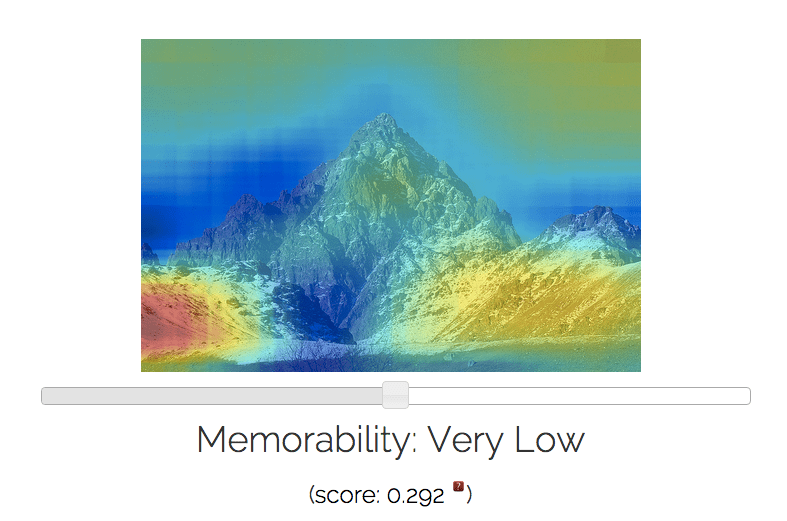

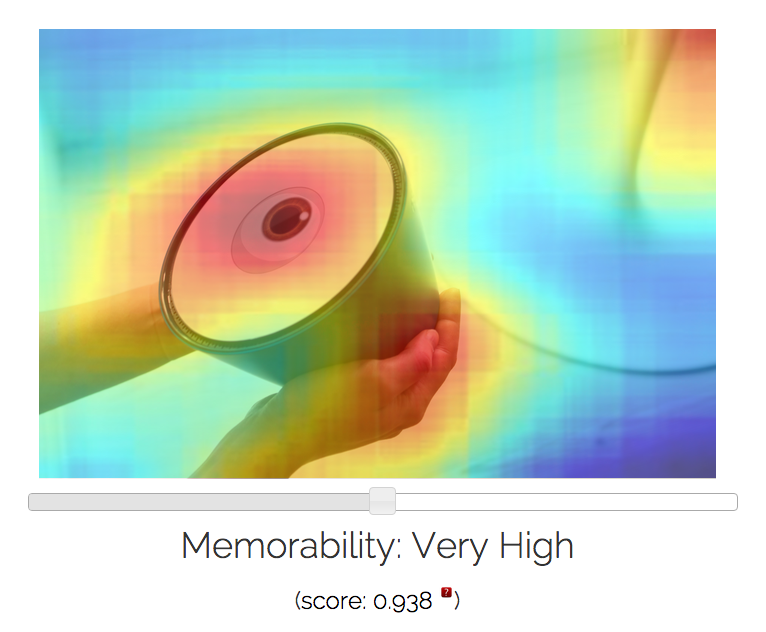

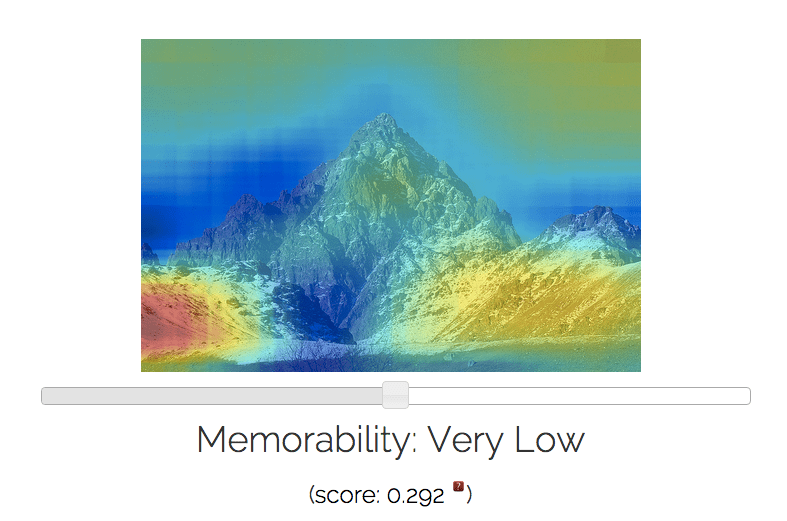

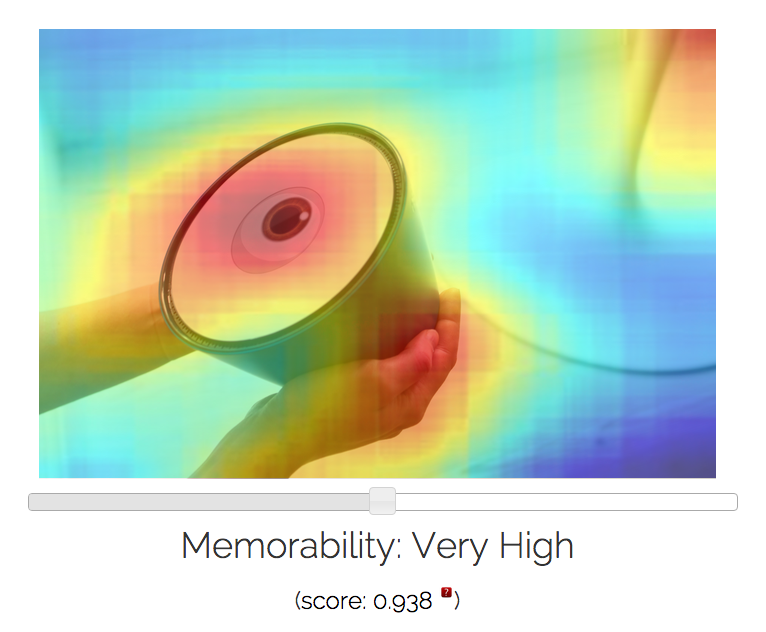

Here are some examples of images I ran through their MemNet

algorithm, with resulting memorability scores and most and least

forgettable areas depicted via heat map:

Potential applications for the algorithm are very broad indeed when

you consider how photos and photo-sharing remain the currency of the

social web. Anything that helps improve understanding of how people

process visual information and the impact of that information on memory

has clear utility.

The team says it plans to release an app in future to allow users to

tweak images to improve their impact. So the research could underpin

future photo filters that do more than airbrush facial features to make

a shot more photogenic, and that perhaps also tweak some of its

elements to make the image more memorable.

Beyond helping people create a more lasting impression with

their selfies, the team envisages applications for the algorithm to

enhance ad/marketing content, improve teaching resources and even power

health-related applications aimed at improving a person’s capacity to

remember or even as a way to diagnose errors in memory and perhaps

identify particular medical conditions.

The MemNet algorithm was created using deep learning AI techniques,

and specifically trained on tens of thousands of tagged images from

several different datasets all developed at CSAIL — including LaMem,

which contains 60,000 images each annotated with detailed metadata about

qualities such as popularity and emotional impact.

Publishing the LaMem database alongside their paper is part of the

team’s effort to encourage further research into what they say has often

been an under-studied topic in computer vision.

Asked to explain what kind of patterns the deep-learning algorithm is

trying to identify in order to predict memorability/forgettability, PhD

candidate at MIT CSAIL, Aditya Khosla, who was lead author on a related

paper, tells TechCrunch: “This is a very difficult question and active

area of research. While the deep learning algorithms are extremely

powerful and are able to identify patterns in images that make them more

or less memorable, it is rather challenging to look under the hood to

identify the precise characteristics the algorithm is identifying.

“In general, the algorithm makes use of the objects and scenes in the

image but exactly how it does so is difficult to explain. Some initial

analysis shows that (exposed) body parts and faces tend to be highly

memorable while images showing outdoor scenes such as beaches or the

horizon tend to be rather forgettable.”

The research involved showing people images, one after another, and

asking them to press a key whenever they encountered an image they had

seen before. Their responses produced the memorability scores used to

train the algorithm. The team had about 5,000 people from the Amazon Mechanical

Turk crowdsourcing platform view a subset of its images, with each image

in their LaMem dataset viewed on average by 80 unique individuals,

according to Khosla.
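
To make the scoring idea concrete, here is a minimal sketch of how key presses from a repeat-detection task like the one described above could be turned into a per-image score. The article does not give the researchers' formula, so treating the score as a simple hit rate (the share of viewers who pressed the key when an image came round again) is an assumption, as are the function name `memorability_scores` and the toy data. A real study would probably also correct for false alarms, which this sketch ignores.

```python
from collections import defaultdict


def memorability_scores(responses):
    """Return {image_id: hit rate} for repeated images.

    `responses` holds one (image_id, viewer_id, pressed_key) tuple per
    viewer per *repeat* presentation of an image. The hit-rate formula is
    an illustrative assumption, not the researchers' published method.
    """
    hits = defaultdict(int)
    views = defaultdict(int)
    for image_id, _viewer_id, pressed_key in responses:
        views[image_id] += 1
        if pressed_key:
            hits[image_id] += 1
    return {image_id: hits[image_id] / views[image_id] for image_id in views}


# Hypothetical toy data: four viewers each see two images a second time.
responses = [
    ("beach.jpg", "w1", False),
    ("beach.jpg", "w2", True),
    ("beach.jpg", "w3", False),
    ("beach.jpg", "w4", False),
    ("face.jpg", "w1", True),
    ("face.jpg", "w2", True),
    ("face.jpg", "w3", True),
    ("face.jpg", "w4", False),
]

scores = memorability_scores(responses)
print(scores)
```

In this toy data the face image is recognised by three of four viewers and the beach image by one of four, in line with the pattern Khosla describes.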

In terms of shortcomings, the algorithm does less well on types of

images it has not been trained on so far, as you’d expect — so it’s

better on natural images and less good on logos or line drawings right

now.

“It has not seen how variations in colors, fonts, etc affect the

memorability of logos, so it would have a limited understanding of

these,” says Khosla. “But addressing this is a matter of capturing such

data, and this is something we hope to explore in the near future —

capturing specialized data for specific domains in order to better

understand them and potentially allow for commercial applications there.

One of those domains we’re focusing on at the moment is faces.”

The team has previously developed a similar algorithm for face memorability.

Discussing how the planned MemNet app might work, Khosla says there

are various options for how images could be tweaked based on algorithmic

input, although ensuring a pleasing end photo is part of the challenge

here. “The simple approach would be to use the heat map to blur out

regions that are not memorable to emphasize the regions of high

memorability, or simply applying an Instagram-like filter or cropping

the image a particular way,” he notes.
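
The "simple approach" Khosla describes can be sketched in a few lines. This is not the team's code: it assumes a grayscale image and a heat map held as equally sized lists of rows, with heat values between 0 and 1, and it swaps in a basic 3x3 mean blur for whatever filter a real app would use. The names `box_blur` and `emphasize_memorable` and the 0.5 threshold are all hypothetical.

```python
def box_blur(image):
    """Return a 3x3 mean blur of a 2D grayscale image (list of rows).

    Edge pixels are averaged over the neighbours that actually exist.
    """
    height, width = len(image), len(image[0])
    blurred = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            neighbours = [
                image[ny][nx]
                for ny in range(max(0, y - 1), min(height, y + 2))
                for nx in range(max(0, x - 1), min(width, x + 2))
            ]
            blurred[y][x] = sum(neighbours) / len(neighbours)
    return blurred


def emphasize_memorable(image, heat_map, threshold=0.5):
    """Blur pixels whose heat is below `threshold`; keep the rest sharp.

    `heat_map` is assumed to hold per-pixel memorability in [0, 1].
    """
    blurred = box_blur(image)
    return [
        [
            image[y][x] if heat_map[y][x] >= threshold else blurred[y][x]
            for x in range(len(image[0]))
        ]
        for y in range(len(image))
    ]


# Hypothetical 3x3 example: only the centre pixel is rated forgettable.
image = [
    [0, 90, 0],
    [90, 0, 90],
    [0, 90, 0],
]
heat_map = [
    [0.9, 0.9, 0.9],
    [0.9, 0.1, 0.9],
    [0.9, 0.9, 0.9],
]

result = emphasize_memorable(image, heat_map)
print(result[1][1])  # centre pixel replaced by the mean of all nine pixels
```

A hard threshold produces visible seams between sharp and blurred regions, so a practical version would more likely blend the two images in proportion to the heat value.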

“The complex approach would involve adding or removing objects from

images automatically to change the memorability of the image — but as

you can imagine, this is pretty hard — we would have to ensure that the

object size, shape, pose and so on match the scene they are being added

to, to avoid looking like a photoshop job gone bad.”

Looking ahead, the next step for the researchers will be to try to

extend their system so that it can predict what a specific person will

remember. They also want to be able to better tailor it for individual

“expert industries” such as retail clothing and logo design.

How many training images they’d need to show an individual person

before being able to algorithmically predict their capacity to remember

images in future is not yet clear. “This is something we are still

investigating,” says Khosla.