Via Kurzweil

-----

IBM researchers unveiled today a new generation of experimental computer chips designed to emulate the brain’s abilities for perception, action and cognition.

In a sharp departure from traditional von Neumann computing concepts in designing and building computers, IBM’s first neurosynaptic computing chips recreate the phenomena between spiking neurons and synapses in biological systems, such as the brain, through advanced algorithms and silicon circuitry.

The technology could use many orders of magnitude less power and space than today’s computers, the researchers say. IBM’s first two prototype chips have already been fabricated and are currently undergoing testing.

Called cognitive computers, systems built with these chips won’t be programmed the same way traditional computers are today. Rather, cognitive computers are expected to learn through experiences, find correlations, create hypotheses, and remember, and learn from, the outcomes, mimicking the brain’s structural and synaptic plasticity.

“This is a major initiative to move beyond the von Neumann paradigm that has been ruling computer architecture for more than half a century,” said Dharmendra Modha, project leader for IBM Research.

“Future applications of computing will increasingly demand functionality that is not efficiently delivered by the traditional architecture. These chips are another significant step in the evolution of computers from calculators to learning systems, signaling the beginning of a new generation of computers and their applications in business, science and government.”

Neurosynaptic chips

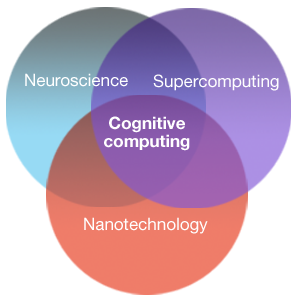

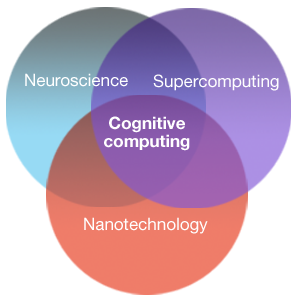

IBM is combining principles from nanoscience, neuroscience, and supercomputing as part of a multi-year cognitive computing initiative. IBM’s long-term goal is to build a chip system with ten billion neurons and one hundred trillion synapses, while consuming merely one kilowatt of power and occupying less than two liters of volume.
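To put the long-term goal in perspective, the stated targets can be divided out. The short Python sketch below uses only the figures quoted above; the variable names are illustrative, and the per-neuron and per-synapse numbers are simple averages over the whole system, not measurements of any actual chip.

```python
# Figures quoted in the article for IBM's long-term goal.
NEURONS = 10_000_000_000         # ten billion neurons
SYNAPSES = 100_000_000_000_000   # one hundred trillion synapses
POWER_WATTS = 1_000              # one kilowatt

# Average fan-out implied by the two counts.
synapses_per_neuron = SYNAPSES // NEURONS

# Average power budget if the kilowatt were spread evenly
# (an assumption made here purely for illustration).
watts_per_neuron = POWER_WATTS / NEURONS
watts_per_synapse = POWER_WATTS / SYNAPSES

print(f"synapses per neuron: {synapses_per_neuron:,}")
print(f"power per neuron:    {watts_per_neuron:.1e} W")
print(f"power per synapse:   {watts_per_synapse:.1e} W")
```

Under these assumptions the goal works out to 10,000 synapses per neuron, roughly 100 nanowatts per neuron and about 10 picowatts per synapse.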

While they contain no biological elements, IBM’s first cognitive computing prototype chips use digital silicon circuits inspired by neurobiology to make up a “neurosynaptic core” with integrated memory (replicated synapses), computation (replicated neurons) and communication (replicated axons).

IBM has two working prototype designs. Both cores were fabricated in 45 nm SOI-CMOS and contain 256 neurons each. One core contains 262,144 programmable synapses and the other contains 65,536 learning synapses. The IBM team has successfully demonstrated simple applications like navigation, machine vision, pattern recognition, associative memory and classification.
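The synapse counts divide evenly by the neuron count: 262,144 / 256 = 1,024 and 65,536 / 256 = 256 synapses per neuron. As a rough illustration of how a core built from axons, synapses and spiking neurons might behave, here is a minimal Python sketch. It is not IBM's design: the crossbar layout, the leaky integrate-and-fire rule, and the names `step`, `leak` and `threshold` are all assumptions made for this example.

```python
NUM_NEURONS = 256  # neurons per core, from the article

# Synapses per neuron, assuming they are shared out evenly
# (the article gives only the totals).
PROGRAMMABLE_PER_NEURON = 262_144 // NUM_NEURONS  # 1024
LEARNING_PER_NEURON = 65_536 // NUM_NEURONS       # 256


def step(potentials, crossbar, active_axons, leak=1, threshold=4):
    """Advance a hypothetical core by one tick.

    potentials   -- list of membrane potentials, updated in place
    crossbar     -- crossbar[a][n] is 1 if axon a connects to neuron n
    active_axons -- indices of axons carrying a spike this tick
    Returns the indices of neurons that fired.
    """
    fired = []
    for n in range(len(potentials)):
        # Integrate: count incoming spikes over connected synapses.
        drive = sum(crossbar[a][n] for a in active_axons)
        # Leak: decay toward zero, never below it.
        v = max(0, potentials[n] + drive - leak)
        # Fire and reset when the threshold is reached.
        if v >= threshold:
            fired.append(n)
            v = 0
        potentials[n] = v
    return fired


# Tiny usage example: two axons feeding two neurons.
demo_crossbar = [[1, 0],
                 [1, 1]]
demo_potentials = [0, 0]
demo_fired = step(demo_potentials, demo_crossbar, [0, 1], leak=0, threshold=2)
print(demo_fired, demo_potentials)
```

In the usage example, neuron 0 receives two spikes, reaches the threshold, fires and resets, while neuron 1 receives one spike and simply accumulates it. Work is only done for axons that are active, which is a loose analogue of event-driven processing.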

IBM’s overarching cognitive computing architecture is an on-chip network of lightweight cores, creating a single integrated system of hardware and software. It represents a potentially more power-efficient architecture that has no set programming, integrates memory with processor, and mimics the brain’s event-driven, distributed and parallel processing.

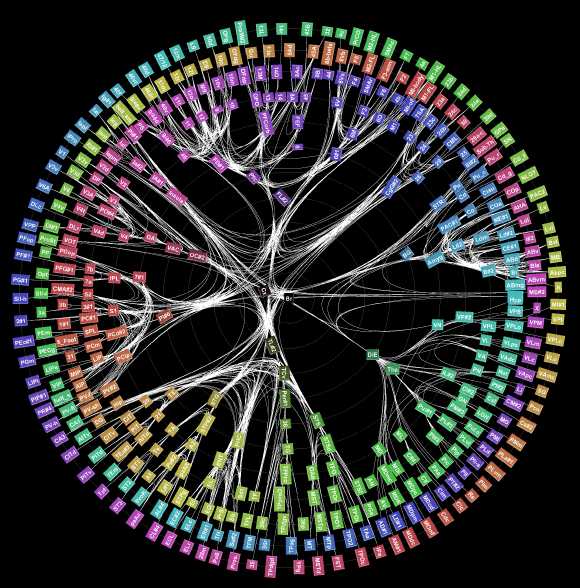

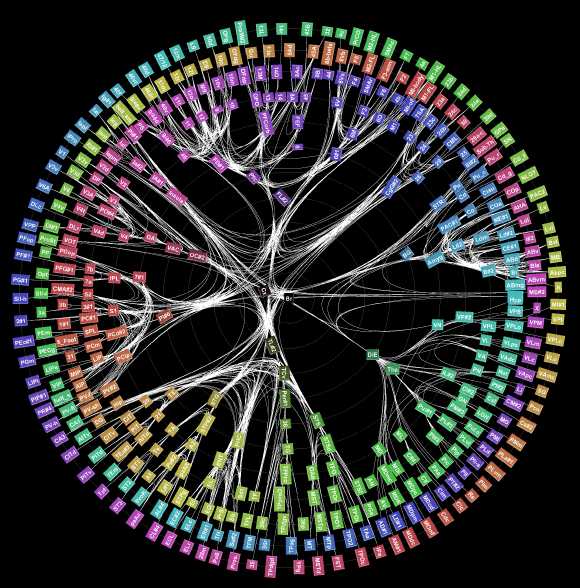

Visualization of the long distance network of a monkey brain (credit: IBM Research)

SyNAPSE

The company and its university collaborators also announced they have been awarded approximately $21 million in new funding from the Defense Advanced Research Projects Agency (DARPA) for Phase 2 of the Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) project.

The goal of SyNAPSE is to create a system that not only analyzes complex information from multiple sensory modalities at once, but also dynamically rewires itself as it interacts with its environment, all while rivaling the brain’s compact size and low power usage.

For Phase 2 of SyNAPSE, IBM has assembled a world-class multi-dimensional team of researchers and collaborators to achieve these ambitious goals. The team includes Columbia University; Cornell University; University of California, Merced; and University of Wisconsin, Madison.

Why Cognitive Computing

Future chips will be able to ingest information from complex, real-world environments through multiple sensory modes and act through multiple motor modes in a coordinated, context-dependent manner.

For example, a cognitive computing system monitoring the world’s water supply could contain a network of sensors and actuators that constantly record and report metrics such as temperature, pressure, wave height, acoustics and ocean tide, and issue tsunami warnings based on its decision making.

Similarly, a grocer stocking shelves could use an instrumented glove that monitors sights, smells, texture and temperature to flag bad or contaminated produce. Making sense of real-time input flowing at an ever-dizzying rate would be a Herculean task for today’s computers, but would be natural for a brain-inspired system.

“Imagine traffic lights that can integrate sights, sounds and smells and flag unsafe intersections before disaster happens or imagine cognitive co-processors that turn servers, laptops, tablets, and phones into machines that can interact better with their environments,” said Dr. Modha.

IBM has a rich history in the area of artificial intelligence research going all the way back to 1956 when IBM performed the world’s first large-scale (512 neuron) cortical simulation. Most recently, IBM Research scientists created Watson, an analytical computing system that specializes in understanding natural human language and provides specific answers to complex questions at rapid speeds.

---

IBM research
