Monday, February 27. 2017

Samsung scraps a Raspberry Pi 3 competitor, shrinks Artik line

Via PCWorld

-----

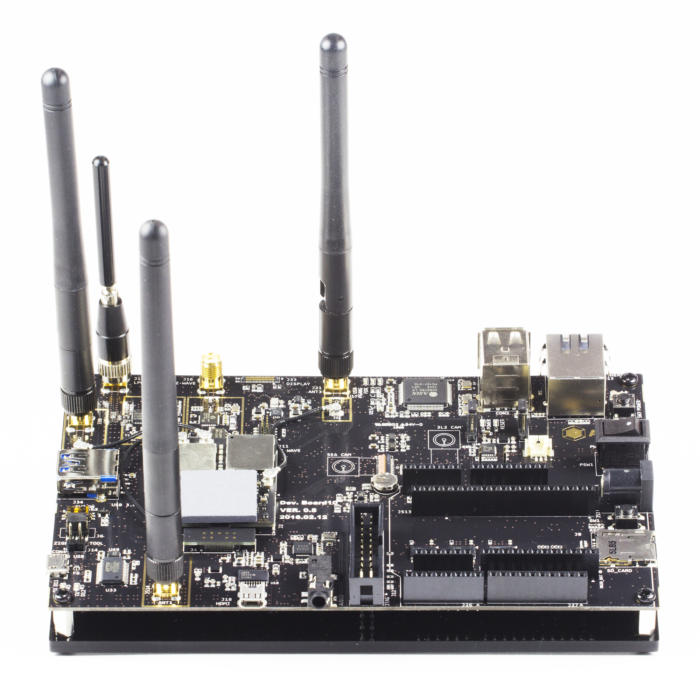

Samsung has scrapped its Raspberry Pi 3 competitor called Artik 10 as it moves to smaller and more powerful boards to create gadgets, robots, drones, and IoT devices.

Remaining stock of the US$149 boards is still available through online retailers Digi-Key and Arrow.

Samsung has stopped making Artik 10 and is asking users to buy its Artik 7 boards instead.

"New development for high-performance IoT products should be based on the Samsung Artik 710, as the Artik 1020 is no longer in production. Limited stocks of Artik 1020 modules and developer kits are still available for experimentation and small-scale projects," the company said on its Artik website.

The Artik boards have been used to develop robots, drones, smart lighting systems, and other smart home products. Samsung has a grand plan to make all its appliances, including refrigerators and washing machines, smart in the coming years, and Artik is a big part of that strategy.

Samsung hopes that tinkerers will make devices that work with its smart appliances. Artik can also be programmed to work with Amazon Echo.

Artik 10 started shipping in May last year. It outperformed Raspberry Pi 3 in many ways but lagged on some features.

The board had a plethora of wireless connectivity features including 802.11b/g/n Wi-Fi, Zigbee, and Bluetooth, and it had a better graphics processor than Raspberry Pi. The Artik 10 had 16GB flash storage and 2GB of RAM, both more than Raspberry Pi 3.

The board's biggest flaw was a 32-bit eight-core ARM processor; Raspberry Pi had a 64-bit ARM processor.

The Artik 7 is smaller and a worthy replacement. It has an eight-core 64-bit ARM processor, and it also has Wi-Fi, Bluetooth, and ZigBee. It supports 1080p graphics, and it has 1GB of RAM and 4GB of flash storage, less than in Artik 10. Cameras and sensors can be easily attached to the board.

The Artik boards work on Linux and Tizen. The company also sells Artik 5 and Artik 0, which are smaller boards with lower power processors.

Monday, February 20. 2017

Millimeter-Scale Computers: Now With Deep-Learning Neural Networks on Board

Via IEEE Spectrum

-----

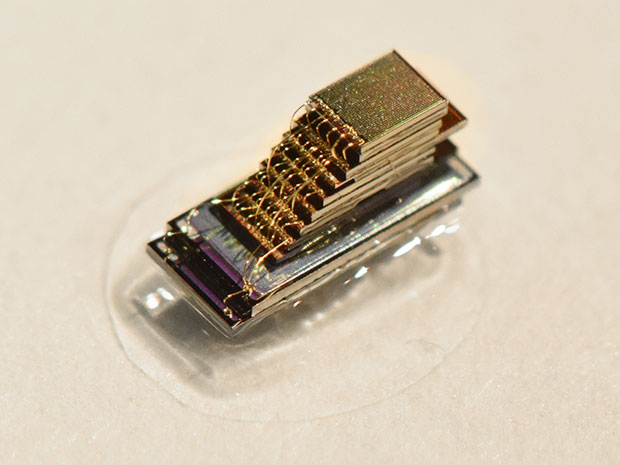

Photo: University of Michigan and TSMC. One of several varieties of University of Michigan micromotes. This one incorporates 1 megabyte of flash memory.

Computer scientist David Blaauw pulls a small plastic box from his bag. He carefully uses his fingernail to pick up the tiny black speck inside and place it on the hotel café table. At 1 cubic millimeter, this is one of a line of the world’s smallest computers. I had to be careful not to cough or sneeze lest it blow away and be swept into the trash.

Blaauw and his colleague Dennis Sylvester, both IEEE Fellows and computer scientists at the University of Michigan, were in San Francisco this week to present 10 papers related to these “micromote” computers at the IEEE International Solid-State Circuits Conference (ISSCC). They’ve been presenting different variations on the tiny devices for a few years.

Their broader goal is to make smarter, smaller sensors for medical devices and the Internet of Things—sensors that can do more with less energy. Many of the microphones, cameras, and other sensors that make up the eyes and ears of smart devices are always on alert, and frequently beam personal data into the cloud because they can’t analyze it themselves. Some have predicted that by 2035, there will be 1 trillion such devices. “If you’ve got a trillion devices producing readings constantly, we’re going to drown in data,” says Blaauw. By developing tiny, energy-efficient computing sensors that can do analysis on board, Blaauw and Sylvester hope to make these devices more secure, while also saving energy.

At the conference, they described micromote designs that use only a few nanowatts of power to perform tasks such as distinguishing the sound of a passing car and measuring temperature and light levels. They showed off a compact radio that can send data from the small computers to receivers 20 meters away—a considerable boost compared to the 50-centimeter range they reported last year at ISSCC. They also described their work with TSMC (Taiwan Semiconductor Manufacturing Company) on embedding flash memory into the devices, and a project to bring on board dedicated, low-power hardware for running artificial intelligence algorithms called deep neural networks.

Blaauw and Sylvester say they take a holistic approach to adding new features without ramping up power consumption. “There’s no one answer” to how the group does it, says Sylvester. If anything, it’s “smart circuit design,” Blaauw adds. (They pass ideas back and forth rapidly, not finishing each other’s sentences but something close to it.)

The memory research is a good example of how the right trade-offs can improve performance, says Sylvester. Previous versions of the micromotes used 8 kilobytes of SRAM (static RAM), which makes for a pretty low-performance computer. To record video and sound, the tiny computers need more memory. So the group worked with TSMC to bring flash memory on board. Now they can make tiny computers with 1 megabyte of storage.

Flash can store more data in a smaller footprint than SRAM, but it takes a big burst of power to write to the memory. With TSMC, the group designed a new memory array that uses a more efficient charge pump for the writing process. The memory arrays are a bit less dense than TSMC’s commercial products, for example, but still much better than SRAM. “We were able to get huge gains with small trade-offs,” says Sylvester.

Another micromote they presented at the ISSCC incorporates a deep-learning processor that can operate a neural network while using just 288 microwatts. Neural networks are artificial intelligence algorithms that perform well at tasks such as face and voice recognition. They typically demand both large memory banks and intense processing power, and so they’re usually run on banks of servers often powered by advanced GPUs. Some researchers have been trying to lessen the size and power demands of deep-learning AI with dedicated hardware that’s specially designed to run these algorithms. But even those processors still use over 50 milliwatts of power—far too much for a micromote. The Michigan group brought down the power requirements by redesigning the chip architecture, for example by situating four processing elements within the memory (in this case, SRAM) to minimize data movement.

The idea is to bring neural networks to the Internet of Things. “A lot of motion detection cameras take pictures of branches moving in the wind—that’s not very helpful,” says Blaauw. Security cameras and other connected devices are not smart enough to tell the difference between a burglar and a tree, so they waste energy sending uninteresting footage to the cloud for analysis. Onboard deep-learning processors could make better decisions, but only if they don’t use too much power. The Michigan group imagine that deep-learning processors could be integrated into many other Internet-connected things besides security systems. For example, an HVAC system could decide to turn the air-conditioning down if it sees multiple people putting on their coats.

After demonstrating many variations on these micromotes in an academic setting, the Michigan group hopes they will be ready for market in a few years. Blaauw and Sylvester say their startup company, CubeWorks, is currently prototyping devices and researching markets. The company was quietly incorporated in late 2013. Last October, Intel Capital announced they had invested an undisclosed amount in the tiny computer company.

Monday, February 13. 2017

HyperFace Camouflage

-----

- False-face computer vision camouflage patterns for Hyphen Labs’ NeuroSpeculative AfroFeminism at Sundance Film Festival 2017.

HyperFace is being developed for Hyphen Labs’ NeuroSpeculative AfroFeminism project at Sundance Film Festival and is a collaboration with Hyphen Labs members Ashley Baccus-Clark, Carmen Aguilar y Wedge, Ece Tankal, Nitzan Bartov, and JB Rubinovitz.

NeuroSpeculative AfroFeminism is a transmedia exploration of black women and the roles they play in technology, society and culture—including speculative products, immersive experiences and neurocognitive impact research. Using fashion, cosmetics and the economy of beauty as entry points, the project illuminates issues of privacy, transparency, identity and perception.

HyperFace is a new kind of camouflage that aims to reduce the confidence score of facial detection and recognition by providing false faces that distract computer vision algorithms. HyperFace development began in 2013 and was first presented at 33c3 in Hamburg, Germany on December 30th, 2016. HyperFace will launch as a textile print at Sundance Film Festival on January 16, 2017.

Together HyperFace and NeuroSpeculative AfroFeminism will explore an Afrocentric countersurveillance aesthetic.

For more information about NeuroSpeculative AfroFeminism visit nsaf.space

How Does HyperFace Work?

HyperFace works by providing maximally activated false faces based on ideal algorithmic representations of a human face. These maximal activations are targeted for specific algorithms. The prototype above is specific to OpenCV’s default frontalface profile. Other patterns target convolutional neural networks and HoG/SVM detectors. The technical concept is an extension of earlier work on CV Dazzle. The difference between the two projects is that HyperFace aims to alter the surrounding area (ground) while CV Dazzle targets the facial area (figure). In camouflage, the objective is often to minimize the difference between figure and ground. HyperFace reduces the confidence score of the true face (figure) by redirecting more attention to the nearby false face regions (ground).

Conceptually, HyperFace recognizes that completely concealing a face to facial detection algorithms remains a technical and aesthetic challenge. Instead of seeking computer vision anonymity through minimizing the confidence score of a true face (i.e. CV Dazzle), HyperFace offers a higher confidence score for a nearby false face by exploiting a common algorithmic preference for the highest confidence facial region. In other words, if a computer vision algorithm is expecting a face, give it what it wants.
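The selection behavior described above can be illustrated with a toy sketch. It assumes a detector that returns scored candidate regions and keeps only the highest-scoring one. The `Detection` type, the labels, and the confidence values are hypothetical and are not taken from HyperFace or OpenCV.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # hypothetical tag, for illustration only
    confidence: float  # detector score; higher means "more face-like"


def top_detection(detections):
    """Return the candidate region with the highest confidence score."""
    return max(detections, key=lambda d: d.confidence)


# Made-up scores: a real face next to two printed false faces.
candidates = [
    Detection("true face", 0.62),
    Detection("false face A", 0.91),
    Detection("false face B", 0.78),
]

winner = top_detection(candidates)
print(winner.label)  # false face A
```

In this sketch the true face is still detected, which matches the note that the pattern will not make the wearer invisible. It only stops being the region the algorithm prefers.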

How Well Does This Work?

The patterns are still under development and are expected to change. Please check back towards the end of January for more information.

Product Photos

Please check back towards the end of January for product photos

Notifications

If you’re interested in purchasing one of the first commercially available HyperFace textiles, please add yourself to my mailing list at Undisclosed.studio

Notes

- Designs subject to change

- Displayed patterns are prototypes and are currently undergoing testing.

- First prototype designed for OpenCV Haarcascade. Future iterations will include patterns for HoG/SVM and CNN detectors

- Will not make you invisible

- Please credit image as HyperFace Prototype by “Adam Harvey / ahprojects.com”

- Please credit scarf rendering prototype as “Rendering by Ece Tankal / hyphen-labs.com”

- Not affiliated with the IARPA-funded Hyperface algorithm for pose and gender recognition

Monday, February 06. 2017

Everything You Need to Know About 5G

Via IEEE Spectrum

-----

Today’s mobile users want faster data speeds and more reliable service. The next generation of wireless networks—5G—promises to deliver that, and much more. With 5G, users should be able to download a high-definition film in under a second (a task that could take 10 minutes on 4G LTE). And wireless engineers say these networks will boost the development of other new technologies, too, such as autonomous vehicles, virtual reality, and the Internet of Things.

If all goes well, telecommunications companies hope to debut the first commercial 5G networks in the early 2020s. Right now, though, 5G is still in the planning stages, and companies and industry groups are working together to figure out exactly what it will be. But they all agree on one matter: As the number of mobile users and their demand for data rises, 5G must handle far more traffic at much higher speeds than the base stations that make up today’s cellular networks.

To achieve this, wireless engineers are designing a suite of brand-new technologies. Together, these technologies will deliver data with less than a millisecond of delay (compared to about 70 ms on today’s 4G networks) and bring peak download speeds of 20 gigabits per second (compared to 1 Gb/s on 4G) to users.
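As a rough check on these figures, the sketch below computes transfer times at the quoted peak rates. The 2-gigabyte film size is an assumption chosen for illustration, and the calculation ignores protocol overhead and network congestion.

```python
def transfer_seconds(size_gigabytes: float, rate_gbps: float) -> float:
    """Time to move a file at a sustained rate, ignoring overhead."""
    size_gigabits = size_gigabytes * 8  # 1 byte = 8 bits
    return size_gigabits / rate_gbps


def implied_rate_mbps(size_gigabytes: float, seconds: float) -> float:
    """Average rate, in megabits per second, implied by a transfer time."""
    return size_gigabytes * 8 * 1000 / seconds


FILM_GB = 2.0        # assumed film size, not a figure from the article
PEAK_5G_GBPS = 20.0  # peak 5G rate quoted above
PEAK_4G_GBPS = 1.0   # peak 4G rate quoted above

print(transfer_seconds(FILM_GB, PEAK_5G_GBPS))        # 0.8
print(transfer_seconds(FILM_GB, PEAK_4G_GBPS))        # 16.0
print(round(implied_rate_mbps(FILM_GB, 10 * 60), 1))  # 26.7
```

Under this assumption, the 10-minute 4G download mentioned earlier corresponds to an average rate of roughly 27 Mb/s, far below the 1 Gb/s peak. That suggests the comparison is between typical 4G rates and peak 5G rates.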

At the moment, it’s not yet clear which technologies will do the most for 5G in the long run, but a few early favorites have emerged. The front-runners include millimeter waves, small cells, massive MIMO, full duplex, and beamforming. To understand how 5G will differ from today’s 4G networks, it’s helpful to walk through these five technologies and consider what each will mean for wireless users.

Millimeter Waves

Today’s wireless networks have run into a problem: More people and devices are consuming more data than ever before, but it remains crammed on the same bands of the radio-frequency spectrum that mobile providers have always used. That means less bandwidth for everyone, causing slower service and more dropped connections.

One way to get around that problem is to simply transmit signals on a whole new swath of the spectrum, one that’s never been used for mobile service before. That’s why providers are experimenting with broadcasting on millimeter waves, which use higher frequencies than the radio waves that have long been used for mobile phones.

Millimeter waves are broadcast at frequencies between 30 and 300 gigahertz, compared to the bands below 6 GHz that were used for mobile devices in the past. They are called millimeter waves because they vary in length from 1 to 10 mm, compared to the radio waves that serve today’s smartphones, which measure tens of centimeters in length.
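The wavelength figures follow from the relation wavelength = speed of light / frequency. A minimal sketch, where the 2 GHz comparison frequency is an assumption standing in for a typical sub-6 GHz band:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def wavelength_mm(frequency_ghz: float) -> float:
    """Free-space wavelength in millimeters for a frequency in gigahertz."""
    frequency_hz = frequency_ghz * 1e9
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz * 1e3


print(round(wavelength_mm(30), 2))   # 9.99  (about 10 mm)
print(round(wavelength_mm(300), 2))  # 1.0   (about 1 mm)
print(round(wavelength_mm(2), 1))    # 149.9 (about 15 cm)
```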

Until now, only operators of satellites and radar systems used millimeter waves for real-world applications. Now, some cellular providers have begun to use them to send data between stationary points, such as two base stations. But using millimeter waves to connect mobile users with a nearby base station is an entirely new approach.

There is one major drawback to millimeter waves, though—they can’t easily travel through buildings or obstacles and they can be absorbed by foliage and rain. That’s why 5G networks will likely augment traditional cellular towers with another new technology, called small cells.

Small Cells

Small cells are portable miniature base stations that require minimal power to operate and can be placed every 250 meters or so throughout cities. To prevent signals from being dropped, carriers could install thousands of these stations in a city to form a dense network that acts like a relay team, receiving signals from other base stations and sending data to users at any location.
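To see why "thousands" is a plausible count, the sketch below estimates how many cells a city would need at that spacing. It assumes a simple square grid with one cell per 250 m by 250 m tile, and the 100 km² city area is a made-up example. Real deployments depend on terrain, buildings, and demand.

```python
import math


def cells_needed(area_km2: float, spacing_m: float = 250.0) -> int:
    """Cells required to tile an area on a square grid (an assumption)."""
    area_m2 = area_km2 * 1_000_000
    return math.ceil(area_m2 / (spacing_m ** 2))


print(cells_needed(100))  # 1600
```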

While traditional cell networks have also come to rely on an increasing number of base stations, achieving 5G performance will require an even greater infrastructure. Luckily, antennas on small cells can be much smaller than traditional antennas if they are transmitting tiny millimeter waves. This size difference makes it even easier to stick cells on light poles and atop buildings.

This radically different network structure should provide more targeted and efficient use of spectrum. Having more stations means the frequencies that one station uses to connect with devices in one area can be reused by another station in a different area to serve another customer. There is a problem, though—the sheer number of small cells required to build a 5G network may make it hard to set up in rural areas.

In addition to broadcasting over millimeter waves, 5G base stations will also have many more antennas than the base stations of today’s cellular networks—to take advantage of another new technology: massive MIMO.

Massive MIMO

Today’s 4G base stations have a dozen ports for antennas that handle all cellular traffic: eight for transmitters and four for receivers. But 5G base stations can support about a hundred ports, which means many more antennas can fit on a single array. That capability means a base station could send and receive signals from many more users at once, increasing the capacity of mobile networks by a factor of 22 or greater.

This technology is called massive MIMO. It all starts with MIMO, which stands for multiple-input multiple-output. MIMO describes wireless systems that use two or more transmitters and receivers to send and receive more data at once. Massive MIMO takes this concept to a new level by featuring dozens of antennas on a single array.

MIMO is already found on some 4G base stations. But so far, massive MIMO has only been tested in labs and a few field trials. In early tests, it has set new records for spectrum efficiency, which is a measure of how many bits of data can be transmitted to a certain number of users per second.

Massive MIMO looks very promising for the future of 5G. However, installing so many more antennas to handle cellular traffic also causes more interference if those signals cross. That’s why 5G stations must incorporate beamforming.

Beamforming

Beamforming is a traffic-signaling system for cellular base stations that identifies the most efficient data-delivery route to a particular user, and it reduces interference for nearby users in the process. Depending on the situation and the technology, there are several ways for 5G networks to implement it.

Beamforming can help massive MIMO arrays make more efficient use of the spectrum around them. The primary challenge for massive MIMO is to reduce interference while transmitting more information from many more antennas at once. At massive MIMO base stations, signal-processing algorithms plot the best transmission route through the air to each user. Then they can send individual data packets in many different directions, bouncing them off buildings and other objects in a precisely coordinated pattern. By choreographing the packets’ movements and arrival time, beamforming allows many users and antennas on a massive MIMO array to exchange much more information at once.

For millimeter waves, beamforming is primarily used to address a different set of problems: Cellular signals are easily blocked by objects and tend to weaken over long distances. In this case, beamforming can help by focusing a signal in a concentrated beam that points only in the direction of a user, rather than broadcasting in many directions at once. This approach can strengthen the signal’s chances of arriving intact and reduce interference for everyone else.
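The idea of focusing a signal in one direction can be sketched with a classic phased array: each antenna applies a phase shift so that the signals add up in the chosen direction and partly cancel elsewhere. This is a textbook model of a uniform linear array with half-wavelength spacing, not a description of any specific 5G implementation. The function names and parameters are illustrative.

```python
import cmath
import math


def _phase_step(angle_deg: float, spacing_wavelengths: float) -> float:
    """Phase difference between neighboring antennas for a given angle."""
    return 2 * math.pi * spacing_wavelengths * math.sin(math.radians(angle_deg))


def steering_weights(n_antennas, steer_deg, spacing_wavelengths=0.5):
    """Per-antenna phase weights that point the main beam at steer_deg."""
    step = _phase_step(steer_deg, spacing_wavelengths)
    return [cmath.exp(-1j * k * step) for k in range(n_antennas)]


def array_gain(weights, arrival_deg, spacing_wavelengths=0.5):
    """Magnitude of the combined signal for a plane wave at arrival_deg."""
    step = _phase_step(arrival_deg, spacing_wavelengths)
    total = sum(w * cmath.exp(1j * k * step) for k, w in enumerate(weights))
    return abs(total)


weights = steering_weights(n_antennas=8, steer_deg=30.0)
print(array_gain(weights, 30.0))   # matches the antenna count, up to rounding
print(array_gain(weights, -20.0))  # much smaller: energy is not sent this way
```

With eight antennas, the gain toward the intended user approaches 8, while a user at a different angle sees only a fraction of that. This is the sense in which beamforming both strengthens the wanted signal and reduces interference for others.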

Besides boosting data rates by broadcasting over millimeter waves and beefing up spectrum efficiency with massive MIMO, wireless engineers are also trying to achieve the high throughput and low latency required for 5G through a technology called full duplex, which modifies the way antennas deliver and receive data.

Full Duplex

Today’s base stations and cellphones rely on transceivers that must take turns when transmitting and receiving information over the same frequency, or operate on different frequencies if a user wishes to transmit and receive information at the same time.

With 5G, a transceiver will be able to transmit and receive data at the same time, on the same frequency. This technology is known as full duplex, and it could double the capacity of wireless networks at their most fundamental physical layer: Picture two people talking at the same time but still able to understand one another—which means their conversation could take half as long and their next discussion could start sooner.

Some militaries already use full duplex technology that relies on bulky equipment. To achieve full duplex in personal devices, researchers must design a circuit that can route incoming and outgoing signals so they don’t collide while an antenna is transmitting and receiving data at the same time.

This is especially hard because of the tendency of radio waves to travel both forward and backward on the same frequency—a principle known as reciprocity. But recently, experts have assembled silicon transistors that act like high-speed switches to halt the backward roll of these waves, enabling them to transmit and receive signals on the same frequency at once.

One drawback to full duplex is that it also creates more signal interference, through a pesky echo. When a transmitter emits a signal, that signal is much closer to the device’s antenna and therefore more powerful than any signal it receives. Expecting an antenna to both speak and listen at the same time is possible only with special echo-canceling technology.
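Echo cancellation works because the device knows exactly what it transmitted. The sketch below is a deliberately simplified, single-coefficient model: it assumes the echo is just a scaled copy of the transmitted signal, estimates that scale by least squares, and subtracts it. Real full-duplex radios need multi-tap and analog cancellation stages, and the signal values here are synthetic.

```python
import random


def cancel_self_interference(received, transmitted):
    """Subtract the estimated echo of our own transmission.

    Returns the cleaned samples and the estimated leakage coefficient.
    """
    # Least-squares fit of received ~= leak * transmitted
    numerator = sum(r * t for r, t in zip(received, transmitted))
    denominator = sum(t * t for t in transmitted)
    leak = numerator / denominator
    cleaned = [r - leak * t for r, t in zip(received, transmitted)]
    return cleaned, leak


rng = random.Random(0)
tx = [rng.choice((-1.0, 1.0)) for _ in range(1000)]       # our own signal
remote = [rng.choice((-0.01, 0.01)) for _ in range(1000)]  # weak far-end signal
rx = [0.9 * t + r for t, r in zip(tx, remote)]             # echo dominates

cleaned, leak = cancel_self_interference(rx, tx)
print(leak)                          # close to the true leakage of 0.9
print(max(abs(c) for c in cleaned))  # on the order of the remote signal
```

Before cancellation, the received samples are dominated by the echo, which is about 90 times stronger than the far-end signal. Afterward, what remains is close to the far-end signal alone.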

With these and other 5G technologies, engineers hope to build the wireless network that future smartphone users, VR gamers, and autonomous cars will rely on every day. Already, researchers and companies have set high expectations for 5G by promising ultralow latency and record-breaking data speeds for consumers. If they can solve the remaining challenges, and figure out how to make all these systems work together, ultrafast 5G service could reach consumers in the next five years.

Writing Credits:

- Amy Nordrum–Article Author & Voice Over

Produced By:

- Celia Gorman–Executive Producer

- Kristen Clark–Producer

Art Direction and Illustrations:

- Brandon Palacio–Art Director

- Mike Spector–Illustrator

- Ove Edfors–Expert & Illustrator
