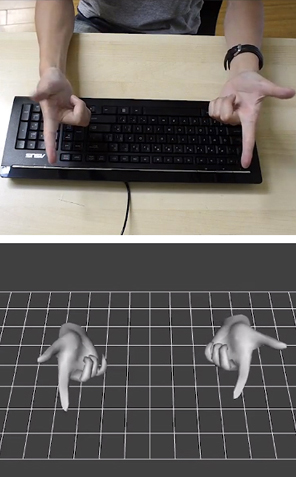

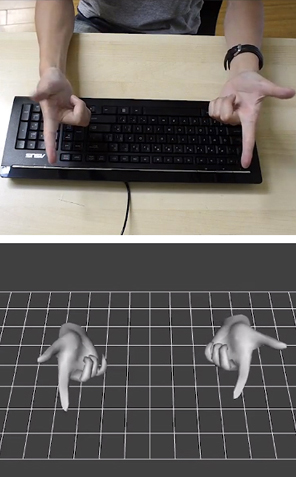

Finger mouse: 3Gear uses depth-sensing cameras to track finger movements.

3Gear Systems

Wednesday, October 24. 2012

Wi-Fi LED Light Bulbs: Gateway to the Smart Home?

-----

In the future, we’re told, homes will be filled with smart gadgets connected to the Internet, giving us remote control of our homes and making the grid smarter. Wireless thermostats and now lighting appear to be leading the way. Startup Greenwave Reality today announced that its wireless LED lighting kit is available in the U.S., although not through retail sales channels. The company, headed by former consumer electronics executives, plans to sell the set, which includes four 40-watt-equivalent bulbs and a smartphone application, through utilities and lighting companies for about $200, according to CEO Greg Memo.

The Connected Lighting Solution includes four Energy Star-rated LED light bulbs (they can be used outside in a sheltered area but not exposed directly to water), a gateway box that connects to a home router, and a remote control. Customers also download a smartphone or tablet app that lets them turn lights on or off, dim lights, or set up schedules.

Installation is extremely easy. Greenwave Reality sent me a set to try out, and I had it operating within a few minutes. The bulbs each have their own IP address and are paired with the gateway out of the box, so there’s no need to configure them; they communicate over the home wireless network, or over the Internet for remote access.

Using the app is fun, if only for the novelty. When’s the last time you used your iPhone to turn off the lights downstairs? It also lets people put lights on a schedule or set custom scenes. For instance, Memo set some of the wireless bulbs in his kitchen to be dimmed to half brightness during the day.

Many smart-home or smart-building advocates say that lighting is the toehold for a house full of networked gadgets. “The thing about lighting is that it’s a lot more personal than appliances or a thermostat. It’s actually something that affects people’s moods and comfort,” Memo says.
“We think this will move the needle on the automated home.”

Rather than sell directly to consumers, as most other smart lighting products are sold, Greenwave Reality intends to sell through utilities and service companies. While gadget-oriented consumers may be attracted to wireless light bulbs, utilities are interested in energy savings. And because these lights are connected to the Internet, energy savings can be quantified. In Europe and many U.S. states, utilities are required to spend money on customer efficiency programs, such as rebates for efficient appliances or subsidies for compact fluorescent bulbs. But unlike traditional CFLs, network-connected LEDs can report usage information. That allows Greenwave Reality to see how many bulbs are actually in use and to verify the intended energy savings of, for example, subsidized light bulbs. (The reported data would be anonymized, Memo says.) Utilities could also make lighting part of demand response programs to lower power during peak times.

As for performance of the bulbs, there is essentially no latency when using the smartphone app. The remote control effectively brings dimmers to fixtures that don’t already have them. For people who like the idea of bringing the Internet of things to their home with smart gadgets, LED lights (and thermostats) seem like a good way to start. But in the end, it may be the energy savings of better managed and more efficient light bulbs that will give wireless lighting a broader appeal.

Molecular 3D bioprinting could mean getting drugs through email

Via dvice

-----

What happens when you combine advances in 3D printing with biosynthesis and molecular construction? Eventually, it might just lead to printers that can manufacture vaccines and other drugs from scratch: email your doc, download some medicine, print it out and you're cured.

This concept (which is surely being worked on as we speak) comes from Craig Venter, whose idea of synthesizing DNA on Mars we posted about last week. You may remember a mention of the possibility of synthesizing Martian DNA back here on Earth, too: Venter says that we can do that simply by having the spacecraft email us genetic information on whatever it finds on Mars, and then recreate it in a lab by mixing together nucleotides in just the right way. This sort of thing has already essentially been done by Venter, who created the world's first synthetic life form back in 2010.

Venter's idea is to do away with complex, expensive and centralized vaccine production and instead just develop one single machine that can "print" drugs by carefully combining nucleotides, sugars, amino acids, and whatever else is needed while you wait. Technology like this would mean that vaccines could be produced locally, on demand, simply by emailing the appropriate instructions to your closest drug printer. Pharmacies would no longer consist of shelves upon shelves of different pills, but could instead be kiosks with printers inside them. Ultimately, this could even be something you do at home.

While the benefits to technology like this are obvious, the risks are equally obvious. I mean, you'd basically be introducing the Internet directly into your body. Just ingest that for a second and think about everything that it implies. Viruses. LOLcats. Rule 34. Yeah, you know what, maybe I'll just stick with modern American healthcare and making ritual sacrifices to heathen gods, at least one of which will probably be effective.

Tuesday, October 16. 2012

Google must change privacy policy, demand EU watchdogs

Via Slash Gear

-----

European data protection regulators have demanded Google change its privacy policy, though the French-led team did not conclude that the search giant’s actions amounted to something illegal. The investigation, by the Commission Nationale de l’Informatique et des Libertés (CNIL), argued that Google’s decision to condense the privacy policies of over sixty products into a single agreement, while at the same time increasing the amount of inter-service data sharing, could leave users unclear as to how different types of information (as varied as search terms, credit card details, or phone numbers) could be used by the company.

“The Privacy Policy makes no difference in terms of processing between the innocuous content of search query and the credit card number or the telephone communications of the user,” the CNIL points out; “all these data can be used equally for all the purposes in the Policy.” That some web users merely interact passively with Google products, such as adverts, also comes in for heightened attention, with those users getting no explanation at all as to how their actions might be tracked or stored.

In a letter to Google, signed by the CNIL and other authorities from across Europe, the concerns are laid out in full, together with some suggestions as to how they can be addressed. For instance, the search company could “develop interactive presentations that allow users to navigate easily through the content of the policies” and “provide additional and precise information about data that have a significant impact on users (location, credit card data, unique device identifiers, telephony, biometrics).”

Ironically, one of Google’s arguments for initially changing its policy system was that a single, harmonized agreement would be easier for users to read through and understand. It also insisted that the data-sharing aspects were little changed from before.

“The CNIL, all the authorities among the Working Party and data protection authorities from other regions of the world expect Google to take effective and public measures to comply quickly and commit itself to the implementation of these recommendations,” the commission concluded. Google has three to four months to enact the requested changes, or it could face the threat of sanctions.

“We have received the report and are reviewing it now,” Peter Fleischer, Google’s global privacy counsel, told TechCrunch. “Our new privacy policy demonstrates our long-standing commitment to protecting our users’ information and creating great products. We are confident that our privacy notices respect European law.”

Monday, October 15. 2012

Constraints on the Universe as a Numerical Simulation

Silas R. Beane, Zohreh Davoudi, Martin J. Savage

-----

Observable consequences of the hypothesis that the observed universe is a numerical simulation performed on a cubic space-time lattice or grid are explored. The simulation scenario is first motivated by extrapolating current trends in computational resource requirements for lattice QCD into the future. Using the historical development of lattice gauge theory technology as a guide, we assume that our universe is an early numerical simulation with unimproved Wilson fermion discretization and investigate potentially observable consequences. Among the observables that are considered are the muon g-2 and the current differences between determinations of the fine-structure constant α, but the most stringent bound on the inverse lattice spacing of the universe, b⁻¹ ≳ 10¹¹ GeV, is derived from the high-energy cutoff of the cosmic ray spectrum. The numerical simulation scenario could reveal itself in the distributions of the highest energy cosmic rays exhibiting a degree of rotational symmetry breaking that reflects the structure of the underlying lattice.
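To put that bound in more familiar units, here is a back-of-the-envelope conversion (ours, not the paper's). In natural units a lattice spacing is b = ħc / b⁻¹, so a lower bound on b⁻¹ is an upper bound on the spacing itself. The sketch assumes the CODATA value ħc ≈ 0.19733 GeV·fm; the function name is just for illustration.

```python
# Back-of-the-envelope conversion of the bound b^-1 >~ 1e11 GeV into a length.
# Assumption: natural units, with hbar*c = 0.1973269804 GeV*fm (CODATA value).
HBAR_C_GEV_FM = 0.1973269804
FM_TO_M = 1e-15  # one femtometre in metres


def lattice_spacing_fm(inverse_spacing_gev: float) -> float:
    """Return the lattice spacing b (in femtometres) for a given b^-1 (in GeV)."""
    return HBAR_C_GEV_FM / inverse_spacing_gev


# A lower bound on b^-1 translates into an upper bound on b.
b_max_fm = lattice_spacing_fm(1e11)
b_max_m = b_max_fm * FM_TO_M
print(f"b <~ {b_max_fm:.3e} fm = {b_max_m:.3e} m")  # b <~ 1.973e-12 fm = 1.973e-27 m
```

For a sense of scale, a proton is roughly a femtometre across, so any such grid would have to be about a trillion times finer than a proton.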

Posted by Christian Babski in Technology at 12:06

Defined tags for this entry: simulation, technology

Thursday, October 11. 2012

Rain Room, 2012

Rain Room is a hundred-square-metre field of falling water through which it is possible to walk, trusting that a path can be navigated, without being drenched in the process. As you progress through The Curve, the sound of water and a suggestion of moisture fill the air, before you are confronted by this carefully choreographed downpour that responds to your movements and presence.

-----

Water, injection moulded tiles, solenoid valves, pressure regulators, custom software, 3D tracking cameras, wooden frames, steel beams, hydraulic management system, grated floor

Wednesday, October 10. 2012

The mouse faces extinction as computer interaction evolves

-----

Swipe, swipe, pinch-zoom. Fifth-grader Josephine Nguyen is researching the definition of an adverb on her iPad and her fingers are flying across the screen. Her 20 classmates are hunched over their own tablets doing the same. Conspicuously absent from this modern scene of high-tech learning: a mouse.

Nguyen, who is 10, said she has used one before (once), but the clunky desktop computer/monitor/keyboard/mouse setup was too much for her. “It was slow,” she recalled, “and there were too many pieces.” Gilbert Vasquez, 6, is also baffled by the idea of an external pointing device named after a rodent. “I don’t know what that is,” he said with a shrug.

Nguyen and Vasquez, who attend public schools here, are part of the first generation growing up with a computer interface that is vastly different from the one the world has gotten used to since the dawn of the personal-computer era in the 1980s. This fall, for the first time, sales of iPads are cannibalizing sales of PCs in schools, according to Charles Wolf, an analyst for the investment research firm Needham & Co. And a growing number of even more sophisticated technologies for communicating with your computer, such as the Leap Motion boxes and Sony Vaio laptops that read hand motions, as well as voice recognition services such as Apple’s Siri, are beginning to make headway in the commercial market.

John Underkoffler, a former MIT researcher who was the adviser for the high-tech wizardry that Tom Cruise used in “Minority Report,” says that the transition is inevitable and that it could happen in as little as a few years. Underkoffler, chief scientist for Oblong, a Los Angeles-based company that has created a gesture-controlled interface for computer systems, said that for decades the mouse was the primary bridge to the virtual world, and that it was not always optimal. “Human hands and voice, if you use them in the digital world in the same way as the physical world, are incredibly expressive,” he said.
“If you let the plastic chunk that is a mouse drop away, you will be able to transmit information between you and machines in a very different, high-bandwidth way.”

This type of thinking is turning industrial product design on its head. Instead of focusing on a single device to access technology, innovators are expanding their horizons to gizmos that respond to body motions, the voice, fingers, eyes and even thoughts. Some devices can be accessed by multiple people at the same time. Keyboards might still be used for writing a letter, but designing, say, a landscaped garden might be more easily done with a digital pen, as would studying a map of Lisbon by hand gestures, or searching the Internet for Rihanna’s latest hits by voice. And the mouse, which many agree was a genius creation in its time, may end up as a relic in a museum.

The mouse is born

The first computer mouse, built at the Stanford Research Institute in Palo Alto, Calif., by Douglas Engelbart and Bill English in 1963, was just a block of wood fashioned with two wheels. It was just one of a number of interfaces the team experimented with. There were also foot pedals, head-pointing devices and knee-mounted joysticks. But the mouse proved to be the fastest and most accurate, and with the backing of Apple co-founder Steve Jobs (who bundled it with shipments of Lisa, the predecessor to the Macintosh, in the 1980s), the device suddenly became a mainstream phenomenon.

Engelbart’s daughter, Christina, a cultural anthropologist, said that her father was able to predict many trends in technology over the years, but that the one thing that has surprised him is how long the mouse has lasted. “He never assumed the mouse would be it,” said the younger Engelbart, who wrote her father’s biography. “He always figured there would be newer ways of exploring a computer.” She was 8 years old when her father invented the mouse.
Now 57, she says she is finally seeing glimpses of the next stage of computing with the surging popularity of the iPad. These days her two children, 20 and 23, do not use a mouse anymore.

San Antonio and LUCHA elementary schools in eastern San Jose, just 17 miles south of where Engelbart conducted his research, provide a glimpse of the future. The schools, which share a campus, have integrated iPod Touches and iPads into the curriculum for all 700 students. The teachers all get MacBook Airs with touch pads.

“Most children here have never seen a computer mouse,” said Hannah Tenpas, 24, a kindergarten teacher at San Antonio. Kindergartners as young as 4 use the iPod Touch to learn letter sounds. The older students use iPads to research historical information and prepare multimedia slide-show presentations about school rules. The intuitive touch-screen interface has allowed the school to introduce children to computers at an age that would have been impossible in the past, said San Antonio Elementary’s principal, Jason Sorich.

Even toddlers are able to manipulate a touch screen. A popular YouTube video shows a baby trying to swipe the pages of a fashion magazine that she assumes is a broken iPad. “For my one-year-old daughter, a magazine is an iPad that does not work. It will remain so for her whole life,” the creator of the video says in a slide at the end of the clip.

The iPad side of the brain

“The popularity of iPads and other tablets is changing how society interacts with information,” said Aniket Kittur, an assistant professor at the Human-Computer Interaction Institute at Carnegie Mellon University. “... Direct manipulation with our fingers, rather than mediated through a keyboard/mouse, is intuitive and easy for children to grasp.”

Underkoffler said that while desktop computers helped activate the language and abstract-thinking parts of a child’s brain, new interfaces are helping open the spatial part.
“Once our user interface can start to talk to us that way ... we sort of blow the barn doors off how we learn,” he said.

That may explain why iPads are becoming so popular in schools. Apple said in July that the iPad outsold the Mac 2 to 1 for the second consecutive quarter in the education market. In all, the company sold 17 million iPads in the April-to-June quarter; at the same time, mouse sales in the United States are down, some manufacturers say. “The adoption rate of iPad in education is something I’d never seen from any technology product in history,” Apple chief executive Tim Cook said in July.

At San Antonio Elementary and LUCHA, which started their $300,000 iPad and iPod experiment last school year, the school board president, Esau Herrera, said he is thrilled by the results. Test scores have gone up (although officials say they cannot directly attribute that to the new technology), and the level of engagement has increased. The schools are now debating what to do with the handful of legacy desktop PCs, each with its own keyboard and mouse, and whether they should bother teaching students to move a pointer around a monitor.

“Things are moving so fast,” said LUCHA Principal Kristin Burt, “that we’re not sure the computer and mouse will even be around when they get old enough to really use them.”

Tuesday, October 09. 2012

Meet the Nimble-Fingered Interface of the Future

-----


Microsoft's Kinect, a 3-D camera and software for gaming, has made a big impact since its launch in 2010. Eight million devices were sold in the product's first two months on the market as people clamored to play video games with their entire bodies in lieu of handheld controllers. But while Kinect is great for full-body gaming, it isn't useful as an interface for personal computing, in part because its algorithms can't quickly and accurately detect hand and finger movements. Now a San Francisco-based startup called 3Gear has developed a gesture interface that can track fast-moving fingers. Today the company will release an early version of its software to programmers. The setup requires two 3-D cameras positioned above the user to the right and left. The hope is that developers will create useful applications that will expand the reach of 3Gear's hand-tracking algorithms. Eventually, says Robert Wang, who cofounded the company, 3Gear's technology could be used by engineers to craft 3-D objects, by gamers who want precision play, by surgeons who need to manipulate 3-D data during operations, and by anyone who wants a computer to do her bidding with a wave of the finger. One problem with gestural interfaces—as well as touch-screen desktop displays—is that they can be uncomfortable to use. They sometimes lead to an ache dubbed "gorilla arm." As a result, Wang says, 3Gear focused on making its gesture interface practical and comfortable. "If I want to work at my desk and use gestures, I can't do that all day," he says. "It's not precise, and it's not ergonomic." The key, Wang says, is to use two 3-D cameras above the hands. They are currently rigged on a metal frame, but eventually could be clipped onto a monitor. A view from above means that hands can rest on a desk or stay on a keyboard. (While the 3Gear software development kit is free during its public beta, which lasts until November 30, developers must purchase their own hardware, including cameras and frame.) 
"Other projects have replaced touch screens with sensors that sit on the desk and point up toward the screen, still requiring the user to reach forward, away from the keyboard," says Daniel Wigdor, professor of computer science at the University of Toronto and author of Brave NUI World, a book about touch and gesture interfaces. "This solution tries to address that." 3Gear isn't alone in its desire to tackle the finer points of gesture tracking. Earlier this year, Microsoft released an update that enabled people who develop Kinect for Windows software to track head position, eyebrow location, and the shape of a mouth. Additionally, Israeli startup Omek, Belgian startup SoftKinetic, and a startup from San Francisco called Leap Motion—which claims its small, single-camera system will track movements to a hundredth of a millimeter—are all jockeying for a position in the fledgling gesture-interface market.

Monday, October 08. 2012

There Really Is a Smartphone Inside ‘EW Magazine’

Via Mashable

-----

They told us, but we did not believe them: The Oct. 5 print edition of Entertainment Weekly, which features a one-of-a-kind digital ad running video and live tweets, actually has a smartphone inside of it. A real, full-sized 3G cellphone inside a print magazine. The digital ad is designed to promote the CW network’s fresh lineup of action shows (The Arrow and Emily Owens, M.D.) and, when you open the magazine to the ad, the small LCD screen shows short clips of the two shows and then switches to live tweets from CW’s Twitter account.

When we spoke to CW representatives earlier this week, they did tell us that “the ad is powered by a custom-built, smartphone-like Android device with an LED screen and 3G connectivity; it was manufactured in China.” This is all true, though the device is far more than just “smartphone-like.” During our teardown, we discovered a smartphone-sized battery, a full QWERTY keyboard hidden under black plastic tape, a T-Mobile 3G card, a camera, speaker and a live USB port that will accept a mini USB cable, which you can then plug into a computer and recharge the phone. We could also see from the motherboard that the smartphone was built by Foxconn. You may have heard of it.

Once we extracted the phone from its clear plastic housing (which was sandwiched between two rather thick card-stock pages), we were able to use a screwdriver to close the open contacts on the touch pad and access the on-screen Android menu, which has a full complement of apps. It wasn’t easy, but we even made a phone call. That’s right, there’s nothing wrong with this phone, other than it being old, underpowered and partially in Chinese. Oh, yes, and the fact that it’s jammed inside a print magazine.
Mashable Senior Tech Analyst Christina Warren, who assisted in our teardown, did some research (including using the number on the motherboard) and is now fairly certain that the guts come from this $86 ABO smartphone. Don’t worry, it’s unlikely that it cost the CW anywhere near that much. Entertainment Weekly is only producing 1,000 of these digital advertising-enhanced issues, so if you want a nearly free smartphone that, with a good deal of nudging, actually works, you’d better run, not walk, to your nearest newsstand. In the meantime, we’ll keep playing with the phone to see if we can make it perform other tricks, like calling the phone number we desire and crafting our own tweets. We did finally get the camera working, but without a lens over it, the images are a blurry mess. More challenges for what I’m officially naming our Entertainment Weekly Digital Ad/Smartphone Print Insert Hackathon!

Thursday, October 04. 2012

How Google Builds Its Maps—and What It Means for the Future of Everything

Via The Atlantic

-----

Behind every Google Map, there is a much more complex map that's the key to your queries but hidden from your view. The deep map contains the logic of places: their no-left-turns and freeway on-ramps, speed limits and traffic conditions. This is the data that you're drawing from when you ask Google to navigate you from point A to point B -- and last week, Google showed me the internal map and demonstrated how it was built. It's the first time the company has let anyone watch how the project it calls GT, or "Ground Truth," actually works. Google opened up at a key moment in its evolution. The company began as an online search company that made money almost exclusively from selling ads based on what you were querying for. But then the mobile world exploded. Where you're searching from has become almost as important as what you're searching for. Google responded by creating an operating system, brand, and ecosystem in Android that has become the only significant rival to Apple's iOS. And for good reason. If Google's mission is to organize all the world's information, the most important challenge -- far larger than indexing the web -- is to take the world's physical information and make it accessible and useful. "If you look at the offline world, the real world in which we live, that information is not entirely online," Manik Gupta, the senior product manager for Google Maps, told me. "Increasingly as we go about our lives, we are trying to bridge that gap between what we see in the real world and [the online world], and Maps really plays that part." This is not just a theoretical concern. Mapping systems matter on phones precisely because they are the interface between the offline and online worlds. If you're at all like me, you use mapping more than any other application except for the communications suite (phone, email, social networks, and text messaging). 
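To make "the logic of places" a little more concrete, here is a toy sketch of why a rule like a no-left-turn matters to routing. It is our illustration, not how Google's router works (the article doesn't say): a Dijkstra shortest-path search whose state is the current node plus the node you arrived from, so that a forbidden maneuver can be skipped. The road network, node names, travel times and the `route` helper are all hypothetical.

```python
import heapq
import itertools

# Hypothetical toy road network: directed segments with travel times in seconds.
ROADS = {
    ("A", "B"): 30,
    ("B", "C"): 40,
    ("B", "D"): 20,
    ("D", "C"): 35,
    ("C", "E"): 25,
}

# Hypothetical turn restriction: arriving at B from A, you may not continue to C
# (think of a "no left turn" sign at intersection B).
NO_TURNS = {("A", "B", "C")}


def route(start, goal, roads=ROADS, banned=NO_TURNS):
    """Dijkstra's algorithm over (node, previous node) states, so that turn
    restrictions, which depend on the direction of approach, can be enforced.
    Returns (total_seconds, path), or None if the goal is unreachable."""
    adjacency = {}
    for (u, v), seconds in roads.items():
        adjacency.setdefault(u, []).append((v, seconds))

    tie = itertools.count()  # tie-breaker so heapq never compares nodes or paths
    frontier = [(0, next(tie), start, None, [start])]
    settled = set()
    while frontier:
        cost, _, node, prev, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        if (node, prev) in settled:
            continue
        settled.add((node, prev))
        for nxt, seconds in adjacency.get(node, []):
            if (prev, node, nxt) in banned:
                continue  # this maneuver is forbidden from this approach
            heapq.heappush(frontier, (cost + seconds, next(tie), nxt, node, path + [nxt]))
    return None


print(route("A", "E"))  # (110, ['A', 'B', 'D', 'C', 'E'])
```

Without the restriction, `route("A", "E", banned=set())` takes the turn at B directly (A → B → C → E, 95 seconds); with it, the search detours through D at 110 seconds. Keying the search on the approach direction rather than the node alone is one simple way to model turn rules; a production router would need a far richer model of the road.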
Google is locked in a battle with the world's largest company, Apple, about who will control the future of mobile phones. Whereas Apple's strengths are in product design, supply chain management, and retail marketing, Google's most obvious realm of competitive advantage is in information. Geo data -- and the apps built to use it -- are where Google can win just by being Google. That didn't matter on previous generations of iPhones because they used Google Maps, but now Apple's created its own service. How the two operating systems incorporate geo data and present it to users could become a key battleground in the phone wars. But that would entail actually building a better map.

***

The office where Google has been building the best representation of the world is not a remarkable place. It has all the free food, ping pong, and Google Maps-inspired Christoph Niemann cartoons that you'd expect, but it's still a low-slung office building just off the 101 in Mountain View in the burbs. I was slated to meet with Gupta and the engineering ringleader on his team, former NASA engineer Michael Weiss-Malik, who'd spent his 20 percent time working on Google Mars, and Nick Volmar, an "operator" who actually massages map data.

"So you want to make a map," Weiss-Malik tells me as we sit down in front of a massive monitor. "There are a couple of steps. You acquire data through partners. You do a bunch of engineering on that data to get it into the right format and conflate it with other sources of data, and then you do a bunch of operations, which is what this tool is about, to hand massage the data. And out the other end pops something that is higher quality than the sum of its parts."

This is what they started out with, the TIGER data from the US Census Bureau (though the base layer could and does come from a variety of sources in different countries).

On first inspection, this data looks great. The roads look like they are all there and you've got the freeways differentiated. This is a good map to the untrained eye. But let's look closer. There are issues where the digital data does not match the physical world. I've circled a few obvious ones below. And that's just from comparing the map to the satellite imagery. But there are also a variety of other tools at Google's disposal. One is bringing in data from other sources, say the US Geological Survey. But Google's Ground Truthers can also bring another exclusive asset to bear on the maps problem: the Street View cars' tracks and imagery. In keeping with Google's more-data-is-better-data mantra, the maps team, largely driven by Street View, is publishing more imagery data every two weeks than Google possessed total in 2006.*

Let's step back a tiny bit to recall with wonderment the idea that a single company decided to drive cars with custom cameras over every road they could access. Google is up to five million miles driven now. Each drive generates two kinds of really useful data for mapping. One is the actual tracks the cars have taken; these are proof-positive that certain routes can be taken. The other is all the photos. And what's significant about the photographs in Street View is that Google can run algorithms that extract the traffic signs and can even paste them onto the deep map within their Atlas tool. So, for a particularly complicated intersection like this one in downtown San Francisco, that could look like this:


Street View wasn't built to create maps like this, but the geo team quickly realized that computer vision could get them incredible data for ground truthing their maps. Not to detour too much, but what you see above is just the beginning of how Google is going to use Street View imagery. Think of them as the early web crawlers (remember those?) going out in the world, looking for the words on pages. That's what Street View is doing. One of its first uses is finding street signs (and addresses) so that Google's maps can better understand the logic of human transportation systems. But as computer vision and OCR improve, any word that is visible from a road will become a part of Google's index of the physical world.

Later in the day, Google Maps VP Brian McClendon put it like this: "We can actually organize the world's physical written information if we can OCR it and place it," McClendon said. "We use that to create our maps right now by extracting street names and addresses, but there is a lot more there." More like what? "We already have what we call 'view codes' for 6 million businesses and 20 million addresses, where we know exactly what we're looking at," McClendon continued. "We're able to use logo matching and find out where are the Kentucky Fried Chicken signs ... We're able to identify and make a semantic understanding of all the pixels we've acquired. That's fundamental to what we do." For now, though, computer vision transforming Street View images directly into geo-understanding remains in the future. The best way to figure out if you can make a left turn at a particular intersection is still to have a person look at a sign -- whether that's a human driving or a human looking at an image generated by a Street View car. There is an analogy to be made to one of Google's other impressive projects: Google Translate. What looks like machine intelligence is actually only a recombination of human intelligence. Translate relies on massive bodies of text that have been translated into different languages by humans; it then is able to extract words and phrases that match up. The algorithms are not actually that complex, but they work because of the massive amounts of data (i.e. human intelligence) that go into the task on the front end. Google Maps has executed a similar operation. Humans are coding every bit of the logic of the road onto a representation of the world so that computers can simply duplicate (infinitely, instantly) the judgments that a person already made. This reality is incarnated in Nick Volmar, the operator who has been showing off Atlas while Weiss-Malik and Gupta explain it. 
He probably uses twenty-five keyboard shortcuts switching between types of data on the map and he shows the kind of twitchy speed that I associate with long-time designers working with Adobe products or professional Starcraft players. Volmar has clearly spent thousands of hours working with this data. Weiss-Malik told me that it takes hundreds of operators to map a country. (Rumor has it many of these people work in the Bangalore office, out of which Gupta was promoted.) The sheer amount of human effort that goes into Google's maps is just mind-boggling. Every road that you see slightly askew in the top image has been hand-massaged by a human.

The most telling moment for me came when we looked at a couple of the several thousand user reports of problems with Google Maps that come in every day. The Geo team tries to address the majority of fixable problems within minutes. One complaint reported that Google did not show a new roundabout that had been built in a rural part of the country. The satellite imagery did not show the change, but a Street View car had recently driven down the street and its tracks showed the new road perfectly.

Volmar began to fix the map, quickly drawing the new road and connecting it to the existing infrastructure. In his haste (and perhaps with the added pressure of three people watching his every move), he did not draw a perfect circle of points. Weiss-Malik and I detoured into another conversation for a couple of minutes. By the time I looked back at the screen, Volmar had redrawn the circle with perfect precision and upgraded a few other things while he was at it. The actions were impressively automatic. This is an operation that promotes perfectionism. And that's how you get your maps to look like this:

Some details are worth pointing out. At the top center, trails have been mapped out and coded as places for walking. All the parking lots have been mapped out. All the little roads, say, to the left of the small dirt patch on the right, have also been coded. Several of the actual buildings have been outlined. Down at the bottom left, a road has been marked as a no-go. At each and every intersection, there are arrows that delineate precisely where cars can and cannot turn.

Now imagine doing this for every tile on Google's map in the United States and 30 other countries over the last four years. Every roundabout perfectly circular, every intersection with the correct logic. Every new development. Every one-way street. This is a task of nearly unimaginable scale. This is not something you can put together with a few dozen smart engineers.

I came away convinced that the geographic data Google has assembled is not likely to be matched by any other company. The secret to this success isn't, as you might expect, Google's facility with data, but rather its willingness to commit humans to combining and cleaning data about the physical world. Google's map offerings build in the human intelligence on the front end, and that's what allows its computers to tell you the best route from San Francisco to Boston.

***

It's probably better not to think of Google Maps as a thing like a paper map. The jump from paper maps to geographic information systems is like the jump from the abacus to the computer. "I honestly think we're seeing a more profound change, for map-making, than the switch from manuscript to print in the Renaissance," University of London cartographic historian Jerry Brotton told the Sydney Morning Herald. "That was huge. But this is bigger." The maps we used to keep folded in our glove compartments were a collection of lines and shapes that we overlaid with human intelligence. Now, as we've seen, a map is a collection of lines and shapes with Nick Volmar's (and hundreds of others') intelligence encoded within.

It's common when we discuss the future of maps to reference the Borgesian dream of a 1:1 map of the entire world. It seems like a ridiculous notion that we would need a complete representation of the world when we already have the world itself. But to take scholar Nathan Jurgenson's conception of augmented reality seriously, we would have to believe that every physical space is, in his words, "interpenetrated" with information. All physical spaces already are also informational spaces. We humans all hold a Borgesian map in our heads of the places we know, and we use it to navigate and compute physical space. Google's strategy is to bring all our mental maps together and process them into accessible, useful forms.

Google's MapMaker product makes that ambition clear. Managed by Gupta during his time in India, it's the "bottom up" version of Ground Truth. It's a publicly accessible way to edit Google Maps by adding landmarks and data about your piece of the world. It's a way of sucking data out of human brains and onto the Internet. And it's a lot like Google's open competitor, OpenStreetMap, which has proven that it, too, can harness the crowd's intelligence.
As we slip and slide into a world where our augmented reality is increasingly visible to us both off- and online, Google's geographic data may become its most valuable asset. Not because of this data alone, but because location data makes everything else Google does and knows more valuable. Or as my friend and sci-fi novelist Robin Sloan put it to me, "I maintain that this is Google's core asset. In 50 years, Google will be the self-driving car company (powered by this deep map of the world) and, oh, P.S. they still have a search engine somewhere." Of course, they will always need one more piece of geographic information to make all this effort worthwhile: You. Where you are, that is. Your location is the current that makes Google's giant geodata machine run. They've built this whole playground as an elaborate lure for you. As good and smart and useful as it is, good luck resisting taking the bait.

* Due to a transcription error, an earlier version of this story stated that Google published 20PB of imagery data every two weeks.

Wednesday, October 03. 2012

Oculus Rift: VR gaming headset takes to Kickstarter

Via Edge

-----

UPDATE: Oculus Rift is not, as reported below, John Carmack's creation. The Id co-founder tweeted last night to confirm: "I have no direct ties with Oculus; I endorse it is a wonderful advance in VR tech, but I'm not 'backing it'."

The virtual reality headset John Carmack was showing off at E3 is now on Kickstarter. Named Oculus Rift, it has already raised three times its $250,000 goal since the project went live yesterday. The project pitches Oculus Rift as the first truly functional and affordable VR headset, which "takes 3D gaming to the next level." The headset "is designed to maximise immersion, comfort, and pure, uninhibited fun, at a price everyone can afford." Given Carmack's involvement it's little surprise that the project has, at the time of writing, raised $777,130 from over 3,000 backers. Some of the most respected people in the industry have also thrown their weight behind Oculus Rift, including Gabe Newell, who in a quote on the project page is full of praise for Oculus founder Palmer Luckey. "It looks incredibly exciting," the Valve president says. "If anybody's going to tackle this set of hard problems, we think that Palmer's going to do it. So we'd strongly encourage you to support this Kickstarter." Also on board are Epic Games design director Cliff Bleszinski ("I'm a believer"), Unity Technologies CEO David Helgason ("This will be the coolest way to experience games in the future"), and Michael Abrash, a former colleague of Carmack at Id and now one of Valve's more prominent thinkers, who recently revealed the company's work on wearable computing. Oculus Rift will be bundled with a copy of Doom 3 BFG, Id's revamp of its 2004 shooter and the first Rift-compatible game. Kickstarter reward tiers begin at $10, though the serious stuff is reserved for those pledging larger amounts. $275 will net you an unassembled Rift prototype; $300 gets you early access to the developer kit.
The SDK is a work in progress, but Oculus plans to integrate Unreal Engine and Unity, opening up a host of possible platforms, including PC and mobile. Those paying $5,000 or more (three people have done so already) get all rewards plus a visit to the Oculus lab. One of the three is Markus "Notch" Persson, who tweeted last night to reveal he had pledged $10,000 and that there was a good chance Mojang's games would be supported. And let's be honest, a headset-controlled Minecraft is quite the prospect. It's a remarkable turn of events coming just months after the headset's E3 debut. Carmack admitted the prototype was "literally held together with duct tape", and it certainly didn't seem like it was mere months away from becoming a serious proposition. For more, we recommend PC Gamer's video of John Carmack's VR headset. David Boddington played Doom 3 BFG using the device and came away resoundingly impressed, describing it as "unlike any other gaming experience I've had".
