Tuesday, October 02. 2012

Questions abound as malicious phpMyAdmin backdoor found on SourceForge site

Via Ars Technica

-----

Developers of phpMyAdmin warned users they may be running a malicious version of the open-source software package after discovering backdoor code was snuck into a package being distributed over the widely used SourceForge repository.

The backdoor contains code that allows remote attackers to take control of the underlying server running the modified phpMyAdmin, which is a Web-based tool for managing MySQL databases. The PHP script is found in a file named server_sync.php, and it reads PHP code embedded in standard POST Web requests and then executes it. That allows anyone who knows the backdoor is present to execute code of his choice. HD Moore, CSO of Rapid7 and chief architect of the Metasploit exploit package for penetration testers and hackers, told Ars a module has already been added that tests for the vulnerability.

The backdoor is concerning because it was distributed on one of the official mirrors for SourceForge, which hosts more than 324,000 open-source projects, serves more than 46 million consumers, and handles more than four million downloads each day. SourceForge officials are still investigating the breach, so crucial questions remain unanswered. It's still unclear, for instance, if the compromised server hosted other maliciously modified software packages, if other official SourceForge mirror sites were also affected, and if the central repository that feeds these mirror sites might also have been attacked.

"If that one mirror was compromised, nearly every SourceForge package on that mirror could have been backdoored, too," Moore said. "So you're looking at not just phpMyAdmin, but 12,000 other projects. If that one mirror was compromised and other projects were modified this isn't just 1,000 people. This is up to a couple hundred thousand."

An advisory posted Tuesday on phpMyAdmin said: "One of the SourceForge.net mirrors, namely cdnetworks-kr-1, was being used to distribute a modified archive of phpMyAdmin, which includes a backdoor. This backdoor is located in file server_sync.php and allows an attacker to remotely execute PHP code. Another file, js/cross_framing_protection.js, has also been modified." phpMyAdmin officials didn't respond to e-mails seeking to learn how long the backdoored version had been available and how many people have downloaded it.

Update: In a blog post, SourceForge officials said they believe the phpMyAdmin-3.5.2.2-all-languages.zip package was the only modified file on the cdnetworks mirror site, but they are continuing to investigate to make sure. Logs indicate that about 400 people downloaded the malicious package. The provider of the Korea-based mirror has confirmed the breach, which is believed to have happened around September 22, and indicated it was limited to that single mirror site. The machine has been taken out of rotation.

"Downloaders are at risk only if a corrupt copy of this software was obtained, installed on a server, and serving was enabled," the SourceForge post said. "Examination of web logs and other server data should help confirm whether this backdoor was accessed."

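The web-log check SourceForge recommends can be made concrete. The sketch below assumes an Apache/Nginx-style "combined" access log; the function name `find_backdoor_posts` and the sample log lines are hypothetical. It flags POST requests whose path ends in server_sync.php, the file named in the phpMyAdmin advisory. A hit is a lead worth investigating rather than proof of compromise, and real log formats vary between servers.

```python
import re

# Assumed "combined" log format:
# IP ident user [timestamp] "METHOD PATH PROTOCOL" status size "referer" "agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+)[^"]*" (?P<status>\d{3})'
)

def find_backdoor_posts(lines, target="server_sync.php"):
    """Return (ip, time, path, status) for every POST to the target file."""
    hits = []
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines that are not in the assumed format
        path = match.group("path").split("?", 1)[0]  # drop any query string
        filename = path.rsplit("/", 1)[-1]
        if match.group("method") == "POST" and filename == target:
            hits.append((match.group("ip"), match.group("time"),
                         path, int(match.group("status"))))
    return hits

# Hypothetical log lines for illustration.
sample = [
    '203.0.113.7 - - [22/Sep/2012:10:00:00 +0000] '
    '"POST /phpmyadmin/server_sync.php HTTP/1.1" 200 512 "-" "curl/7.21"',
    '198.51.100.2 - - [22/Sep/2012:10:05:00 +0000] '
    '"GET /phpmyadmin/index.php HTTP/1.1" 200 1024 "-" "Mozilla/5.0"',
]
for hit in find_backdoor_posts(sample):
    print(hit)
```

Only POSTs are flagged because the advisory describes the backdoor as reading code from POST requests; widening the check to other methods is a one-line change.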
It's not the first time a widely used open-source project has been hit by a breach affecting the security of its many downstream users. In June of last year, WordPress required all account holders on WordPress.org to change their passwords following the discovery that hackers had contaminated it with malicious software. Three months earlier, maintainers of the PHP programming language spent several days scouring their source code for malicious modifications after discovering the security of one of their servers had been breached.

A three-day security breach in 2010 on ProFTPD caused users who downloaded the package during that time to be infected with a malicious backdoor. The main source-code repository for the Free Software Foundation was briefly shuttered that same year following the discovery of an attack that compromised some of the website's account passwords and may have allowed unfettered administrative access. And last August, multiple servers used to maintain and distribute the Linux operating system were infected with malware that gained root system access, although maintainers said the repository was unaffected.

Monday, October 01. 2012

HP launches Open webOS 1.0

Via SlashGear

-----

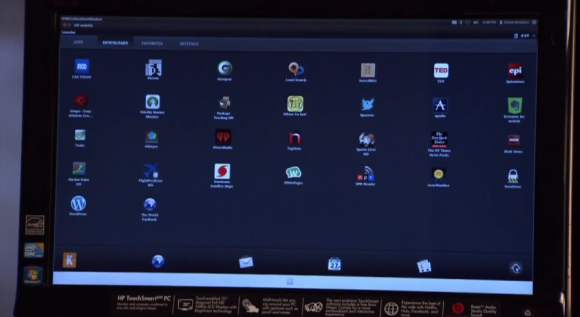

HP’s TouchPad and Palm devices may be long gone, but webOS (the mobile OS these devices ran) has stayed alive and well despite its hardware extinction, mostly thanks to its open-source status. Open webOS, as it’s now called, went into beta in August, and now, a month later, a final stable build is ready for consumption as version 1.0.

The 1.0 release brings changes that the Open webOS team hopes will give developers major new capabilities. The team also notes that over 75 Open webOS components have been delivered over the past nine months (totaling over 450,000 lines of code), which means that, with today’s 1.0 release, Open webOS can now be ported to new devices.

In a video, Open webOS architect Steve Winston demos the operating system on an HP TouchSmart all-in-one PC. He mentions that it took the team just “a couple of days” to port Open webOS to the PC in front of him. The user interface doesn’t seem to be performing super smoothly, but you can’t really expect more out of a 1.0 release.

Winston says that possible uses for Open webOS include kiosk applications in places like hotels, and since Open webOS is designed to work on phones, tablets, and PCs, it could become an all-in-one solution for kiosk or customer service platforms for businesses. Obviously, version 1.0 is just the first step; the Open webOS team is only getting started with this project, and it expects to keep improving it and adding new features as time goes on.

Watch 32 discordant metronomes achieve synchrony in a matter of minutes

Via io9

-----

If you place 32 metronomes on a static object and set them rocking out of phase with one another, they will remain that way indefinitely. Place them on a moveable surface, however, and something very interesting (and very mesmerizing) happens.

The metronomes in this video fall into the latter camp. Energy from the motion of one ticking metronome can affect the motion of every metronome around it, while the motion of every other metronome affects the motion of our original metronome right back. All this inter-metronome "communication" is facilitated by the board, which serves as an energetic intermediary between all the metronomes that rest upon its surface. The metronomes in this video (which are really just pendulums, or, if you want to get really technical, oscillators) are said to be "coupled."

The math and physics surrounding coupled oscillators are actually relevant to a variety of scientific phenomena, including the transfer of sound and thermal conductivity. For a much more detailed explanation of how this works, and how to try it for yourself, check out this excellent video by condensed matter physicist Adam Milcovich.
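Synchronization like this is often studied with the Kuramoto model, a common textbook idealization of coupled oscillators in which each oscillator is nudged toward the group's average phase. The sketch below uses that swapped-in model, not the mechanics of the actual board: it assumes 32 metronomes with natural frequencies spread slightly around 1 Hz, and a hypothetical coupling strength `coupling` (K) standing in for how freely the board moves. The order parameter r runs from near 0 (scattered phases) to 1 (perfect synchrony); the function names are illustrative.

```python
import cmath
import math
import random

def order_parameter(phases):
    """Kuramoto order parameter r: near 0 when scattered, 1 when in step."""
    return abs(sum(cmath.exp(1j * th) for th in phases) / len(phases))

def simulate(n=32, coupling=2.0, spread=0.1, base_freq=2 * math.pi,
             dt=0.01, t_end=50.0, seed=1):
    """Euler-integrate the Kuramoto model and return the final r."""
    rng = random.Random(seed)
    # Each metronome ticks at a slightly different natural frequency (rad/s).
    omegas = [base_freq + rng.uniform(-spread, spread) for _ in range(n)]
    # Start them at random phases, i.e. out of step with one another.
    phases = [rng.uniform(0, 2 * math.pi) for _ in range(n)]
    for _ in range(round(t_end / dt)):
        # Mean field z = r * e^{i psi}: the board's "average" swing.
        z = sum(cmath.exp(1j * th) for th in phases) / n
        # d(theta_i)/dt = omega_i + K * r * sin(psi - theta_i)
        phases = [th + dt * (w + coupling * (z * cmath.exp(-1j * th)).imag)
                  for th, w in zip(phases, omegas)]
    return order_parameter(phases)

print(f"rigid table (K=0):  r = {simulate(coupling=0.0):.2f}")
print(f"moving board (K=2): r = {simulate(coupling=2.0):.2f}")
```

Both runs share the same seed, so they start from identical phases and frequencies; only the coupling differs, which mirrors the static-object versus moveable-surface contrast above.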

Tuesday, September 25. 2012

Power, Pollution and the Internet

Via New York Times

-----

SANTA CLARA, Calif. — Jeff Rothschild’s machines at Facebook had a problem he knew he had to solve immediately. They were about to melt.

The company had been packing a 40-by-60-foot rental space here with racks of computer servers that were needed to store and process information from members’ accounts. The electricity pouring into the computers was overheating Ethernet sockets and other crucial components.

Thinking fast, Mr. Rothschild, the company’s engineering chief, took some employees on an expedition to buy every fan they could find — “We cleaned out all of the Walgreens in the area,” he said — to blast cool air at the equipment and prevent the Web site from going down.

That was in early 2006, when Facebook had a quaint 10 million or so users and the one main server site. Today, the information generated by nearly one billion people requires outsize versions of these facilities, called data centers, with rows and rows of servers spread over hundreds of thousands of square feet, and all with industrial cooling systems.

They are a mere fraction of the tens of thousands of data centers that now exist to support the overall explosion of digital information. Stupendous amounts of data are set in motion each day as, with an innocuous click or tap, people download movies on iTunes, check credit card balances through Visa’s Web site, send Yahoo e-mail with files attached, buy products on Amazon, post on Twitter or read newspapers online.

A yearlong examination by The New York Times has revealed that this foundation of the information industry is sharply at odds with its image of sleek efficiency and environmental friendliness.

Most data centers, by design, consume vast amounts of energy in an incongruously wasteful manner, interviews and documents show. Online companies typically run their facilities at maximum capacity around the clock, whatever the demand. As a result, data centers can waste 90 percent or more of the electricity they pull off the grid, The Times found.

To guard against a power failure, they further rely on banks of generators that emit diesel exhaust. The pollution from data centers has increasingly been cited by the authorities for violating clean air regulations, documents show. In Silicon Valley, many data centers appear on the state government’s Toxic Air Contaminant Inventory, a roster of the area’s top stationary diesel polluters.

Worldwide, the digital warehouses use about 30 billion watts of electricity, roughly equivalent to the output of 30 nuclear power plants, according to estimates industry experts compiled for The Times. Data centers in the United States account for one-quarter to one-third of that load, the estimates show.

“It’s staggering for most people, even people in the industry, to understand the numbers, the sheer size of these systems,” said Peter Gross, who helped design hundreds of data centers. “A single data center can take more power than a medium-size town.”

Energy efficiency varies widely from company to company. But at the request of The Times, the consulting firm McKinsey & Company analyzed energy use by data centers and found that, on average, they were using only 6 percent to 12 percent of the electricity powering their servers to perform computations. The rest was essentially used to keep servers idling and ready in case of a surge in activity that could slow or crash their operations.
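The headline figures in the preceding paragraphs reduce to simple arithmetic. A minimal sketch, assuming roughly 1 gigawatt of output per large nuclear plant (an assumed round number, not a figure from the article); the helper name `idle_share` is hypothetical:

```python
WORLD_DATA_CENTER_LOAD_W = 30e9   # about 30 billion watts, per the estimates above
NUCLEAR_PLANT_OUTPUT_W = 1e9      # assumption: ~1 GW per large nuclear plant

def idle_share(useful_fraction):
    """Fraction of server power not spent on computation."""
    return 1.0 - useful_fraction

plants = WORLD_DATA_CENTER_LOAD_W / NUCLEAR_PLANT_OUTPUT_W
us_low = WORLD_DATA_CENTER_LOAD_W / 4   # one-quarter of the load
us_high = WORLD_DATA_CENTER_LOAD_W / 3  # one-third of the load

print(f"equivalent plants: {plants:.0f}")
print(f"U.S. share: {us_low / 1e9:.1f} to {us_high / 1e9:.1f} GW")
# McKinsey's 6 to 12 percent utilization leaves 88 to 94 percent idle.
print(f"idle share: {idle_share(0.12):.0%} to {idle_share(0.06):.0%}")
```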

A server is a sort of bulked-up desktop computer, minus a screen and keyboard, that contains chips to process data. The study sampled about 20,000 servers in about 70 large data centers spanning the commercial gamut: drug companies, military contractors, banks, media companies and government agencies.

“This is an industry dirty secret, and no one wants to be the first to say mea culpa,” said a senior industry executive who asked not to be identified to protect his company’s reputation. “If we were a manufacturing industry, we’d be out of business straightaway.”

These physical realities of data are far from the mythology of the Internet: where lives are lived in the “virtual” world and all manner of memory is stored in “the cloud.”

The inefficient use of power is largely driven by a symbiotic relationship between users who demand an instantaneous response to the click of a mouse and companies that put their business at risk if they fail to meet that expectation.

Even running electricity at full throttle has not been enough to satisfy the industry. In addition to generators, most large data centers contain banks of huge, spinning flywheels or thousands of lead-acid batteries — many of them similar to automobile batteries — to power the computers in case of a grid failure as brief as a few hundredths of a second, an interruption that could crash the servers.

“It’s a waste,” said Dennis P. Symanski, a senior researcher at the Electric Power Research Institute, a nonprofit industry group. “It’s too many insurance policies.”

Monday, September 24. 2012

Get ready for 'laptabs' to proliferate in a Windows 8 world

Via DVICE

-----

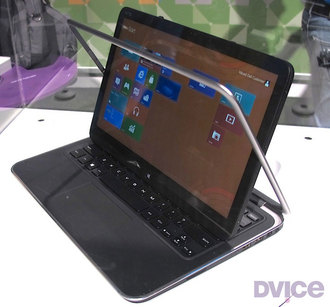

Image Credit: Stewart Wolpin/DVICE

A few months after the iPad came out, computer makers who had made convertible laptops started phasing them out, believing the iPad had eliminated the need for them. What's old is new again: several computer makers are planning to introduce new Windows 8 convertible laptops soon after Microsoft makes the OS official on October 26.

I agree with the assessment that the iPad undercut the need for convertible laptops; if you need a keyboard with the lighter-than-a-convertible iPad, or even an Android tablet, you could buy an auxiliary Bluetooth QWERTY keypad. In fact, your bag would probably be lighter with an iPad and an ultrabook both contained therein, as opposed to a single convertible laptop.

But if these new hybrids succeed, we can't keep calling them "convertible laptops" (for one thing, it takes too long to type). So, I'm inventing a new name for these sometimes-a-laptop, sometimes-a-tablet combo computers.

"Laptabs!"

It's a name I'd trademark if I could (I wish I'd made up "phablet," for instance). Please cite me if you use it. (And don't get dyslexically clever and start calling them "tablaps" — I'm claiming that portmanteau, too.)

Here's the convertible rundown on the five laptabs I found during last month's IFA electronics showcase in Berlin, Germany; some have sliding tops and some have detachable tabs, but they're all proper laptabs.

1. Dell XPS Duo 12: It looks like a regular clamshell at first glance, but the 12.5-inch screen pops out and swivels 360 degrees on its central horizontal axis inside the machined aluminum frame, then lies back-to-front over the keyboard to create one fat tablet. The idea isn't exactly original: the company put out a 10.1-inch Inspiron Duo netbook a few years back with the same swinging configuration, but it was discontinued when the iPad also killed the netbook.

2. HP Envy X2: Here's a detachable-tablet laptab with an 11.6-inch snap-off screen. Combined with its keyboard, the X2 weighs a whopping 3.1 pounds; the separated screen/tablet tips the scales at just 1.5 pounds. Its heavier-than-thou nature stems from HP building a battery into both the X2's keyboard and the screen/tablet. HP didn't have a battery life rating, only saying the dual-battery configuration means battery life will naturally be massive.

3. Samsung ATIV Smart PC/Smart PC Pro: Like the HP, Samsung's offering has an 11.6-inch screen that pops off the QWERTY keypad. The Pro sports an Intel Core i5 processor, measures 11.9 mm thick when closed and will run for eight hours on a single charge, while its sibling is endowed with an Intel Core i3 chip, measures a relatively svelte 9.9 mm thick and operates for a healthy 13.5 hours on its battery.

4. Sony VAIO Duo 11: Isn't it odd that Sony and Dell came up with similar laptab names? Or maybe not. The VAIO Duo 11 is equipped with an 11.6-inch touchscreen that slides flat, then back-to-front, so it lies back-down on top of the keypad. You also get a digitizer stylus. Sony's Duo doesn't offer any weight advantage over an ultrabook, though, which I think poses a problem for most of these laptabs. For instance, both the Intel i3 and i5 Duo 11 editions weigh nearly half a pound more than Apple's 11-inch MacBook Air and, at 2.86 pounds, are just 0.1 pounds lighter than the 13-inch MacBook Air.

5. Toshiba Satellite U920t: Like the Sony Duo, the Satellite U920t is a back-to-front slider, but it lacks the seemingly overcomplicated mechanism of its sliding laptab competitor. Instead, you lay the U920t's 12.5-inch screen flat, then slide it over the keyboard. While easier to slide, it's a bit thick at 19.9 mm compared to the Duo 11's 17.8 mm, and it weighs a heftier 3.2 pounds.

Choices, Choices And More Choices

So: a light ultrabook, or a heavier laptab? And once you pop the tab top off the HP and Samsung when mobile, your bag is still weighed down by the keyboard, negating the whole advantage of carrying a tablet.

In other words, laptabs carry all the disadvantages of a heavier laptop with none of the weight advantages of a tablet. Perhaps there are some functional advantages to having both; I just don't think they're worth a sore back.


All images above by Stewart Wolpin for DVICE.

MakerBot shows off next-gen Replicator 2 'desktop' 3D printer

Via DVICE

-----

The MakerBot Replicator 2 Desktop 3D Printer. (Image Credit: Kevin Hall/DVICE)

The new Replicator 2 looks good on a shelf, but it also boasts two notable upgrades: it's insanely accurate, with a 100-micron layer resolution, and it can build objects 37 percent larger than its predecessor's without adding much bulk.

In a small eatery in Brooklyn, New York, MakerBot CEO Bre Pettis unveiled the next-generation Replicator 2, which he presented in terms of Apple-like evolution in design. MakerBot's much smaller DIY 3D printing kits were its own Apple IIe; from them comes the all-steel Replicator 2. The fourth-generation MakerBot printer ditches the wood for a sleek hard-body chassis, and is "designed for the desktop of an engineer, researcher, creative professional, or anyone who loves to make things," according to the company.

Of course, MakerBot, which helps enable a robust community of 3D printing enthusiasts, is all about the idea of 3D printing at home. By calling them desktop 3D printers, MakerBot alludes to 1984's Apple Macintosh, a computer designed to be affordable. The Macintosh was introduced at a fraction of the cost of the Lisa, which came before it and was Apple's first computer with an easy-to-use graphical interface.

Each iteration of MakerBot's 3D printing technology has tried to find a sweet spot between utility and affordability. Usually, affordability comes at the cost of two things: fine layer resolution (the first Replicator had a 270-micron layer resolution, meaning layers nearly three times as thick) and build area. The Replicator 2 prioritizes both, and while it has only a single-color extruder, it can still be sold at an impressive $2,100.

Well, to really be a Macintosh, the Replicator 2 also needs a user-friendly GUI. To that end, MakerBot is announcing MakerWare, a simple program that will allow users to arrange shapes and resize them on the fly. If you don't need precise sizes, you can just wing it and build a shape as large as you like within the Replicator 2's 410-cubic-inch build volume. More advanced users can lay out an array of parts across the build space with the tool and print multiple smaller components at once.

Even with all that going for it, $2,100 isn't a very Macintosh price; the original Macintosh was far cheaper than the Lisa before it. MakerBot's 3D printers have gotten more expensive over the years: the $750 Cupcake, the $1,225 Thing-O-Matic, the $1,749 Replicator (which the company still sells), and now the $2,100 Replicator 2. With that in mind, maybe it's not price that will determine whether 3D printers find a spot in every home, but layer resolution.

At a 100-micron layer resolution, the number of layers the Replicator 2 puts down to build an object is impressive. To illustrate, each layer is 100 microns, or 0.1 millimeters, thick. A six-inch-tall object, which the printer can manage, would be made of at least 1,500 layers.
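The layer count follows from a unit conversion and a ceiling division. A minimal sketch, assuming whole-inch heights; the function name `layers_needed` is hypothetical. Working in integer micrometres avoids floating-point rounding at the ceiling step.

```python
UM_PER_INCH = 25_400  # 1 inch = 25.4 mm = 25,400 micrometres (exact)

def layers_needed(height_inches, layer_um):
    """Minimum whole number of layers to reach the given height."""
    height_um = height_inches * UM_PER_INCH
    return -(-height_um // layer_um)  # ceiling division on integers

print(layers_needed(6, 100))  # Replicator 2 at 100 microns: 1524
print(layers_needed(6, 270))  # first Replicator at 270 microns: 565
```

The 1,524 result matches the "at least 1,500 layers" above, and the 270-micron case needs well under half as many layers for the same height.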

Most importantly, at 100 microns the printed objects feel smooth, whereas the previous generation of home printers created objects with rough, wavy edges. The Replicator 2 uses a special-to-MakerBot bioplastic that's made from corn (to quote Pettis: "It smells good, too"), and the 3D-printed sample MakerBot gave us definitely felt smooth, with a barely detectable grain.

The MakerBot Replicator 2 appears on the cover of the October (2012, if you're from the future) issue of Wired magazine with the words, "This machine will change the world." Does that mean 3D printing is affordable, usable, accurate and space-efficient yet? If the answer is yes, you can get your own MakerBot Replicator 2 from MakerBot.

(One last thing: Pettis urged us all to check out the brochure, as the MakerBot team "worked really hard on it" to make it the "best brochure in the universe." Straight from my notes to your screen.)

Thursday, September 20. 2012

Three-Minute Tech: LTE

Via TechHive

-----

The iPhone 5 is the latest smartphone to hop on-board the LTE (Long Term Evolution) bandwagon, and for good reason: The mobile broadband standard is fast, flexible, and designed for the future. Yet LTE is still a young technology, full of growing pains. Here’s an overview of where it came from, where it is now, and where it might go from here.

The evolution of ‘Long Term Evolution’

LTE is a mobile broadband standard developed by the 3GPP (3rd Generation Partnership Project), a group that has developed all GSM standards since 1999. (Though GSM and CDMA—the network Verizon and Sprint use in the United States—were at one time close competitors, GSM has emerged as the dominant worldwide mobile standard.)

Cell networks began as analog, circuit-switched systems nearly identical in function to the public switched telephone network (PSTN), which placed a finite limit on calls regardless of how many people were speaking on a line at one time.

The second generation, GSM, went digital, and its GPRS extension added data (at dial-up modem speed). GPRS led to EDGE, and then 3G, which treated both voice and data as bits passing simultaneously over the same network (allowing you to surf the web and talk on the phone at the same time).

GSM-evolved 3G (which brought faster speeds) started with UMTS, and then accelerated into faster and faster variants of 3G, 3G+, and “4G” networks (HSPA, HSDPA, HSUPA, HSPA+, and DC-HSPA).

Until now, the term “evolution” meant that no new standard broke or failed to work with the older ones. GSM, GPRS, UMTS, and so on all work simultaneously over the same frequency bands: They’re intercompatible, which made it easier for carriers to roll them out without losing customers on older equipment. But these networks were being held back by compatibility.

That’s where LTE comes in. The “long term” part means: “Hey, it’s time to make a big, big change that will break things for the better.”

LTE needs its own space, man

LTE has “evolved” beyond 3G networks by incorporating new radio technology and adopting new spectrum. It allows much higher speeds than GSM-compatible standards through better encoding and wider channels. (It’s more “spectrally efficient,” in the jargon.)

LTE is more flexible than earlier GSM-evolved flavors, too: Where GSM’s 3G variants use 5 megahertz (MHz) channels, LTE can use a channel size from 1.4 MHz to 20 MHz; this lets it work in markets where spectrum is scarce and sliced into tiny pieces, or broadly when there are wide swaths of unused or reassigned frequencies. In short, the wider the channel—everything else being equal—the higher the throughput.

Speeds are also boosted through MIMO (multiple input, multiple output), just as in 802.11n Wi-Fi. Multiple antennas allow two separate benefits: better reception, and multiple data streams on the same spectrum.
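Why wider channels and extra antennas raise throughput can be sketched with the Shannon-Hartley capacity bound, C = B·log2(1 + SNR), multiplied by an idealized number of MIMO spatial streams. This is a textbook upper bound swapped in for illustration, not LTE's actual rate calculation: the 10 dB signal-to-noise ratio is an assumed value, the function name is hypothetical, and real MIMO gains depend on antennas and the radio environment. Holding SNR fixed is the "everything else being equal" case from the text.

```python
import math

# The channel sizes LTE allows, from 1.4 MHz up to 20 MHz.
LTE_CHANNEL_WIDTHS_MHZ = [1.4, 3, 5, 10, 15, 20]

def capacity_mbps(bandwidth_mhz, snr_db, streams=1):
    """Idealized Shannon capacity in Mbit/s, times the number of spatial streams."""
    snr_linear = 10 ** (snr_db / 10)
    # MHz times bits/s/Hz gives Mbit/s.
    return streams * bandwidth_mhz * math.log2(1 + snr_linear)

for width in LTE_CHANNEL_WIDTHS_MHZ:
    single = capacity_mbps(width, snr_db=10)
    dual = capacity_mbps(width, snr_db=10, streams=2)  # ideal 2x2 MIMO case
    print(f"{width:>4} MHz: {single:6.1f} Mbit/s (1 stream), "
          f"{dual:6.1f} Mbit/s (2 streams)")
```

At a fixed SNR the bound grows linearly with channel width, so a 20 MHz channel has four times the ceiling of a 5 MHz one; in practice a fixed transmit power spread over a wider channel lowers the SNR, which is why "everything else being equal" matters.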

LTE complications

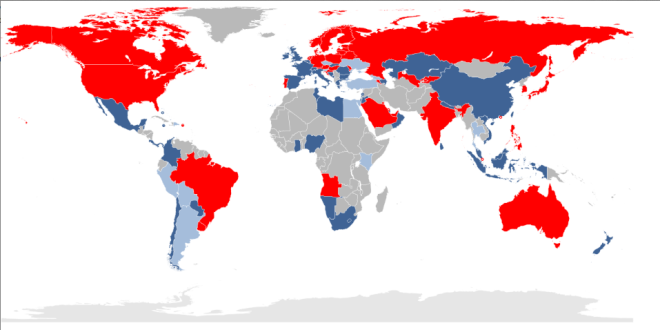

Unfortunately, in practice, LTE implementation gets sticky: There are 33 potential bands for LTE, based on a carrier’s local regulatory domain. In contrast, GSM has just 14 bands, and only five of those are widely used. (In broad usage, a band is a pair of frequency ranges, one devoted to upstream traffic and the other to downstream. The two ranges can be a few MHz apart or hundreds of MHz apart.)
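A paired band can be modeled as two frequency ranges. The sketch below uses four of the U.S. bands this article mentions; the class and method names are hypothetical, and the MHz ranges are given as commonly listed, so check them against the 3GPP band tables before relying on them. Band 4's halves sit hundreds of MHz apart while band 17's sit only tens of MHz apart, and in band 13 the downlink actually lies below the uplink.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FddBand:
    """A paired (FDD) band: an uplink range and a downlink range, in MHz."""
    number: int
    uplink: tuple    # (low, high) range the phone transmits on
    downlink: tuple  # (low, high) range the tower transmits on

    def duplex_spacing_mhz(self):
        """Offset from uplink to downlink; negative if the downlink sits lower."""
        return self.downlink[0] - self.uplink[0]

# Ranges as commonly listed for these bands (verify against 3GPP TS 36.101).
BANDS = [
    FddBand(4, (1710, 1755), (2110, 2155)),
    FddBand(12, (699, 716), (729, 746)),
    FddBand(13, (777, 787), (746, 756)),
    FddBand(17, (704, 716), (734, 746)),
]

for band in BANDS:
    print(f"band {band.number}: spacing {band.duplex_spacing_mhz()} MHz")
```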

And while LTE allows voice, no standard has yet been agreed upon; different carriers could ultimately choose different approaches, leaving it to handset makers to build multiple methods into a single phone, though they’re trying to avoid that. In the meantime, in the U.S., Verizon and AT&T use their older CDMA and GSM networks, respectively, for voice calls, and LTE for data.

LTE in the United States

Of the four major U.S. carriers, AT&T, Verizon, and Sprint have LTE networks, with T-Mobile set to start supporting LTE in the next year. But that doesn’t mean they’re set to play nice. We said earlier that current LTE frequencies are divided up into 33 spectrum bands: With the exception of AT&T and T-Mobile, which share frequencies in band 4, each of the major U.S. carriers has its own band. Verizon uses band 13; Sprint has spectrum in band 26; and AT&T holds band 17 in addition to some crossover in band 4.

In addition, smaller U.S. carriers, like C Spire, U.S. Cellular, and Clearwire, all have their own separate piece of the spectrum pie: C Spire and U.S. Cellular use band 12, while Clearwire uses band 41.

As such, for a manufacturer to support LTE networks in the United States alone, it would need to build a receiver that could tune into seven different LTE bands, not to mention the various flavors of GSM-evolved or CDMA networks.

With the iPhone 5, Apple tried to cut through the current Gordian knot by releasing two separate model numbers, the A1428 and A1429 (the latter in GSM and CDMA versions), which cover a limited number of frequencies depending on where they’re activated. (Apple has kindly released a list of countries that support its three iPhone 5 models.) Other companies have chosen to restrict devices to certain frequencies, or to make numerous models of the same phone.

Banded together

Other solutions are coming. Qualcomm made a regulatory filing in June regarding a seven-band LTE chip, which could be in shipping devices before the end of 2012 and could allow a future iPhone to be activated in different fashions. Within a year or so, we should see most-of-the-world phones, tablets, and other LTE mobile devices that work on the majority of large-scale LTE networks.

That will be just in time for the next big thing: LTE-Advanced, the true fulfillment of what was once called 4G networking, with rates that could hit 1 Gbps in the best possible cases of wide channels and short distances. By then, perhaps the chip, handset, and carrier worlds will have converged to make it all work neatly together.

Wednesday, September 19. 2012

A Genome-Wide Association Study Identifies Five Loci Influencing Facial Morphology in Europeans

Via PLOS genetics

-----

Abstract

Inter-individual variation in facial shape is one of the most noticeable phenotypes in humans, and it is clearly under genetic regulation; however, almost nothing is known about the genetic basis of normal human facial morphology. We therefore conducted a genome-wide association study for facial shape phenotypes in multiple discovery and replication cohorts, considering almost ten thousand individuals of European descent from several countries. Phenotyping of facial shape features was based on landmark data obtained from three-dimensional head magnetic resonance images (MRIs) and two-dimensional portrait images. We identified five independent genetic loci associated with different facial phenotypes, suggesting the involvement of five candidate genes—PRDM16, PAX3, TP63, C5orf50, and COL17A1—in the determination of the human face. Three of them have been implicated previously in vertebrate craniofacial development and disease, and the remaining two genes potentially represent novel players in the molecular networks governing facial development. Our finding at PAX3 influencing the position of the nasion replicates a recent GWAS of facial features. In addition to the reported GWA findings, we established links between common DNA variants previously associated with NSCL/P at 2p21, 8q24, 13q31, and 17q22 and normal facial-shape variations based on a candidate gene approach. Overall our study implies that DNA variants in genes essential for craniofacial development contribute with relatively small effect size to the spectrum of normal variation in human facial morphology. This observation has important consequences for future studies aiming to identify more genes involved in the human facial morphology, as well as for potential applications of DNA prediction of facial shape such as in future forensic applications.

Introduction

The morphogenesis and patterning of the face is one of the most complex events in mammalian embryogenesis. Signaling cascades initiated from both facial and neighboring tissues mediate transcriptional networks that act to direct fundamental cellular processes such as migration, proliferation, differentiation and controlled cell death. The complexity of human facial development is reflected in the high incidence of congenital craniofacial anomalies, and almost certainly underlies the vast spectrum of subtle variation that characterizes facial appearance in the human population.

Facial appearance has a strong genetic component; monozygotic (MZ) twins look more similar than dizygotic (DZ) twins or unrelated individuals. The heritability of craniofacial morphology is as high as 0.8 in twins and families [1], [2], [3]. Some craniofacial traits, such as facial height and position of the lower jaw, appear to be more heritable than others [1], [2], [3]. The general morphology of craniofacial bones is largely genetically determined and partly attributable to environmental factors [4]–[11]. Although genes have been mapped for various rare craniofacial syndromes largely inherited in Mendelian form [12], the genetic basis of normal variation in human facial shape is still poorly understood. An appreciation of the genetic basis of facial shape variation has far-reaching implications for understanding the etiology of facial pathologies, the origin of major sensory organ systems, and even the evolution of vertebrates [13], [14]. In addition, it is feasible to speculate that once the majority of genetic determinants of facial morphology are understood, predicting facial appearance from DNA found at a crime scene will become useful as an investigative tool in forensic casework [15]. Some externally visible human characteristics, such as eye color [16]–[18] and hair color [19], can already be inferred from a DNA sample with practically useful accuracies.
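Twin-based heritability figures like the 0.8 above rest on comparing how alike MZ and DZ twins are. One classic back-of-envelope estimator is Falconer's formula, h² = 2(r_MZ − r_DZ); it is swapped in here for illustration and is not necessarily the method used in the cited studies or in this paper. The sketch below pairs it with a double-entry correlation (each twin pair counted in both orders), a simple stand-in for an intraclass correlation; the function names and example correlations are hypothetical.

```python
def double_entry_correlation(pairs):
    """Correlation between co-twins, entering each pair in both orders."""
    xs = [a for a, _ in pairs] + [b for _, b in pairs]
    ys = [b for _, b in pairs] + [a for a, _ in pairs]
    mean = sum(xs) / len(xs)  # xs and ys hold the same values, so one mean
    cov = sum((x - mean) * (y - mean) for x, y in zip(xs, ys))
    var = sum((x - mean) ** 2 for x in xs)
    return cov / var

def falconer_heritability(r_mz, r_dz):
    """Falconer's estimate h^2 = 2 (r_MZ - r_DZ), clipped to [0, 1]."""
    return min(1.0, max(0.0, 2 * (r_mz - r_dz)))

# Hypothetical twin correlations for one facial measurement.
print(falconer_heritability(r_mz=0.85, r_dz=0.45))
```

The clipping reflects that the raw formula can fall outside [0, 1] with noisy correlations from small twin samples.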

In a recent candidate gene study carried out in two independent European population samples, we investigated a potential association between risk alleles for non-syndromic cleft lip with or without cleft palate (NSCL/P) and nose width and facial width in the normal population [20]. Two NSCL/P associated single nucleotide polymorphisms (SNPs) showed association with different facial phenotypes in different populations. However, facial landmarks derived from three-dimensional (3D) magnetic resonance images (MRI) in one population and two-dimensional (2D) portrait images in the other population were not completely comparable, posing a challenge for combining phenotype data. In the present study, we focus on the MRI-based approach for capturing facial morphology since previous facial imaging studies by some of us have demonstrated that MRI-derived soft tissue landmarks represent a reliable data source [21], [22].

In geometric morphometrics, there are different ways to deal with the confounders of position and orientation of the landmark configurations, such as (1) superimposition [23], [24], which places the landmarks into a consensus reference frame; (2) deformation [25]–[27], where shape differences are described in terms of deformation fields of one object onto another; and (3) linear distances [28], [29], where Euclidean distances between landmarks, instead of their coordinates, are measured. The rationale and efficacy of these approaches have been reviewed and compared elsewhere [30]–[32]. We briefly compared these methods in the context of our genome-wide association study (GWAS) (see Methods section) and applied them when appropriate.

We extracted facial landmarks from 3D head MRI in 5,388 individuals of European origin from the Netherlands, Australia, and Germany, and used partial Procrustes superimposition (PS) [24], [30], [33] to superimpose the different sets of facial landmarks onto a consensus 3D Euclidean space. We derived 48 facial shape features from the superimposed landmarks and estimated their heritability in 79 MZ and 90 DZ Australian twin pairs. Subsequently, we conducted a series of GWAS separately for these facial shape dimensions. We attempted to replicate the identified associations in 568 Canadians of European (French) ancestry with similar 3D head MRI phenotypes, and additionally sought supporting evidence in a further 1,530 individuals from the UK and 2,337 from Australia, for whom facial phenotypes were derived from 2D portrait images.
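The excerpt does not say how heritability was estimated from the MZ and DZ twin pairs; twin studies commonly fit ACE variance-component models. As a rough illustration only, Falconer's formula, h^2 = 2(r_MZ - r_DZ), compares how strongly identical and non-identical co-twins resemble each other. The sketch below assumes NumPy; `twin_correlation`, `falconer_h2` and `simulate_pairs` are hypothetical names, and the simulated variance components (a2 = 0.6, c2 = 0.1, e2 = 0.3) are made up for the example.

```python
import numpy as np


def twin_correlation(pairs):
    """Correlation between co-twins for an (n_pairs, 2) array of one trait.

    Each pair is entered twice, as (twin1, twin2) and (twin2, twin1), so the
    arbitrary twin order does not matter (double-entry correlation).
    """
    pairs = np.asarray(pairs, dtype=float)
    x = np.concatenate([pairs[:, 0], pairs[:, 1]])
    y = np.concatenate([pairs[:, 1], pairs[:, 0]])
    return float(np.corrcoef(x, y)[0, 1])


def falconer_h2(r_mz, r_dz):
    """Falconer's approximation to narrow-sense heritability: 2 * (r_MZ - r_DZ)."""
    return 2.0 * (r_mz - r_dz)


def simulate_pairs(rng, n, genetic_sharing, a2=0.6, c2=0.1, e2=0.3):
    """Simulate a unit-variance trait in n twin pairs under a simple ACE model.

    genetic_sharing is 1.0 for MZ pairs and 0.5 for DZ pairs; a2, c2 and e2 are
    hypothetical additive-genetic, shared-environment and unique-environment variances.
    """
    shared_g = rng.normal(size=n)
    g1 = np.sqrt(genetic_sharing) * shared_g + np.sqrt(1 - genetic_sharing) * rng.normal(size=n)
    g2 = np.sqrt(genetic_sharing) * shared_g + np.sqrt(1 - genetic_sharing) * rng.normal(size=n)
    c = rng.normal(size=n)  # environment shared by both twins
    t1 = np.sqrt(a2) * g1 + np.sqrt(c2) * c + np.sqrt(e2) * rng.normal(size=n)
    t2 = np.sqrt(a2) * g2 + np.sqrt(c2) * c + np.sqrt(e2) * rng.normal(size=n)
    return np.column_stack([t1, t2])


# Same pair counts as the study; the true a2 is 0.6, but the estimate is noisy at this size.
rng = np.random.default_rng(42)
mz = simulate_pairs(rng, 79, genetic_sharing=1.0)
dz = simulate_pairs(rng, 90, genetic_sharing=0.5)
h2 = falconer_h2(twin_correlation(mz), twin_correlation(dz))
print(f"Falconer h^2 estimate: {h2:.2f}")
```

With fewer than a hundred pairs per group, the formula can even fall outside [0, 1], which is one reason model-fitting approaches are generally preferred.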

-----

The full article @ PLOS Genetics

Tuesday, September 18. 2012

Stretchable, Tattoo-Like Electronics Are Here to Check Your Health

Via Motherboard

-----

Wearable computing is all the rage this year as Google pulls back the curtain on its Glass technology, but some scientists want to take the idea a step further. The emerging field of stretchable electronics takes advantage of new polymers that let you not just wear your computer but actually become part of the circuitry. Because the wiring is embedded in a stretchable polymer, these cutting-edge devices resemble human skin more than circuit boards. And with a whole host of possible medical uses, that’s kind of the point.

A Cambridge, Massachusetts, startup called MC10 is leading the way in stretchable electronics. So far, its products are fairly simple. One is a patch, applied directly to the skin like a temporary tattoo, that can sense whether the user is hydrated; another is an inflatable balloon catheter that measures the electrical signals of the user’s heartbeat to look for irregularities such as arrhythmias. Later this year, the company is launching a mysterious product with Reebok that’s expected to take advantage of the technology’s ability to detect not only heartbeat but also respiration, body temperature, blood oxygenation and so forth.

The joy of stretchable electronics is that the manufacturing process is not unlike that of regular electronics. Just as with a normal microchip, gold electrodes and wires are deposited onto thin silicon wafers, but they’re also embedded in a stretchable polymer substrate. When everything’s in place, the polymer substrate with its embedded circuitry can be peeled off and later applied to a new surface. Components that can be added to the stretchable surface include sensors, LEDs, transistors, wireless antennas and solar cells for power.

For now, the technology is still in its nascent stages, but scientists have high hopes. In the future, you could wear a temporary tattoo that monitors your vital signs, or doctors might install stretchable electronics on your organs to keep track of how they’re functioning. Stretchable electronics could also be integrated into clothing or paired with a smartphone. Of course, if all else fails, it’ll probably make for some great children’s toys.

Wednesday, September 12. 2012

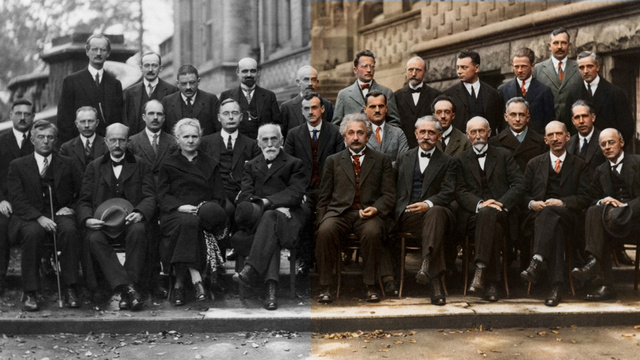

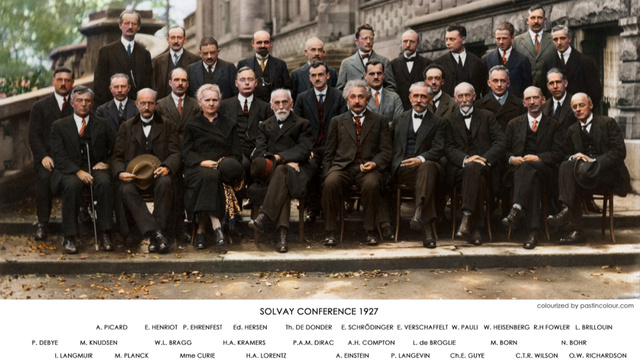

Twenty-nine of history’s most iconic scientists in one photograph – now in color!

Via io9

-----

It was originally captured in 1927 at the fifth Solvay Conference, one of the most star-studded meetings of scientific minds in history. Notable attendees included Albert Einstein, Niels Bohr, Marie Curie, Erwin Schrödinger, Werner Heisenberg, Wolfgang Pauli, Paul Dirac and Louis de Broglie — to name a few.

Of the 29 scientists in attendance (most of whom contributed to the fields of physics and chemistry), more than half were, or would go on to become, Nobel laureates. (It bears mentioning that Marie Curie, the only woman in attendance at the conference, remains the only scientist in history to be awarded Nobel Prizes in two different scientific fields.)

All this is to say that this photo is beyond epic. Now, thanks to the masterful work of redditor mygrapefruit, it is more impressive still. Through a process known as colorization, mygrapefruit has given us an even better idea of what this scene might have looked like had we attended the conference in person back in 1927; and while the Sweden-based artist typically charges for her skills, she has included a link to a hi-res, print-size download of the colorized photo featured here, free of charge.

You can learn more about the colorization process, and check out more of mygrapefruit's work, over on her website.
