Thursday, November 15. 2012

Israel Announces Gaza Invasion Via Twitter, Marks The First Time A Military Campaign Goes Public Via Tweet

Via Fast Company

-----

The Israeli military issued the world's first announcement of a military campaign via Twitter today with the disclosure of a large-scale, ongoing operation in Gaza. The Twitter announcement was made before a press conference was held.

A major Israeli military campaign in the Gaza Strip was announced today via Twitter in lieu of a formal press conference. The Israeli Defense Forces' official @IDFSpokesperson Twitter feed confirmed “a widespread campaign on terror sites & operatives in the #Gaza Strip,” called Operation Pillar of Defense, at approximately 9:30 a.m. Eastern time. Shortly before the tweet, Hamas' military head Ahmed al-Jabari was killed by an Israeli missile strike--Israel's military claims al-Jabari was responsible for numerous terror attacks against civilians. The IDF announcement on Twitter was the first confirmation made to the media of an official military campaign.

The Israeli military incursion follows months of rising tension in Gaza and southern Israel. More than 100 rockets have been fired at Israeli civilian targets since the beginning of November 2012, and Israeli troops have targeted Hamas and Islamic Jihad forces inside Gaza in attacks that reportedly included civilian casualties. According to the IDF spokespersons' office, the Israeli military is “ready to initiate a ground operation in Gaza” if necessary. Hamas' military wing, the Al-Qassam Brigades, operates the @alqassambrigade Twitter feed, which confirmed al-Jabari's death.

Wednesday, November 14. 2012

Spike in government surveillance of Google

Via BBC

-----

Governments around the world made nearly 21,000 requests for access to Google data in the first six months of this year, according to the search engine.

Its Transparency Report indicates government surveillance of online lives is rising sharply.

The US government made the most demands, asking for details 7,969 times in the first six months of 2012.

Turkey topped the list for requests to remove content.

Government 'bellwether'

Google, in common with other technology and communication companies, regularly receives requests from government agencies and courts around the world to have access to content.

It has been publishing its Transparency Report twice a year since 2009 and has seen a steady rise in government demands for data. In its first report in 2009, it received 12,539 requests. The latest figure stands at 20,939.
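As a quick check on the scale of that rise, the two figures quoted above can be turned into a percentage. This is only an illustrative sketch: the helper name `percent_change` is made up for this example, and the inputs are the two request counts cited in the article.

```python
def percent_change(old: float, new: float) -> float:
    """Return the percentage change going from old to new."""
    return (new - old) / old * 100.0


# Figures quoted in the article: the first report (2009) and the latest one.
first_report = 12_539
latest_report = 20_939

growth = percent_change(first_report, latest_report)
print(f"Data requests grew by about {growth:.1f}%")
```

On these numbers the increase works out to roughly two thirds over the period the reports cover.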

"This is the sixth time we've released this data, and one trend has become clear: government surveillance is on the rise," Google said in a blog post.

The report acts as a bellwether for government behaviour around the world, a Google spokeswoman told the BBC.

"It reflects laws on the ground. For example in Turkey there are specific laws about defaming public figures whereas in Germany we get requests to remove neo-Nazi content," she said.

"And in Brazil we get a lot of requests to remove content during elections because there is a law banning parodies of candidates.

"We hope that the report will shed light on how governments interact with online services and how laws are reflected in online behaviour," she added.

The US has consistently topped the charts for data requests. France, Germany, Italy, Spain and the UK are also in the top 10.

In France and Germany it complied with fewer than half of all requests. In the UK it complied with 64% of requests and 90% of requests from the US.

Removing content

Google said the top three reasons cited by government for content removal were defamation, privacy and security.

Worldwide authorities made 1,789 requests for Google to remove content, up from 1,048 requests for the last six months of 2011.

In the period from January to June, Turkey made 501 requests for content removal.

These included 148 requests related to Mustafa Kemal Ataturk - the first president of Turkey, the current government, national identity and values.

Others included claims of pornography, hate speech and copyright.

Google has its own criteria for whether it will remove content - the request must be specific, relate to a specific web address and have come from a relevant authority.

In one example from the UK, Google received a request from police to remove 14 search results that linked to sites allegedly criticising the police and claiming individuals were involved in obscuring crimes. It did not remove the content.

Thursday, November 08. 2012

TouchDevelop Now Available as Web App

Via Technet

-----

If you’re a software developer, or if you follow the work of software developers, you’ve probably heard of TouchDevelop, a Microsoft Research app that enables you to write code for your phone using scripts on your phone. Its ability to bring the excitement of programming to Windows Phone 7 has reaped lots of enthusiasm from the development community over the past year or so.

Now, the team behind TouchDevelop has taken things a step further, with a web app that can work on any Windows 8 device with a touchscreen. You can write Windows Store apps simply by tapping on the screen of your device. The web app also works with a keyboard and mouse, but the touchscreen capability means that the keyboard is not required. To learn more, watch this video.

This reimplementation of TouchDevelop went live just in time for Build, Microsoft’s annual conference that helps developers learn how to take advantage of Windows 8. The conference is being held Oct. 30-Nov. 2 in Redmond, Wash.

“Just as users could turn their scripts into Windows Phone apps,” says Nikolai Tillmann, principal research software-design engineer with the Research in Software Engineering (RiSE) team at Microsoft Research Redmond, “we will also allow our users to turn their scripts into Windows Store apps.”

The TouchDevelop web app, which requires Internet Explorer 10, enables developers to publish their scripts so they can be shared with others using TouchDevelop. As with the Windows Phone version, a touchdevelop.com cloud service enables scripts to be published and queried, and when you log in with the same credentials, all of your scripts are synchronized between all your platforms and devices.

While in the TouchDevelop web app, users can navigate to the properties of an installed script already created. Videos describing editor operation of the TouchDevelop web app are available on the project’s webpage.

TouchDevelop shipped as a Windows Phone app about a year and a half ago and has seen strong downloads and reviews in the Windows Phone Store.

“Our TouchDevelop app for Windows Phone has been downloaded more than 200,000 times,” Tillmann says, “and more than 20,000 users have logged in with a Windows Live ID or via Facebook.”

Since the app became available, Tillmann and his RiSE colleagues have been astounded by the creativity the user base has demonstrated. Further Windows 8 developer excitement will be on display during Build, which is being streamed to audiences worldwide.

Tuesday, November 06. 2012

I.B.M. Reports Nanotube Chip Breakthrough

-----

SAN FRANCISCO — I.B.M. scientists are reporting progress in a chip-making technology that is likely to ensure that the basic digital switch at the heart of modern microchips will continue to shrink for more than a decade.

The advance, first described in the journal Nature Nanotechnology on Sunday, is based on carbon nanotubes — exotic molecules that have long held out promise as an alternative to silicon from which to create the tiny logic gates now used by the billions to create microprocessors and memory chips.

The I.B.M. scientists at the T.J. Watson Research Center in Yorktown Heights, N.Y., have been able to pattern an array of carbon nanotubes on the surface of a silicon wafer and use them to build hybrid chips with more than 10,000 working transistors.

Against all expectations, silicon-based chips have continued to improve in speed and capacity for the last five decades. In recent years, however, there has been growing uncertainty about whether the technology would continue to improve.

A failure to increase performance would inevitably stall a growing array of industries that have fed off the falling cost of computer chips.

Chip makers have routinely doubled the number of transistors that can be etched on the surface of silicon wafers by shrinking the size of the tiny switches that store and route the ones and zeros that are processed by digital computers.

The switches are rapidly approaching dimensions that can be measured in terms of the widths of just a few atoms.

The process known as Moore’s Law was named after Gordon Moore, a co-founder of Intel, who in 1965 noted that the industry was doubling the number of transistors it could build on a single chip at routine intervals of about two years.
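The doubling described above is simple exponential growth, and a few lines of code make the compounding concrete. This is a minimal sketch, not anything from the I.B.M. paper: the function name `projected_transistors` and the starting count of 1,000 are arbitrary assumptions chosen for illustration, and the two-year doubling period is the interval quoted in the article.

```python
def projected_transistors(start_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count that doubles every doubling_period_years."""
    return start_count * 2 ** (years / doubling_period_years)


# Hypothetical starting point: a chip with 1,000 transistors.
for years in (0, 2, 10, 20):
    count = projected_transistors(1_000, years)
    print(f"after {years:2d} years: {count:,.0f} transistors")
```

Ten years at this pace means five doublings, or a 32-fold increase, which is why even a short stall in the trend matters so much to the industries that depend on it.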

To maintain that rate of progress, semiconductor engineers have had to consistently perfect a range of related manufacturing systems and materials that continue to perform at ever more Lilliputian scales.

Vials contain carbon nanotubes that have been suspended in liquid. (Credit: I.B.M. Research)

The I.B.M. advance is significant, scientists said, because the chip-making industry has not yet found a way forward beyond the next two or three generations of silicon.

“This is terrific. I’m really excited about this,” said Subhasish Mitra, an electrical engineering professor at Stanford who specializes in carbon nanotube materials.

The promise of the new materials is twofold, he said: carbon nanotubes will allow chip makers to build smaller transistors while also probably increasing the speed at which they can be turned on and off.

In recent years, while chip makers have continued to double the number of transistors on chips, their performance, measured as “clock speed,” has largely stalled.

This has required the computer industry to change its designs and begin building more so-called parallel computers. Today, even smartphone microprocessors come with as many as four processors, or “cores,” which are used to break up tasks so they can be processed simultaneously.

I.B.M. scientists say they believe that once they have perfected the use of carbon nanotubes — sometime after the end of this decade — it will be possible to sharply increase the speed of chips while continuing to sharply increase the number of transistors.

This year, I.B.M. researchers published a separate paper describing the speedup made possible by carbon nanotubes.

“These devices outperformed any other switches made from any other material,” said Supratik Guha, director of physical sciences at I.B.M.’s Yorktown Heights research center. “We had suspected this all along, and our device physicists had simulated this, and they showed that we would see a factor of five or more performance improvement over conventional silicon devices.”

Carbon nanotubes are one of three promising technologies engineers hope will be perfected in time to keep the industry on its Moore’s Law pace.

Graphene is another promising material that is being explored, as well as a variant of the standard silicon transistor known as a tunneling field-effect transistor.

Dr. Guha, however, said carbon nanotube materials had more promising performance characteristics and that I.B.M. physicists and chemists had perfected a range of “tricks” to ease the manufacturing process.

Carbon nanotubes are essentially single sheets of carbon rolled into tubes. In the Nature Nanotechnology paper, the I.B.M. researchers described how they were able to place ultrasmall rectangles of the material in regular arrays by placing them in a soapy mixture to make them soluble in water. They used a process they described as “chemical self-assembly” to create patterned arrays in which nanotubes stick in some areas of the surface while leaving other areas untouched.

Perfecting the process will require a more highly purified form of the carbon nanotube material, Dr. Guha said, explaining that less pure forms are metallic and are not good semiconductors.

Dr. Guha said that in the 1940s scientists at Bell Labs had discovered ways to purify germanium, a metalloid in the carbon group that is chemically similar to silicon, to make the first transistors. He said he was confident that I.B.M. scientists would be able to make 99.99 percent pure carbon nanotubes in the future.

Monday, November 05. 2012

Android 4.2 Announced: Photo Sphere, Gesture Typing, Multi-User, TV Connect, Quick Settings, and Much More!

Via XDA Developers

-----

Retaining the code name from Android 4.1, 4.2 is a revamped version of Jelly Bean. Despite the lack of name change, 4.2 offers various new and exciting features. Join us as we take a closer look at some of the highlights!

Photo Sphere and Camera UI Improvements

Not too long ago, Google gave us native support for panoramic photos with the launch of ICS. However, in their eyes, a standard panoramic shot doesn’t properly convey the feeling of actually being there. Photo Sphere takes us one step closer.

Once Photo Sphere mode is enabled, the app first guides you as you move your device to capture the entire scene. By using the same technology employed by Google Maps Street View, Photo Sphere then stitches the shots into a 360-degree view that allows you to pan and zoom, as you would in Street View. Those wishing to look at photo spheres from photographers around the world can do so as well.

In addition to Photo Sphere, the Camera app’s UI has also been updated with gesture controls. Thanks to the gestures, the interface no longer obscures the photo being taken with various controls. Instead, the app now makes full use of the screen real estate so that you can take better photos.

Gesture Typing

Taking a page from Swype’s play book, the new keyboard built into Android 4.2 has slide gesture functionality. The heavily revised keyboard differentiates itself from current versions of Swype, however, by showing predictions in real time, as you slide around your fingers.

This isn’t the first time we’ve seen real time gesture recognition—dubstep aside. That said, the interface looks to be better on Google’s latest offering, but the real test will be in actual day to day usage. The dictionaries have also been updated, as has voice recognition.

Multi-User

Remember all the buzz about enabling multi-user support on Android? Apparently, Google does too. We all knew this was coming; it was only a matter of “when.”

Well, it’s finally here. Multi-User support has finally made its way to the OS officially. Each user is given his or her own personal space, complete with a customized home screen, background, widgets, apps, and games. While we don’t have access to the source code to verify, this is likely accomplished by sectioning off the /data partition between users. Interestingly, switching between user profiles is done via fast user switching, rather than completely logging in and out.

Naturally, this feature is only available / practical on tablets, but you can bet your bacon that this will find its way to phones in the coming months, after 4.2 is released to AOSP.

TV Connect

Many were disappointed to learn that the Nexus Q was only able to stream Google Play content rather than supporting full device mirroring. Problem, no more. In Android 4.2, users will be able to wirelessly mirror their displays to various supported devices.

While we can’t speak in regards to additional functionality for Google’s enigmatic black orb, we can say that this will truly be a useful feature if executed properly. The underlying technology is the new industry standard Miracast, which was created by the Wi-Fi Alliance, and is based on Wi-Fi Direct.

DayDream

A fun, new feature present in 4.2 allows your device to display photo albums, news, and more when your device is docked.

Quick Settings

Remember AP’s video showing the “future site of quick settings?” It’s finally here. Google has now added a separate panel to the notification bar that can be accessed by a two-finger swipe from the top of the screen or simple button tap in the upper right corner if the notification tray is extended. Once summoned, it gives you quick access to user accounts, brightness, device settings, WiFi, Airplane Mode, Bluetooth, Battery, and Wireless Display.

Lock Screen Widgets

Much as we have seen in third party applications, Android now natively supports widgets on the lock screen. In fact, you can now add several pages of widgets to your device’s lock screen, essentially giving you a home screen—before you get to your home screen. Memetastic.

Enhanced Google Now

Google Now was also updated with more cards. A good example of this is how the software can pick out shipping updates and flight details from your email, and display them in a context-relevant manner. This, however, is not exclusively tied with the updated OS, as those with devices running 4.1 can access the update today.

Friday, November 02. 2012

U.S. looks to replace human surveillance with computers

Via c|net

-----

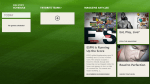

Security cameras that watch you, and predict what you'll do next, sound like science fiction. But a team from Carnegie Mellon University says their computerized surveillance software will be capable of "eventually predicting" what you're going to do.

Computerized surveillance can predict what people will do next -- it's called "activity forecasting" -- and eventually sound the alarm if the action is not permitted. (Credit: Carnegie Mellon University)

Computer software programmed to detect and report illicit behavior could eventually replace the fallible humans who monitor surveillance cameras.

The U.S. government has funded the development of so-called automatic video surveillance technology by a pair of Carnegie Mellon University researchers who disclosed details about their work this week -- including that it has an ultimate goal of predicting what people will do in the future.

"The main applications are in video surveillance, both civil and military," Alessandro Oltramari, a postdoctoral researcher at Carnegie Mellon who has a Ph.D. from Italy's University of Trento, told CNET yesterday.

Oltramari and fellow researcher Christian Lebiere say automatic video surveillance can monitor camera feeds for suspicious activities like someone at an airport or bus station abandoning a bag for more than a few minutes. "In this specific case, the goal for our system would have been to detect the anomalous behavior," Oltramari says.

Think of it as a much, much smarter version of a red light camera: the unblinking eye of computer software that monitors dozens or even thousands of security camera feeds could catch illicit activities that human operators -- who are expensive and can be distracted or sleepy -- would miss. It could also, depending on how it's implemented, raise similar privacy and civil liberty concerns.

Alessandro Oltramari, left, and Christian Lebiere say their software will "automatize video-surveillance, both in military and civil applications." (Credit: Carnegie Mellon University)

A paper (PDF) the researchers presented this week at the Semantic Technology for Intelligence, Defense, and Security conference outside of Washington, D.C. -- today's sessions are reserved only for attendees with top secret clearances -- says their system aims "to approximate human visual intelligence in making effective and consistent detections."

Their Army-funded research, Oltramari and Lebiere claim, can go further than merely recognizing whether any illicit activities are currently taking place. It will, they say, be capable of "eventually predicting" what's going to happen next.

This approach relies heavily on advances by machine vision researchers, who have made remarkable strides in the last few decades in recognizing stationary and moving objects and their properties. It's the same vein of work that led to Google's self-driving cars, face recognition software used on Facebook and Picasa, and consumer electronics like Microsoft's Kinect.

When it works well, machine vision can detect objects and people -- call them nouns -- that are on the other side of the camera's lens.

But to figure out what these nouns are doing, or are allowed to do, you need the computer science equivalent of verbs. And that's where Oltramari and Lebiere have built on the work of other Carnegie Mellon researchers to create what they call a "cognitive engine" that can understand the rules by which nouns and verbs are allowed to interact.

Their cognitive engine incorporates research, called activity forecasting, conducted by a team led by postdoctoral fellow Kris Kitani, which tries to understand what humans will do by calculating which physical trajectories are most likely. They say their software "models the effect of the physical environment on the choice of human actions."

Both projects are components of Carnegie Mellon's Mind's Eye architecture, a DARPA-created project that aims to develop smart cameras for machine-based visual intelligence.

Predicts Oltramari: "This work should support human operators and automatize video-surveillance, both in military and civil applications."

Thursday, November 01. 2012

Telefonica Digital shows off Thinking Things for connecting stuff to the web

Via Slash Gear

-----

Telefonica Digital has unveiled a new plastic brick device designed to connect just about anything you can think of to the Internet. These plastic bricks are called Thinking Things and are described as a simple solution for connecting almost anything wirelessly to the Internet. Thinking Things is under development right now.

Telefonica I+D invented the Thinking Things concept and believes that the product will significantly boost the development of M2M communications and help to establish an Internet of physical things. Thinking Things can connect all sorts of inanimate objects to the Internet, including thermostats, and allows users to monitor various assets or track loads.

Thinking Things are comprised of three different elements. The first is a physical module that contains the core communications and logic hardware. The second element is energy to make electronics work via a battery or AC power. The third element is a variety of sensors and actuators to perform the tasks users want.

The Thinking Things device is modular, and the user can connect together multiple bricks to perform the task they need. This is an interesting project that can be used for anything from home automation offering simple control over a lamp to just about anything else you can think of. The item connected to the web using Thinking Things automatically gets its own webpage. That webpage provides online access allowing the user to control the function of the modules and devices attached to the modules. An API allows developers to access all functionality of the Thinking Things from within their software.

Tuesday, October 30. 2012

The Pirate Bay switches to cloud-based servers

Via Slash Gear

-----

It isn’t exactly a secret that authorities and entertainment groups don’t like The Pirate Bay, but today the infamous site made it a little bit harder for them to bring it down. The Pirate Bay announced today that it has moved its servers to the cloud. This works in a couple of different ways: it helps the people who run The Pirate Bay save money, while it makes it more difficult for police to carry out a raid on the site.

“All attempts to attack The Pirate Bay from now on is an attack on everything and nothing,” a Pirate Bay blog post reads. “The site that you’re at will still be here, for as long as we want it to. Only in a higher form of being. A reality to us. A ghost to those who wish to harm us.” The site told TorrentFreak after the switch that it’s currently being hosted by two different cloud providers in two different countries, and what little actual hardware it still needs to use is being kept in different countries as well. The idea is not only to make it harder for authorities to bring The Pirate Bay down, but also to make it easier to bring the site back up should that ever happen.

Even if authorities do manage to get their hands on The Pirate Bay’s remaining hardware, they’ll only be taking its transit router and its load balancer – the servers are stored in several Virtual Machine instances, along with all of TPB’s vital data. The kicker is that these cloud hosting companies aren’t aware that they’re hosting The Pirate Bay, and if they discovered the site was using their service, they’d have a hard time digging up any dirt on users since the communication between the VMs and the load balancer is encrypted.

In short, it sounds like The Pirate Bay has taken a huge step in not only protecting its own rear end, but those of users as well. If all of this works out the way The Pirate Bay is claiming it will, then don’t expect to hear about the site going down anytime soon. Still, there’s nothing stopping authorities from trying to bring it down, or from putting in the work to try and figure out who the people behind The Pirate Bay are. Stay tuned.

Monday, October 29. 2012

Don’t Call The New Microsoft Surface RT A Tablet, This Is A PC

Via Tech Crunch

-----

The Microsoft Surface RT is a PC. It’s not a mobile device and it’s not a tablet, it’s a PC. And Microsoft’s first self-branded computer. It is, in short, the physical incarnation of Microsoft’s Windows 8.

The expectations and competition for the Surface are daunting. It’s been said that Microsoft built the Surface to show up HP, Dell, and the rest of the personal computing establishment. PC sales are stagnant while Apple is selling the iPad at an incredible pace. But the Surface is something different from other tablets. Microsoft built a PC for the post-PC consumer and chose to power it with a limited operating system called Windows RT. These trade-offs, real or imagined, are what really make or break this device.

While the Surface RT is a solid piece of hardware, there are a few things that make the device a bit hard to handle. The Surface RT is a widescreen tablet. It’s 16×9, making it a lot wider than it is tall, so using this 10.6-inch tablet is slightly different from an iPad or Galaxy Note 10.1. When holding it properly, that is, in landscape, it’s a bit too long to be held with one hand. Likewise, when holding it in portrait, it’s too tall to be held comfortably one-handed. In fact, it’s slightly awkward overall.
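To put numbers on how wide that screen is, the diagonal and aspect ratio quoted above are enough to recover the display's width and height with the Pythagorean theorem. This is a rough sketch covering the screen only, not the bezel or casing, and the function name `screen_dimensions` is invented for this example.

```python
import math


def screen_dimensions(diagonal: float, aspect_w: float, aspect_h: float) -> tuple[float, float]:
    """Return (width, height) of a display from its diagonal and aspect ratio."""
    # The diagonal of an aspect_w x aspect_h rectangle is hypot(aspect_w, aspect_h),
    # so scale that rectangle until its diagonal matches the real one.
    scale = diagonal / math.hypot(aspect_w, aspect_h)
    return aspect_w * scale, aspect_h * scale


# Figures from the review: a 10.6-inch diagonal at 16:9.
width, height = screen_dimensions(10.6, 16, 9)
print(f"Display area: {width:.2f} in wide by {height:.2f} in tall")
```

The display alone comes out a little over 9 inches on its long side and a little over 5 inches on its short side, which fits the complaint that it is awkward to grip one-handed in either orientation.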

For starters, it’s rather tough to type efficiently on the Surface RT’s on-screen keyboard when holding the tablet. I’m 6 feet tall and have normal size hands; I cannot grasp the Surface and hit all the on-screen keys without shifting my hands. Moreover, when holding the Surface in this orientation, I cannot touch the Windows home button. Windows 8 compensates for this flawed design by incorporating an additional Windows home button into a slide-out virtual tray activated by a bezel gesture.

It’s clear Microsoft designed the Surface RT to be a convertible PC rather than a tablet with an optional keyboard. This is an important distinction. By comparison, tablets such as the iPad and Galaxy Note 10.1 feel complete without anything else. I own a Logitech Ultrathin Keyboard and use it daily. But only for work. I enjoy using the iPad without it. Due equally to Windows 8 and the large 16:9 form factor, the Surface RT is naked without a $119 Touch Cover.

Without a Touch Cover, the Surface RT feels incomplete in design and function. The problem here is that the Surface is basically a big laptop screen without the keyboard. The cover rights the design’s wrongs by forcing the user to use the physical keyboard rather than the on-screen keyboard. Microsoft knows this. After all, Surface is rarely advertised without a Touch Cover, but that doesn’t alleviate the sting of paying another $100+ for a keyboard.

The Surface RT runs a limited version of Windows 8 called Windows RT. It shares the same ebb and flow as Windows 8, which is a radical take on the classic operating system. The operating system was clearly built for the mobile era and it shows promise. But right now, at launch, Windows RT needs work. Most of the apps are limited in features and the touch version of Internet Explorer is slow and clunky. Pages load very slowly and the bezel gesture to switch between tabs is unreliable and buggy. And worse yet, due to Windows RT’s lack of apps like Facebook or Twitter, you’re forced to use Internet Explorer for nearly everything. Don’t expect to load your favorite Windows applications on the Surface RT. It’s just not possible. Think of this as Windows 8 Lite, a cross between a desktop and a mobile OS.

Despite its shortcomings, the Surface RT is a very functional productivity device in a traditional sense. I wrote the majority of this review on the Surface with the $129 Type Cover. It feels like an ultraportable Ultrabook. Using Windows RT’s classic Desktop mode, I was able to compose and edit within TechCrunch’s WordPress back-end as if I was sitting at my Windows 7 desktop. In this use, the Surface outshines other tablets as it allows for a full desktop workspace. But here is where the problem lies – the Surface acts like a tablet and ends up working more like a notebook. This interface between flat tablet and horizontal laptop is frustrating and confusing.

Physically, the Surface feels like it’s from the future. It employs just the right amount of neo-brutalist industrial design. The casing is made out of magnesium alloy, called VaporMg by Microsoft, which is more durable and scratch resistant than aluminum. The iPad feels pedestrian compared to the Surface. But the iPad is a different sort of device. Where the iPad is a tablet, the Surface is a convertible PC.

The Surface’s marquee feature is a large kickstand that props the tablet at a 22-degree angle. The kickstand pops out with an air of confidence. A hidden hinge snaps it away from the body and likewise, when collapsing the kickstand, the hinge forcefully snaps it back in place.

Unlike most tablets, the Surface sports a large range of I/O ports. Along with the bottom-mounted Touch Cover port, there’s a microHDMI output, microSDXC slot, and a full-size USB port for connecting a camera, phone, USB flash drive, or XBOX 360 controller. This last feature sets the Surface apart from other tablets, allowing the Surface to nearly match the functions of a laptop.

Remember, the Surface is a PC, not a tablet in the traditional sense. It should have all these ports and, although it looks comical, a full-sized USB port on this tablet is absolutely necessary.

There are front and rear cameras, 802.11a/b/g/n Wi-Fi, Bluetooth 4.0 and a non-removable battery. There are no 3G or 4G wireless connectivity options. The speakers are nice and loud (but not booming), though there is very little bass in the sound.

The Surface feels fantastic. The hardware is its strongest selling point. You cannot pick up a Surface and be disappointed by the feel. There isn’t another tablet on the market with the same build quality or connectivity options in such a svelte package, including the iPad.

Microsoft didn’t just build the Surface. The company also spent resources developing the Surface’s Touch Covers. These covers, one with physical keys and the other with touch-sensitive keypads, magnetically snap onto the bottom of the Surface like Apple’s Smart Covers.

Both versions of the Touch Covers enhance the overall feel of the device. It’s impossible not to appreciate the Surface’s design when you snap one of these covers onto the Surface, close it up, and carry it around. I’m completely taken by the feel of the Surface.

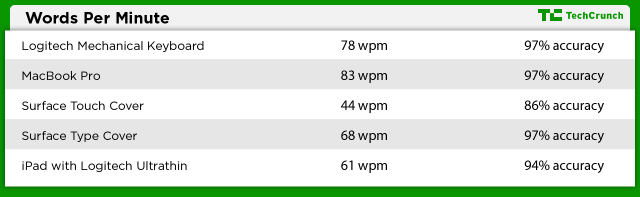

The Touch Covers work as well as they look. There are two versions: one has chiclet keys that feel a lot like the keyboards used in Ultrabooks. This version is called the Type Cover and costs $129. The $119 Touch Cover uses touch-sensitive buttons that do not physically move. This Touch Cover is half as thick as its brother, but after spending a week with both, I found I was about half as productive on the Touch Cover versus the Type Cover (see WPP chart below). Both have little touchpads with right and left clicking buttons under the keyboard.

However, the Touch Covers reveal the Surface’s fundamental flaw: The Surface is ungainly large when deployed. When used with the Surface’s kickstand and a Touch Cover, the whole contraption is 10 inches deep. That’s the same depth as a 15-inch MacBook Pro. An iPad with a Logitech Ultrathin Keyboard is only 7 inches deep; most ultrabooks are 9 inches or under. A Surface with a Touch Cover barely fits on most airplane seat-back trays; it doesn’t work at all on the trays that pull out of an armrest. That’s a problem.

The sheer size of the Surface negates its appeal. At 10 inches deep with a Touch Cover in use, it’s larger than many more capable ultrabooks. Additionally, with the nicer Type Cover, the Surface isn’t much thinner or less expensive than many full-powered notebooks.

The Touch Covers of course are optional, but Microsoft saw them as a key component for the device. Look at the marketing or pre-release press coverage: a good amount of the message is about these Touch Covers and not the Surface RT itself. Microsoft is attempting to treat them as one unit. To Microsoft, it’s not if Surface buyers are going to purchase a Touch Cover, but rather when. It’s expected. Unfortunately the Touch Covers are not as functional as keyboards for other tablets.

As previously mentioned, the Touch Covers magnetically snap onto the bottom of the Surface and then the kickstand props the screen at a pleasing angle. But the connection point is not rigid. Pick up the Surface and the Touch Cover dangles in place.

This design makes it very hard to use the Surface with a Touch Cover anywhere but a tabletop. It needs a 10-inch deep flat surface. I could not use the Surface with a Touch Cover sitting in an armchair, walking around, or lying on my back in bed. Forget about using it on the commode; it sits too precariously on the legs for comfort. These are use cases I run into nearly daily with my iPad and Logitech Ultrathin Keyboard. The Surface is only usable when seated at a table or desk.

The Surface RT uses a 10.6-inch 16×9 ClearType HD Display at 1366×768. The screen can handle 5 points of contact at once. Indoors, the screen is deep and rich with vibrant colors. Colors wash out in direct sunlight and the glossy overlay results in a lot of glare — but slightly less glare than the iPad’s. The screen is fairly sharp, but overall is far inferior to the Retina screen in the new iPad or even the screen found in the iPad 2.

When sitting side-by-side, it’s easy to see the difference between the Surface RT and new iPad, both $499 tablets. The new iPad is more detailed and the colors are much more accurate. There is no contest, really. The Retina display in the new iPad has nearly twice the resolution of the Surface’s and can handle 10 points of contact instead of just 5. The Retina screen is brighter, has a deeper color palette, and, most importantly, makes text easier to read thanks to the higher resolution screen.
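For a rough sense of the gap, pixel density can be worked out from the resolution and the diagonal size. The sketch below uses the Surface RT figures quoted in this review; the new iPad figures (2048×1536 on a 9.7-inch panel) are not from this review and are assumed from Apple's published specs. The function name is only illustrative.

```python
import math

def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density from the resolution and the diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in

# Surface RT figures come from the review; the iPad figures (2048x1536 on a
# 9.7-inch panel) are an assumption taken from Apple's published specs.
surface_ppi = pixels_per_inch(1366, 768, 10.6)
ipad_ppi = pixels_per_inch(2048, 1536, 9.7)

print(f"Surface RT: {surface_ppi:.0f} ppi")
print(f"New iPad:   {ipad_ppi:.0f} ppi")
print(f"Density ratio: {ipad_ppi / surface_ppi:.2f}x")
```

Under those assumptions the "nearly twice" figure describes pixel density (pixels per inch); the total pixel count differs by a larger factor.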

I found the Surface RT’s screen to be a tad frustrating at times. It seemingly doesn’t register touches properly. I often had to reattempt selecting a particular on-screen item. The Surface RT (or maybe it’s Windows RT?) is not as accurate as I would like it to be. This happened more often in the classic Desktop environment where the buttons and elements are a lot smaller than those found in the new tile interface.

But per Microsoft’s marketing, the interaction with the display is not as critical with the Surface as, say, with an iPad or Galaxy Note 10.1. That’s where the Touch Covers with their little trackpads come into play. Apple and Samsung advertise their tablets’ touchscreen capabilities. Microsoft advertises its tablet with a keyboard and mouse.

The Surface RT packs two 1MP cameras: one on the front, one on the back. They’re a joke. The picture quality is horrible under any lighting condition and completely unacceptable for a $500 device. See the examples below.

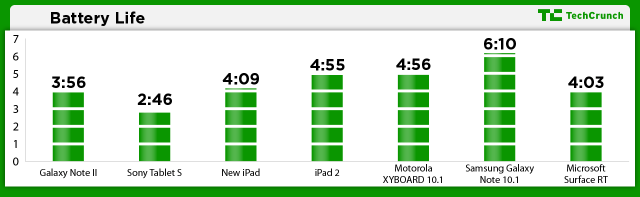

Note: Results are based on a standardized test that requires the device to search Google Images until the battery is depleted. The screens are set to max brightness.

The Surface RT cannot write its own story. Unfortunately for the Surface RT, its fate rests solely in the hands of Windows 8, and moreover, Windows RT. No matter how good the hardware is, the operating system makes or breaks the device. As it sits right now, at the launch of Windows 8/RT, the experience is a poor mish-mash of interfaces.

At launch, Windows 8 feels like a brand-new playground built in an affluent retirement complex. It’s pretty, full of bold colors, seemingly fun, but built for a different generation. Microsoft is clearly attempting to bring relevance to Windows with the touch-focused interface and post-PC concepts but is unwilling and unable to fully commit to touch.

Again, the Surface RT runs a version of Windows called Windows RT. This is not Windows 8, although they look and work very similarly. It’s stripped down, and designed to run better on mobile computing platforms.

The main difference is Windows RT requires apps be coded for ARM processors rather than x86/x64 chips that have powered Windows computers since Windows 3.1. This means users cannot replace Internet Explorer with Chrome (even in Desktop) until Google releases a version of Chrome coded specifically for Windows RT. Likewise, a trusty version of the Print Shop Pro application cannot be installed on Windows RT, or for that matter, nearly any other Windows application built over the last 20 years. For that, you’ll need a Surface Pro which runs Windows 8 on an Intel CPU.

As of this review’s writing, Microsoft had yet to detail the price or release schedule of the Surface Pro. It is expected to hit stores by the end of 2012 for around $1,000.

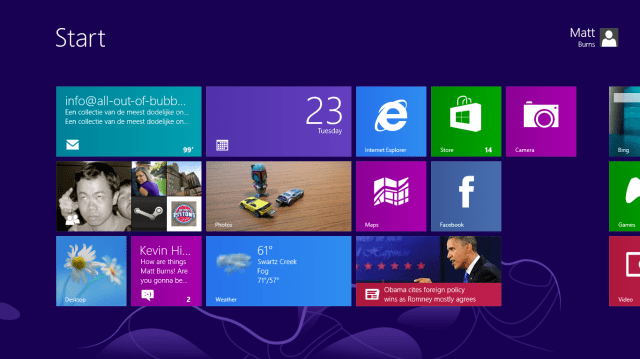

Windows 8/RT is different. It’s a stark departure from previous Windows builds. It’s built for the post-PC world but still holds onto the past with a classic desktop mode. It boots to the touch-friendly UI, previously called Metro and now called Modern UI, where apps are presented in a pleasing grid. This is also the Start Menu, so there is no longer a comfortable Windows button to click to get around the OS.

Like Windows Phone, most of the tiles representing the apps are live, providing quick insight into the content held within. The tile for the application called Photo previews the pics within the app. Mail shows the sender and subject line of the last received message. Likewise, Weather shows the current weather, Messages shows the top message, and People shows the latest social media update. It’s a smooth, versatile and smart take on a mobile tablet OS.

The Surface seems built specifically for the touch interface. The 16:9 screen matches the widescreen flow of the operating system perfectly. The Surface utilizes bezel gestures to hide menus. Starting with your finger on the Surface’s black bezel, slide onto the screen to reveal a menu tray. Doing this on the right side displays the main menu where most options are held. Doing it on the left switches between applications. Make a quick on and off swipe on the left side to display the application switcher. Most apps also have customized option drawers on the top and bottom of the screen.

These hidden menus allow the apps to take full advantage of the screen; there’s no need to display a menu bar when they’re just a bezel swipe away.

As great as Windows 8/RT looks, it sadly fails to provide a cohesive user experience.

Even though Windows RT features myriad next-gen features, most of them are half-baked at launch. The application store is missing key apps, the single sign-on fails to sync user profiles across devices, and the social sharing features do not natively include Twitter or Facebook.

Microsoft itself didn’t fully commit to the touch interface. Windows 8’s dependency on the classic environment will not allow the Surface to be a tablet. Half the apps pre-installed on the Surface RT launch in the classic desktop interface, most notably Microsoft Office, where the smaller user elements do not play nicely with the touch interface.

Windows traditionalists will find the Classic interface a lovely memory of the good ol’ times. Most everything is where it’s supposed to be – besides the Start Menu – and it runs and acts like Windows 7. But for the Surface, with its 10.6-inch 16:9 screen, it’s hard to use with the touchscreen alone. The elements are too small to be controlled efficiently with just touch. After all, the Desktop emulates an environment designed several generations ago for use with a keyboard and mouse.

Windows RT is launching fairly bereft of apps. Windows RT ships with an app store but right now there isn’t anything worthwhile available besides Evernote and Netflix. The operating system is on the verge of launching and it doesn’t have the support of a vast library of apps. There isn’t a Facebook app. Twitter is missing, as well as anything pertaining to Google Apps. There is no Dropbox, Fruit Ninja, or Angry Birds. And don’t trust the application store: there are a bunch of 3rd party apps masquerading as official apps. Worse yet, IE 10 is painful to use.

Windows 8/RT ships with two different versions of Internet Explorer. One lives in the new touch interface and the other lives a different life in Desktop; both are completely oblivious to each other’s existence. Open up a couple of tabs in one and the other does not replicate the behavior. The Internet Explorer in Desktop is the good ol’ IE — it should be very familiar to most. The one in the touch interface is completely different. It’s slow and clunky. Thanks to larger buttons, it’s easier to use by touch, but that’s its only redeeming quality. I hate using it.

The lack of apps is one of the Surface’s main downfalls.

The sad state of Windows RT’s application store is eerily similar to that of Windows Phone. Launched nearly two years ago, the application selection on Windows Phone still pales in comparison to that of Android or iOS. Microsoft has spent two years attempting to get developers on-board its smartphone platform. Will developers ignore Windows RT as well? No one knows and that’s the trick.

It’s true that the iPad launched with very few apps, but owners could also run iPhone apps while the legions of iOS developers furiously adapted to the larger screen. Windows 8/RT lacks the ability to run mobile apps and Microsoft seemingly doesn’t have the same sort of rabid developers at its disposal (otherwise there would already be apps).

Bing Apps

Moments after the Surface is turned on for the first time, it’s easy to dive right into content thanks to Bing Apps. Bing Daily, Bing Sports, Bing Travel, and Bing Finance look fantastic and they’re loaded with content. With large lead photos and strong headlines, they’re sure to be some of the most used apps, at least until Windows 8 is embraced by 3rd party devs.

These apps offer a lot of info. For instance, Bing Sports leads with the news, but swipe left and it reveals the schedule for user-designated teams. Swipe a little more to reveal standings charts. The next section shows panels of leading players followed by panels of the leading teams of the default sport. Swipe a little bit more and you get a large advertisement.

The Surface ships with ad-supported apps installed. Every Bing App has a single ad located at the end of its news feed. It’s a little shady but not exactly scandalous.

Video

The Surface, with its 16:9 screen, seems designed specifically for watching movies. Launch the video player app and it immediately loads the Xbox Video store. This app can play back movies loaded on the internal storage or microSD card, but it’s primarily designed to be a video store. And like the Xbox dashboard it emulates, there are ads here too.

Xbox Video offers a good selection of movies but they’re a tad pricey like on iTunes. The Avengers in HD costs $20; it’s $15 on Amazon. A single HD episode of The Walking Dead costs $2.99 on Xbox but $1.99 on Amazon.

The Surface RT lacks a powerful video playback solution. Microsoft didn’t include Windows Media Player or Windows Media Center in Windows RT. The included video app is very limited but it did manage to play back 720p .avi files off of a microSD card. Fans of sideloading content, however, may find themselves disappointed.

Photos

The Windows RT photo app is a thing of beauty. It’s fast, flexible and easily pulls in photos from Facebook and Flickr. It’s deeply integrated into Windows RT so sharing and printing is built in.

Once authorized, the app can access Facebook photos and videos and displays them in such a way as if they’re stored locally.

While the app runs great, it lacks any editing tools — even basic items like cropping are missing. It’s mostly designed to show off the screen and UI elements.

The Surface launches to a crowded market dominated by just a few players. The $499 iPad is the top tablet in the world, currently commanding a dominant portion of the tablet market share. The $199 tablets, the Kindle Fire HD, Nexus 7, and Nook Tablet HD, offer a lot of tablet for the money. But Surface is fundamentally different from the aforementioned options.

The Surface is a convertible PC, meaning it’s a personal computer with a detachable keyboard, not a consumer tablet. The Surface is incomplete without a Touch Cover. It’s not designed to simply be a tablet. It’s too large and clunky to be used without a keyboard.

With its awkward size and incomplete operating system, the Surface fails to excel at anything particular in the way other tablets have. The iPad provides better casual computing. The Android tablets sync beautifully with Google accounts and the media tablets by Amazon and B&N offer an unmatched content selection.

The Surface’s closest natural competitor is a budget Ultrabook. But even here, most Ultrabooks run Windows 8 rather than Windows RT, allowing for the full Windows experience. Plus most Ultrabooks have a larger screen but smaller footprint when used, offer longer battery life, and are generally more powerful.

Microsoft built the Surface to be a Jack of all trades, but failed to make sure it was even competent at any one task.

Should you buy the Surface RT? No.

The Surface RT is a product of unfortunate timing. The hardware is great. The Type Cover turns it into a small convertible tablet powered by a promising OS in Windows RT. That said, there are simply more mature options available right now.

Microsoft needs to court developers for Windows RT. As a consumer tablet, the Surface lacks all of the appeal of the iPad. There aren’t any mainstream apps and Microsoft has failed to connect its Windows desktop and mobile ecosystems in any meaningful way, the way Android or iOS/OS X do.

Windows RT is a brand new operating system that is incompatible with legacy Windows software. This immediately limits the appeal since the Surface RT is dependent on Windows RT’s application Store – a storefront that is currently devoid of anything useful.

The Surface RT isn’t a tablet. It’s not a legitimate alternative to the iPad or Galaxy Note 10.1. That’s not a bad thing. With the Touch Covers, the Surface RT is a fine alternative to a laptop, offering a slightly limited Windows experience in a small, versatile form. Just don’t call it an iPad killer.

If properly nurtured, Windows RT and the Surface RT could be something worthwhile. But right now, given Microsoft’s track record with Windows Phone, buying the Surface RT is a huge risk. The built-in apps are very limited and the Internet experience is fairly poor. Skip this generation of the Surface RT or at least wait until it offers a richer, more useful experience. While we’re bullish on Windows 8, the RT incarnation just isn’t quite there.

Is it heavy?

- Not heavy, but solid. Listed by Microsoft as “under 1.5lbs”. The new iPad with 3G is 1.46 lbs.

How much does the Surface cost?

- $499 for the 32GB. $599 for the 32GB plus Touch Cover. $699 for 64GB plus Touch Cover.

Who should buy one?

- Very few people. Perhaps a student who already utilizes Microsoft’s cloud storage system, SkyDrive, and is looking for a compact note-taking device that can sometimes play a movie.

Is there a lot of glare?

- Yes, but no more than other tablets.

Is the Kickstand adjustable?

- No, locked in at 22 degrees.

Can I open up the Surface?

- Yes, the backplate is secured with 12 Torx head screws.

Can I download pictures from my camera to the Surface?

- Yes, from the microSD card slot or over USB.

Does the Surface work with 3G/4G networks?

- No, the Surface RT does not have a built-in cellular modem and because of Windows RT’s application requirement, you cannot install a carrier’s application for USB modems. A WiFi hotspot will work.

Does Windows RT have a lot of apps?

- No, it likely doesn’t have any of your top apps. The most popular ones in Windows RT are Netflix and Kindle.

Images made by our graphics ninja, Bryce Durbin.

A Stuxnet Future? Yes, Offensive Cyber-Warfare is Already Here

Via ISN ETHZ

By Mihoko Matsubara

-----

Server room at CERN

Mounting Concerns and Confusion

Stuxnet proved that any actor with sufficient know-how in terms of cyber-warfare can physically inflict serious damage upon any infrastructure in the world, even without an internet connection. In the words of former CIA Director Michael Hayden: “The rest of the world is looking at this and saying, ‘Clearly someone has legitimated this kind of activity as acceptable international conduct’.”

Governments are now alert to the enormous uncertainty created by cyber-instruments and especially worried about cyber-sabotage against critical infrastructure. As US Secretary of Defense Leon Panetta warned in front of the Senate Armed Services Committee in June 2011, “the next Pearl Harbor we confront could very well be a cyber-attack that cripples our power systems, our grid, our security systems, our financial systems, our governmental systems.” On the other hand, a lack of understanding about instances of cyber-warfare such as Stuxnet has led to confused expectations about what cyber-attacks can achieve. Some, however, remain excited about the possibilities of this new form of warfare. For example, retired US Air Force Colonel Phillip Meilinger expects that “[a]… bloodless yet potentially devastating new method of war” is coming. However, under current technological conditions, instruments of cyber-warfare are not sophisticated enough yet to deliver a decisive blow to the adversary. As a result, cyber-capabilities still need to be used alongside kinetic instruments of war.

Advantages of Cyber-Capabilities

Cyber-capabilities provide three principal advantages to those actors that possess them. First, they can deny or degrade electronic devices including telecommunications and global positioning systems in a stealthy manner irrespective of national borders. This means potentially crippling an adversary’s intelligence, surveillance, and reconnaissance capabilities, delaying an adversary’s ability to retaliate (or even identify the source of an attack), and causing serious dysfunction in an adversary’s command and control and radar systems.

Second, precise and timely attribution is particularly challenging in cyberspace because skilled perpetrators can obfuscate their identity. This means that responsibility for attacks needs to be attributed forensically which not only complicates retaliatory measures but also compromises attempts to seek international condemnation of the attacks.

Finally, attackers can elude penalties because there is currently no international consensus as to what actually constitutes an ‘armed attack’ or ‘imminent threat’ (which can invoke a state’s right of self-defense under Article 51 of the UN Charter) involving cyber-weapons. Moreover, while some countries - including the United States and Japan - insist that the principles of international law apply to the 'cyber' domain, others such as China argue that cyber-attacks “do not threaten territorial integrity or sovereignty.”

Disadvantages of Cyber-Capabilities

On the other hand, high-level cyber-warfare has three major disadvantages for would-be attackers. First, the development of a sophisticated ‘Stuxnet-style’ cyber-weapon for use against well-protected targets is time- and resource-intensive. Only a limited number of states possess the resources required to produce such weapons. For instance, the deployment of Stuxnet required arduous reconnaissance and an elaborate testing phase in a mirrored environment. F-Secure Labs estimates that Stuxnet took more than ten man-years of work to develop, underscoring just how resource- and labor-intensive a sophisticated cyber-weapon is to produce.

Second, sophisticated and costly cyber-weapons are unlikely to be adapted for generic use. As Thomas Rid argues in “Think Again: Cyberwar,” different system configurations need to be targeted by different types of computer code. The success of a highly specialized cyber-weapon therefore requires the specific vulnerabilities of a target to remain in place. If, on the contrary, a targeted vulnerability is ‘patched’, the cyber-operation will be set back until new malware can be prepared. Moreover, once the existence of malware is revealed, it will tend to be quickly neutralized – and can even be reverse-engineered by the target to assist in future retaliation on their part.

Finally, it is difficult to develop precise, predictable, and controllable cyber-weapons for use in a constantly evolving network environment. The growth of global connectivity makes it difficult to assess the implications of malware infection and challenging to predict the consequences of a given cyber-attack. Stuxnet, for instance, was not supposed to leave Iran’s Natanz enrichment facility, yet the worm spread to the World Wide Web, infecting other computers and alerting the international community to its existence. According to the Washington Post, US forces contemplated launching cyber-attacks on Libya’s air defense system before NATO’s airstrikes. But this idea was quickly abandoned due to the possibility of unintended consequences for civilian infrastructure such as the power grid or hospitals.

Implications for Military Strategy

Despite such disadvantages, cyber-attacks are nevertheless part of contemporary military strategy including espionage and offensive operations. In August 2012, US Marine Corps Lieutenant General Richard Mills confirmed that operations in Afghanistan included cyber-attacks against the adversary. Recalling an incident in 2010, he said, "I was able to get inside his nets, infect his command-and-control, and in fact defend myself against his almost constant incursions to get inside my wire, to affect my operations."

His comments confirm that the US military now employs a combination of cyber- and traditional offensive measures in wartime. As Thomas Mahnken points out in “Cyber War and Cyber Warfare,” cyber-attacks can produce disruptive and surprising effects. And while cyber-attacks are not a direct cause of death, their consequences may lead to injuries and loss of life. As Mahnken argues, it would be inconceivable to directly cause Hiroshima-type damage and casualties merely with cyber-attacks. While a “cyber Pearl Harbor” might shock the adversary on a similar scale as its namesake in 1941, the ability to inflict a decisive, extensive, and foreseeable blow requires kinetic support – at least under current technological conditions.

Implications for Critical Infrastructure

Nevertheless, the shortcomings of cyber-attacks will not discourage malicious actors in peacetime. The anonymous, borderless, and stealthy nature of the 'cyber' domain offers an extremely attractive asymmetrical platform for inflicting physical and psychological damage to critical infrastructure such as finance, energy, power and water supply, telecommunication, and transportation as well as to society at large. Since well before Stuxnet, successful cyber-attacks have been launched against vulnerable yet important infrastructure systems. For example, in 2000 a cyber-attack against an Australian sewage control system resulted in millions of liters of raw sewage leaking into a hotel, parks, and rivers.

Accordingly, the safeguarding of cyber-security is an increasingly important consideration for heads of state. In July 2012, for example, President Barack Obama published an op-ed in the Wall Street Journal warning about the unique risk cyber-attacks pose to national security. In particular, the US President emphasized that cyber-attacks have the potential to severely compromise the increasingly wired and networked lifestyle of the global community. Since the 1990s, the process control systems of critical infrastructures have been increasingly connected to the Internet. This has unquestionably improved efficiencies and lowered costs, but has also left these systems alarmingly vulnerable to penetration. In March 2012, McAfee and Pacific Northwest National Laboratory released a report which concluded that power grids are rendered vulnerable due to their common computing technologies, growing exposure to cyberspace, and increased automation and interconnectivity.

Despite such concerns, private companies may be tempted to prioritize short-term profits rather than allocate more funds to (or accept more regulation of) cyber-security, especially in light of prevailing economic conditions. After all, it takes time and resources to probe vulnerabilities and hire experts to protect them. Nevertheless, leadership in both the public and private sectors needs to recognize that such an attitude provides opportunities for perpetrators to take advantage of security weaknesses to the detriment of economic and national security. It is, therefore, essential for governments to educate and encourage --- and if necessary, fund --- the private sector to provide appropriate cyber-security in order to protect critical infrastructure.

Implications for Espionage

The sophistication of the Natanz incident, in which Stuxnet was able to exploit Iranian vulnerabilities, stunned the world. Advanced Persistent Threats (APTs) were employed to find weaknesses by stealing data which made it possible to sabotage Iran’s nuclear program. Yet APTs can also be used in many different ways, for example against small companies in order to exploit larger business partners that may be in possession of valuable information or intellectual property. As a result, both the public and private sectors must brace themselves for daily cyber-attacks and espionage on their respective infrastructures. Failure to do so may result in the theft of intellectual property as well as trade and defense secrets that could undermine economic competitiveness and national security.

In an age of cyber espionage, the public and private sectors must also reconsider what types of information should be deemed “secret,” how to protect that information, and how to share alerts with others without compromising sensitive details. While realization of the need for this kind of wholesale re-evaluation is growing, many actors remain hesitant. Indeed, such hesitancy is often driven by fears that doing so may reveal their vulnerabilities, harm their reputations, and benefit their competitors. Of course, there are certain types of information that should remain unpublicized so as not to damage the business, economy, and national security. However, such classifications must not be abused at the expense of the balance between public interest and security.

Cyber-Warfare Is Here to Stay

The Stuxnet incident is set to encourage the use of cyber-espionage and sabotage in warfare. However, not all countries can afford to acquire offensive cyber-capabilities as sophisticated as Stuxnet and a lack of predictability and controllability continues to make the deployment of cyber-weapons a risky business. As a result, many states and armed forces will continue to combine both kinetic and 'cyber' tactics for the foreseeable future. Growing interconnectivity also means that the number of potential targets is set to grow. This, in turn, means that national cyber-security strategies will need to confront the problem of prioritization. Both the public and private sectors will have to decide which information and physical targets need to be protected and work together to share information effectively.
