Friday, September 09. 2011

Google -- "How our cloud does more with less"

-----

We’ve worked hard to reduce the amount of energy our services use. In fact, to provide you with Google products for a month—not just search, but Google+, Gmail, YouTube and everything else we have to offer—our servers use less energy per user than a light left on for three hours. And, because we’ve been a carbon-neutral company since 2007, even that small amount of energy is offset completely, so the carbon footprint of your life on Google is zero.

We’ve learned a lot in the process of reducing our environmental impact, so we’ve added a new section called “The Big Picture” to our Google Green site with numbers on our annual energy use and carbon footprint.

We started the process of getting to zero by making sure our operations use as little energy as possible. For the last decade, energy use has been an obsession. We’ve designed and built some of the most efficient servers and data centers in the world—using half the electricity of a typical data center. Our newest facility in Hamina, Finland, opening this weekend, uses a unique seawater cooling system that requires very little electricity.

Whenever possible, we use renewable energy. We have a large solar panel installation at our Mountain View campus, and we’ve purchased the output of two wind farms to power our data centers. For the greenhouse gas emissions we can’t eliminate, we purchase high-quality carbon offsets.

But we’re not stopping there. By investing hundreds of millions of dollars in renewable energy projects and companies, we’re helping to create 1.7 GW of renewable power. That’s enough to power over 350,000 homes, and far more than what our operations consume.
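As a rough sanity check, you can divide the 1.7 GW by 350,000 homes to get the capacity implied per home. This is a minimal sketch that assumes the 1.7 GW is nameplate capacity. The post does not say that, and wind and solar plants average well below nameplate output.

```python
# Figures quoted in the post above.
RENEWABLE_CAPACITY_W = 1.7e9  # 1.7 GW
HOMES_POWERED = 350_000       # "over 350,000 homes"

def watts_per_home(capacity_w: float, homes: int) -> float:
    """Capacity implied per home if the total were split evenly."""
    return capacity_w / homes

per_home = watts_per_home(RENEWABLE_CAPACITY_W, HOMES_POWERED)
print(f"{per_home / 1000:.2f} kW per home")
```

The post says "over" 350,000 homes, so the result is an upper bound on the implied capacity per home.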

Finally, our products can help people reduce their own carbon footprints. The study (PDF) we released yesterday on Gmail is just one example of how cloud-based services can be much more energy efficient than locally hosted services, helping businesses cut their electricity bills.

Tuesday, September 06. 2011

‘Modular’ 3D Printed Shoes by Objet on Display at London’s Victoria and Albert Museum

Via Objet

-----

Marloes ten Bhömer is a critically acclaimed Dutch designer. She produces some incredible, otherworldly shoe designs based on a unique combination of art and technological functionality.

One of her most exciting new designs is called the 'Rapidprototypedshoe' – created on the Objet Connex multi-material 3D printer.

Why did she use rapid prototyping? According to Marloes, it is because "rapid prototyping – adding material in layers – rather than traditional shoe manufacturing methods – could help me create something entirely new within just a few hours."

And why Objet? Again, in her words: "Objet Connex printers make it possible to print an entire shoe – albeit a concept shoe – including a hard heel and a flexible upper in one build, which just isn't possible with other 3D printing technologies."

The Objet Connex multi-material 3D printer allows the simultaneous printing of both rigid and rubber-like material grades and shades within a single prototype, which is why it's used by many of the world's largest shoe manufacturers. And of course, because it's 3D printing and not traditional manufacturing methods, there are no expensive set-up costs and no minimum quantities to worry about!

This particular shoe design is based on a modular concept – with an interchangeable heel to allow for specific customizations as well as easy repairs (see the bottom photo which shows the heel detached).

The 3D printed modular shoe will be available for viewing at the Power of Making exhibition – starting today at the world-famous Victoria and Albert Museum in London. If you are anywhere near the UK this is worth a visit.

If you can't make it right at this moment, don't worry – the shoe and the exhibit will remain there until January 2nd.

The Power of Making exhibition is created in collaboration with the Crafts Council. Curator Daniel Charny's aim is to encourage visitors to consider the process of making, not just the final results, and in that respect the 3D printing process is particularly salient.

For more details on this story read the Press Release here.

-----

See also the first 'printed' plane

Monday, August 29. 2011

Google TV set to launch in Europe

Via TechSpot

-----

During his keynote speech at the annual Edinburgh Television Festival last Friday, Google Executive Chairman Eric Schmidt announced plans to launch Google's TV service in Europe starting early next year.

The original launch in the United States back in October 2010 received rather poor reviews, with questions raised about the lack of available content. As a result, prices on Logitech set-top boxes were slashed to as low as $99 in July, and sales of the integrated Sony Bravia Google TV models were similarly poor.

How much of a success Google TV will become in Europe remains to be seen, with UK broadcasters especially concerned about the damage it could do to their existing business models. Strong competition from established companies like Sky and Virgin Media could further complicate matters for the Internet search giant.

During the MacTaggart lecture, Schmidt sought to calm the fears of the broadcasting elite, taking full advantage of the fact he was the first non-TV executive to ever be invited to present the prestigious keynote lecture at the festival.

“We seek to support the content industry by providing an open platform for the next generation of TV to evolve, the same way Android is an open platform for the next generation of mobile,” said Schmidt. “Some in the US feared we aimed to compete with broadcasters or content creators. Actually our intent is the opposite…”

Much like in the United States, Google faces a long battle to convince broadcasters and major media players of its intent to help evolve TV platforms with open technologies, rather than topple them.

Google seems to have firmly set its sights on a slice of the estimated $190bn TV advertising market, which will do little to calm fears, especially when you consider that Google's online advertising figures for 2010 were just $28.9bn in comparison.

Friday, August 26. 2011

MicroGen Chips to Power Wireless Sensors Through Environmental Vibrations

Via Daily Tech

-----

MicroGen Systems says its chips differ from other vibrational energy-harvesting devices because they have low manufacturing costs and use nontoxic material instead of PZT, which contains lead.

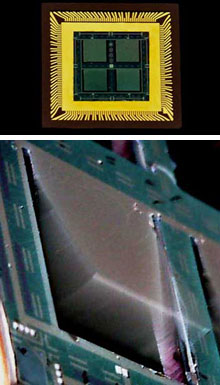

(TOP) Prototype wireless sensor battery with four energy-scavenging chips. (BOTTOM) One chip with a vibrating cantilever (Source: MicroGen Systems)

MicroGen Systems is in the midst of creating energy-scavenging chips that will convert environmental vibrations into electricity to power wireless sensors.

The chip's core consists of an array of small silicon cantilevers that measure one square centimeter and sit on a "postage-stamp-sized" thin-film battery. These cantilevers oscillate when the chip is shaken, and at their base is a piezoelectric material that produces an electrical potential when strained by vibrations.

The current travels from the piezoelectric array through an electrical device that converts it into a form compatible with the battery. When jostled by the vibrations of a rotating tire, for example, the chip can generate 200 microwatts of power.
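To put 200 microwatts in perspective, energy is just power multiplied by time. This minimal sketch assumes the chip keeps producing 200 µW continuously. The article only says it can generate that much when jostled by a rotating tire, so real output will vary with the vibration.

```python
HARVESTED_POWER_W = 200e-6  # 200 microwatts, the figure quoted for a rotating tire

def energy_joules(power_w: float, seconds: float) -> float:
    """Energy accumulated at constant power: E = P * t."""
    return power_w * seconds

per_hour = energy_joules(HARVESTED_POWER_W, 3600)
per_day = energy_joules(HARVESTED_POWER_W, 24 * 3600)
print(f"{per_hour:.2f} J per hour, {per_day:.2f} J per day")
```

Whether that budget is enough depends on how often a sensor wakes up and transmits, which the article does not specify.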

Critics such as David Culler, chair of computer science at the University of California, have said that 200 microwatts may be useful at a small scale, but that other harvesting techniques, using solar power, light, heat and so on, are more competitive technologies since they can either store the electrical energy in a battery or use it right away.

But according to Robert Andosca, founder and president of Ithaca, New York-based MicroGen Systems, his chips differ from other vibrational energy-harvesting devices because they have low manufacturing costs and use nontoxic material instead of PZT, which contains lead.

Most piezoelectric materials must be assembled by hand and can be quite large. But MicroGen's chips can be made inexpensively and small because they are based on silicon microelectromechanical systems (MEMS). The chips can be made on the same machines used to make computer chips.

MicroGen Systems hopes to use these energy-harvesting chips to power wireless sensors like those that monitor tire pressure. The chips could eliminate the need to replace these sensors' batteries.

"It's a pain in the neck to replace those batteries," said Andosca.

MicroGen Systems plans to sell these chips for around $1 apiece, depending on volume, and hopes to begin selling them in about a year.

Wednesday, August 24. 2011

The First Industrial Evolution

Via Big Think

by Dominic Basulto

-----

If the first industrial revolution was all about mass manufacturing and machine power replacing manual labor, the First Industrial Evolution will be about the ability to evolve your personal designs online and then print them using popular 3D printing technology. Once these 3D printing technologies enter the mainstream, they could lead to a fundamental change in the way that individuals - even those without any design or engineering skills - are able to create beautiful, state-of-the-art objects on demand in their own homes. It is, quite simply, the democratization of personal manufacturing on a massive scale.

At the Cornell Creative Machines Lab, it's possible to glimpse what's next for the future of personal manufacturing. Researchers led by Hod Lipson created the website Endless Forms (a clever allusion to the famous last line of Charles Darwin’s On the Origin of Species) to “evolve” everyday objects and then bring them to life using 3D printing technologies. Even without any technical or design expertise, it's possible to create and print forms ranging from lamps to mushrooms to butterflies. You literally "evolve" printable, 3D objects through a process that echoes the principles of evolutionary biology. In fact, to create this technology, the Cornell team studied how living items like oak trees and elephants evolve over time.
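The post doesn't describe how Endless Forms works internally. The following is therefore only a generic sketch of the mutate-and-select loop that evolutionary design borrows from biology, not the site's actual method. Each "object" is a hypothetical list of shape parameters. The automatic `score` function is also hypothetical: it stands in for the person who, on a site like Endless Forms, picks the designs they like best.

```python
import random
from typing import Callable, List

Genome = List[float]  # hypothetical shape parameters (e.g. height, width, curvature)

def mutate(genome: Genome, rng: random.Random, sigma: float = 0.1) -> Genome:
    """Copy a parent with small random changes, like mutation in offspring."""
    return [g + rng.gauss(0.0, sigma) for g in genome]

def evolve(score: Callable[[Genome], float], generations: int = 50,
           pop_size: int = 12, keep: int = 3, seed: int = 0) -> Genome:
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the designs the "chooser" likes best.
        parents = sorted(population, key=score, reverse=True)[:keep]
        # Reproduction: refill the population with mutated copies of the survivors.
        children = [mutate(rng.choice(parents), rng) for _ in range(pop_size - keep)]
        population = parents + children
    return max(population, key=score)

# Hypothetical stand-in for user preference: designs closer to a target shape score higher.
TARGET = [0.5, -0.2, 0.8]

def score(genome: Genome) -> float:
    return -sum((g - t) ** 2 for g, t in zip(genome, TARGET))

best = evolve(score)
print([round(x, 2) for x in best])
```

Because the survivors are carried over unchanged each generation, the best design never gets worse; the random mutations are what let it drift toward whatever the chooser prefers.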

3D printing capabilities, once limited to the laboratory, are now hitting the mainstream. Consider the fact that MakerBot Industries just landed $10 million from VC investors. In the future, each of us may have a personal 3D printer in the home, ready to print out personal designs on demand.

At the same time, there’s been a radical rethinking of how products are designed and brought to market. Take On the Origin of Tepees, a book by British scientist Jonnie Hughes, which presents a highly provocative thesis: What if design evolves the same way that humans do? What if cultural ideas evolve the way humans do? One example cited by Hughes is the simple cowboy hat from the American Wild West. What if an object like the cowboy hat “evolved” itself, using cowboys and ranch hands simply as a unique “selective environment” so that it could evolve over time?

Wait a second, what's going on here? Objects using humans to evolve themselves? 3D Printers? Someone's been drinking the Kool-Aid, right?

But if you’ve read Richard Dawkins' bestseller The Selfish Gene, you can see where Hughes is headed with his ideas. Dawkins coined the term “meme” back in 1976 to express the concept of thoughts and ideas having a life of their own, free to mutate and adapt, while cleverly using their human “hosts” as a reproductive vehicle to pass on these memes to others. Memes functioned like genes, in that their only goal was to reproduce for the next generation. In such a way, evolved designs are a form of “teme”, a term first popularized by Susan Blackmore, author of The Meme Machine, to denote ideas that are replicated via technology.

What if that gorgeous iPad 2 you’re holding in your hand was actually “evolved” and not “designed”? What if it is the object that controls the design, and not the designer that controls the object? Hod Lipson, an expert on self-aware robots and a pioneer of the 3D printing movement, has claimed that we are on the brink of the second industrial revolution. However, if objects really do "evolve," is it more accurate to say that we are on the brink of The First Industrial Evolution?

The final frontier, of course, is not the ability of humans to print out beautifully evolved objects on demand using 3D printers in their homes (although that’s quite cool). The final frontier is the ability of self-aware objects to independently “evolve” humans and then print them out as they need them. Sound far-fetched? Well, it’s now possible to print 3D human organs and 3D human skin. When machine intelligence progresses to a certain point, what’s to stop independent, self-aware machines from printing human organs? The implications – for both atheists and true believers – are perhaps too overwhelming even to consider.

Friday, August 19. 2011

IBM unveils cognitive computing chips, combining digital 'neurons' and 'synapses'

Via Kurzweil

-----

IBM researchers unveiled today a new generation of experimental computer chips designed to emulate the brain’s abilities for perception, action and cognition.

In a sharp departure from traditional von Neumann computing concepts in designing and building computers, IBM’s first neurosynaptic computing chips recreate the phenomena between spiking neurons and synapses in biological systems, such as the brain, through advanced algorithms and silicon circuitry.

The technology could yield many orders of magnitude less power consumption and space than used in today’s computers, the researchers say. Its first two prototype chips have already been fabricated and are currently undergoing testing.

Called cognitive computers, systems built with these chips won’t be programmed the same way traditional computers are today. Rather, cognitive computers are expected to learn through experiences, find correlations, create hypotheses, and remember (and learn from) the outcomes, mimicking the brain’s structural and synaptic plasticity.

“This is a major initiative to move beyond the von Neumann paradigm that has been ruling computer architecture for more than half a century,” said Dharmendra Modha, project leader for IBM Research.

“Future applications of computing will increasingly demand functionality that is not efficiently delivered by the traditional architecture. These chips are another significant step in the evolution of computers from calculators to learning systems, signaling the beginning of a new generation of computers and their applications in business, science and government.”

Neurosynaptic chips

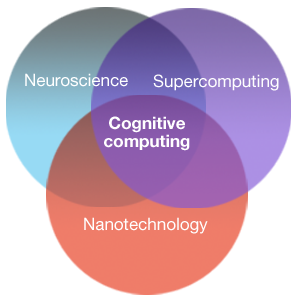

IBM is combining principles from nanoscience, neuroscience, and supercomputing as part of a multi-year cognitive computing initiative. IBM’s long-term goal is to build a chip system with ten billion neurons and one hundred trillion synapses, while consuming merely one kilowatt of power and occupying less than two liters of volume.
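Spelling out the arithmetic in that goal helps show how tight the budget is. This sketch averages over the whole system, which assumes power and synapses are spread evenly across neurons. The stated goal does not specify that.

```python
NEURONS = 10e9      # ten billion
SYNAPSES = 100e12   # one hundred trillion
POWER_W = 1_000.0   # one kilowatt
VOLUME_L = 2.0      # two liters

synapses_per_neuron = SYNAPSES / NEURONS
watts_per_neuron = POWER_W / NEURONS
neurons_per_liter = NEURONS / VOLUME_L

print(f"{synapses_per_neuron:,.0f} synapses per neuron")
print(f"{watts_per_neuron * 1e9:.0f} nW per neuron")
print(f"{neurons_per_liter:.1e} neurons per liter")
```

That works out to about ten thousand synapses and roughly 100 nanowatts per neuron, on average.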

While they contain no biological elements, IBM’s first cognitive computing prototype chips use digital silicon circuits inspired by neurobiology to make up a “neurosynaptic core” with integrated memory (replicated synapses), computation (replicated neurons) and communication (replicated axons).

IBM has two working prototype designs. Both cores were fabricated in 45 nm SOI-CMOS and each contains 256 neurons. One core contains 262,144 programmable synapses and the other contains 65,536 learning synapses. The IBM team has successfully demonstrated simple applications like navigation, machine vision, pattern recognition, associative memory and classification.
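To make "programmable synapses" and "spiking neurons" more concrete, here is a toy core built on the same general idea. Each entry in a crossbar says whether an input axon connects to a neuron. Each neuron is a simple integrate-and-fire unit: it adds up its input spikes, leaks a little each time step, and fires when it crosses a threshold. The class name, sizes, leak, threshold and binary weights are all hypothetical, and the post does not describe IBM's actual neuron model. For scale, 262,144 = 1,024 × 256 and 65,536 = 256 × 256, which fits a crossbar of 1,024 or 256 inputs feeding 256 neurons. The post itself does not give the input counts, though.

```python
from typing import List

class ToyNeurosynapticCore:
    """Hypothetical integrate-and-fire core with a binary synapse crossbar."""

    def __init__(self, crossbar: List[List[int]], threshold: float = 2.0,
                 leak: float = 0.5):
        self.crossbar = crossbar          # crossbar[axon][neuron] in {0, 1}
        self.n_neurons = len(crossbar[0])
        self.threshold = threshold
        self.leak = leak
        self.potential = [0.0] * self.n_neurons

    def tick(self, axon_spikes: List[int]) -> List[int]:
        """Advance one time step; return 1 for each neuron that fired."""
        fired = []
        for n in range(self.n_neurons):
            # Integrate: count spikes arriving on axons connected to this neuron.
            synaptic_input = sum(
                spike * self.crossbar[a][n] for a, spike in enumerate(axon_spikes)
            )
            # Leak (never below zero), then add the new input.
            v = max(0.0, self.potential[n] - self.leak) + synaptic_input
            if v >= self.threshold:
                fired.append(1)
                v = 0.0                   # reset after a spike
            else:
                fired.append(0)
            self.potential[n] = v
        return fired

# 3 input axons x 2 neurons: neuron 0 listens to axons 0 and 1, neuron 1 only to axon 2.
core = ToyNeurosynapticCore([[1, 0], [1, 0], [0, 1]])
print(core.tick([1, 1, 1]))  # [1, 0]: neuron 0 gets 2 spikes and fires
print(core.tick([0, 0, 1]))  # [0, 0]: neuron 1 is at (1 - 0.5) + 1 = 1.5
print(core.tick([0, 0, 1]))  # [0, 1]: neuron 1 reaches (1.5 - 0.5) + 1 = 2.0
```

Here memory (the crossbar and potentials) sits right next to the computation that uses it, which loosely echoes the "integrated memory, computation and communication" idea described above.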

IBM’s overarching cognitive computing architecture is an on-chip network of lightweight cores, creating a single integrated system of hardware and software. It represents a potentially more power-efficient architecture that has no set programming, integrates memory with processor, and mimics the brain’s event-driven, distributed and parallel processing.

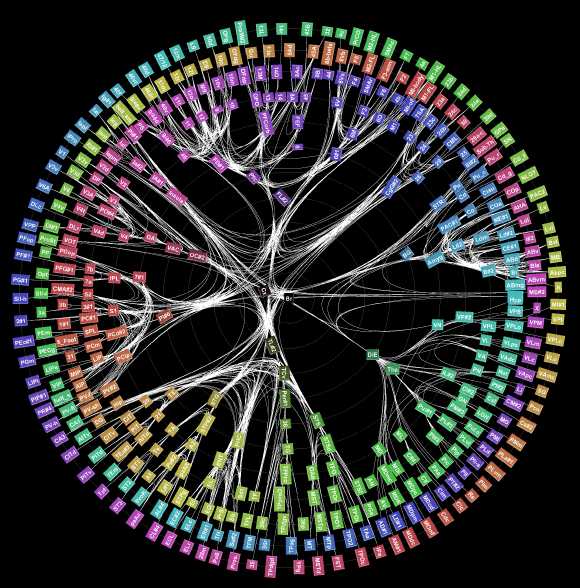

Visualization of the long distance network of a monkey brain (credit: IBM Research)

SyNAPSE

The company and its university collaborators also announced they have been awarded approximately $21 million in new funding from the Defense Advanced Research Projects Agency (DARPA) for Phase 2 of the Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) project.

The goal of SyNAPSE is to create a system that not only analyzes complex information from multiple sensory modalities at once, but also dynamically rewires itself as it interacts with its environment — all while rivaling the brain’s compact size and low power usage.

For Phase 2 of SyNAPSE, IBM has assembled a world-class multi-dimensional team of researchers and collaborators to achieve these ambitious goals. The team includes Columbia University; Cornell University; University of California, Merced; and University of Wisconsin, Madison.

Why Cognitive Computing

Future chips will be able to ingest information from complex, real-world environments through multiple sensory modes and act through multiple motor modes in a coordinated, context-dependent manner.

For example, a cognitive computing system monitoring the world’s water supply could contain a network of sensors and actuators that constantly record and report metrics such as temperature, pressure, wave height, acoustics and ocean tide, and issue tsunami warnings based on its decision making.

Similarly, a grocer stocking shelves could use an instrumented glove that monitors sights, smells, texture and temperature to flag bad or contaminated produce. Making sense of real-time input flowing at an ever-dizzying rate would be a Herculean task for today’s computers, but would be natural for a brain-inspired system.

“Imagine traffic lights that can integrate sights, sounds and smells and flag unsafe intersections before disaster happens or imagine cognitive co-processors that turn servers, laptops, tablets, and phones into machines that can interact better with their environments,” said Dr. Modha.

IBM has a rich history in the area of artificial intelligence research going all the way back to 1956 when IBM performed the world’s first large-scale (512 neuron) cortical simulation. Most recently, IBM Research scientists created Watson, an analytical computing system that specializes in understanding natural human language and provides specific answers to complex questions at rapid speeds.

---

Thursday, August 11. 2011

Does Facial Recognition Technology Mean the End of Privacy?

Via Big Think

by Dominic Basulto

-----

At the Black Hat security conference in Las Vegas, researchers from Carnegie Mellon demonstrated how the same facial recognition technology used to tag Facebook photos could be used to identify random people on the street. This facial recognition technology, when combined with geo-location, could fundamentally change our notions of personal privacy. In Europe, facial recognition technology has already stirred up its share of controversy, with German regulators threatening to sue Facebook for up to half a million dollars for violating European privacy rules. But it's not only Facebook - both Google (with PittPatt) and Apple (with Polar Rose) are also putting the finishing touches on new facial recognition technologies that could make it easier than ever before to connect our online and offline identities. If the eyes are the window to the soul, then your face is the window to your personal identity.

And it's for that reason that privacy advocates in both Europe and the USA are up in arms about the new facial recognition technology. What seems harmless at first - the ability to identify your friends in photos - could be something much more dangerous in the hands of anyone other than your friends for one simple reason: your face is the key to linking your online and offline identities. It's one thing for law enforcement officials to have access to this technology, but what if your neighbor suddenly has the ability to snoop on you?

The researchers at Carnegie Mellon showed how a combination of simple technologies (a smart phone, a webcam and a Facebook account) was enough to identify people after only a three-second visual search. Once hackers can put together a face and the basics of a personal profile, like a birthday and hometown, they can start piecing together details like your Social Security Number and bank account information.

And the Carnegie Mellon technology used to show this? You guessed it: it's based on PittPatt (for Pittsburgh Pattern Recognition Technology), which was acquired by Google, meaning that you may soon be hearing the Pitter Patter of small facial recognition bots following you around any of Google's Web properties. The photo in your Google+ Profile, connected seamlessly to video clips of you from YouTube, effortlessly linked to photos of your family and friends in a Picasa album: all of these could be used to identify you and uncover your private identity. Thankfully, Google is not evil.

Forget being fingerprinted, it could be far worse to be Faceprinted. It's like the scene from The Terminator, where Arnold Schwarzenegger is able to identify his targets by employing a futuristic form of facial recognition technology. Well, the future is here.

Imagine a complete stranger taking a photo of you and immediately connecting that photo to every element of your personal identity and using that to stalk you (or your wife or your daughter). It happened to reality TV star Adam Savage - when he uploaded a photo to his Twitter page of his SUV parked outside his home, he didn't realize that it included geo-tagging meta-data. Within hours, people knew the exact location of his home. Or, imagine walking into a store, and the sales floor staff doing a quick visual search using a smart phone camera, finding out what your likes and interests are via Facebook or Google, and then tailoring their sales pitch accordingly. It's targeted advertising, taken to the extreme.
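Geotagged photos usually store location in EXIF GPS fields as degrees, minutes and seconds, plus a hemisphere letter (N/S for latitude, E/W for longitude). Converting those values to decimal degrees is all it takes to drop a pin on a map. The sketch below shows that conversion. The coordinates in the example are made up, and reading the raw tags from an image file is left out.

```python
def dms_to_decimal(degrees: float, minutes: float, seconds: float, ref: str) -> float:
    """Convert EXIF-style degrees/minutes/seconds plus a hemisphere ref to decimal degrees."""
    if ref not in ("N", "S", "E", "W"):
        raise ValueError(f"unexpected hemisphere reference: {ref!r}")
    value = degrees + minutes / 60 + seconds / 3600
    # South latitudes and west longitudes are negative in decimal notation.
    return -value if ref in ("S", "W") else value

# Hypothetical tag values, not taken from any real photo.
lat = dms_to_decimal(51, 30, 36, "N")
lon = dms_to_decimal(0, 7, 48, "W")
print(f"{lat:.5f}, {lon:.5f}")
```

Stripping these tags before posting, or turning off location tagging in the camera app, removes this particular leak.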

Which is not to say that everything about facial recognition technology is scary and creepy. Gizmodo ran a great piece explaining all the "advantages" of being recognized online. (Yet, two days later, Gizmodo also ran a piece explaining how military spies could track you down almost instantly with facial recognition technology, no matter where you are in the world.)

Which raises the important question: Is Privacy a Right or a Privilege? Now that we're all celebrities in the Internet age, it doesn't take much to extrapolate that soon we'll all have the equivalent of Internet paparazzi incessantly snapping photos of us and intruding into our daily lives. Cookies, spiders, bots and spyware will seem positively Old School by then. The people with money and privilege and clout will be the people who will be able to erect barriers around their personal lives, living behind the digital equivalent of a gated community. The rest of us? We'll live our lives in public.

Geeks Without Frontiers Pursues Wi-Fi for Everyone

Via OStatic

-----

Recently, you may have heard about new efforts to bring online access to regions where it has been economically nonviable before. This idea is not new, of course. The One Laptop Per Child (OLPC) initiative was squarely aimed at the goal until it ran into some significant hiccups. One of the latest moves on this front comes from Geeks Without Frontiers, which has a stated goal of positively impacting one billion lives with technology over the next 10 years. The organization, sponsored by Google and The Tides Foundation, is working on low cost, open source Wi-Fi solutions for "areas where legacy broadband models are currently considered to be uneconomical."

According to an announcement from Geeks Without Frontiers:

"GEEKS expects that this technology, built mainly by Cozybit, managed by GEEKS and I-Net Solutions, and sponsored by Google, Global Connect, Nortel, One Laptop Per Child, and the Manna Energy Foundation, will enable the development and rollout of large-scale mesh Wi-Fi networks for at least half of the traditional network cost. This is a major step in achieving the vision of affordable broadband for all."

It's notable that One Laptop Per Child is among the sponsors of this initiative. The organization has open sourced key parts of its software platform, and could have natural synergies with a global Wi-Fi effort.

“By driving down the cost of metropolitan and village scale Wi-Fi networks, millions more people will be able to reap the economic and social benefits of significantly lower cost Internet access,” said Michael Potter, one of the founders of the GEEKS initiative.

The Wi-Fi technology that GEEKS is pursuing is mesh networking. Specifically, it is open80211s (o11s), which implements AMPE (Authenticated Mesh Peering Exchange), enabling multiple authenticated nodes to encrypt traffic between themselves. Mesh networks are essentially widely distributed wireless networks built from many repeaters spread throughout a location.
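In a mesh, traffic hops from node to node until it reaches a gateway with a wired uplink, so each node needs a way to pick a route. The 802.11s standard that open80211s implements defines its own path-selection protocol (HWMP, which uses an airtime-based link cost). The sketch below is not HWMP. It is a plain Dijkstra shortest-path search over hypothetical link costs, shown only to illustrate the idea of choosing the cheapest multi-hop route to a gateway.

```python
import heapq
from typing import Dict, List, Tuple

# Link costs between neighbouring nodes (lower is better).
Graph = Dict[str, Dict[str, float]]

def cheapest_path(graph: Graph, source: str, target: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm: lowest total link cost from source to target."""
    queue: List[Tuple[float, str, List[str]]] = [(0.0, source, [source])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == target:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, link_cost in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + link_cost, neighbour, path + [neighbour]))
    return float("inf"), []  # target unreachable

# Hypothetical village mesh; only "gateway" has a wired connection.
mesh: Graph = {
    "house_a": {"house_b": 1.0, "school": 4.0},
    "house_b": {"house_a": 1.0, "school": 1.5, "clinic": 2.0},
    "school": {"house_a": 4.0, "house_b": 1.5, "gateway": 1.0},
    "clinic": {"house_b": 2.0, "gateway": 3.0},
    "gateway": {"school": 1.0, "clinic": 3.0},
}
print(cheapest_path(mesh, "house_a", "gateway"))
# (3.5, ['house_a', 'house_b', 'school', 'gateway'])
```

The three-hop route wins here because each of its links is cheap, even though a two-hop route exists. This is the kind of trade-off a mesh's path selection has to make.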

You can read much more about open80211s and the 802.11s standard it implements here. The GEEKS initiative has significant backers, and with sponsorship from OLPC, will probably benefit from good advice on the topic of bringing advanced technologies to disadvantaged regions of the world. The effort will be worth watching.

Tuesday, August 09. 2011

The Subjectivity of Natural Scrolling

Via Slash Gear

-----

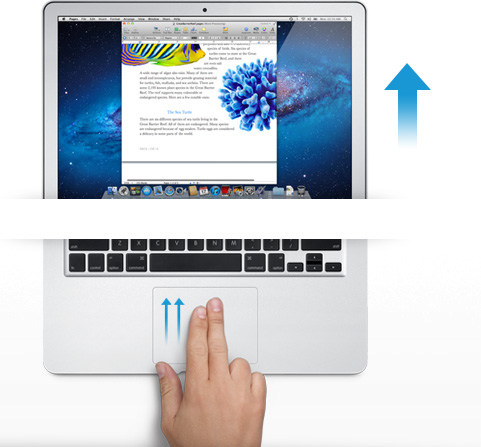

Apple released its new OS X Lion for Mac computers recently, and there was one controversial change that had the technorati chatting nonstop. In the new Lion OS, Apple changed the direction of scrolling. I use a MacBook Pro (among other machines; I’m OS agnostic). On my MacBook, I scroll by placing two fingers on the trackpad and moving them up or down. On the old system, moving my fingers down meant the object on the screen moved up. My fingers are controlling the scroll bars. Moving down means I am pulling the scroll bars down, revealing more of the page below what is visible. So, the object moves upwards. On the new system, moving my fingers down means the object on screen moves down. My fingers are now controlling the object. If I want the object to move up, and reveal more of what is beneath, I move my fingers up, and content rises on screen.
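Under the hood, the difference between the two systems comes down to a single sign. In this sketch, `offset` is how far down the page the view has scrolled, and `finger_dy` is the vertical finger movement on the trackpad, with positive meaning the fingers move down. Both names are hypothetical and not an actual OS X API.

```python
def new_offset(offset: float, finger_dy: float, natural: bool) -> float:
    """Return the new scroll offset (distance from the top of the page)."""
    if natural:
        # Fingers drive the content: fingers down drags the page down,
        # revealing what is above, so the offset shrinks.
        return max(0.0, offset - finger_dy)
    # Fingers drive the scroll bar: fingers down pulls the bar down,
    # revealing what is below, so the offset grows and content moves up.
    return max(0.0, offset + finger_dy)

print(new_offset(100.0, 20.0, natural=False))  # 120.0
print(new_offset(100.0, 20.0, natural=True))   # 80.0
```

The same finger motion produces opposite page motion; which one feels "right" depends on whether you think of yourself as holding the scroll bar or the page.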

The scroll bars are still there, but Apple has, by default, hidden them in many apps. You can make them reappear by hunting through the settings menu and turning them back on, but when they do come back, they are much thinner than they used to be, without the arrows at the top and bottom. They are also a bit buggy at the moment. If I try to click and drag the scrolling indicator, the page often jumps around, as if I had missed and clicked on the empty space above or below the scroll bar instead of directly on it. This doesn’t always happen, but it happens often enough that I have trained myself to avoid using the scroll bars this way.

So, the scroll bars, for now, are simply a visual indicator of where my view is located on a long or wide page. Clearly Apple does not think this information is terribly important, or else scroll bars would be turned on by default. As with the scroll bars, you can also hunt through the settings menu to turn off the new, so-called “natural scrolling.” This will bring you back to the method preferred on older Apple OSes, and also on Windows machines.

Some disclosure: my day job is working for Samsung. We make Windows computers that compete with Macs. I work in the phones division, but my work machine is a Samsung laptop running Windows. My MacBook is a holdover from my days as a tech journalist. When you become a tech journalist, you are issued a MacBook by force and stripped of whatever you were using before.

I am not criticizing or endorsing Apple’s new natural scrolling in this column. In fact, in my own usage, there are times when I like it, and times when I don’t. Those emotions are usually found in direct proportion to the amount of NyQuil I took the night before and how hot it was outside when I walked my dog. I have found no other correlation.

The new natural scrolling method will probably seem familiar to those of you not frozen in an iceberg since World War II. It is the same direction you use for scrolling on most touchscreen phones, and most tablets. Not all, of course. Some phones and tablets still use styli, and these phones often let you scroll by dragging scroll bars with the pointer. But if you have an Android or an iPhone or a Windows Phone, you’re familiar with the new method.

My real interest here is to examine how the user is placed in the conversation between your fingers and the object on screen. I have heard the argument that the new method tries, and perhaps fails, to emulate the touchscreen experience by manipulating objects as if they were physical. On touchscreen phones, this is certainly the case. When we touch something on screen, like an icon or a list, we expect it to react in a physical way. When I drag my finger to the right, I want the object beneath to move with my finger, just as a piece of paper would move with my finger when I drag it.

This argument postulates a problem with Apple’s natural scrolling because of the literal distance between your fingers and the objects on screen. Also, the angle has changed. The plane of your hands and the surface on which they rest are at an oblique angle of more than 90 degrees from the screen and the object at hand.

Think of a magic wand. When you wave a magic wand with the tip facing out before you, do you imagine the spell shooting forth parallel to the ground, or do you imagine the spell shooting directly upward? In our imagination, we do want a direct correlation between the position of our hands and the reaction on screen; this is true. However, is this what we were getting before? Not really.

The difference between classic scrolling and ‘natural’ scrolling seems to be the difference between manipulating a concept and manipulating an object. Scroll bars are not real, or at least they do not correspond to any real thing that we would experience in the physical world. When you read a tabloid, you do not scroll down to see the rest of the story. You move your eyes. If the paper will not fit comfortably in your hands, you fold it. But scrolling is not like folding. It is smoother. It is continuous. Folding is a way of breaking the object into two conceptual halves. Ask a print newspaper reporter (and I will refrain from old media mockery here) about the part of the story that falls “beneath the fold.” That part better not be as important as the top half, because it may never get read.

Natural scrolling correlates more strongly to moving an actual object. It is like reading a newspaper on a table. Some of the newspaper may extend over the edge of the table and bend downward, making it unreadable. When you want to read it, you move the paper upward. In the same way, when you want to read more of the NYTimes.com site, you move your fingers upward.

The argument should not be over whether one is more natural than the other. Let us not forget that we are using an electronic machine. This is not a natural object. The content onscreen is only real insofar as pixels light up and are arranged into a recognizable pattern. Those words are not real; they are the absence of light, in varying degrees if you have anti-aliasing cranked up, around recognizable patterns that our eyes and brain interpret as letters and words.

The argument should be over which is the more successful design for a laptop or desktop operating system. Is it better to create objects on screen that appropriate the form of their physical world counterparts? Should a page in Microsoft Word look like a piece of paper? Should an icon for a hard disk drive look like a hard disk? What percentage of people using a computer have actually seen a hard disk drive? What if your new ultraportable laptop uses a set of interconnected solid state memory chips instead? Does the drive icon still look like a drive?

Or is it better to create objects on screen that do not hew to the physical world? Certainly their form should suggest their function in order to be intuitive and useful, but they do not have to be photorealistic interpretations. They can suggest function through a more abstract image, or simply by their placement and arrangement.

In the former system, the computer interface becomes a part of the user’s world. The interface tries to fit in with symbols that are already familiar. I know what a printer looks like, so when I want to set up my new printer, I find the picture of the printer and click on it. My email icon is a stamp. My music player icon is a CD. Wait, where did my CD go? I can’t find my CD?! What happened to my music!?!? Oh, there it is. Now it’s just a circle with a musical note. I guess that makes sense, since I hardly use CDs any more.

In the latter system, the user becomes part of the interface. I have to learn the language of the interface design. This may sound automatically more difficult than the former method of photorealism, but that may not be true. After all, when I want to change the brightness of my display, will my instinct really be to search for a picture of a cog and gears? And how should we represent a Web browser, a feature that has no counterpart in real life? Are we wasting processing power and time trying to create objects that look three-dimensional on a two-dimensional screen?

I think the photorealistic approach, and Apple’s new natural scrolling, may be the more personal way to design an interface. Apple is clearly thinking of the intimate relationship between the user and the objects that we touch. It is literally a sensual relationship, in that we use a variety of our senses. We touch. We listen. We see.

But perhaps I do not need, nor do I want, to have this relationship with my work computer. I carry my phone with me everywhere. I keep my tablet very close to me when I am using it. With my laptop, I keep some distance. I am productive. We have a lot to get done.

DNA circuits used to make neural network, store memories

Via ars technica

By Kyle Niemeyer

-----

Even as some scientists and engineers develop improved versions of current computing technology, others are looking into drastically different approaches. DNA computing offers the potential of massively parallel calculations with low power consumption and at small sizes. Research in this area has been limited to relatively small systems, but a group from Caltech recently constructed DNA logic gates using over 130 different molecules and used the system to calculate the square roots of numbers. Now, the same group published a paper in Nature that shows an artificial neural network, consisting of four neurons, created using the same DNA circuits.

The artificial neural network approach taken here is based on the perceptron model, also known as a linear threshold gate. This models the neuron as having many inputs, each with its own weight (or significance). The neuron is fired (or the gate is turned on) when the sum of each input times its weight exceeds a set threshold. These gates can be used to construct compact Boolean logical circuits, and other circuits can be constructed to store memory.
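
A linear threshold gate is easy to express in ordinary software. The sketch below is plain Python, not anything chemical; the function names and the example weights and thresholds are illustrative assumptions, chosen to show how a single gate type yields different Boolean functions just by changing its threshold.

```python
from typing import Sequence


def linear_threshold_gate(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> int:
    """Fire (return 1) when the weighted sum of the inputs exceeds the threshold, else return 0."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


# Same weights, different thresholds: the threshold alone selects the Boolean function.
def and_gate(a: int, b: int) -> int:
    return linear_threshold_gate([a, b], [1, 1], threshold=1.5)


def or_gate(a: int, b: int) -> int:
    return linear_threshold_gate([a, b], [1, 1], threshold=0.5)


def majority3(a: int, b: int, c: int) -> int:
    # Fires when at least two of the three inputs are on.
    return linear_threshold_gate([a, b, c], [1, 1, 1], threshold=1.5)


print([and_gate(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 0, 0, 1]
print([or_gate(a, b) for a in (0, 1) for b in (0, 1)])   # [0, 1, 1, 1]
```

Because the gate fires only when the sum strictly exceeds the threshold, placing thresholds halfway between integer sums (0.5, 1.5) avoids any ambiguity at the boundary.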

As we described in the last article on this approach to DNA computing, the authors represent their implementation with an abstraction called "seesaw" gates. This allows them to design circuits where each element is composed of two base-paired DNA strands, and the interactions between circuit elements occur as new combinations of DNA strands pair up. The ability of strands to displace each other at a gate (based on things like concentration) creates the seesaw effect that gives the system its name.

In order to construct a linear threshold gate, three basic seesaw gates are needed to perform different operations. Multiplying gates combine a signal and a set weight in a seesaw reaction that uses up fuel molecules as it converts the input signal into output signal. Integrating gates combine multiple inputs into a single summed output, while thresholding gates (which also require fuel) send an output signal only if the input exceeds a designated threshold value. Results are read using reporter gates that fluoresce when given a certain input signal.
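
Abstracting away the chemistry, the chain of gates described above can be sketched as a small pipeline of functions. Every name and number here is a hypothetical stand-in: the real gates act on analog DNA strand concentrations, and this sketch ignores fuel consumption and reaction rates entirely.

```python
from typing import Sequence


def multiplying_gate(signal: float, weight: float) -> float:
    # Scales one input signal by a fixed weight (fuel consumption is not modeled).
    return signal * weight


def integrating_gate(signals: Sequence[float]) -> float:
    # Merges several weighted signals into a single summed signal.
    return sum(signals)


def thresholding_gate(signal: float, threshold: float) -> float:
    # Emits a full-strength output (1.0) only if the summed input exceeds the threshold.
    return 1.0 if signal > threshold else 0.0


def reporter_gate(signal: float, detection_level: float = 0.5) -> bool:
    # "Fluoresces" (returns True) when its input signal is high enough to detect.
    return signal > detection_level


def threshold_circuit(inputs: Sequence[float], weights: Sequence[float], threshold: float) -> bool:
    weighted = [multiplying_gate(x, w) for x, w in zip(inputs, weights)]
    total = integrating_gate(weighted)
    return reporter_gate(thresholding_gate(total, threshold))


print(threshold_circuit([1.0, 0.0, 1.0], [0.6, 0.6, 0.6], threshold=1.0))  # True
print(threshold_circuit([1.0, 0.0, 0.0], [0.6, 0.6, 0.6], threshold=1.0))  # False
```

Splitting the work into stages mirrors the article's description, where each kind of seesaw gate does one job; composed, they reproduce the perceptron's single weighted-sum-and-threshold rule.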

To test their designs with a simple configuration, the authors first constructed a single linear threshold circuit with three inputs and four outputs—it compared the value of a three-bit binary number to four numbers. The circuit output the correct answer in each case.
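
One way to compare a three-bit number against several values with threshold logic is to weight the bits by their binary place values (4, 2, 1), so the weighted sum equals the number itself, and then attach one threshold per output. This is a sketch under that assumption; the article does not say which four numbers the Caltech circuit compared against, so the thresholds below are hypothetical.

```python
from typing import List, Sequence

# Binary place values: the weighted sum of the three bits equals the number they encode.
PLACE_VALUES = (4, 2, 1)

# Hypothetical comparison values: the article does not say which four numbers were used.
THRESHOLDS = (0, 2, 4, 6)


def compare_three_bit(bits: Sequence[int], thresholds: Sequence[int] = THRESHOLDS) -> List[int]:
    """Return one output per threshold: 1 if the encoded number exceeds it, else 0."""
    if len(bits) != 3 or any(b not in (0, 1) for b in bits):
        raise ValueError("expected exactly three bits, each 0 or 1")
    value = sum(b * w for b, w in zip(bits, PLACE_VALUES))
    # Each output acts as its own linear threshold gate over the same weighted sum.
    return [1 if value > t else 0 for t in thresholds]


print(compare_three_bit([1, 0, 1]))  # 5 exceeds 0, 2 and 4 but not 6: [1, 1, 1, 0]
```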

For the primary demonstration on their setup, the authors had their linear threshold circuit play a computer game that tests memory. They used their approach to construct a four-neuron Hopfield network, where all the neurons are connected to the others and, after training (tuning the weights and thresholds), patterns can be stored or remembered. The memory game consists of three steps: 1) the human chooses a scientist from four options (in this case, Rosalind Franklin, Alan Turing, Claude Shannon, and Santiago Ramon y Cajal); 2) the human “tells” the memory network the answers to one or more of four yes/no (binary) questions used to identify the scientist (such as, “Did the scientist study neural networks?” or "Was the scientist British?"); and 3) after eight hours of thinking, the DNA memory guesses the answer and reports it through fluorescent signals.
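
To make the Hopfield idea concrete, here is a conventional software Hopfield network with four neurons, trained with the Hebbian rule and recalled by asynchronous updates. It is not the authors' DNA design: the stored patterns are hypothetical answers for placeholder scientists (not the real ones named above), and an unanswered question is represented as 0. Because each Hebbian weight p_i * p_j is unchanged when every sign is flipped, storing two patterns also stores their complements, giving four memories from two training patterns.

```python
from typing import List, Sequence

# States: +1 = "yes", -1 = "no", 0 = "unknown" (a question the human did not answer).
Pattern = List[int]


def train_hebbian(patterns: Sequence[Pattern]) -> List[List[int]]:
    """Hebbian rule: w_ij is the sum over stored patterns of p_i * p_j, with w_ii = 0."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights


def recall(weights: List[List[int]], cue: Pattern, max_sweeps: int = 10) -> Pattern:
    """Update neurons one at a time until no state changes (or max_sweeps is reached)."""
    state = list(cue)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(weights[i][j] * state[j] for j in range(n))
            if field > 0:
                new = 1
            elif field < 0:
                new = -1
            else:
                new = state[i]  # no net input: keep the current state
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:
            break
    return state


# Two hypothetical training patterns; their sign-flipped complements are stored too.
TRAINED = [[1, 1, -1, -1], [1, -1, 1, -1]]
MEMORIES = TRAINED + [[-x for x in p] for p in TRAINED]

W = train_hebbian(TRAINED)
print(recall(W, [1, 1, 0, 0]))  # [1, 1, -1, -1]
print(recall(W, [0, 0, 1, 1]))  # [-1, -1, 1, 1]
```

With these particular patterns the Hebbian weights only link neurons 0 and 3, and neurons 1 and 2, so one answer pins down just one other answer, while two well-chosen answers recall a full pattern.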

They played this game 27 times, out of 81 possible question/answer combinations (3⁴). You may be wondering why there are three options for a yes/no question: the state of each answer is actually stored using two bits, so that a neuron can be unsure about an answer (one the human hasn't provided, for example) by using a third state. Out of the 27 experimental cases, the neural network correctly guessed all but six, and these were all cases where two or more answers were not given.
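
The 81 comes from 3 × 3 × 3 × 3: each of the four questions can be answered yes, no, or left unanswered. The sketch below enumerates those combinations and shows one way two bits can carry three states, assuming a dual-rail style code in which one bit means "yes", the other means "no", and neither bit set means "unknown". The exact bit assignment is an assumption for illustration, not taken from the paper.

```python
from itertools import product
from typing import Dict, Sequence, Tuple

# Assumed two-bit code per answer: first bit = "yes" rail, second bit = "no" rail.
ENCODING: Dict[str, Tuple[int, int]] = {
    "yes": (1, 0),
    "no": (0, 1),
    "unknown": (0, 0),  # neither rail is on; (1, 1) is left unused as invalid
}


def encode(answers: Sequence[str]) -> Tuple[int, ...]:
    """Flatten one answer per question into a bit tuple, two bits per question."""
    bits = []
    for answer in answers:
        bits.extend(ENCODING[answer])
    return tuple(bits)


# Every way to answer (or skip) four questions: 3 ** 4 combinations.
all_inputs = list(product(ENCODING, repeat=4))
print(len(all_inputs))  # 81
print(encode(("yes", "unknown", "no", "unknown")))  # (1, 0, 0, 0, 0, 1, 0, 0)
```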

In the best cases, the neural network was able to correctly guess with only one answer and, in general, it was successful when two or more answers were given. Like the human brain, this network was able to recall memory using incomplete information (and, as with humans, that may have been a lucky guess). The network was also able to determine when inconsistent answers were given (i.e. answers that don’t match any of the scientists).
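
The consistency check described here can be illustrated, outside of any neural network, as a plain lookup: keep the candidates whose stored answers agree with every answer given, and report "inconsistent" if none remain. This is a hypothetical software stand-in for the behavior, not how the DNA network computes it, and the four answer patterns are placeholders.

```python
from typing import Dict, List, Sequence

# Hypothetical answer patterns (+1 = yes, -1 = no) for four placeholder scientists.
MEMORIES: Dict[str, List[int]] = {
    "A": [1, 1, -1, -1],
    "B": [1, -1, 1, -1],
    "C": [-1, -1, 1, 1],
    "D": [-1, 1, -1, 1],
}


def agrees(memory: Sequence[int], answers: Sequence[int]) -> bool:
    # 0 marks an unanswered question, which cannot rule a candidate out.
    return all(a == 0 or a == m for m, a in zip(memory, answers))


def classify(answers: Sequence[int]) -> str:
    candidates = [name for name, memory in MEMORIES.items() if agrees(memory, answers)]
    if not candidates:
        return "inconsistent"  # no stored scientist matches every given answer
    if len(candidates) == 1:
        return candidates[0]
    return "ambiguous: " + ", ".join(candidates)


print(classify([1, 1, 0, 0]))  # A
print(classify([1, 0, 0, 0]))  # ambiguous: A, B
print(classify([1, 0, 0, 1]))  # inconsistent
```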

These results are exciting: they amount to simulating the brain using biological computing. Unlike traditional electronics, DNA computing components can easily interact and cooperate with our bodies or other cells. Who doesn’t dream of being able to download information into their brain (or anywhere in their body, in this case)? Even the authors admit that it’s difficult to predict how this approach might scale up, but I would expect to see a larger demonstration from this group or another in the near future.
