Wednesday, March 18. 2015

First Ubuntu Phone Landing In Europe Shortly

Via TechCrunch

-----

A year after it revealed another attempt to muscle in on the smartphone market, Canonical’s first Ubuntu-based smartphone is due to go on sale in Europe in the “coming days”, it said today. The device will be sold for €169.90 (~$190) unlocked to any carrier network, although some regional European carriers will be offering SIM bundles at the point of purchase. The hardware is an existing mid-tier device, the Aquaris E4.5, made by Spain’s BQ — with the Ubuntu version of the device known as the ‘Aquaris E4.5 Ubuntu Edition’. So the only difference here is it will be pre-loaded with Ubuntu’s mobile software, rather than Google’s Android platform.

Canonical has been trying to get into the mobile space for a while now. Back in 2013, the open source software maker failed to crowdfund a high-end converged smartphone-cum-desktop-computer called the Ubuntu Edge: a smartphone-sized device that would have been powerful enough to transform from a pocket computer into a fully fledged desktop when plugged into a keyboard and monitor, running Ubuntu’s full-fat desktop OS. Canonical had sought to raise a hefty $32 million in crowdfunds to make that project fly. Hence its more modest, mid-tier smartphone debut now.

On the hardware side, Ubuntu’s first smartphone offers pretty bog standard mid-range specs, with a 4.5 inch screen, 1GB RAM, a quad-core A7 chip running at “up to 1.3Ghz”, 8GB of on-board storage, 8MP rear camera and 5MP front-facing lens, plus a dual-SIM slot. But it’s the mobile software that’s the novelty here (demoed in action in Canonical’s walkthrough video, embedded below).

Canonical has created a gesture-based smartphone interface called Scopes, which puts the homescreen focus on a series of themed cards that aggregate content and which the user swipes between to navigate around the functions of the phone, while app icons are tucked away to the side of the screen, or gathered together on a single Scope card. Examples include a contextual ‘Today’ card which contains info like weather and calendar, or a ‘Nearby’ card for location-specific local services, or a card for accessing ‘Music’ content on the device, or ‘News’ for accessing various articles in one place.

It’s certainly a different approach to the default grid of apps found on iOS and Android but has some overlap with other, alternative platforms such as Palm’s WebOS, or the rebooted BlackBerry OS, or Jolla’s Sailfish. The problem is, as with all such smaller OSes, it will be an uphill battle for Canonical to attract developers to build content for its platform to make it really live and breathe. (It’s got a few third parties offering content at launch — including Songkick, The Weather Channel and TimeOut.) And, crucially, a huge challenge to convince consumers to try something different which requires they learn new mobile tricks. Especially given that people can’t try before they buy — as the device will be sold online only.

Canonical said the Aquaris E4.5 Ubuntu Edition will be made available in a series of flash sales over the coming weeks, via BQ.com. Sales will be announced through Ubuntu and BQ’s social media channels, perhaps taking a leaf out of the retail strategy of Android smartphone maker Xiaomi, which uses online flash sales to hype device launches and shift inventory quickly. Building similar hype in a mature smartphone market like Europe, for mid-tier hardware, is going to be a Sisyphean task. But Canonical claims to be in it for the long, uphill haul.

“We are going for the mass market,” Cristian Parrino, its VP of Mobile, told Engadget. “But that’s a gradual process and a thoughtful process. That’s something we’re going to be doing intelligently over time — but we’ll get there.”

Monday, February 02. 2015

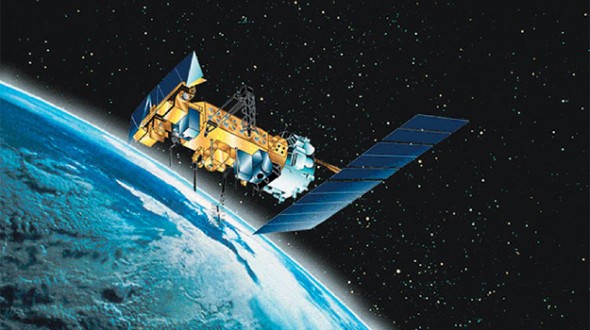

ConnectX wants to put server farms in space

Via Geek

-----

The next generation of cloud servers might be deployed where the clouds can be made of alcohol and cosmic dust: in space. That’s what ConnectX wants to do with their new data visualization platform.

Why space? It’s not as though there isn’t room to set up servers here on Earth, what with Germans willing to give up space in their utility rooms in exchange for a bit of ambient heat and malls now leasing empty storefronts to service providers. But there are certain advantages.

The desire to install servers where there’s abundant, free cooling makes plenty of sense. Down here on Earth, that’s what’s driven companies like Facebook to set up shop in Scandinavia near the edge of the Arctic Circle. Space gets a whole lot colder than the Arctic, so from that standpoint the ConnectX plan makes plenty of sense. There’s also virtually no humidity, which can wreak havoc on computers.

They also believe that the zero-g environment would do wonders for the lifespan of the hard drives in their servers, since it could reduce the resistance they encounter while spinning. That’s the same reason Western Digital started filling hard drives with helium.

But what about data transmission? How does ConnectX plan on moving the bits back and forth between their orbital servers and networks back on the ground? Though something similar to NASA’s Lunar Laser Communication Demonstration — which beamed data to the moon 4,800 times faster than any RF system ever managed — seems like a decent option, they’re leaning on RF.

Mind you, it’s a fairly complex setup. ConnectX says they’re polishing a system that “twists” signals to reap massive transmission gains. A similar system demonstrated last year managed to push data over radio waves at a staggering 32 Gbps, around 30 times faster than LTE.

So ConnectX seems to have that sorted. The only real question is the cost of deployment. Can the potential reduction in long-term maintenance costs really offset the massive expense of actually getting their servers into orbit? And what about upgrading capacity? It’s certainly not going to be nearly as fast, easy, or cheap as it is to do on Earth. That’s up to ConnectX to figure out, and they seem confident that they can make it work.

Thursday, December 11. 2014

Google X Nano Pill will seek cancer cells in your body

Via Slashgear

-----

Google's moonshot group Google X is working on a pill that, when swallowed, will seek out cancer cells in your body. It'll seek out all sorts of diseases, in fact, pushing the envelope when it comes to finding and destroying diseases at their earliest stages of development. This system would face "a much higher regulatory bar than conventional diagnostic tools," so says Chad A. Mirkin, director of the International Institute for Nanotechnology at Northwestern University.

Word comes from the Wall Street Journal, where Andrew Conrad, head of the Life Sciences team at the Google X research lab, spoke during the WSJD Live conference. This system is called "The Nano Particle Platform," and it aims to "functionalize Nano Particles, to make them do what we want."

According to Conrad, Google X is working on two separate devices, more than likely. The first is the pill which contains their smart nanoparticles. The second is a wearable device that attracts the particles so that they might be counted.

"Our dream," said Conrad, is that "every test you ever go to the doctor for will be done through this system."

Sound like a good idea to you?

Friday, November 07. 2014

Predicting the next decade of tech: From the cloud to disappearing computers and the rise of robots

Via ZDNet

-----

For an industry run according to logic and rationality, at least outwardly, the tech world seems to have a surprising weakness for hype and the 'next big thing'.

Perhaps that's because, unlike — say — in sales or HR, where innovation is defined by new management strategies, tech investment is very product driven. Buying a new piece of hardware or software often carries the potential for a 'disruptive' breakthrough in productivity or some other essential business metric. Tech suppliers therefore have a vested interest in promoting their products as vigorously as possible: the level of spending on marketing and customer acquisition by some fast-growing tech companies would turn many consumer brands green with envy.

As a result, CIOs are tempted by an ever-changing array of tech buzzwords (cloud, wearables and the Internet of Things [IoT] are prominent in the recent crop) through which they must sift in order to find the concepts that are a good fit for their organisations, and that match their budgets, timescales and appetite for risk. Short-term decisions are relatively straightforward, but the further you look ahead, the harder it becomes to predict the winners.

Tech innovation in a one-to-three year timeframe

Despite all the temptations, the technologies that CIOs are looking at deploying in the near future are relatively uncontroversial — pretty safe bets, in fact. According to TechRepublic's own research, top CIO investment priorities over the next three years include security, mobile, big data and cloud. Fashionable technologies like 3D printing and wearables find themselves at the bottom of the list.

A separate survey from Deloitte reported similar findings: many of the technologies that CIOs are piloting and planning to implement in the near future are ones that have been around for quite some time — business analytics, mobile apps, social media and big data tools, for example. Augmented reality and gamification were seen as low-priority technologies.

This reflects the priorities of most CIOs, who tend to focus on reliability over disruption: in TechRepublic's research, 'protecting/securing networks and data' trumps 'changing business requirements' for understandably risk-wary tech chiefs.

Another major factor here is money: few CIOs have a big budget for bets on blue-skies innovation projects, even if they wanted to. (And many no doubt remember the excesses of the dotcom years, and are keen to avoid making that mistake again.)

According to the research by Deloitte, less than 10 percent of the tech budget is ring-fenced for technology innovation (and CIOs that do spend more on innovation tend to be in smaller, less conservative companies). There's another complication in that CIOs increasingly don't control the budget dedicated to innovation, as this is handed on to other business units (such as marketing or digital) that are considered to have a more entrepreneurial outlook.

CIOs tend to blame their boss's conservative attitude to risk as the biggest constraint in making riskier IT investments for innovation and growth. Although CIOs claim to be willing to take risks with IT investments, this attitude does not appear to match up with their current project portfolios.

Another part of the problem is that it's very hard to measure the return on some of these technologies. Managers have been used to measuring the benefits of new technologies using a standard return-on-investment measure that tracks some very obvious costs — headcount or spending on new hardware, for example. But defining the return on a social media project or an IoT trial is much more slippery.

Tech investment: A medium-term view

If CIO investment plans remain conservative and hobbled by a limited budget in the short term, you have to look a little further out to see where the next big thing in tech might come from.

One place to look is in what's probably the best-known set of predictions about the future of IT: Gartner's Hype Cycle for Emerging Technologies, which tries to assess the potential of new technologies while taking into account the expectations surrounding them.

The chart grades technologies not only by how far they are from mainstream adoption, but also on the level of hype surrounding them, and as such it demonstrates what the analysts argue is a fundamental truth: that we can't help getting excited about new technology, but that we also rapidly get turned off when we realize how hard it can be to deploy successfully. The exotically-named Peak of Inflated Expectations is commonly followed by the Trough of Disillusionment, before technologies finally make it up the Slope of Enlightenment to the Plateau of Productivity.

"It was a pattern we were seeing with pretty much all technologies: that up-and-down of expectations, disillusionment and eventual productivity," says Jackie Fenn, vice-president and Gartner fellow, who has been working on the project since the first hype cycle was published 20 years ago. The pattern, she says, is an example of the human reaction to any novelty.

"It's not really about the technologies themselves, it's about how we respond to anything new. You see it with management trends, you see it with projects. I've had people tell me it applies to their personal lives — that pattern of the initial wave of enthusiasm, then the realisation that this is much harder than we thought, and then eventually coming to terms with what it takes to make something work."

According to Gartner's 2014 list, the technologies expected to reach the Plateau of Productivity (where they become widely adopted) within the next two years include speech recognition and in-memory analytics.

Technologies that might take two to five years until mainstream adoption include 3D scanners, NFC and cloud computing. Cloud is currently entering Gartner's trough of disillusionment, where early enthusiasm is overtaken by the grim reality of making this stuff work: "there are many signs of fatigue, rampant cloudwashing and disillusionment (for example, highly visible failures)," Gartner notes.

When you look at a 5-10-year horizon, the predictions include virtual reality, cryptocurrencies and wearable user interfaces.

Working out when the technologies will make the grade, and thus how CIOs should time their investments, seems to be the biggest challenge. Several of the technologies on Gartner's first-ever hype curve back in 1995 — including speech recognition and virtual reality — are still on the 2014 hype curve without making it to primetime yet.

These sorts of user interface technologies have taken a long time to mature, says Fenn. For example, voice recognition started to appear in very structured call centre applications, while the latest incarnation is something like Siri — "but it's still not a completely mainstream interface," she says.

Nearly all technologies go through the same rollercoaster ride, because our response to new concepts remains the same, says Fenn. "It's an innate psychological reaction — we get excited when there's something new. Partly it's the wiring of our brains that attracts us — we want to keep going around the first part of the cycle where new technologies are interesting and engaging; the second half tends to be the hard work, so it's easier to get distracted."

But even if they can't escape the hype cycle, CIOs can use concepts like this to manage their own impulses: if a company's investment strategy means it's consistently adopting new technologies when they are most hyped (remember a few years back when every CEO had to blog?) then it may be time to reassess, even if the CIO peer-pressure makes it difficult.

Says Fenn: "There is that pressure, that if you're not doing it you just don't get it — and it's a very real pressure. Look at where [new technology] adds value and if it really doesn't, then sometimes it's fine to be a later adopter and let others learn the hard lessons if it's something that's really not critical to you."

The trick, she says, is not to force-fit innovation, but to continually experiment and not always expect to be right.

Looking further out, the technologies labelled 'more than 10 years' to mainstream adoption on Gartner's hype cycle are the rather sci-fi-inflected ones: holographic displays, quantum computing and human augmentation. As such, it's a surprisingly entertaining romp through the relatively near future of technology, from the rather mundane to the completely exotic. "Employers will need to weigh the value of human augmentation against the growing capabilities of robot workers, particularly as robots may involve fewer ethical and legal minefields than augmentation," notes Gartner.

Where the futurists roam

Beyond the 10-year horizon, you're very much into the realm where the tech futurists roam.

Steve Brown, a futurist at chip-maker Intel, argues that three mega-trends will shape the future of computing over the next decade. "They are really simple: it's small, big and natural," he says.

'Small' is the consequence of Moore's Law, which will continue the trend towards small, low-power devices, making the rise of wearables and the IoT more likely. 'Big' refers to the ongoing growth in raw computing power, while 'natural' is the process by which everyday objects are imbued with some level of computing power.

"Computing was a destination: you had to go somewhere to compute — a room that had a giant whirring computer in it that you worshipped, and you were lucky to get in there. Then you had the era where you could carry computing with you," says Brown.

"The next era is where the computing just blends into the world around us, and once you can do that, and instrument the world, you can essentially make everything smart — you can turn anything into a computer. Once you do that, profoundly interesting things happen," argues Brown.

With this level of computing power comes a new set of problems for executives, says Brown. The challenge for CIOs and enterprise architects is that once they can make everything smart, what do they want to use it for? "In the future you have all these big philosophical questions that you have to answer before you make a deployment," he says.

Brown envisages a world of ubiquitous processing power, where robots are able to see and understand the world around them.

"Autonomous machines are going to change everything," he claims. "The challenge for enterprise is how humans will work alongside machines — whether that's a physical machine or an algorithm — and what's the best way to take a task and split it into the innately human piece and the bit that can be optimized in some way by being automated."

The pace of technological development is accelerating: where we used to have a decade to make these decisions, these things are going to hit us faster and faster, argues Brown. All of which means we need to make better decisions about how to use new technology, and will face harder questions about privacy and security.

"If we use this technology, will it make us better humans? Which means we all have to decide ahead of time what do we consider to be better humans? At the enterprise level, what do we stand for? How do we want to do business?"

Not just about the hardware and software

For many organizations there's a big stumbling block in the way of this bright future — their own staff and their ways of working. Figuring out what to invest in may be a lot easier than persuading staff, and whole organisations, to change how they operate.

"What we really need to figure out is the relationship between humans and technology, because right now humans get technology massively wrong," says Dave Coplin, chief envisioning officer for Microsoft (a firmly tongue-in-cheek job title, he assures me).

Coplin argues that most of us tend to use new technology to do things the way we've always been doing them for years, when the point of new technology is to enable us to do things fundamentally differently. The concept of productivity is a classic example: "We've got to pick apart what productivity means. Unfortunately most people think process is productivity — the better I can do the processes, the more productive I am. That leads us to focus on the wrong point, because actually productivity is about leading to better outcomes." Three-quarters of workers think a productive day in the office is clearing their inbox, he notes.

Developing a better relationship with technology is necessary because of the huge changes ahead, argues Coplin: "What happens when technology starts to disappear into the background; what happens when every surface has the capability to have contextual information displayed on it based on what's happening around it, and who is looking at it? This is the kind of world we're heading into — a world of predictive data that will throw up all sorts of ethical issues. If we don't get the humans ready for that change we'll never be able to make the most of it."

Nicola Millard, a futurologist at telecoms giant BT, echoes these ideas, arguing that CIOs have to consider not just changes to the technology ahead of them, but also changes to the workers: a longer working life requires workplace technologies that appeal to new recruits as well as staff into their 70s and older. It also means rethinking the workplace: "The open-plan office is a distraction machine," she says — but can you be innovative in a grey cubicle? Workers using tablets might prefer 'perch points' to desks, those using gesture control may need more space. Even the role of the manager itself may change — becoming less about traditional command and control, and more about being a 'party host', finding the right mix of skills to get the job done.

In the longer term, not only will the technology change profoundly, but the workers and managers themselves will also need to upgrade their thinking.

Wednesday, November 05. 2014

Researchers bridge air gap by turning monitors into FM radios

Via ars technica

-----

A two-stage attack could allow spies to sneak secrets out of the most sensitive buildings, even when the targeted computer system is not connected to any network, researchers from Ben-Gurion University of the Negev in Israel stated in an academic paper describing the refinement of an existing attack.

The technique, called AirHopper, assumes that an attacker has already compromised the targeted system and desires to occasionally sneak out sensitive or classified data. Known as exfiltration, such occasional communication is difficult to maintain, because government technologists frequently separate the most sensitive systems from the public Internet for security. Known as an air gap, such a defensive measure makes it much more difficult for attackers to compromise systems or communicate with infected systems.

Yet, by using a program to create a radio signal using a computer’s video card—a technique known for more than a decade—and a smartphone capable of receiving FM signals, an attacker could collect data from air-gapped devices, a group of four researchers wrote in a paper presented last week at the IEEE 9th International Conference on Malicious and Unwanted Software (MALCON).

“Such technique can be used potentially by people and organizations with malicious intentions and we want to start a discussion on how to mitigate this newly presented risk,” Dudu Mimran, chief technology officer for the cyber security labs at Ben-Gurion University, said in a statement.

For the most part, the attack is a refinement of existing techniques. Intelligence agencies have long known—since at least 1985—that electromagnetic signals could be intercepted from computer monitors to reconstitute the information being displayed. Open-source projects have turned monitors into radio-frequency transmitters. And, from the information leaked by former contractor Edward Snowden, the National Security Agency appears to use radio-frequency devices implanted in various computer-system components to transmit information and exfiltrate data.

AirHopper uses off-the-shelf components, however, to achieve the same result. By using a smartphone with an FM receiver, the exfiltration technique can grab data from nearby systems and send it to a waiting attacker once the smartphone is again connected to a public network.

“This is the first time that a mobile phone is considered in an attack model as the intended receiver of maliciously crafted radio signals emitted from the screen of the isolated computer,” the group said in its statement on the research.

The technique works at a distance of 1 to 7 meters, but can only send data at very slow rates—less than 60 bytes per second, according to the researchers.
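To put that rate in perspective, here is a rough back-of-the-envelope sketch. It assumes the 60 bytes per second ceiling quoted above is sustained with no protocol overhead or retransmissions, and the payload sizes are hypothetical examples chosen for illustration, not figures from the paper.

```python
# Upper bound on throughput quoted by the researchers, in bytes per second.
MAX_RATE_BYTES_PER_SEC = 60


def exfiltration_seconds(payload_bytes: int, rate: float = MAX_RATE_BYTES_PER_SEC) -> float:
    """Return the minimum time, in seconds, to send payload_bytes at the given rate."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return payload_bytes / rate


# Hypothetical payloads, for illustration only.
examples = {
    "256-byte key": 256,
    "4 KiB text file": 4 * 1024,
    "1 MiB document": 1024 * 1024,
}

for label, size in examples.items():
    secs = exfiltration_seconds(size)
    print(f"{label}: at least {secs:.1f} s ({secs / 3600:.2f} h)")
```

Under these assumptions a short key or password leaves in a few seconds, while a single megabyte would take nearly five hours, which is why the technique suits small secrets rather than bulk data.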

Wednesday, October 29. 2014

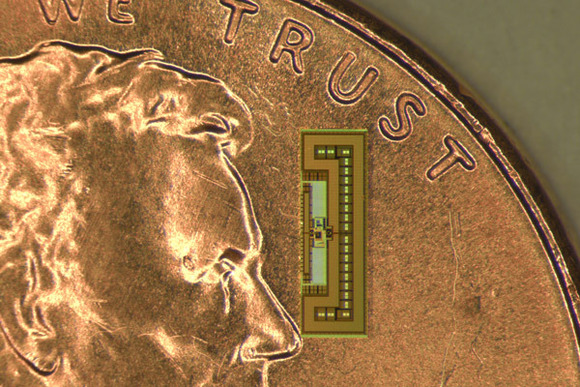

Stanford researchers develop ant-sized radio

Via PCWorld

-----

Engineers at Stanford University have developed a tiny radio that’s about as big as an ant and that’s cheap and small enough that it could help realize the “Internet of things”—the world of everyday objects that send and receive data via the Internet.

The radio is built on a piece of silicon that measures just a few millimeters on each side. Several tens of them can fit on the top of a U.S. penny and the radio itself is expected to cost only a few pennies to manufacture in mass quantities.

Part of the secret to the radio’s size is its lack of a battery. Its power requirements are sufficiently frugal that it can harvest the energy it needs from nearby radio fields, such as those from a reader device when it’s brought nearby.

RFID tags and contactless smartcards can get their power the same way, drawing energy from a radio source, but Stanford’s radio has more processing power than those simpler devices, a university representative said. That means it could query a sensor for its data, for instance, and transmit it when required.

The device operates in the 24GHz and 60GHz bands, suitable for communications over a few tens of centimeters.

Engineers envisage a day when trillions of objects are connected via tiny radios to the Internet. Data from the devices is expected to help realize smarter and more energy-efficient homes, although quite how it will all work is yet to be figured out. Radios like the one from Stanford should help greatly expand the number of devices that can collect and share data.

The radio was demonstrated by Amin Arbabian, an assistant professor of electrical engineering at Stanford and one of the developers of the device, at the recent VLSI Technology and Circuits Symposium in Hawaii.

Tuesday, September 09. 2014

Mimosa Networks Launches Its First Gigabit Wireless Products

Via TechCrunch

-----

Mimosa Networks is finally ready to help make gigabit wireless technology a reality. The company, which recently came out of stealth, is launching a series of products that it hopes to sell to a new generation of wireless ISPs.

Its wireless Internet products include its new B5 Backhaul radio hardware and its Mimosa Cloud Services planning and analytics offering. By using the two in combination, new ISPs can build high-capacity wireless networks at a fraction of the cost it would take to lay a fiber network.

The B5 backhaul radio is a piece of hardware that uses multiple-input and multiple-output (MIMO) technology to provide up to 16 streams and 4 Gbps of output when multiple radios are using the same channel.

With a single B5 radio, customers can provide a gigabit of throughput for up to eight or nine miles, according to co-founder and chief product officer Jaime Fink. The longer the distance, the less bandwidth is available, of course. But Fink said the company is running one link of about 60 miles that still gets several hundred megabits of throughput along the California coast.
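The quoted figures can be related with some simple arithmetic. The sketch below assumes, purely for illustration, that the 4 Gbps aggregate is shared evenly across the 16 streams; real MIMO links do not divide capacity this neatly, and the function name is hypothetical.

```python
def per_stream_mbps(total_gbps: float, streams: int) -> float:
    """Evenly divide an aggregate rate (in Gbps) across streams, returning Mbps per stream."""
    if streams <= 0:
        raise ValueError("streams must be positive")
    return total_gbps * 1000 / streams


# Figures quoted in the article: up to 16 streams and 4 Gbps in aggregate.
stream_rate = per_stream_mbps(4, 16)

# Number of such streams needed to reach the single-radio gigabit figure.
streams_for_gigabit = 1000 / stream_rate

print(f"Per-stream rate: {stream_rate:.0f} Mbps")
print(f"Streams needed for 1 Gbps: {streams_for_gigabit:.0f}")
```

Under the even-split assumption, each stream carries about 250 Mbps, so the gigabit quoted for a single radio would correspond to roughly four streams. That per-radio stream count is an inference from the numbers above, not a specification stated in the article.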

Not only does the product offer high data speeds on 5 GHz wireless spectrum, but it also makes that spectrum more efficient. It uses spectrum analysis and load balancing to optimize bandwidth, frequency, and power use based on historical and real-time data to adapt to wireless interference and other issues.

In addition to the hardware, Mimosa’s cloud services will help customers plan and deploy networks with analytics tools to determine how powerfully and efficiently their existing equipment is running. That will enable new ISPs to more effectively determine where to place new hardware to link up with other base stations.

The product also is designed to support networks as they grow, and it makes sure that ISPs can spot problems as they happen. The Cloud Services product is available now, but the backhaul radio will be available for about $900 later this fall.

Mimosa is launching these first products after raising $38 million in funding from New Enterprise Associates and Oak Investment Partners. That includes a $20 million Series C round led by return investor NEA that was recently closed.

The company was founded by Brian Hinman, who had previously co-founded PictureTel, Polycom, and 2Wire, along with Fink, who previously served as CTO of 2Wire and SVP of technology for Pace after it acquired the home networking equipment company. Now they’re hoping to make wireless gigabit speeds available for new ISPs.

Monday, September 08. 2014

Touch+ transforms any surface into a multitouch gesture controller for your PC or Mac

Via PCWorld

-----

After Leap Motion's somewhat disappointing debut, you'd be forgiven for wanting to wave off the idea of third-party gesture control peripherals. But wait! Unlike Leap, Reactiv isn't trying to revolutionize human-computer interactions with its Touch+ controller: there's no wizard-like finger waggling or Minority Report-style hand waving here. Instead, the Touch+'s dual cameras turn any surface into a multi-touch input device.

Touch+ was born out of Haptix, a Kickstarter project that raised more than $180,000 from backers. Over the past year, Reactiv refined the Haptix vision to eventually become Touch+.

While Touch+ certainly won't be for everyone, Reactiv is positioning the multitouch PC controller as more than a mere tool for games and art projects. The video above shows the device being used in an office meeting, acting as a cursor control for a businessman's laptop before being repositioned on the fly to point at a projected display, instantly allowing the man to reach up with his hands to circle objects on the image.

What's more, PCWorld sister site CITEWorld managed to snag a live demo with Touch+, and the founders focused on the potential productivity uses of the device: Enabling mouse-free control of Excel and PowerPoint, naturally manipulating pictures in PhotoShop, creating designs in CAD, the aforementioned presentation capabilities, and so forth.

The Touch+ motion controller connected to the top of a notebook.

The Touch+ works with Windows PCs or Macs, connecting via USB 2.0 or 3.0. If you choose to point it at your keyboard, the device will temporarily suspend its multitouch capabilities while you type, then resume when your fingers stop bobbing up and down.
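The suspend-while-typing behavior described above resembles a common idle-timeout pattern. The sketch below is a hypothetical illustration of that pattern, not Reactiv's implementation: the `TypingGate` class, its method names, and the 0.5-second timeout are all assumptions, and the real device presumably detects typing from its cameras rather than from explicit timestamps.

```python
from typing import Optional


class TypingGate:
    """Disable touch input while typing activity is recent (hypothetical sketch)."""

    def __init__(self, idle_timeout: float = 0.5) -> None:
        # Seconds of typing inactivity required before touch resumes (assumed value).
        self.idle_timeout = idle_timeout
        self._last_typing: Optional[float] = None

    def note_typing(self, now: float) -> None:
        """Record that typing activity was observed at time `now` (in seconds)."""
        self._last_typing = now

    def touch_enabled(self, now: float) -> bool:
        """Return True if touch input should be processed at time `now`."""
        if self._last_typing is None:
            return True
        return (now - self._last_typing) >= self.idle_timeout


gate = TypingGate(idle_timeout=0.5)
gate.note_typing(now=1.0)
print(gate.touch_enabled(now=1.2))  # still inside the idle window
print(gate.touch_enabled(now=1.5))  # idle window has elapsed
```

Passing timestamps in explicitly, rather than reading a clock inside the class, keeps the sketch deterministic and easy to test.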

Sound interesting? An alpha version of Touch+ is available now on the Reactiv website for $75. Until we get our own hands on the device, however, we won't know for sure how the device stacks up to competitors like the Leap Motion.

Friday, September 05. 2014

The Internet of Things is here and there -- but not everywhere yet

Via PCWorld

-----

The Internet of Things is still too hard. Even some of its biggest backers say so.

For all the long-term optimism at the M2M Evolution conference this week in Las Vegas, many vendors and analysts are starkly realistic about how far the vaunted set of technologies for connected objects still has to go. IoT is already saving money for some enterprises and boosting revenue for others, but it hasn’t hit the mainstream yet. That’s partly because it’s too complicated to deploy, some say.

For now, implementations, market growth and standards are mostly concentrated in specific sectors, according to several participants at the conference who would love to see IoT span the world.

Cisco Systems has estimated IoT will generate $14.4 trillion in economic value between last year and 2022. But Kevin Shatzkamer, a distinguished systems architect at Cisco, called IoT a misnomer, for now.

“I think we’re pretty far from envisioning this as an Internet,” Shatzkamer said. “Today, what we have is lots of sets of intranets.” Within enterprises, it’s mostly individual business units deploying IoT, in a pattern that echoes the adoption of cloud computing, he said.

In the past, most of the networked machines in factories, energy grids and other settings have been linked using custom-built, often local networks based on proprietary technologies. IoT links those connected machines to the Internet and lets organizations combine those data streams with others. It’s also expected to foster an industry that’s more like the Internet, with horizontal layers of technology and multivendor ecosystems of products.

What’s holding back the Internet of Things

The good news is that cities, utilities, and companies are getting more familiar with IoT and looking to use it. The less good news is that they’re talking about limited IoT rollouts for specific purposes.

“You can’t sell a platform, because a platform doesn’t solve a problem. A vertical solution solves a problem,” Shatzkamer said. “We’re stuck at this impasse of working toward the horizontal while building the vertical.”

“We’re no longer able to just go in and sort of bluff our way through a technology discussion of what’s possible,” said Rick Lisa, Intel’s group sales director for Global M2M. “They want to know what you can do for me today that solves a problem.”

One of the most cited examples of IoT’s potential is the so-called connected city, where myriad sensors and cameras will track the movement of people and resources and generate data to make everything run more efficiently and openly. But now, the key is to get one municipal project up and running to prove it can be done, Lisa said.

ThroughTek, based in China, used a connected fan to demonstrate an Internet of Things device management system at the M2M Evolution conference in Las Vegas this week.

The conference drew stories of many successful projects: A system for tracking construction gear has caught numerous workers on camera walking off with equipment and led to prosecutions. Sensors in taxis detect unsafe driving maneuvers and alert the driver with a tone and a seat vibration, then report it to the taxi company. Major League Baseball is collecting gigabytes of data about every moment in a game, providing more information for fans and teams.

But for the mass market of small and medium-size enterprises that don’t have the resources to do a lot of custom development, even targeted IoT rollouts are too daunting, said analyst James Brehm, founder of James Brehm & Associates.

There are software platforms that pave over some of the complexity of making various devices and applications talk to each other, such as the Omega DevCloud, which RacoWireless introduced on Tuesday. The DevCloud lets developers write applications in the language they know and make those apps work on almost any type of device in the field, RacoWireless said. Thingworx, Xively and Gemalto also offer software platforms that do some of the work for users. But the various platforms on offer from IoT specialist companies are still too fragmented for most customers, Brehm said. There are too many types of platforms—for device activation, device management, application development, and more. “The solutions are too complex.”

He thinks that’s holding back the industry’s growth. Though the past few years have seen rapid adoption in certain industries in certain countries, sometimes promoted by governments—energy in the U.K., transportation in Brazil, security cameras in China—the IoT industry as a whole is only growing by about 35 percent per year, Brehm estimates. That’s a healthy pace, but not the steep “hockey stick” growth that has made other Internet-driven technologies ubiquitous, he said.

What lies ahead

Brehm thinks IoT is in a period where customers are waiting for more complete toolkits to implement it—essentially off-the-shelf products—and the industry hasn’t consolidated enough to deliver them. More companies have to merge, and it’s not clear when that will happen, he said.

“I thought we’d be out of it by now,” Brehm said. What’s hard about consolidation is partly what’s hard about adoption, in that IoT is a complex set of technologies, he said.

And don’t count on industry standards to simplify everything. IoT’s scope is so broad that there’s no way one standard could define any part of it, analysts said. The industry is evolving too quickly for traditional standards processes, which are often mired in industry politics, to keep up, according to Andy Castonguay, an analyst at IoT research firm Machina.

Instead, individual industries will set their own standards while software platforms such as Omega DevCloud help to solve the broader fragmentation, Castonguay believes. Even the Industrial Internet Consortium, formed earlier this year to bring some coherence to IoT for conservative industries such as energy and aviation, plans to work with existing standards from specific industries rather than write its own.

Ryan Martin, an analyst at 451 Research, compared IoT standards to human languages.

“I’d be hard pressed to say we are going to have one universal language that everyone in the world can speak,” and even if there were one, most people would also speak a more local language, Martin said.

Friday, August 08. 2014

IBM Builds a Chip That Works Like Your Brain

Via re/code

-----

Can a silicon chip act like a human brain? Researchers at IBM say they’ve built one that mimics the brain better than any that has come before it.

In a paper published in the journal Science today, IBM said it used conventional silicon manufacturing techniques to create what it calls a neurosynaptic processor that could rival a traditional supercomputer by handling highly complex computations while consuming no more power than that supplied by a typical hearing aid battery.

The chip is also one of the biggest ever built, boasting some 5.4 billion transistors, which is about a billion more than the number of transistors on an Intel Xeon chip.

To do this, researchers designed the chip with a mesh network of 4,096 neurosynaptic cores. Each core contains elements that handle computing, memory and communicating with other parts of the chip. Each core operates in parallel with the others.

Multiple chips can be connected together seamlessly, IBM says, and they could be used to create a neurosynaptic supercomputer. The company even went so far as to build one using 16 of the chips.

The new design could shake up the conventional approach to computing, which has been more or less unchanged since the 1940s and is known as the Von Neumann architecture. In English, a Von Neumann computer (you’re using one right now) stores both the instructions and the data for a program in the same memory.

This chip, which has been dubbed TrueNorth, relies on its network of neurons to detect and recognize patterns in much the same way the human brain does. If you’ve read your Ray Kurzweil, this is one way to understand how the brain works — recognizing patterns. Put simply, once your brain knows the patterns associated with different parts of letters, it can string them together in order to recognize words and sentences. If Kurzweil is correct, you’re doing this right now, using some 300 million pattern-recognizing circuits in your brain’s neocortex.

The chip would seem to represent a breakthrough in one of the long-term problems in computing: Computers are really good at doing math and reading words, but discerning and understanding meaning and context, or recognizing and classifying objects — things that are easy for humans — have been difficult for traditional computers. One way IBM tested the chip was to see if it could detect people, cars, trucks and buses in video footage and correctly recognize them. It worked.

In terms of complexity, the TrueNorth chip has a million neurons, which is about the same number as in the brain of a common honeybee. A typical human brain averages 100 billion. But given time, the technology could be used to build computers that can not only see and hear, but understand what is going on around them.
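To put those figures side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the rounded numbers quoted in this article (4,096 cores, about a million neurons per chip, about 100 billion neurons in a human brain); the function names are made up for illustration, and counting neurons says nothing about how the chips would actually need to be wired together.

```python
# Rounded figures as quoted in the article, not exact values from the paper.
CORES_PER_CHIP = 4_096
NEURONS_PER_CHIP = 1_000_000
HUMAN_BRAIN_NEURONS = 100_000_000_000


def neurons_per_core(chip_neurons=NEURONS_PER_CHIP, cores=CORES_PER_CHIP):
    """Average number of neurons each core would hold (hypothetical helper)."""
    return chip_neurons / cores


def chips_to_match_brain(brain_neurons=HUMAN_BRAIN_NEURONS,
                         chip_neurons=NEURONS_PER_CHIP):
    """Chips needed to reach the brain's neuron count, rounded up."""
    return -(-brain_neurons // chip_neurons)  # ceiling division on integers


if __name__ == "__main__":
    print(f"Neurons per core (approx.): {neurons_per_core():.0f}")
    print(f"Chips to match a human brain's neuron count: {chips_to_match_brain():,}")
```

On these rounded numbers, each core holds a couple of hundred neurons, and matching the neuron count of a human brain would take on the order of a hundred thousand chips.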

Currently, the chip is capable of 46 billion synaptic operations per second per watt, or SOPS. That’s a tricky apples-to-oranges comparison to a traditional supercomputer, where performance is measured in the number of floating point operations per second, or FLOPS. But the most energy-efficient supercomputer now running tops out at 4.5 billion FLOPS per watt.

Down the road, the researchers say in their paper, they foresee TrueNorth-like chips being combined with traditional systems, each solving problems it is best suited to handle. But it also means that systems that in some ways will rival the capabilities of current supercomputers will fit into a machine the size of your smartphone, while consuming even less energy.

The project was funded with money from DARPA, the Department of Defense’s research organization. IBM collaborated with researchers at Cornell Tech and iniLabs.
