Tuesday, April 17. 2012

HTML5: A blessing or a curse?

Via develop

-----

Develop looks at the platform with ambitions to challenge Adobe's ubiquitous Flash web player

Initially heralded as the future of browser gaming and the next step beyond the monopolised world of Flash, HTML5 has since faced criticism for being tough to code with and possessing a string of broken features.

The coding platform, the fifth iteration of the HTML standard, was supposed to be a one-stop shop for developers looking to create and distribute their games to a multitude of platforms and browsers, but things haven’t been plain sailing.

Incorporating not just the new HTML mark-up language but also other features and APIs such as CSS3, SVG and JavaScript, the platform was supposed to make it easy to add features for the modern browser, such as video and audio, without requiring users to install numerous plug-ins.

And whilst this has worked to a certain degree, and a number of companies such as Microsoft, Apple, Google and Mozilla under the W3C have collaborated to bring together a single open standard, the problems it possesses cannot be ignored.

Thursday, April 12. 2012

Paranoid Shelter - [Implementation Code]

By Computed·By

-----

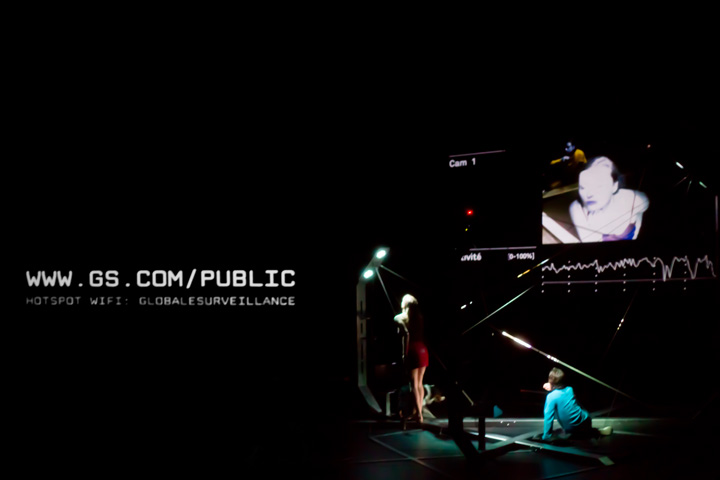

Paranoid Shelter is a recent installation / architectural device that fabric | ch finalized in late 2011 after a six-month residency at the EPFL-ECAL Lab in Renens (Switzerland). It was realized with the support of Pro Helvetia, the OFC, the City of Lausanne and the State of Vaud. It was initiated and first presented as sketches back in 2008 (!), in the context of a colloquium about surveillance at the Palais de Tokyo in Paris.

Created in the context of a theatrical collaboration with French writer and essayist Eric Sadin around his books about contemporary surveillance (Surveillance globale and Globale paranoïa, both published in 2009), Paranoid Shelter revisits the old figure/myth of the architectural shelter, articulated by the use of surveillance technologies as building blocks.

Additional information on the overall project can be found through the two following links:

Paranoid Shelter - (Globale Surveillance)

(Paranoid Shelter) - Globale Surveillance

A compressed preview and short of the play by NOhista.

-----

In the first technical drawings and sketches of the Paranoid Shelter project, the entire system looked like a (big) mess of wires, sensors and video cameras, all concentrated in a pretty tiny space where humans would have difficulty moving. The entire space is consciously organised around tracking methods/systems and is delimited by 3 [augmented] posts which host a set of sensors, video cameras and microphones. It includes networked [power over ethernet] video cameras, microphones and a set of wireless ambient sensors (able to measure temperature, O2 and CO2 gas concentration, current atmospheric pressure, light, etc.).

Based on a real-time analysis of the data coming from the main sensors, the system is able to control DMX lights and a set of two displays (one LCD screen and one projector), and to produce sound through a dynamically generated text-to-speech process.

All programs were developed using openFrameworks, enhanced by a set of dedicated in-house C++ libraries, in order to capture the video flow of networked cameras, control any DMX-compatible piece of hardware and collect data from the wireless Libelium sensors. The sound analysis programs, the LCD display program and the main program are all connected to each other via a local network. The main program is in charge of collecting the other programs' data, performing the global analysis of the system's activity, recording the system's raw information to a database and controlling the system's [re]actions (lights, display).

The overall system can act in an [autonomous] way, controlling the entire installation's behavior, but it can also be remotely controlled when used on stage, in the context of a theater play.

Collecting all sensor flows is one of the basic tasks. Cameras are used to track movements, microphones measure sound activity and sensors collect a set of ambient parameters. Even if data capture consists of some basic network-based tasks, it quickly becomes more complex when every data collection has to occur simultaneously, in real time, [without,with] a [limited,acceptable] delay. Most raw data analysis has to occur directly after data acquisition in order to minimize the time shift in the system's space awareness. This first level of data analysis mainly brings out frequency information, quantity of activity and 2D location tracking (from the point of view of each camera).

Every single piece of raw information is systematically recorded in a dedicated database: this reduces the system's memory footprint (by keeping it almost constant) without losing any activity information. From time to time, when required, the system can access this recorded information in its post-analysis process, mainly to add a time-scale dimension to the global activity that occurred in the monitored space. Time-isolated information can be interpreted in a rough and basic way, while the composition over time of the same information, or of a set of information, may bring additional meaning by verifying its consistency over time (of course, this can go either way, confirming or refuting a first-level deduced activity).

Another level of analysis can be reached by taking into account the spatial distribution of sensors in the overall installation. The system is then able to compute 3D information, gaining an awareness of activities within the space it is monitoring. This generates a second, spatialised level of data analysis that increases the system's global understanding of the captured data.
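As a rough illustration of the record-then-revisit idea described above, here is a minimal Python sketch (the installation itself is written in C++ with openFrameworks). The table layout, the function names, the window length and the thresholds are all assumptions made for this example, not the project's actual code. Raw readings are written straight to a database, and a later pass re-reads a short time window to confirm or refute a first-level detection.

```python
import sqlite3


def open_store(path=":memory:"):
    """Create the (hypothetical) raw-event table; pass a file path to persist it."""
    db = sqlite3.connect(path)
    db.execute(
        """
        CREATE TABLE IF NOT EXISTS raw_event (
            t        REAL NOT NULL,  -- timestamp in seconds
            sensor   TEXT NOT NULL,  -- e.g. 'cam1' or 'mic1'
            activity REAL NOT NULL   -- first-level activity score in [0, 1]
        )
        """
    )
    return db


def record(db, t, sensor, activity):
    """Store one raw reading in the database rather than in program memory."""
    db.execute("INSERT INTO raw_event VALUES (?, ?, ?)", (t, sensor, activity))


def confirmed_over_time(db, sensor, now, window=5.0, threshold=0.5, min_ratio=0.6):
    """Confirm (True) or refute (False) a detection using the last `window` seconds."""
    rows = db.execute(
        "SELECT activity FROM raw_event WHERE sensor = ? AND t > ? AND t <= ?",
        (sensor, now - window, now),
    ).fetchall()
    if not rows:
        return False  # nothing recorded, so nothing to confirm
    active = sum(1 for (a,) in rows if a >= threshold)
    return active / len(rows) >= min_ratio


db = open_store()
for t, a in enumerate([0.9, 0.8, 0.1, 0.7, 0.9]):
    record(db, float(t), "cam1", a)  # mostly active: should be confirmed
for t, a in enumerate([0.9, 0.1, 0.0, 0.2, 0.1]):
    record(db, float(t), "mic1", a)  # one isolated spike: should be refuted

print(confirmed_over_time(db, "cam1", now=4.0))
print(confirmed_over_time(db, "mic1", now=4.0))
```

A single high reading on `mic1` is refuted once the surrounding seconds are taken into account, while the sustained activity on `cam1` is confirmed. Because the history lives in the database, the program only holds the rows of the window it is currently examining.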

Recorded activities are made available to the [audience,visitors] through a wifi access point. Networked cameras can be accessed in real time, allowing humans to see some of the system's [inputs]. Network activity is therefore also monitored as another sign of human presence, so the system can [detect] activity elsewhere than in its dedicated space.

However numerous the collected data are, the system faces a real problem when it comes to interpreting them without the benefit of a human brain. Events that are quite obvious to humans do not mean anything to computers and software. In order to avoid the use of some artificial neural network simulation (which may still be a good option to explore), I decided to compute a limited set of parameters, all based on previously analysed data and only computed late, when the system may decide to react to perceived activities. These parameters define a kind of global [mood] of the system, based on which it will [decide] either to be aggressive (from a human point of view), by making the global tracking activity [noticeable] to humans moving within the installation's space, by focusing tracking sensors on a given area or by trying to enhance the analysis of some sensors' information, or to settle into a kind of silent mode.

Moreover, the evolution of these parameters is also studied over time, making the [mood] evolve in a human way, increasing and decreasing [analogically]. The system's [mood] may be wrong or [unjustified,weird] from a human point of view, but that's where [multi-dimensional] software becomes interesting. Beyond a certain complexity, with computation layers added on top of each other, having written every single line of code does not allow the programmer to predict precisely what the system's next [re]action will be.
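To make the idea of a [mood] that rises and falls gradually a bit more concrete, here is a small Python sketch. It is only one possible reading of the description above: the exponential smoothing, the `rate` value, the two thresholds and the class name `Mood` are assumptions chosen for the example, and the installation's real parameters are not documented here.

```python
from dataclasses import dataclass


@dataclass
class Mood:
    """Hypothetical mood value: 0.0 is calm, 1.0 is fully agitated."""

    level: float = 0.0
    rate: float = 0.2            # fraction of the gap to the stimulus closed per update
    wake_above: float = 0.7      # switch to aggressive mode above this level
    settle_below: float = 0.3    # fall back to silent mode below this level
    aggressive: bool = False

    def update(self, stimulus: float) -> float:
        """Move the mood gradually toward the current stimulus (clamped to [0, 1])."""
        stimulus = min(1.0, max(0.0, stimulus))
        self.level += self.rate * (stimulus - self.level)
        # Two separate thresholds (hysteresis) keep the mode from flickering
        # when the level hovers around a single cut-off value.
        if self.level > self.wake_above:
            self.aggressive = True
        elif self.level < self.settle_below:
            self.aggressive = False
        return self.level


mood = Mood()
for _ in range(6):
    mood.update(1.0)             # sustained activity in the space
print(round(mood.level, 3), mood.aggressive)

mood.update(0.0)                 # activity stops: the mood decays, but not instantly
print(round(mood.level, 3), mood.aggressive)
```

The mode does not flip on a single quiet reading: the level has to decay below the lower threshold first, which is one simple way of getting behaviour that increases and decreasing smoothly rather than switching abruptly.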

Here we reach the limitation of monitoring systems, which is obviously [interpretation,comprehension]. As long as an automatic system cannot correctly [understand] data, humans will need to be in the loop, making all these monitoring systems quite useless [as expert systems], except for producing an enormous quantity of data that still needs to be post-analysed by a human brain. As the system produces a large set of heterogeneous data, a set of rules may suggest some sort of data correlation to the system. These rules should not be too [tight,precise], in order to avoid producing obvious interpretations, while keeping them slightly [out of focus] may allow [smart,astonishing] conclusions to be produced. So there is room here for additional implementation of the data analysis processes, which can still completely change the way the entire installation [can,may] behave.

Thursday, April 05. 2012

MIT Project Aims to Deliver Printable, Mass-Market Robots

Via Wired

-----

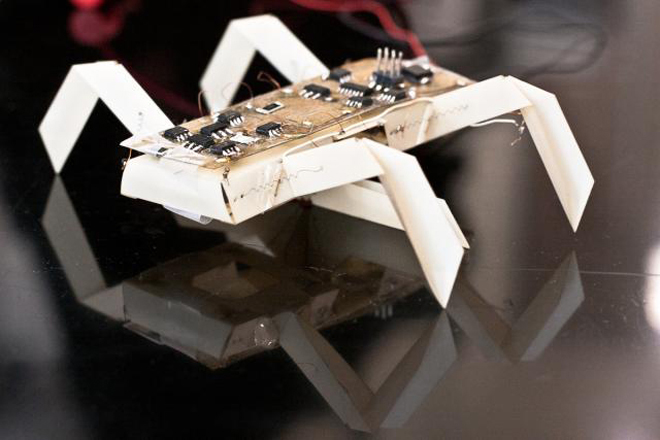

Insect printable robot. Photo: Jason Dorfman, CSAIL/MIT

Printers can make mugs, chocolate and even blood vessels. Now, MIT scientists want to add robo-assistants to the list of printable goodies.

Today, MIT announced a new project, “An Expedition in Computing Printable Programmable Machines,” that aims to give everyone a chance to have his or her own robot.

Need help peering into that unreasonably hard-to-reach cabinet, or wiping down your grimy 15th-story windows? Walk on over to robo-Kinko’s to print, and within 24 hours you could have a fully programmed working origami bot doing your dirty work.

“No system exists today that will take, as specification, your functional needs and will produce a machine capable of fulfilling that need,” MIT robotics engineer and project manager Daniela Rus said.

Unfortunately, the very earliest you’d be able to get your hands on an almost-instant robot might be 2017. The MIT scientists, along with collaborators at Harvard University and the University of Pennsylvania, received a $10 million grant from the National Science Foundation for the 5-year project. Right now, it’s at very early stages of development.

So far, the team has prototyped two mechanical helpers: an insect-like robot and a gripper. The 6-legged tick-like printable robot could be used to check your basement for gas leaks or to play with your cat, Rus says. And the gripper claw, which picks up objects, might be helpful in manufacturing, or for people with disabilities, she says.

The two prototypes cost about $100 and took about 70 minutes to build. The real cost to customers will depend on the robot’s specifications, its capabilities and the types of parts that are required for it to work.

The researchers want to create a one-size-fits-most platform to circumvent the high costs and special hardware and software often associated with robots. If their project works out, you could go to a local robo-printer, pick a design from a catalog and customize a robot according to your needs. Perhaps down the line you could even order-in your designer bot through an app.

Their approach to machine building could “democratize access to robots,” Rus said. She envisions producing devices that could detect toxic chemicals, aid science education in schools, and help around the house.

Although bringing robots to the masses sounds like a great idea (a sniffing bot to find lost socks would come in handy), there are still several potential roadblocks to consider — for example, how users, especially novice ones, will interact with the printable robots.

“Maybe this novice user will issue a command that will break the device, and we would like to develop programming environments that have the capability of catching these bad commands,” Rus said.

As it stands now, a robot would come pre-programmed to perform a set of tasks, but if a user wanted more advanced actions, he or she could build up those actions using the bot’s basic capabilities. That advanced set of commands could be programmed in a computer and beamed wirelessly to the robot. And as voice parsing systems get better, Rus thinks you might be able to simply tell your robot to do your bidding.

Durability is another issue. Would these robots be single-use only? If so, trekking to robo-Kinko’s every time you needed a bot to look behind the fridge might get old. These are all considerations the scientists will be grappling with in the lab. They’ll have at least five years to tease out some solutions.

In the meantime, it’s worth noting that other groups are also building robots using printers. German engineers printed a white robotic spider last year. The arachnoid carried a camera and equipment to assess chemical spills.

And at Drexel University, paleontologist Kenneth Lacovara and mechanical engineer James Tangorra are trying to create a robotic dinosaur from dino-bone replicas. The 3-D-printed bones are scaled versions of laser-scanned fossils. By the end of 2012, Lacovara and Tangorra hope to have a fully mobile robotic dinosaur, which they want to use to study how dinosaurs, like large sauropods, moved.

Lacovara thinks the MIT project is an exciting and promising one: “If it’s a plug-and-play system, then it’s feasible,” he said. But “obviously, it [also] depends on the complexity of the robot.” He’s seen complex machines with working gears printed in one piece, he says.

Right now, the MIT researchers are developing an API that would facilitate custom robot design and writing algorithms for the assembly process and operations.

If their project works out, we could all have a bot to call our own in a few years. Who said print was dead?

Friday, March 30. 2012

Fake ID holders beware: facial recognition service Face.com can now detect your age

Via VB

-----

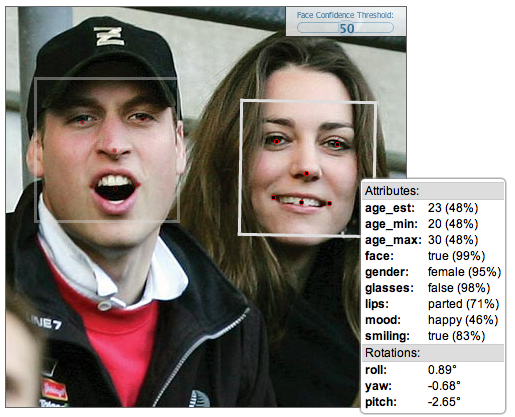

Facial-recognition platform Face.com could foil the plans of all those under-age kids looking to score some booze. Fake IDs might not fool anyone for much longer, because Face.com claims its new application programming interface (API) can be used to detect a person’s age by scanning a photo.

With its facial recognition system, Face.com has built two Facebook apps that can scan photos and tag them for you. The company also offers an API for developers to use its facial recognition technology in the apps they build.

Its latest update to the API can scan a photo and supposedly determine a person’s minimum age, maximum age, and estimated age. It might not be spot-on accurate, but it could get close enough to determine your age group.

“Instead of trying to define what makes a person young or old, we provide our algorithms with a ton of data and the system can reverse engineer what makes someone young or old,” Face.com chief executive Gil Hirsch told VentureBeat in an interview. “We use the general structure of a face to determine age. As humans, our features are either heightened or softened depending on the age. Kids have round, soft faces and as we age, we have elongated faces.”

The algorithms also take wrinkles, facial smoothness, and other telling age signs into account to place each scanned face into a general age group. The accuracy, Hirsch told me, is determined by how old a person looks, not necessarily how old they actually are. The API also provides a confidence level on how well it could determine the age, based on image quality and how the person looks in the photo, e.g. if they are turned to one side or are making a strange face.

“Adults are much harder to figure out [their age], especially celebrities. On average, humans are much better at detecting ages than machines,” said Hirsch.

The hope is to build the technology into apps that restrict or tailor content based on age. For example the API could be built into a Netflix app, scan a child’s face when they open the app, determine they’re too young to watch The Hangover, and block it. Or — and this is where the tech could get futuristic and creepy — a display with a camera could scan someone’s face when they walk into a store and deliver ads based on their age.
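The content-gating scenario above can be sketched in a few lines of Python. This is only an illustration: the article says the API reports a minimum age, a maximum age, an estimated age and a confidence level, but the field names, the `AgeEstimate` type, the `may_view` function and the 0.8 confidence cut-off below are all invented for the example and are not Face.com's actual interface.

```python
from dataclasses import dataclass


@dataclass
class AgeEstimate:
    """Hypothetical container for the four values the article mentions."""

    minimum: int
    maximum: int
    estimate: int
    confidence: float  # assumed to range from 0.0 to 1.0


def may_view(age: AgeEstimate, required_age: int, min_confidence: float = 0.8) -> str:
    """Return 'allow', 'block' or 'ask' (fall back to another check)."""
    if age.confidence < min_confidence:
        return "ask"      # poor photo or odd pose: do not trust the estimate
    if age.minimum >= required_age:
        return "allow"    # even the lowest plausible age is old enough
    if age.maximum < required_age:
        return "block"    # even the highest plausible age is too young
    return "ask"          # the range straddles the limit


print(may_view(AgeEstimate(30, 40, 35, 0.90), required_age=18))
print(may_view(AgeEstimate(8, 12, 10, 0.95), required_age=18))
print(may_view(AgeEstimate(15, 21, 18, 0.90), required_age=18))
```

Deciding on the range rather than on the single estimated age leaves an explicit "not sure" outcome, which fits the article's point that the estimate reflects how old someone looks and not necessarily how old they are.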

In addition to the age-detection feature, Face.com says it has updated its API with 30 percent better facial recognition accuracy and new recognition algorithms. The updates were announced Thursday and the API is available for any developer to use.

One developer has already used the API to build an app called Age Meter, which is available in the Apple App Store. On its iTunes page, the entertainment-purposes-only app shows pictures of Justin Bieber and Barack Obama with approximate ages above their photos.

Other companies in this space include Cognitec, with its FaceVACS software development kit, and Bayometric, which offers FaceIt Face Recognition. Google has also developed facial-recognition technology for Android 4.0 and Apple applied for a facial recognition patent last year.

The technology behind scanning someone’s picture, or even their face, to figure out their age still needs to be developed for complete accuracy. But the day when bouncers and liquor store cashiers can use an app to scan a fake ID holder’s face, determine that they are younger than the legal drinking age, and refuse to sell them wine coolers may not be too far off.

TRON... in 219 bytes

Via Christian Babski

-----

You can have an explanation from the authors of this short piece of code.

Or just play it (i, j, k or l to start)

How a web application can download and store over 2GB without you even knowing it

Via Jef Claes

-----

I have been experimenting with the HTML5 offline application cache some more over the last few days, doing boundary tests in an attempt to learn more about browser behaviour in edge cases.

One of these experiments was testing the cache quota.

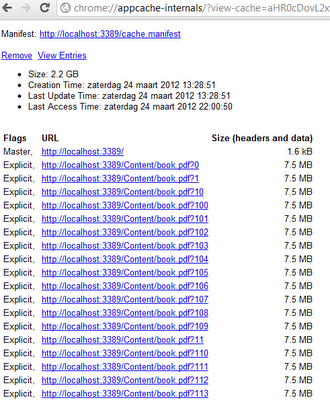

Two weeks ago, I blogged about generating and serving an offline application manifest using ASP.NET MVC. I reused that code to add hundreds of 7MB PDF files to the cache.

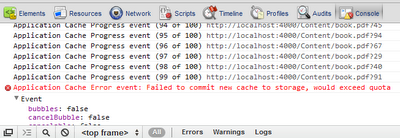

```csharp
public ActionResult Manifest()
{
    var cacheResources = new List<string>();
    var n = 300; // Play with this number

    for (var i = 0; i < n; i++)
        cacheResources.Add("Content/" + Url.Content("book.pdf?" + i));

    var manifestResult = new ManifestResult("1")
    {
        NetworkResources = new string[] { "*" },
        CacheResources = cacheResources
    };

    return manifestResult;
}
```

I initially tried adding 1000 PDF files to the cache, but this threw an error: Chrome failed to commit the new cache to the storage, because the quota would be exceeded.
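For readers who have not seen one, an offline application cache manifest is just a text file that starts with `CACHE MANIFEST` and lists URLs under `CACHE:` and `NETWORK:` sections. The Python sketch below builds roughly what an action like the one above might serve. The exact output of `ManifestResult` is not shown in this post, so the version comment line and the helper name `build_manifest` are assumptions.

```python
def build_manifest(version, cache_urls, network_urls=("*",)):
    """Return the text of an application cache manifest."""
    lines = ["CACHE MANIFEST", f"# version {version}", "", "CACHE:"]
    lines += list(cache_urls)      # resources the browser should download and store
    lines += ["", "NETWORK:"]
    lines += list(network_urls)    # "*" lets everything else go to the network
    return "\n".join(lines) + "\n"


# Same URL pattern as the C# loop: a different query string per entry,
# presumably so that each URL counts as a separate cache entry.
urls = [f"Content/book.pdf?{i}" for i in range(300)]
manifest = build_manifest("1", urls)

print("\n".join(manifest.splitlines()[:6]))
```

Changing the version comment is the usual way to make browsers notice that the manifest changed and re-download the listed resources.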

After lowering the number of files several times, I hit the sweet spot. I could add 300 PDF files to the cache without breaking it.
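A quick back-of-the-envelope check of those numbers, as a Python sketch. It assumes every cached copy takes the full 7MB stated above and ignores any per-entry overhead the browser may add.

```python
FILE_SIZE_MB = 7  # size of book.pdf as stated in the post


def cache_size_mb(n_files, file_size_mb=FILE_SIZE_MB):
    """Total size of n identical cached files, in MB."""
    return n_files * file_size_mb


for n in (300, 1000):
    total = cache_size_mb(n)
    print(f"{n} files -> {total} MB (about {total / 1024:.2f} GB)")
# 300 files -> 2100 MB (about 2.05 GB)
# 1000 files -> 7000 MB (about 6.84 GB)
```

So the 300-file manifest accounts for the "over 2GB" in the title, while the 1000-file attempt would have needed close to 7GB.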

Something else I noticed is that other sites now fail to commit anything to the application cache, due to the browser-wide quota being exceeded. I'm pretty sure this 'first browsed, first reserved' approach will be a source of frustration in the future.

To handle this scenario we could use the applicationCache API to listen for quota errors, and inform the user to browse to chrome://appcache-internals/ and remove other caches in favor of the new one. This feels sketchy though; shouldn't the browser intervene in a more elegant way here?

Friday, March 23. 2012

Android and Linux re-merge into one operating system

Via ZDNet

-----

Android has always been Linux, but for years the Android project went its own way and its code wasn’t merged back into the main Linux tree. Now, much sooner than Linus Torvalds, Linux’s founder and lead developer, had expected, Android has officially merged back into Linux’s mainline.

The fork between Android and Linux all began in the fall of 2010: “Google engineer Patrick Brady stated that Android is not Linux.” That was never actually the case. Android has always been Linux at heart.

At the same time though Google did take Android in a direction that wasn’t compatible with the mainstream Linux kernel. As Greg Kroah-Hartman, the maintainer of the stable Linux kernel for the Linux Foundation and head of the Linux Driver Project, wrote in Android and the Linux kernel community, “The Android kernel code is more than just the few weird drivers that were in the drivers/staging/android subdirectory in the kernel. In order to get a working Android system, you need the new lock type they have created, as well as hooks in the core system for their security model. In order to write a driver for hardware to work on Android, you need to properly integrate into this new lock, as well as sometimes the bizarre security model. Oh, and then there’s the totally-different framebuffer driver infrastructure as well.” That flew like a lead balloon in Android circles.

This disagreement sprang from several sources. One was that Google’s Android developers had adopted their own way to address power issues with WakeLocks. The other cause, as Google open source engineering manager Chris DiBona pointed out, was that Android’s programmers were so busy working on Android device specifics that they had done a poor job of coordinating with the Linux kernel developers.

As a result, developer circles have had many heated words over the right way of handling Android-specific code in Linux. The upshot of the dispute was that Torvalds dropped the Android drivers from the main Linux kernel in late 2009.

Despite these disagreements, there was never any danger, as one claim had it in March 2011, that Android would somehow be sued by Linux because of GNU General Public License, version 2 (GPLv2) violations. As Linus himself said at the time, claims that Android violated the GPL were “totally bogus. We’ve always made it very clear that the kernel system call interfaces do not in any way result in a derived work as per the GPL, and the kernel details are exported through the kernel headers to all the normal glibc interfaces too.”

Last fall, though, Torvalds explained that while “there’s still a lot of merger to be done … eventually Android and Linux would come back to a common kernel, but it will probably not be for four to five years.” Kroah-Hartman added at the time that one problem is that “Google’s Android team is very small and over-subscribed to so they’re resource restrained. It would be cheaper in the long run for them to work with us.” Torvalds then added that “We’re just going different directions for a while, but in the long run the sides will come together so I’m not worried.”

In the event, the re-merger of the two went much faster than expected. At the 2011 Kernel Summit in Prague in late October, the Linux kernel developers “agreed that the bulk of the Android kernel code should probably be merged into the mainline.” To help this process along, the Android Mainlining Project was formed.

Things continued to go along much faster than anyone had foreseen. By December, Kroah-Hartman could write, “by the 3.3 kernel release, the majority of the Android code will be merged, but more work is still left to do to better integrate the kernel and userspace portions in ways that are more palatable to the rest of the kernel community. That will take longer, but I don’t foresee any major issues involved.” He was right.

Today, you can compile the Android code in Linux 3.3 and it will boot. Still, as Kroah-Hartman warned, WakeLocks still aren’t in the main kernel, but even that’s getting worked on. For all essential purposes, Android and Linux are back together again.

Related Stories:

Linus Torvalds on Android, the Linux fork

It’s time Google starts paying for Android updates

Google open source guru says Android code will be in Linux kernel in time

Tuesday, March 13. 2012

Research in Programming Languages

Via Tagide

By Crista Videira Lopes

-----

Is there still research to be done in Programming Languages? This essay touches both on the topic of programming languages and on the nature of research work. I am mostly concerned with analyzing this question in the context of Academia, i.e. within the expectations of academic programs and research funding agencies that support research work in the STEM disciplines (Science, Technology, Engineering, and Mathematics). This is not the only possible perspective, but it is the one I am taking here.

PLs are dear to my heart, and a considerable chunk of my career was made in that area. As a designer, there is something fundamentally interesting in designing a language of any kind. It’s even more interesting and gratifying when people actually start exercising those languages to create non-trivial software systems. As a user, I love to use programming languages that I haven’t used before, even when the languages in question make me curse every other line.

But the truth of the matter is that ever since I finished my Ph.D. in the late 90s, and especially since I joined the ranks of Academia, I have been having a hard time convincing myself that research in PLs is a worthy endeavor. I feel really bad about my rational arguments against it, though. Hence this essay. Perhaps by the time I am done with it I will have come to terms with this dilemma.

Back in the 50s, 60s and 70s, programming languages were a BigDeal, with large investments, upfront planning, and big drama on standardization committees (Ada was the epitome of that model). Things changed dramatically during the 80s. Since the 90s, a considerable percentage of new languages that ended up being very popular were designed by lone programmers, some of them kids with no research inclination, some as a side hobby, and without any grand goal other than either making some routine activities easier or for plain hacking fun. Examples:

- PHP, by Rasmus Lerdorf circa 1994, “originally used for tracking visits to his online resume, he named the suite of scripts ‘Personal Home Page Tools,’ more frequently referenced as ‘PHP Tools.’ ” [1] PHP is a marvel of how a horrible language can become the foundation of large numbers of applications… for a second time! Worse is Better redux. According to one informal but interesting survey, PHP is now the 4th most popular programming language out there, losing only to C, Java and C++.

- JavaScript, by Brendan Eich circa 1995, “Plus, I had to be done in ten days or something worse than JS would have happened.” [2] According to that same survey, JavaScript is the 5th most popular language, and I suspect it is climbing up that rank really fast. It may be #1 by now.

- Python, by Guido van Rossum circa 1990, “I was looking for a ‘hobby’ programming project that would keep me occupied during the week around Christmas.” [3] Python comes at #6, and its strong adoption by scientific computing communities is well known.

- Ruby, by Yukihiro “Matz” Matsumoto circa 1994, “I wanted a scripting language that was more powerful than Perl, and more object-oriented than Python. That’s why I decided to design my own language.” [4] At #10 in that survey.

Compare this mindset with the context in which the older well-known programming languages emerged:

- Fortran, 50s, originally developed by IBM as part of their core business in computing machines.

- Cobol, late 50s, designed by a large committee from the onset, sponsored by the DoD.

- Lisp, late 50s, main project occupying 2 professors at MIT and their students, with the grand goal of producing an algebraic list processing language for artificial intelligence work, also funded by the DoD.

- C, early 70s, part of the large investment that Bell Labs was doing in the development of Unix.

- Smalltalk, early 70s, part of a large investment that Xerox did in “inventing the future” of computers.

Back then, developing a language processor was, indeed, a very big deal. Computers were slow, didn’t have a lot of memory, the language processors had to be written in low-level assembly languages… it wasn’t something someone would do in their rooms as a hobby, to put it mildly. Since the 90s, however, with the emergence of PCs and of decent low-level languages like C, developing a language processor is no longer a BigDeal. Hence, languages like PHP and JavaScript.

There is a lot of fun in designing new languages, but this fun is not an exclusive right of researchers with, or working towards, Ph.Ds. Given all the knowledge about programming languages these days, anyone can do it. And many do. And here’s the first itchy point: there appears to be no correlation between the success of a programming language and its emergence in the form of someone’s doctoral or post-doctoral work. This bothers me a lot, as an academic. It appears that deep thoughts, consistency, rigor and all other things we value as scientists aren’t that important for mass adoption of programming languages. But then again, I’m not the first to say it. It’s just that this phenomenon is hard to digest, and if you really grasp it, it has tremendous consequences. If people (the potential users) don’t care about conceptual consistency, why do we keep on trying to achieve that?

To be fair, some of those languages designed in the 90s as side projects, as they became important, eventually became more rigorous and consistent, and attracted a fair amount of academic attention and industry investment. For example, the Netscape JavaScript hacks quickly fell on Guy Steele’s lap resulting in the ECMAScript specification. Python was never a hack even if it started as a Christmas hobby. Ruby is a fun language and quite elegant from the beginning. PHP… well… it’s fun for possibly the wrong reasons. But the core of the matter is that “the right thing” was not the goal. It seems that a reliable implementation of a language that addresses an important practical need is the key for the popularity of a programming language. But being opportunistic isn’t what research is supposed to be about… (or is it?)

Also to be fair, not all languages designed in the 90s and later started as side projects. For example, Java was a relatively large investment by Sun Microsystems. So was .NET later by Microsoft.

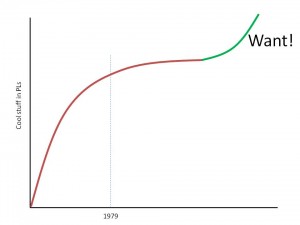

And, finally, all of these new languages, even when created over a week as someone’s pet project, sit on the shoulders of all things that existed before. This leads me to the second itch: one striking commonality in all modern programming languages, especially the popular ones, is how little innovation there is in them! Without exception, including the languages developed in research groups, they all feel like mashups of concepts that already existed in programming languages in 1979, wrapped up in their own idiosyncratic syntax. (I lied: exceptions go to aspects and monads both of which came in the 90s)

So one pertinent question is: given that not much seems to have emerged since 1979 (that’s 30+ years!), is there still anything to innovate in programming languages? Or have we reached the asymptotic plateau of innovation in this area?

I need to make an important detour here on the nature of research.

<Begin Detour>

Perhaps I’m completely off; perhaps producing innovative new software is not a goal of [STEM] research. Under this approach, any software work is dismissed from STEM pursuits, unless it is necessary for some specific goal — like if you want to study some far-off galaxy and you need an IT infrastructure to collect the data and make simulations (S for Science); or if you need some glue code for piecing existing systems together (T for Technology); or if you need to improve the performance of something that already exists (E for Engineering); or if you are working on some Mathematical model of computation and want to make your ideas come to life in the form of a language (M for Mathematics). This is an extreme submissive view of software systems, one that places software in the back seat of STEM and that denies the existence of value in research in/by software itself. If we want to lead something on our own, let’s just… do empirical studies of technology or become biologists/physicists/chemists/mathematicians or make existing things perform better or do theoretical/statistical models of universes that already exist or that are created by others. Right?

I confess I have a dysfunctional relationship with this idea. Personally, I can’t be happy without creating software things, but I have been able to make my scientist-self function both as a cold-minded analyst and, at times, as an expert passenger in someone else’s research project. The design work, for me, has moved to sabbatical time, evenings and weekends; I don’t publish it [much] other than the code itself and some informal descriptions. And yet, I loathe this situation.

I loathe it because it’s clear to me that software systems are something very, very special. Software revolutionized everything in unexpected ways, including the methods and practices that our esteemed colleagues in the “hard” sciences have held near and dear for a very long time. The evolution of information technology in the past 60 years has been way off from what our colleagues thought they needed. Over and over again, software systems have been created that weren’t part of any scientific project, as such, and that ended up playing a central role in Science. Instead of trying to mimic our colleagues’ traditional practices, “computer scientists” ought to be showing the way to a new kind of science — maybe that new kind of science or that one or maybe something else. I dare to suggest that the something else is related to the design of things that have software in them. It should not be called Science. It is a bit like Engineering, but it’s not it either because we’re not dealing [just] with physical things. Technology doesn’t cut it either. It needs a new name, something that denotes “the design of things with software in them.” I will call it Design for short, even though that word is so abused that it has lost its meaning.

<Suspend Detour>

Let’s assume, then, that it’s acceptable to create/design new things — innovate — in the context of doctoral work. Now comes the real hard question.

If anyone — researchers, engineers, talented kids, summer interns — can design and implement programming languages, what are the actual hard goals that doctoral research work in programming languages seeks, the ones that distinguish it from what anyone can do?

Let me attempt to answer these questions, first, with some well-known goals of language design:

- Performance — one can always have more of this; certain application domains need it more than others. This usually involves having to come up with interesting data structures and algorithms for the implementation of PLs that weren’t easy to devise.

- Human Productivity — one can always want more of this. There is no ending to trying to make development activities easier/faster.

- Verifiability — in some domains this is important.

There are other goals, but they are second-order. For example, languages may also need to catch up with innovations in hardware design; multi-core comes to mind. This is a second-order goal: the real goal behind it is to increase performance by taking advantage of potentially higher-performing hardware architectures.

In other words, someone wanting to do doctoral research work in programming languages ought to have one or more of these goals in mind, and — very important — ought to be ready to demonstrate how his/her ideas meet those goals. If you tell me that your language makes something run faster or consume less energy, makes some task easier, or results in programs with fewer bugs, the scientist in me demands that you show me the data that supports such claims.

A lot of research activity in programming languages falls under the performance goal, the Engineering side of things. I think everyone in our field understands what this entails, and is able to differentiate good work from bad work under that goal. But a considerable amount of research activities in programming languages invoke the human productivity argument; entire sub-fields have emerged focusing on the engineering of languages that are believed to increase human productivity. So I’m going to focus on the human productivity goal. The human productivity argument touches on the core of what attracts most of us to creating things: having a direct positive effect on other people. It has been carelessly invoked since the beginning of Computer Science. (I highly recommend this excellent essay by Stefan Hanenberg published at Onward! 2010 with a critique of software science’s neglect of human factors)

Unfortunately, this argument is the hardest to defend. In fact, I have yet to see the first study that convincingly demonstrates that a programming language, or a certain feature of programming languages, makes software development a more productive process. If you know of such a study, please point me to it. I have seen many observational studies and controlled experiments that try to do it [5, 6, 7, 8, 9, 10, among many]. I think those studies are really important, and there ought to be more of them, but they are always very difficult to do [well]. Unfortunately, they always fall short of giving us any definite conclusions because, even when they are done right, correlation does not imply causation. Hence the never-ending ping-pong between studies that focus on the same thing and seem to reach opposite conclusions, best known in the health sciences. We are starting to see that ping-pong in software science too, for example 7 vs 9. But at least these studies show some correlations, or lack thereof, given specific experimental conditions, and they open the healthy discussion about what conditions should be used in order to get meaningful results.

I have seen even more research and informal articles about programming languages that claim benefits to human productivity without providing any evidence for it whatsoever, other than the authors’ or the community’s intuition, at best based on rational deductions from abstract beliefs that have never been empirically verified. Here is one that surprised me because I have the highest respect for the academic soundness of Haskell. Statements like this “Haskell programs have fewer bugs because Haskell is: pure [...], strongly typed [...], high-level [...], memory managed [...], modular [...] [...] There just isn’t any room for bugs!” are nothing but wishful thinking. Without the data to support this claim, this statement is deceptive; while it can be made informally in a blog post designed to evangelize the crowd, it definitely should not be made in the context of doctoral work unless that work provides solid evidence for such a strong statement.

That article is not an outlier. The Internets are full of articles claiming improved software development productivity for just about every other language. No evidence is ever provided; the argumentation is always either (a) deduced from principles that are supposed to be true but that have never been verified, or (b) extrapolated from ad-hoc, highly biased, severely skewed personal experiences.

This is the main reason why I stopped doing research in Programming Languages in any official capacity. Back when I was one of the main evangelists for AOP I realized at some point that I had crossed the line to saying things for which I had very little evidence. I was simply… evangelizing, i.e. convincing others of an idea that I believed strongly. At some point I felt I needed empirical evidence for what I was saying. But providing evidence for the human productivity argument is damn hard! My scientist self cannot lead doctoral students into that trap, a trap that I know too well.

Moreover, designing and executing the experiments that lead to uncovering such evidence requires a lot of time and a whole other set of skills that have absolutely nothing to do with the time and skills for actually designing programming languages. We need to learn the methods that experimental psychologists use. And, at the end of all that work, we will be lucky if we unveil correlations but we will not be able to draw any definite conclusions, which is… depressing.

But without empirical evidence of any kind, and from a scientific perspective, unsubstantiated claims pertaining to, say, Haskell or AspectJ (which are mostly developed and used by academics and have been the topic of many PhD dissertations) are as good as unsubstantiated claims pertaining to, say, PHP (which is mostly developed and used by non-academics). The PHP community is actually very honest when it comes to stating the benefits of using the language. For example, here is an honest-to-god set of reasons for using PHP. Notice that there are no claims whatsoever about PHP leading to fewer bugs or higher programmer productivity (as if anyone would dare to state that!); they’re just pragmatic reasons. (Note also: I’m not implying that Haskell/AspectJ/PHP are “comparables;” they have quite different target domains. I’m just comparing the narratives surrounding those languages, the “stories” that the communities tell within themselves and to others)

OK, now that I made 823 enemies by pointing out that the claims about human productivity surrounding languages that have emerged in academic communities — and therefore ought to know better — are unsubstantiated, PLUS 865 enemies by saying that empirical user studies are inconclusive and depressing… let me try to turn my argument around.

Is the high bar of scientific evidence killing innovation in programming languages? Is this what’s causing the asymptotic behavior? It certainly is what’s keeping me away from that topic, but I’m just a grain of sand. What about the work of many who propose intriguing new design ideas that are then shot down in peer-review committees because of the lack of evidence?

This ties back to my detour on the nature of research.

<Join Detour> Design experimentation vs. Scientific evidence

So, we’re back to whether design innovation per se is an admissible first-order goal of doctoral work or not. And now that question is joined by a counterpart: is the provision of scientific evidence really required for doctoral work in programming languages?

If what we have in hand is not Science, we need to be careful not to blindly adopt methods that work well for Science, because that may kill the essence of our discipline. In my view, that essence has been the radical, fast-paced, off the mark design experimentation enabled by software. This rush is fairly incompatible with the need to provide scientific evidence for the design “hopes.”

I’ll try a parallel: drug design, the modern-day equivalent of alchemy. In terms of research it is similar to software: partly based on rigor, partly on intuitions, and now also on automated tools that simply perform an enormous amount of logical combinations of molecules and determine some objective function. When it comes to deployment, whoever is driving that work better put in place a plan for actually testing the theoretical expectations in the context of actual people. Does the drug really do what it is supposed to do without any harmful side effects? We require scientific evidence for the claimed value of experimental drugs. Should we require scientific evidence for the value of experimental software?

The parallel diverges significantly with respect to the consequences of failure. A failure in drug design experimentation may lead to people dying or getting even more sick. A failure in software design experimentation is only a big deal if the experiment had a huge investment from the beginning and/or pertains to safety-critical systems. There are still some projects like that, and for those, seeking solid evidence of their benefits before deploying the production version of the experiment is a good thing. But not all software systems are like that. Therefore the burden of scientific evidence may be too much to bear. It is also often the case that over time, the enormous amount of testing by real use is enough to provide assurances of all kinds.

One good example of design experimentation being at odds with scientific evidence is the proposal that Tim Berners-Lee made to CERN regarding the implementation of the hypertext system that became the Web. Nowhere in that proposal do we find a plan for verification of claims. That’s just a solid good proposal for an intriguing “linked information system.” I can imagine TB-L’s manager thinking: “hmm, ok, this is intriguing, he’s a smart guy, he’s not asking for that many resources, let’s have him do it and see what comes of it. If nothing comes of it, no big deal.” Had TB-L had to devise a scientific or engineering assessment plan for that system beyond “in the second phase, we’ll install it on many machines” maybe the world would be very different today, because he might have gotten caught in the black hole of trying to find quantifiable evidence for something that didn’t need that kind of validation.

Granted, this was not a doctoral topic proposal; it was a proposal for the design and implementation of a very concrete system with software in it, one that (1) clearly identified the problem, (2) built on previous ideas, including the author’s own experience, (3) had some intriguing insights in it, (4) stated expected benefits and potential applications — down to the prediction of search engines and graph-based data analysis. Should a proposal like TB-L’s be rejected if it were to be a doctoral topic proposal? When is one unproven design idea doctoral material while another isn’t? If we are to accept design ideas without validation plans as doctoral material, how do we assess them?

Towards the discipline of Design

In order to do experimental design research AND be scientifically honest at the same time, one needs to let go of claims altogether. In that dreadful part of a topic proposal where the committee asks the student “what are your claims?” the student should probably answer “none of interest.” In experimental design research, one can have hopes or expectations about the effects of the system, and those must be clearly articulated, but very few certainties will likely come out of such type of work. And that’s ok! It’s very important to be honest. For example, it’s not ok to claim “my language produces bug-free programs” and then defend this with a deductive argument based on unproven assumptions; but it’s ok to state “I expect that my language produces programs with fewer bugs [but I don't have data to prove it].” TB-L’s proposal was really good at being honest.

Finally, here is an attempt at establishing rigorous criteria for design assessment in the context of doctoral and post-doctoral research:

- Problem: how important and surprising is the problem and how good is its description? The problem space is, perhaps, the most important component for a piece of design research work. If the design is not well grounded in an interesting and important problem, then perhaps it’s not worth pursuing as research work. If it’s an old, hard problem, it should be formulated in a surprising manner. Very often, the novelty of a design lies not in the design itself but in its designer seeing the problem differently. So — surprise me with the problem. Show me insights on the nature of the problem that we don’t already know.

- Potential: what intriguing possibilities are unveiled by the design? Good design research work should open up doors for new avenues of exploration.

- Feasibility: good design research work should be grounded on what is possible to do. The ideas should be demonstrated in the form of a working system.

- Additionally, design research work, like any other research work, needs to be placed in a solid context of what already exists.

These criteria have two consequences that I really like: first, they substantiate our intuitions about proposals such as TB-L’s “linked information system” being a fine piece of [design] research work; second, they substantiate our intuitions on the difference between languages like Haskell and languages like PHP. I leave that as an exercise to the reader!

Coming to terms

I would love to bring design back to my daytime activities. I would love to let my students engage in designing new things such as new programming languages and environments — I have lots of ideas for what I would like to do in that area! I believe there is a path to establishing a set of rigorous criteria regarding the assessment of design that is different from scientific/quantitative validation. All this, however, doesn’t depend on me alone. If my students’ papers are going to be shot down in program committees because of the lack of validation, then my wish is a curse for them. If my grant proposals are going to be rejected because they have no validation plan other than “and then we install it in many machines” or “and then we make the software open source and free of charge” then my wish is a curse for me. We need buy-in from a much larger community — in a way, reverse the trend of placing software research under the auspices of science and engineering [alone].

This, however, should only be done after the community understands what science and scientific methods are all about (the engineering ones — everyone knows about them). At this point there is still a severe lack of understanding of science within the CS community. Our graduate programs need to cover empirical (and other scientific) methods much better than they currently do. If we simply continue to ignore the workings of science and the burden of scientific proof, we end up continuing to make careless religious statements about our programming languages and systems that simply will lead nowhere, under the misguided impression that we are scientists because the name says so.

Copyright © Crista Videira Lopes. All rights reserved.

Note: this is a work-in-progress essay. I may update it from time to time. Feedback welcome.

Thursday, February 23. 2012

MOTHER artificial intelligence forces nerds to do the chores… or else

Via Slash Gear

-----

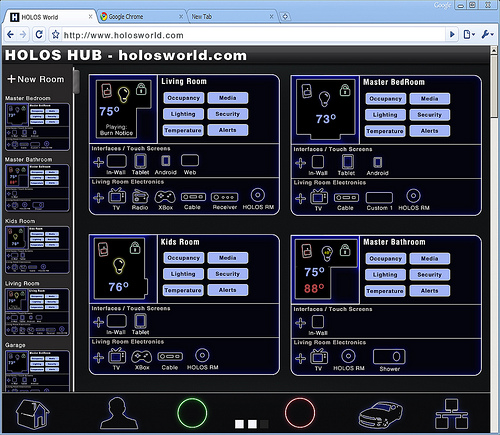

If you’ve ever been inside a dormitory full of computer science undergraduates, you know what horrors come of young men free of responsibility. To help combat the lack of homemaking skills in nerds everywhere, a group of them banded together to create MOTHER, a combination of home automation, basic artificial intelligence and gentle nagging designed to keep a domicile running at peak efficiency. And also possibly kill an entire crew of space truckers if they should come in contact with a xenomorphic alien – but that code module hasn’t been installed yet.

The project comes from the LVL1 Hackerspace, a group of like-minded programmers and engineers. The aim is to create an AI suited for a home environment that detects issues and gets its users (i.e. the people living in the home) to fix them. Through an array of digital sensors, MOTHER knows when the trash needs to be taken out, when the door is left unlocked, et cetera. If something isn’t done soon enough, it can even disable the Internet connection for individual computers. MOTHER can notify users of tasks that need to be completed through a standard computer, phones or email, or stock ticker-like displays. In addition, MOTHER can use video and audio tools to recognize individual users, adjust the lighting, video or audio to their tastes, and generally keep users informed and creeped out at the same time.
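To make the nag-then-punish behavior concrete, here is a minimal Python sketch of how such an escalation rule might look. It is not taken from the MOTHER code base: the `Chore` type, the `decide_action` function and both time thresholds are hypothetical names and values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Chore:
    name: str
    overdue_minutes: int  # how long a sensor has been reporting the problem


def decide_action(chore, nag_after=30, cutoff_after=120):
    """Return the escalation step for a chore (thresholds are assumed values)."""
    if chore.overdue_minutes >= cutoff_after:
        # Last resort described in the article: cut the user's Internet access.
        return "disable_internet"
    if chore.overdue_minutes >= nag_after:
        # First step: a reminder by computer, phone, email or ticker display.
        return "notify"
    return "none"


if __name__ == "__main__":
    for minutes in (10, 45, 180):
        print(minutes, decide_action(Chore("take out trash", minutes)))
```

A real system would presumably track who is responsible for each chore and which machine to cut off; the sketch only shows the ordering of the two responses the article mentions.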

MOTHER’s abilities are technically limitless – since it’s all based on open source software, those with the skill, inclination and hardware components can add functions at any time. Some of the more humorous additions already in the project include an instant dubstep command. You can build your own MOTHER (boy, there’s a sentence I never thought I’d be writing) by reading through the official Wiki and assembling the right software, sensors, servers and the like. Or you could just invite your mom over and take your lumps. Your choice.

Monday, February 13. 2012

Computer Algorithm Used To Make Movie For Sundance Film Festival

Via Singularity Hub

-----

Indie movie makers can be a strange bunch, pushing the envelope of their craft and often losing us along the way. In any case, if you’re going to produce something unintelligible anyway, why not let a computer do it? Eve Sussman and the Rufus Corporation did just that. She and lead actor Jeff Wood traveled to the Kazakhstan border of the Caspian Sea for two years of filming. But instead of a movie with a beginning, middle and end, they shot 3,000 individual and unrelated clips. To the clips they added 80 voice-overs and 150 pieces of music, mixed it all together and put it in a computer. A program on her Mac G5 tower, known at Rufus as the “serendipity machine,” then splices the bits together to create a final product.

As you might imagine, the resultant film doesn’t always make sense. But that’s part of the fun! As the Rufus Corporation writes on their website, “The unexpected juxtapositions create a sense of suspense alluding to a story that the viewer composes.”

It’s a clever experiment even if some viewers end up wanting to gouge their eyes out after a sitting. And there is some method to their madness. The film, titled “whiteonwhite:algorithmicnoir,” is centered on a geophysicist named Holz (played by Wood) who’s stuck in a gloomy, 1970’s-looking city operated by the New Method Oil Well Cementing Company. Distinct scenes such as wiretapped conversations or a job interview for Mr. Holz are (hopefully) woven together by distinct voiceovers and dialogues. When the scenes and audio are entered into the computer they’re tagged with keywords. The program then pieces them together in a way similar to Pandora’s stringing together of like music. If a clip is tagged “white,” the computer will randomly select from tens of other clips also having the “white” tag. The final product is intended to be a kind of “dystopian futuropolis.” What that means, however, changes with each viewing as no two runs are the same.
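The tag-matching idea is simple enough to sketch in a few lines of Python. This is only an illustration of the technique as described above, not the actual “serendipity machine”: the clip names, the tags and the `next_clip` and `assemble` functions are all made up for the example.

```python
import random

# Hypothetical clips, each tagged with keywords as the article describes.
CLIPS = [
    {"name": "wiretap", "tags": {"white", "office"}},
    {"name": "interview", "tags": {"office", "holz"}},
    {"name": "snowfield", "tags": {"white", "exterior"}},
    {"name": "oil_rig", "tags": {"exterior", "holz"}},
]


def next_clip(current, clips, rng):
    """Randomly pick a different clip that shares at least one tag with the current one."""
    candidates = [c for c in clips if c is not current and c["tags"] & current["tags"]]
    return rng.choice(candidates) if candidates else None


def assemble(clips, length, seed=None):
    """Chain clips by shared tags; a different seed gives a different cut."""
    rng = random.Random(seed)
    current = rng.choice(clips)
    sequence = [current["name"]]
    while len(sequence) < length:
        current = next_clip(current, clips, rng)
        if current is None:  # dead end: no other clip shares a tag
            break
        sequence.append(current["name"])
    return sequence


if __name__ == "__main__":
    print(assemble(CLIPS, 6))
```

Leaving `seed` unset mirrors the point that no two runs are the same; passing a fixed seed makes a particular cut reproducible.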

Watching the following trailer, I actually got a sense…um, I think…of a story.

Rufus Corporation says the movie was “inspired by Suprematist quests for transcendence, pure space and artistic higher ground.” I have no idea what that means but I hope they’ve achieved it. Beautiful things can happen when computers create art. And it’s only a matter of time before people attempt the same sort of thing with novel writing. Just watching the trailer, it’s hard to tell if the movie’s any good or not. I missed the showings at the Sundance Film Festival, but even so, they probably didn’t resemble the trailer anyway. And that’s okay, because that’s the whole point.

[image credits: Rufus Corporation and PRI via YouTube][video credit: PRI via YouTube]
