Wednesday, December 05. 2012

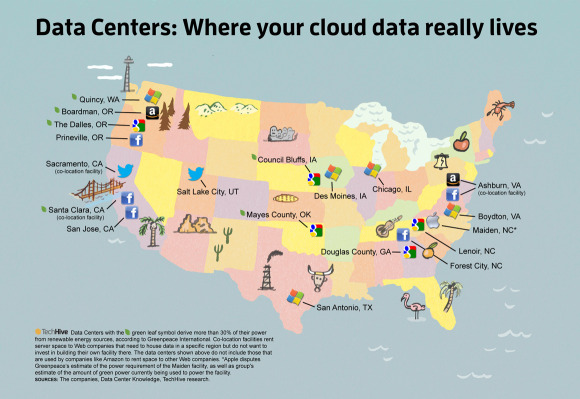

Infographic: Where your cloud data really lives

Via TechHive

-----

More and more of our data--our credit card numbers, tweets, photos, personal documents, browsing habits, music, and a hundred other things--is stored "in the cloud." The cloud metaphor evokes images of bits and bytes floating around in the ether somewhere, and we rarely hear tech companies talking about their data centers, where the data really lives.

That's partly because data centers are boring. They're typically huge concrete buildings that contain rows and rows of servers in racks, with a couple of guys who walk around looking thoughtfully at little blinking lights and then making little checkmarks on a clipboard. Another reason you don't hear much about data centers is that all those servers require huge amounts of power to run and keep cool, and in some cases this makes them far from green.

At any rate, the image below shows the locations of many of the major data centers that preserve your Amazon, Apple, Facebook, Google, Microsoft, and Twitter data.

Monday, December 03. 2012

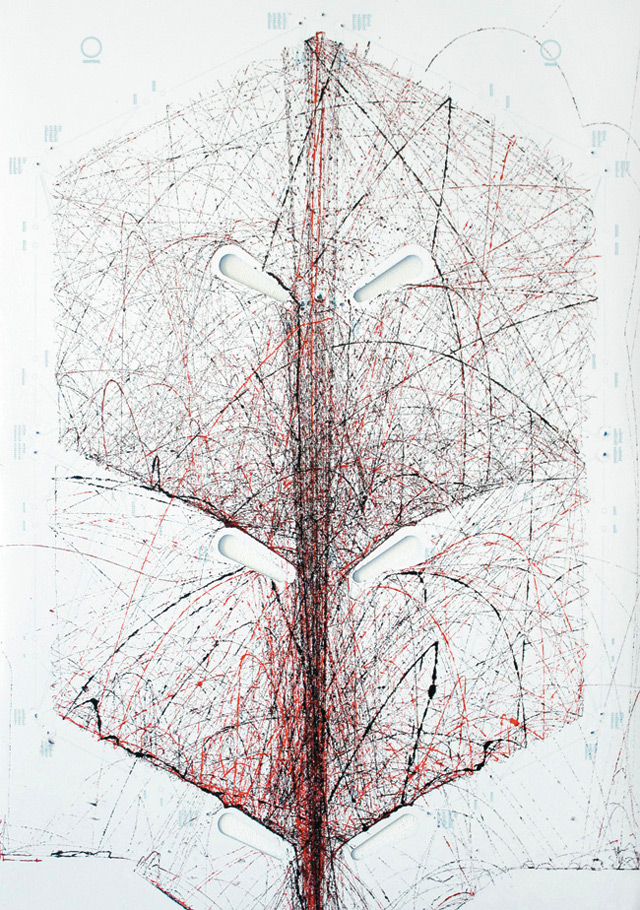

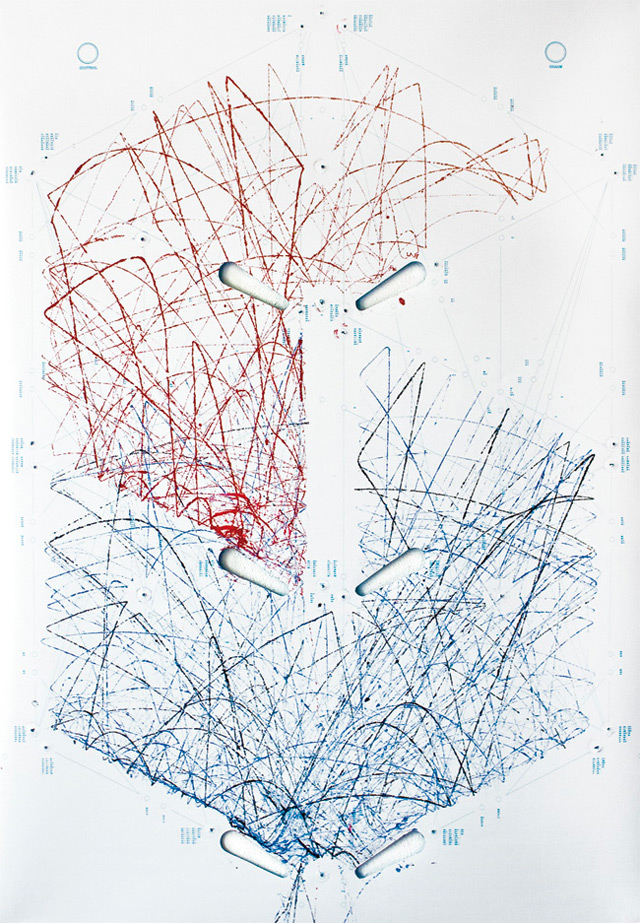

A Drawing Machine that Records the Chaos of Pinball

Via This is colossal

-----

From the pendulum-based drawing machine by Eske Rex to the art of Tim Knowles, who attaches writing implements to trees, I love when the seemingly random lines of chaos (or maybe just physics) are rendered visible using ink or pencil. This latest project, titled STYN, by Netherlands-based graduate student Sam van Doorn is no exception. Using modified parts from an old pinball machine, van Doorn created a one-of-a-kind drawing device that utilizes standard flippers to control an ink-covered sphere that moves across a temporary poster placed on the game surface. He suggests that skill then becomes a factor, as the better you are at pinball the more complex the drawing becomes. See much more on his website, here. My drawing would have a single line that goes between the flippers and then have TILT written all over it.

Thursday, July 12. 2012

Why the GUI Will Never Kill the Sacred Command Line

Via Wired

-----

He called himself “MSP,” and he appeared out of nowhere, launching a one-man flame war against a sacred cow of hardcore computing: the command line.

The venue was TuxRadar, a news and reviews site that shines a spotlight on the Linux operating system and other open source software. The site had just published a piece in praise of the command line — where you interact with a computer by passing it line after line of text, rather than using a graphical user interface, or GUI. “The command line isn’t a crusty, old-fashioned way to interact with a computer, made obsolete by GUIs, but rather a fantastically flexible and powerful way to perform tasks,” the site said.

Then MSP appeared with his flame thrower. “There seem to be a number of obvious errors in the introduction to this article,” he wrote. “The command line is a crusty, old-fashioned way to interact with a computer, made obsolete by GUIs, but a small hardcore of people who refuse to move on still use it.”

As he likely expected, the Linux-happy commenters at TuxRadar didn’t take kindly to his “corrections.” Dozens vehemently defended the command line, insisting it still has a very important place in the world of computing. And they’re right. Though the average computer user has no need for a command line, it’s still an essential tool for developers and system administrators who require access to the guts of our machines, and it’s not going away anytime soon.

“People drive cars with steering wheels and gas pedals. Does that mean you don’t need wrenches?” says Rob Pike, who was part of the team at Bell Labs that developed the UNIX operating system and now works at Google, where he oversaw the creation of the Go programming language.

Back in the ’70s and early ’80s, if you used a computer, you used a command line. DOS, the disk operating system that runs atop IBM PCs, used a command line interface, and that’s what UNIX used too. But then came the Apple Macintosh and Microsoft Windows, and by the mid-’90s, most of us had moved to GUIs. The GUI is more effective not only when you’re navigating an operating system you’re not exactly familiar with, but also when you’re typing large amounts of text. Your word processor, for instance, uses a WYSIWYG, or what-you-see-is-what-you-get, graphical interface.

“Try creating a complex document in a mark-up language using a text editor,” writes one commenter on TuxRadar. “It can be done, but generally using a graphical WYSIWYG interface is a far faster and accurate approach.”

GUIs have even reinvented the world of software development, beginning with tools like Visual Basic, before extending coding tasks to the average joe with new-age tools such as Scratch and Google’s App Inventor.

But among hardcore computer types — i.e., the audience reading TuxRadar — the command line persists. If you’re a developer or a sysadmin, there are times when it makes more sense to use the command line interface, or “shell,” built into operating systems like Linux and UNIX. “It depends on what you’re doing,” Pike tells Wired. “All computing, at some level, is abstraction and yet deep down beneath there are hardware instructions doing the job. It depends on the level you’re working at.”

In some cases, command line interfaces provide access to lower levels of a machine’s software and hardware. And they’re often easier to manipulate with “scripts,” mini text programs that automate processes for system administrators and others.
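To make “scripts” concrete, here is a minimal sketch of the kind of small text program that paragraph describes. It is written in Python rather than a shell language, and the task (counting lines that contain "ERROR" in every `.log` file in a directory), the function name `count_error_lines` and the file name `count_errors.py` are hypothetical, chosen only for illustration.

```python
from pathlib import Path
import sys


def count_error_lines(log_dir, needle="ERROR"):
    """Return {file name: number of lines containing `needle`} for each .log file."""
    counts = {}
    for path in sorted(Path(log_dir).glob("*.log")):
        with path.open(encoding="utf-8", errors="replace") as handle:
            counts[path.name] = sum(1 for line in handle if needle in line)
    return counts


if __name__ == "__main__":
    # Usage (the path is a placeholder): python count_errors.py /path/to/logs
    target = sys.argv[1] if len(sys.argv) > 1 else "."
    for name, total in count_error_lines(target).items():
        print(f"{name}: {total}")
```

Once saved, a script like this can be rerun on any directory with one typed line, which is the sort of repetition that is tedious to do by clicking through files one at a time.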

“Anyone insisting the command line is a relic of a by-gone time is hopelessly deluded,” argues another commenter in the TuxRadar debate. “I have a very nice [desktop] set up at home, with lots of graphical applications, but I just find it quicker to write scripts and use the shell than to hunt through menus to find what I want.”

But in other cases, geeks like command lines just because you have to know what you’re doing to use them. You have to know the commands. You can’t hunt and peck like you do with a GUI.

Pike calls the kerfuffle sparked by MSP a “sterile debate.” But MSP insists that the command line should disappear. The problem, he writes, is that GUIs just aren’t as effective as they should be. “When people using a particular system say ‘the command line is better because it can do things you can’t do in the GUI’ they are not talking about the strengths of the command line interface, but about the shortcomings in the GUI,” he says.

OK. Fine. But until the GUI evolves again, the command line is here to stay.

Thursday, May 31. 2012

In Seoul, retailer uses 3D QR codes and the sun to deliver discounts only during its quiet times

Via Spring Wise

-----

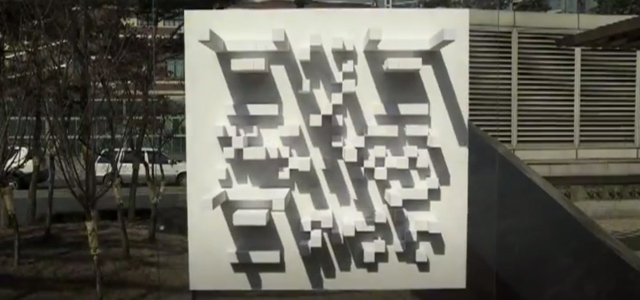

Korean Emart recently placed 3D QR code sculptures throughout the city of Seoul that could only be scanned between noon and 1 pm each day — consumers were given discounts at the store during those quiet shopping hours.

Periodic lulls in business are a fact of life for most retailers, and we’ve already seen solutions including daily deals that are valid only during those quiet times. Recently, however, we came across a concept that takes such efforts even further. Specifically, Korean Emart recently placed 3D QR code sculptures throughout the city of Seoul that could only be scanned between noon and 1 pm each day — consumers who succeeded were rewarded with discounts at the store during those quiet shopping hours.

Dubbed “Sunny Sale,” Emart’s effort involved setting up a series of what it calls “shadow” QR codes that depend on peak sunlight for proper viewing and were scannable only between 12 and 1 pm each day. Successfully scanning a code took consumers to a dedicated home page with special offers including a coupon worth USD 12. Purchases could then be made via smartphone for delivery direct to the consumer’s door. The video below explains the campaign in more detail:

As a result of its creative promotion, Emart reportedly saw membership increase by 58 percent in February over the previous month; it also observed a 25 percent increase in sales during lunch hours. Retailers around the globe: One for inspiration?

Wednesday, April 04. 2012

8 bits wide world

Wednesday, November 16. 2011

Artist Prints Out 24 Hours of Flickr Uploads, Over One Million Photos

Via GeekOSystem

-----

A new installation at the Amsterdam Foam gallery by Erik Kessels takes a literal look at the digital deluge of photos online by printing out 24 hours’ worth of uploads to Flickr. The result is rooms filled with over 1,000,000 printed photos, piled up against the walls.

There’s a sense of waste and a maddening disorganization to it all, both of which are apparently intentional. According to Creative Review, Kessels said of his own project:

“We’re exposed to an overload of images nowadays,” says Kessels. “This glut is in large part the result of image-sharing sites like Flickr, networking sites like Facebook, and picture-based search engines. Their content mingles public and private, with the very personal being openly and un-selfconsciously displayed. By printing all the images uploaded in a 24-hour period, I visualise the feeling of drowning in representations of other peoples’ experiences.”

Humbling, and certainly thought-provoking, Kessels’ work challenges the notion that everything can and should be shared, which has become fundamental to the modern web. Then again, perhaps it’s only wasteful and overwhelming when you print all the pictures and divorce them from their original context.

Tuesday, November 08. 2011

The Big Picture: True Machine Intelligence & Predictive Power

-----

At the beginning of last week, I launched GreedAndFearIndex - a SaaS platform that automatically reads thousands of financial news articles daily to deduce what companies are in the news and whether financial sentiment is positive or negative.

It’s an app built largely on Scala, with MongoDB and Akka playing prominent roles to be able to deal with the massive amounts of data on a relatively small and cheap amount of hardware.

The app itself took about 4-5 weeks to build, although the underlying technology in terms of web crawling, data cleansing/normalization, text mining, sentiment analysis, name recognition, language grammar comprehension such as subject-action-object resolution and the underlying “God”-algorithm that underpins it all took considerably longer to get right.
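The post does not describe the algorithm, so the following is only a toy illustration of what "which companies are in the news and whether sentiment is positive or negative" can mean in its very simplest form: a hand-made word list plus a substring match on company names. It is written in Python rather than the Scala the app uses, and the word lists, the company names and the function name `score_headline` are all hypothetical.

```python
import re

# Hypothetical, hand-picked word lists; purely illustrative.
POSITIVE = {"gain", "gains", "surge", "beat", "beats", "profit", "upgrade"}
NEGATIVE = {"loss", "losses", "plunge", "miss", "misses", "lawsuit", "downgrade"}
COMPANIES = ("Acme Corp", "Globex")  # made-up company names


def score_headline(headline):
    """Return (companies mentioned, sentiment score) for one headline.

    The score is (#positive words - #negative words): above zero reads as
    positive sentiment, below zero as negative.
    """
    lowered = headline.lower()
    words = re.findall(r"[a-z]+", lowered)
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    mentioned = [c for c in COMPANIES if c.lower() in lowered]
    return mentioned, score
```

A word-counting approach like this ignores grammar entirely, which is presumably why steps such as subject-action-object resolution appear in the list above.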

Doing it all was not only lots of late nights of coding, but also reading more academic papers than I ever did at university, not only on machine learning but also on neuroscience and research on the human neocortex.

What I am getting at is that financial news and sentiment analysis might be a good showcase and the beginning, but it is only part of a bigger picture and problem to solve.

Unlocking True Machine Intelligence & Predictive Power

The human brain is an amazing pattern matching & prediction machine - in terms of being able to pull together, associate, correlate and understand causation between disparate, seemingly unrelated strands of information it is unsurpassed in nature and also makes much of what has passed for “Artificial Intelligence” look like a joke.

However, the human brain is also severely limited: it is slow, its immediate memory is small, and we can famously only keep track of 7 (±2) things at any one time unless we put considerable effort into it. We are awash in amounts of data, information and noise that our brain is evolutionarily not yet adapted to deal with.

So what I’m working on is not just a SaaS sentiment analysis tool; it is the first step toward a bigger picture (which, admittedly, I may not solve, or not solve in my lifetime):

What if we could make machines match our own ability to find patterns based on seemingly unrelated data, but far quicker and with far more than 5-9 pieces of information at a time?

What if we could accurately predict the movements of financial markets, the best price point for a product, the likelihood of natural disasters, the spreading patterns of infectious diseases or even unlock the secrets of solving disease and aging themselves?

The Enablers

I see a number of enablers that are making this future a real possibility within my lifetime:

- Advances in neuroscience: our understanding of the human brain is getting better year by year, the fact that we can now look inside the brain on a very small scale and that we are starting to build a basic understanding of the neocortex will be the key to the future of machine learning. Computer Science and Neuroscience must intermingle to a higher degree to further both fields.

- Cloud Computing, parallelism & increased computing power: Computing power is cheaper than ever with the cloud, the software to take advantage of multi-core computers is finally starting to arrive and Moore’s law is still advancing as ever (the latest generation of MacBook Pros have roughly 2.5 times the performance of my barely 2-year-old MBP).

- “Big Data”: the data needed to both train and apply the next generation of machine learning algorithms is abundantly available to us. It is no longer locked away in the silos of corporations or the pages of paper archives; it’s available and accessible to anyone online.

- Crowdsourcing: There are two things that are very time intensive when working with machine learning - training the algorithms, and once in production, providing them with feedback (“on the job training”) to continually improve and correct. The internet and crowdsourcing lower the barriers immensely. Digg, Reddit, Tweetmeme, DZone are all early examples of simplistic crowdsourcing with little learning, but where participants have a personal interest in participating in the crowdsourcing. Combine that with machine learning and you have a very powerful tool at your disposal.

Babysteps & The Perfect Storms

All things considered, I think we are getting closer to the perfect storm of taking machine intelligence out of the dark ages where it has lingered far too long and quite literally into a brave new world where one day we may struggle to distinguish machine from man and artificial intelligence from biological intelligence.

It will be a road fraught with setbacks, trial and error where the errors will seem insurmountable, but we’ll eventually get there one babystep at a time.

I’m betting on it and the first natural step is predictive analytics & adaptive systems able to automatically detect and solve problems within well-defined domains.

Friday, July 29. 2011

New Geographic Data Analysis Gives Historians a Futuristic Window Into the Past

Via POPSCI

By Rebecca Boyle

-----

"Spatial humanities," the future of history

Even using the most detailed sources, studying history often requires a great imagination, so historians can visualize what the past looked and felt like. Now, new computer-assisted data analysis can help them really see it.

Geographic Information Systems, which can analyze information related to a physical location, are helping historians and geographers study past landscapes like Gettysburg, reconstructing what Robert E. Lee would have seen from Seminary Ridge. Researchers are studying the parched farmlands of the 1930s Dust Bowl, and even reconstructing scenes from Shakespeare’s 17th-century London.

But far from simply adding layers of complexity to historical study, GIS-enhanced landscape analysis is leading to new findings, the New York Times reports. Historians studying the Battle of Gettysburg have shed light on the tactical decisions that led to the turning point in the Civil War. And others examining records from the Dust Bowl era have found that extensive and irresponsible land use was not necessarily to blame for the disaster.

GIS has long been used by city planners who want to record changes to the landscape over time. And interactive map technology like Google Maps has led to several new discoveries. But by analyzing data that describes the physical attributes of a place, historians are finding answers to new questions.

Anne Kelly Knowles and colleagues at Middlebury College in Vermont culled information from historical maps, military documents explaining troop positions, and even paintings to reconstruct the Gettysburg battlefield. The researchers were able to explain what Robert E. Lee could and could not see from his vantage points at the Lutheran seminary and on Seminary Hill. He probably could not see the Union forces amassing on the eastern side of the battlefield, which helps explain some of his tactical decisions, Knowles said.
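The visibility question here (what could be seen from a given vantage point?) is, at its simplest, a line-of-sight test over terrain elevations. The following is a minimal sketch of that idea on a one-dimensional elevation profile. It is not the method or software the Middlebury researchers used; the function name, the sample elevations and the observer height are all made-up assumptions.

```python
def visible_from_start(elevations, observer_height=2.0):
    """For a 1-D elevation profile sampled at equal spacing, return a list of
    booleans: True where the ground at that sample is visible to an observer
    standing at index 0 with eyes `observer_height` above the ground."""
    eye = elevations[0] + observer_height
    visible = [True]  # the observer's own position
    max_slope = float("-inf")  # steepest sight line to any nearer sample
    for distance, ground in enumerate(elevations[1:], start=1):
        slope = (ground - eye) / distance
        # A sample is visible only if its sight line is at least as steep as
        # every sight line to nearer terrain; otherwise nearer ground blocks it.
        visible.append(slope >= max_slope)
        max_slope = max(max_slope, slope)
    return visible


# Hypothetical profile: a ridge at index 2 hides the lower ground behind it.
profile = [100, 100, 120, 110, 105, 130]
print(visible_from_start(profile))
```

A real viewshed analysis repeats this kind of test in every direction over a two-dimensional elevation grid, which is where GIS software earns its keep.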

Geoff Cunfer at the University of Saskatchewan studied a trove of data from all 208 affected counties in Texas, New Mexico, Colorado, Oklahoma and Kansas — annual precipitation reports, wind direction, agricultural censuses and other data that would have been impossible to sift through without the help of a computer. He learned dust storms were common throughout the 19th century, and that areas that saw nary a tiller blade suffered just as much.

The new data-mapping phenomenon is known as spatial humanities, the Times reports. Check out their story to find out how advanced technology is the future of history.

Wednesday, July 27. 2011

Volume of data darn near indescribable ...

Via Network World

-----

The world's digital data is doubling every two years and the amount created or replicated in 2011 will reach 1.8 zettabytes, a pile so gargantuan that its size can only be rendered understandable to the layman when translated into iPads.

That's right, words no longer suffice; we need something magical for this job.

The problem is presented in a press release touting the fifth annual IDC Digital Universe study, sponsored by EMC. A bar chart, right, and enormous infographic at the bottom of this post will give you an overview of the study's findings. Here we're going to try to get our minds around 1.8 zettabytes. The sentences in bold are from the press release.

In terms of sheer volume, 1.8 zettabytes of data is equivalent to:

**Every person in the United States tweeting 3 tweets per minute for 26,976 years nonstop.**

The trouble here is that even if you know the population of the United States (311 million, give or take) and the length of a tweet (140 characters, maximum) you're still left to plug those pieces into a length of time (26,976 years) that has no meaning to most anyone not a paleontologist.

**Every person in the world having over 215 million high-resolution MRI scans per day.**

Don't worry, I looked it up for you; the world has almost 7 billion people. And while I haven't the foggiest notion as to how much data would be represented by a single high-resolution MRI scan, never mind 215 million of them, I can tell you based on recent experience that the so-called "open MRI machines" are really not all that open.

**Over 200 billion HD movies (each 2 hours in length) - Would take 1 person 47 million years to watch every movie.**

Honestly, that one doesn't help at all.

But look what happens when the study's authors use their handy-dandy zettabyte-to-iPad translator:

**The amount of information needed to fill 57.5 billion 32GB Apple iPads. With that many iPads we could:**

**Create a wall of iPads, 4,005-miles long and 61-feet high extending from Anchorage, Alaska to Miami, Florida.**

There's a map of the United States, and there's a wall of iPads about 10 times my height.

**Build a mountain 25-times higher than Mt. Fuji.**

You don't even need to know the elevation of the mountain (12,388 feet) to form that mental picture.

**Cover 86% of Mexico City.**

Take a big city, cover almost all of it with iPads. Got it.

**Build the Great iPad Wall of China - at twice the average height of the original.**

Bet that baby would be visible from space.

Yes, yes, I understand that this exercise need not be about the iPad at all and that any old 9.5-by-7.31-by-.34-inch box packing 32 GB would do. But what picture of what tablet has been seared into your brain by two-plus years of Apple marketing?

I mean they used to use the Library of Congress for these types of comparisons. Libraries don't stack as well.

(Disclaimer: All of the math here belongs to IDC and EMC; blame me not for any errors.)
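For anyone who does want to check the math, two of the comparisons are easy to reproduce. The sketch below assumes decimal units (1 ZB = 10^21 bytes, 1 GB = 10^9 bytes), one byte per tweet character and a 365.25-day year; none of those conventions is stated in the press release, and the function names are made up for this example.

```python
# Rough check of two press-release comparisons, under the assumptions above.
ZETTABYTE = 10**21
GIGABYTE = 10**9

TOTAL_BYTES = 1.8 * ZETTABYTE


def ipads_needed(total_bytes, ipad_capacity_gb=32):
    """Number of iPads of the given capacity needed to hold total_bytes."""
    return total_bytes / (ipad_capacity_gb * GIGABYTE)


def tweet_bytes(population=311_000_000, tweets_per_minute=3,
                bytes_per_tweet=140, years=26_976):
    """Bytes produced if everyone tweets at the given rate for `years`."""
    minutes = years * 365.25 * 24 * 60
    return population * tweets_per_minute * bytes_per_tweet * minutes


print(f"iPads: {ipads_needed(TOTAL_BYTES) / 1e9:.2f} billion")
print(f"Tweets: {tweet_bytes() / ZETTABYTE:.2f} ZB")
```

Under these assumptions the tweet comparison comes out at roughly 1.85 zettabytes, in line with the headline figure, while the iPad count comes out at about 56 billion rather than 57.5 billion. The gap presumably reflects rounding of the 1.8 figure or a different unit convention, though that is a guess.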

Tuesday, July 19. 2011

Time-lapse video shows how iPhones move across Europe

Via geek.com

-----

Back in April, the news of Apple’s somewhat-secret iPhone location-data-tracking broke to much disapproval. The Internet was abuzz with outraged iPhone users concerned about their privacy. With a few months’ time, the dust has settled a bit, and a few people have even figured out ways to put this technology to good use.

Take Crowdflow’s Michael Kreil for example. Kreil aggregated location data collected from 880 iPhones all across Europe during April 2011, and then visualized it by creating an amazing time-lapse video. We’re able to see how iPhone customers move across the different countries in Europe. The video definitely has a psychedelic feel to it with its bright undulating lights flying around the eye-catching-colored maps of Europe.

The map style resembles a bunch of fireflies buzzing about Europe. The lights fade out and back in as data collection stops and resumes, presumably at night, when we tend to turn off our phones before going to sleep. Kreil said that most iPhones don’t collect data at night since the owner is typically not moving. Because of this, the image becomes blurry at night and the lights dissolve.

Kreil said that he couldn’t decide on a color scheme, so he made three videos of the same data, but in different colors. We chose our favorite below, but make sure to watch the others if you have a different color preference. We also recommend watching the videos in full HD and in full-screen mode.

He also noted that he’d like to see the same project applied to the entire globe, which makes our little time-lapse-loving geek hearts flutter with excitement.

-----

More videos @ CrowdFlow
