Entries tagged as artificial intelligence

Friday, May 03. 2013

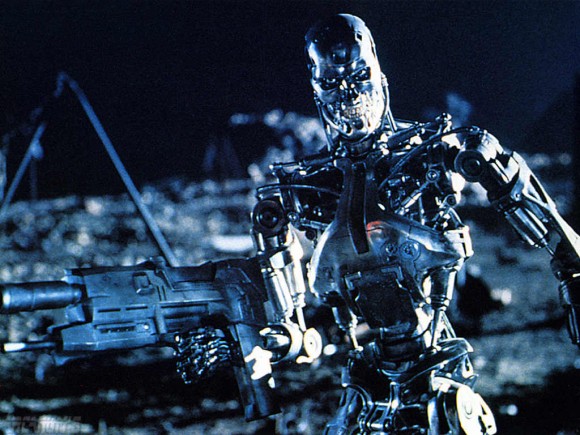

UN Reports on Killer Robots

Via InventorSpot

-----

How serious is the threat of killer robots? Well, it depends on whom you ask. Some people will tell you that the threat is very real, and I don’t mean the guy with the tinfoil hat standing on the street corner. A new draft report coming out of the U.N. Human Rights Commission looks to head off the possible threat posed by unmanned vehicles with the ability to end human life without the intervention of another human being. As you can guess, the UN is against killer robots.

In the 22-page report, which was released online as a PDF, the Human Rights Commission explained the mission of the document in the following terms:

“Lethal autonomous robotics (LARs) are weapon systems that, once activated, can select and engage targets without further human intervention. They raise far-reaching concerns about the protection of life during war and peace. This includes the question of the extent to which they can be programmed to comply with the requirements of international humanitarian law and the standards protecting life under international human rights law. Beyond this, their deployment may be unacceptable because no adequate system of legal accountability can be devised, and because robots should not have the power of life and death over human beings. The Special Rapporteur recommends that States establish national moratoria on aspects of LARs, and calls for the establishment of a high level panel on LARs to articulate a policy for the international community on the issue.”

So it looks like you may just have to watch the skies after all.

Thursday, May 02. 2013

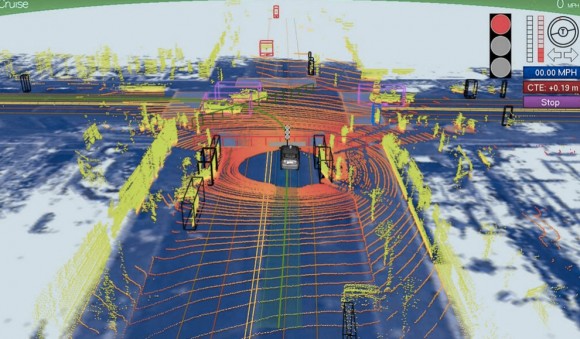

Driving Miss dAIsy: What Google’s self-driving cars see on the road

Via Slash Gear

-----

We’ve been hearing a lot about Google’s self-driving car lately, and we’re all probably wanting to know how exactly the search giant is able to build a car that drives itself without hitting anything or anyone. A new photo has surfaced that demonstrates what Google’s self-driving vehicles see while they’re out on the town, and it looks rather frightening.

The image was tweeted by Idealab founder Bill Gross, along with a claim that the self-driving car collects almost 1GB of data every second (yes, every second). This data includes imagery of the car’s surroundings in order to effectively and safely navigate roads. The image shows that the car sees its surroundings through an infrared-like camera sensor, and it can even pick out people walking on the sidewalk.

Of course, 1GB of data every second isn’t too surprising when you consider that the car has to get a 360-degree image of its surroundings at all times. The image we see above even distinguishes different objects by color and shape. For instance, pedestrians are in bright green, cars are shaped like boxes, and the road is in dark blue.

However, we’re not sure where this photo came from, so it could simply be a rendering of someone’s idea of what Google’s self-driving car sees. Either way, Google says that we could see self-driving cars make their way to public roads in the next five years or so, which actually isn’t that far off, and Tesla Motors CEO Elon Musk is even interested in developing self-driving cars as well. However, they certainly don’t come without their problems, and we’re guessing that the first batch of self-driving cars probably won’t be in 100% tip-top shape.

Tuesday, December 04. 2012

The Relevance of Algorithms

By Tarleton Gillespie

-----

I’m really excited to share my new essay, “The Relevance of Algorithms,” with those of you who are interested in such things. It’s been a treat to get to think through the issues surrounding algorithms and their place in public culture and knowledge, with some of the participants in Culture Digitally (here’s the full litany: Braun, Gillespie, Striphas, Thomas, the third CD podcast, and Anderson’s post just last week), as well as with panelists and attendees at the recent 4S and AoIR conferences, with colleagues at Microsoft Research, and with all of you who are gravitating towards these issues in your scholarship right now.

The motivation of the essay was two-fold: first, in my research on online platforms and their efforts to manage what they deem to be “bad content,” I’m finding an emerging array of algorithmic techniques being deployed: for either locating and removing sex, violence, and other offenses, or (more troublingly) for quietly choreographing some users away from questionable materials while keeping them available for others. Second, I’ve been helping to shepherd along this anthology, and wanted my contribution to be in the spirit of its aims: to take one step back from my research to articulate an emerging issue of concern or theoretical insight that (I hope) will be of value to my colleagues in communication, sociology, science & technology studies, and information science.

The anthology will ideally be out in Fall 2013. And we’re still finalizing the subtitle. So here’s the best citation I have.

Gillespie, Tarleton. “The Relevance of Algorithms.” Forthcoming in Media Technologies, ed. Tarleton Gillespie, Pablo Boczkowski, and Kirsten Foot. Cambridge, MA: MIT Press.

Below is the introduction, to give you a taste.

Algorithms play an increasingly important role in selecting what information is considered most relevant to us, a crucial feature of our participation in public life. Search engines help us navigate massive databases of information, or the entire web. Recommendation algorithms map our preferences against others, suggesting new or forgotten bits of culture for us to encounter. Algorithms manage our interactions on social networking sites, highlighting the news of one friend while excluding another’s. Algorithms designed to calculate what is “hot” or “trending” or “most discussed” skim the cream from the seemingly boundless chatter that’s on offer. Together, these algorithms not only help us find information, they provide a means to know what there is to know and how to know it, to participate in social and political discourse, and to familiarize ourselves with the publics in which we participate. They are now a key logic governing the flows of information on which we depend, with the “power to enable and assign meaningfulness, managing how information is perceived by users, the ‘distribution of the sensible.’” (Langlois 2012)

Algorithms need not be software: in the broadest sense, they are encoded procedures for transforming input data into a desired output, based on specified calculations. The procedures name both a problem and the steps by which it should be solved. Instructions for navigation may be considered an algorithm, or the mathematical formulas required to predict the movement of a celestial body across the sky. “Algorithms do things, and their syntax embodies a command structure to enable this to happen” (Goffey 2008, 17). We might think of computers, then, fundamentally as algorithm machines — designed to store and read data, apply mathematical procedures to it in a controlled fashion, and offer new information as the output.

But as we have embraced computational tools as our primary media of expression, and have made not just mathematics but all information digital, we are subjecting human discourse and knowledge to these procedural logics that undergird all computation. And there are specific implications when we use algorithms to select what is most relevant from a corpus of data composed of traces of our activities, preferences, and expressions.

These algorithms, which I’ll call public relevance algorithms, are — by the very same mathematical procedures — producing and certifying knowledge. The algorithmic assessment of information, then, represents a particular knowledge logic, one built on specific presumptions about what knowledge is and how one should identify its most relevant components. That we are now turning to algorithms to identify what we need to know is as momentous as having relied on credentialed experts, the scientific method, common sense, or the word of God.

What we need is an interrogation of algorithms as a key feature of our information ecosystem (Anderson 2011), and of the cultural forms emerging in their shadows (Striphas 2010), with a close attention to where and in what ways the introduction of algorithms into human knowledge practices may have political ramifications. This essay is a conceptual map to do just that. I will highlight six dimensions of public relevance algorithms that have political valence:

1. Patterns of inclusion: the choices behind what makes it into an index in the first place, what is excluded, and how data is made algorithm ready

2. Cycles of anticipation: the implications of algorithm providers’ attempts to thoroughly know and predict their users, and how the conclusions they draw can matter

3. The evaluation of relevance: the criteria by which algorithms determine what is relevant, how those criteria are obscured from us, and how they enact political choices about appropriate and legitimate knowledge

4. The promise of algorithmic objectivity: the way the technical character of the algorithm is positioned as an assurance of impartiality, and how that claim is maintained in the face of controversy

5. Entanglement with practice: how users reshape their practices to suit the algorithms they depend on, and how they can turn algorithms into terrains for political contest, sometimes even to interrogate the politics of the algorithm itself

6. The production of calculated publics: how the algorithmic presentation of publics back to themselves shapes a public’s sense of itself, and who is best positioned to benefit from that knowledge.

Considering how fast these technologies and the uses to which they are put are changing, this list must be taken as provisional, not exhaustive. But as I see it, these are the most important lines of inquiry into understanding algorithms as emerging tools of public knowledge and discourse.

It would also be seductively easy to get this wrong. In attempting to say something of substance about the way algorithms are shifting our public discourse, we must firmly resist putting the technology in the explanatory driver’s seat. While recent sociological study of the Internet has labored to undo the simplistic technological determinism that plagued earlier work, that determinism remains an alluring analytical stance. A sociological analysis must not conceive of algorithms as abstract, technical achievements, but must unpack the warm human and institutional choices that lie behind these cold mechanisms. I suspect that a more fruitful approach will turn as much to the sociology of knowledge as to the sociology of technology — to see how these tools are called into being by, enlisted as part of, and negotiated around collective efforts to know and be known. This might help reveal that the seemingly solid algorithm is in fact a fragile accomplishment.

~ ~ ~

Here is the full article [PDF]. Please feel free to share it, or point people to this post.

Thursday, September 22. 2011

Dr. Watson - Come Here - I Need You

Via big think

By Dominic Basulto

-----

The next time you go to the doctor, you may be dealing with a supercomputer rather than a human. Watson, the groundbreaking artificial intelligence machine from IBM that took on Jeopardy! champions, is about to get its first real-world application in the healthcare sector. In partnership with health benefits company WellPoint, Watson will soon be diagnosing medical cases, and not just the everyday cases, either. The vision is for Watson to be working hand-in-surgical-glove with oncologists to diagnose and treat cancer in patients.

The WellPoint clinical trial, which could roll out as early as 2012, is exciting proof that supercomputing intelligence, when properly harnessed, can lead to revolutionary breakthroughs in complex fields like medicine. At a time when talk about reforming the healthcare system is primarily about the creation of digital health records, the integration of Watson into the healthcare industry could really shake things up. By some accounts, Watson is able to process as many as 200 million pages of medical information in seconds – giving it a number-crunching head start on doctors for diagnosing cases. In one test case cited by WellPoint, Watson was able to diagnose a rare form of an illness within seconds – a case that had left doctors baffled.

While having super-knowledgeable medical experts on call is exciting, it also raises several thorny issues. At what point – if ever - would you ask for a “second opinion” on your medical condition from a human doctor? Will “Watson” ever be included in the names of physicians included in your HMO listings? And, perhaps most importantly, can supercomputers ever provide the type of bedside manner that we are accustomed to in our human doctors?

This last question has attracted much attention from medical practitioners and health industry thought leaders alike. Abraham Verghese, a professor at the Stanford University School of Medicine as well as bestselling author, has been particularly outspoken about the inability of computers to provide the type of medical handholding that we are used to from human doctors. Verghese claims that the steady digitization of records and clinical data is reducing every patient to an "iPatient" – simply a set of digital 1’s and 0’s that can be calculated, crunched, and computed.

Forget whether androids dream of digital sheep – can they take a digital Hippocratic Oath?

Given that the cost of healthcare is simply too high, as a society we will need to accept some compromises. Once the healthcare industry is fully digitized, supercomputers like Watson could offer a more cost-effective way to sift through the ever-growing amount of medical information and provide real-time medical analysis that could save lives. If Watson also brings a significant improvement in patient treatment, it’s clear that the world of medicine will never be the same again. Right now, IBM envisions Watson supplementing, not actually replacing, doctors. But the time is coming when nurses across the nation will be saying, “Watson - Come Here - I Need You,” instead of turning to doctors whenever they need a sophisticated medical evaluation of a patient.

Friday, September 16. 2011

Data Analytics: Crunching the Future

The technicians at SecureAlert’s monitoring center in Salt Lake City sit in front of computer screens filled with multicolored dots. Each dot represents someone on parole or probation wearing one of the company’s location-reporting ankle cuffs. As the people move around a city, their dots move around the map. “It looks a bit like an animated gumball machine,” says Steven Florek, SecureAlert’s vice-president of offender insights and knowledge management. As long as the gumballs don’t go where they’re not supposed to, all is well.

The company works with law enforcement agencies around the U.S. to keep track of about 15,000 ex-cons, meaning it must collect and analyze billions of GPS signals transmitted by the cuffs each day. The more traditional part of the work consists of making sure that people under house arrest stay in their houses. But advances in the way information is collected and sorted mean SecureAlert isn’t just watching; the company says it can actually predict when a crime is about to go down. If that sounds like the “pre-cogs”—crime prognosticators—in the movie Minority Report, Florek thinks so, too. He calls SecureAlert’s newest capability “pre-crime” detection.

Using data from the ankle cuffs and other sources, SecureAlert identifies patterns of suspicious behavior. A person convicted of domestic violence, for example, might get out of jail and set up a law-abiding routine. Quite often, though, SecureAlert’s technology sees such people backslide and start visiting the restaurants or schools or other places their victims frequent. “We know they’re looking to force an encounter,” Florek says. If the person gets too close for comfort, he says, “an alarm goes off and a flashing siren appears on the screen.” The system doesn’t go quite as far as Minority Report, where the cops break down doors and blow away the perpetrators before they perpetrate. Rather, the system can call an offender through a two-way cellphone attached to the ankle cuff to ask what the person is doing, or set off a 95-decibel shriek as a warning to others. More typically, the company will notify probation officers or police about the suspicious activity and have them investigate. Presumably with weapons holstered. “It’s like a strategy game,” Florek says. (Before Bloomberg Businessweek went to press, Florek left the company for undisclosed reasons.)
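As a rough illustration of the "too close for comfort" check described above, here is a minimal Python sketch. It is not SecureAlert's system: the function names (`haversine_m`, `zones_breached`), the zone names, the coordinates, and the radii are all made up for the example. It simply measures the great-circle distance between a reported GPS position and a set of exclusion zones and lists any zone the wearer has entered.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def zones_breached(position, exclusion_zones):
    """Return the names of all zones whose radius contains the position.

    `exclusion_zones` maps a name to (latitude, longitude, radius in metres).
    """
    lat, lon = position
    return [
        name
        for name, (zlat, zlon, radius_m) in exclusion_zones.items()
        if haversine_m(lat, lon, zlat, zlon) <= radius_m
    ]


if __name__ == "__main__":
    # Hypothetical zones: (latitude, longitude, radius in metres).
    zones = {
        "victim_workplace": (40.7608, -111.8910, 300),
        "school": (40.7700, -111.9000, 500),
    }
    latest_fix = (40.7610, -111.8912)  # made-up GPS reading from a cuff
    breached = zones_breached(latest_fix, zones)
    if breached:
        print("ALERT: inside", ", ".join(breached))
```

In practice the hard part is the one Florek describes later, deciding which breaches deserve a human's attention, which a simple radius test like this cannot do on its own.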

It didn’t used to be that a company the size of SecureAlert, with about $16 million in annual revenue, could engage in such a real-world chess match. For decades, only Fortune 500-scale corporations and three-letter government agencies had the money and resources to pull off this kind of data crunching. Wal-Mart Stores (WMT) is famous for using data analysis to adjust its inventory levels and prices. FedEx (FDX) earned similar respect for tweaking its delivery routes, while airlines and telecommunications companies used this technology to pinpoint and take care of their best customers. But even at the most sophisticated corporations, data analytics was often a cumbersome, ad hoc affair. Companies would pile information in “data warehouses,” and if executives had a question about some demographic trend, they had to supplicate “data priests” to tease the answers out of their costly, fragile systems. “This resulted in a situation where the analytics were always done looking in the rearview mirror,” says Paul Maritz, chief executive officer of VMware (VMW). “You were reasoning over things to find out what happened six months ago.”

In the early 2000s a wave of startups made it possible to gather huge volumes of data and analyze it in record speed—à la SecureAlert. A retailer such as Macy’s (M) that once pored over last season’s sales information could shift to looking instantly at how an e-mail coupon for women’s shoes played out in different regions. “We have a banking client that used to need four days to make a decision on whether or not to trade a mortgage-backed security,” says Charles W. Berger, CEO of ParAccel, a data analytics startup founded in 2005 that powers SecureAlert’s pre-crime operation. “They do that in seven minutes now.”

Now a second wave of startups is finding ways to use cheap but powerful servers to analyze new categories of data such as blog posts, videos, photos, tweets, DNA sequences, and medical images. “The old days were about asking, ‘What is the biggest, smallest, and average?’” says Michael Olson, CEO of startup Cloudera. “Today it’s, ‘What do you like? Who do you know?’ It’s answering these complex questions.”

The big bang in data analytics occurred in 2006 with the release of an open-source system called Hadoop. The technology was created by a software consultant named Doug Cutting, who had been examining a series of technical papers released by Google (GOOG). The papers described how the company spread tremendous amounts of information across its data centers and probed that pool of data for answers to queries. Where traditional data warehouses crammed as much information as possible on a few expensive computers, Google chopped up databases into bite-size chunks and sprinkled them among tens of thousands of cheap computers. The result was a lower-cost and higher-capacity system that lots of people can use at the same time. Google uses the technology throughout its operations. Its systems study billions of search results, match them to the first letters of a query, take a guess at what people are looking for, and display suggestions as they type. You can see the bite-size nature of the technology in action on Google Maps as tiny tiles come together to form a full map.
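The chop-it-up-and-spread-it-out idea can be sketched in a few lines of Python. This is only a toy illustration, not Hadoop's or Google's actual code: the function names (`split_into_chunks`, `count_chunk`, `merge_counts`, `chunked_word_count`) and the sample queries are assumptions made for the example, and a thread pool on one machine stands in for the thousands of cheap computers.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(records, chunk_size):
    """Chop a large list of records into bite-size chunks."""
    return [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]


def count_chunk(chunk):
    """'Map' step: each worker counts the words in its own chunk only."""
    counts = Counter()
    for record in chunk:
        counts.update(record.lower().split())
    return counts


def merge_counts(partial_counts):
    """'Reduce' step: combine the per-chunk results into one answer."""
    total = Counter()
    for partial in partial_counts:
        total.update(partial)
    return total


def chunked_word_count(records, chunk_size=2, workers=4):
    """Count words across all records by processing chunks independently."""
    chunks = split_into_chunks(records, chunk_size)
    # Threads stand in for separate machines in this sketch.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(count_chunk, chunks))
    return merge_counts(partials)


if __name__ == "__main__":
    queries = ["cheap camera", "camera bag", "cheap flights", "camera lens", "cheap camera lens"]
    print(chunked_word_count(queries).most_common(2))
```

Because each chunk is counted without looking at any other chunk, adding more workers (or, in the real systems, more machines) is what lets the approach scale.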

Cutting created Hadoop to mimic Google’s technology so the rest of the world could have a way to sift through massive data sets quickly and cheaply. (Hadoop was the name of his son’s toy elephant.) The software first took off at Web companies such as Yahoo! (YHOO) and Facebook and then spread far and wide, with Walt Disney (DIS), the New York Times, Samsung, and hundreds of others starting their own projects. Cloudera, where Cutting, 48, now works, makes its own version of Hadoop and has sales partnerships with Hewlett-Packard (HPQ) and Dell (DELL).

Dozens of startups are trying to develop easier-to-use versions of Hadoop. For example, Datameer, in San Mateo, Calif., has built an Excel-like dashboard that allows regular business people, instead of data priests, to pose questions. “For 20 years you had limited amounts of computing and storage power and could only ask certain things,” says Datameer CEO Stefan Groschupf. “Now you just dump everything in there and ask whatever you want.” Top venture capital firms Kleiner Perkins Caufield & Byers and Redpoint Ventures have backed Datameer, while Accel Partners, Greylock Partners, and In-Q-Tel, the investment arm of the CIA, have helped finance Cloudera.

Past technology worked with data that fell neatly into rows and columns—purchase dates, prices, the location of a store. Amazon.com (AMZN), for instance, would use traditional systems to track how many people bought a certain type of camera and for what price. Hadoop can handle data that don’t fit into spreadsheets. That ability, combined with Hadoop’s speedy divide-and-conquer approach to data, lets users get answers to questions they couldn’t even ask before. Retailers can dig into not just what people bought but why they bought it. Amazon can (and does) analyze its website logs to see what other items people look at before they buy that camera, how long they look at them, whether certain colors on a Web page generate more sales—and synthesize all that into real-time intelligence. Are they telling their friends about that camera? Is some new model poised to be the next big hit? “These insights don’t come super easily, but the information is there, and we do have the machine power now to process it and search for it,” says James Markarian, chief technology officer at data specialist Informatica (INFA).

Take the case of U.S. Xpress Enterprises, one of the largest private trucking companies. Through a device installed in the cabs of its 10,000-truck fleet, U.S. Xpress can track a driver’s location, how many times the driver has braked hard in the last few hours, if he sent a text message to the customer saying he would be late, and how long he rested. U.S. Xpress pays particular attention to the fuel economy of each driver, separating out the “guzzlers from the misers,” says Timothy Leonard, U.S. Xpress CTO. Truckers keep the engines running and the air conditioning on after they’ve pulled over for the night. “If you have a 10-hour break, we want your AC going for the first two hours at 70 degrees so you can go to sleep,” says Leonard. “After that, we want it back up to 78 or 79 degrees.” By adjusting the temperature, U.S. Xpress has lowered annual fuel consumption by 62 gallons per truck, which works out to a total of about $24 million per year. Less numerically, the company’s systems also analyze drivers’ tweets and blog posts. “We have a sentiment dashboard that monitors how they are feeling,” Leonard says. “If we see they hate something, we can respond with some new software or policies in a few hours.” The monitoring may come off as Big Brotherish, but U.S. Xpress sees it as key to keeping its drivers from quitting. (Driver turnover is a chronic issue in the trucking business.)

How are IBM (IBM) and the other big players in the data warehousing business responding to all this? In the usual way: They’re buying startups. Last year, IBM bought Netezza for $1.7 billion. HP, EMC (EMC), and Teradata (TDC) have also acquired data analytics companies in the past 24 months.

It’s not going too far to say that data analytics has even gotten hip. The San Francisco offices of startup Splunk have all the of-the-moment accoutrements you’d find at Twitter or Zynga. The engineers work in what amounts to a giant living room with pinball machines, foosball tables, and Hello Kitty-themed cubes. Weekday parties often break out; during a recent visit, it was a Mexican fiesta. Employees were wearing sombreros and fake moustaches while a dude near the tequila bar played the bongos.

Splunk got its start as a type of nuts-and-bolts tool in data centers, giving administrators a way to search through data tied to the low-level operations of computers and software. The company indexes “machine events”—the second-by-second records produced by computing devices to keep track of their actions. This could include records of every time a server stores information, or it could be the length of a cell phone call and what type of handset was used. Splunk helps companies search through this morass, looking for events that caused problems or stood out as unusual. “We can see someone visit a shopping website from a certain computer, see that they got an error message while on the lady’s lingerie page, see how many times they tried to log in, where they went after, and what machine in some far-off data center caused the problem,” says Erik Swan, CTO and co-founder of Splunk. While it started as troubleshooting software for data centers, the company has morphed into an analysis tool that can be aimed at fine-tuning fraud detection systems at credit-card companies and measuring the success of online ad campaigns.
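To give a feel for what indexing and searching "machine events" involves, here is a small Python sketch. It does not use or imitate Splunk's actual software or query language; the event fields, host names, and the helper functions `build_index` and `search` are hypothetical. Each event is a dictionary of fields, the index maps every field/value pair to the events that contain it, and a search intersects those sets.

```python
from collections import defaultdict


def build_index(events):
    """Map each (field, value) pair to the positions of the events containing it."""
    index = defaultdict(set)
    for position, event in enumerate(events):
        for field, value in event.items():
            index[(field, value)].add(position)
    return index


def search(events, index, **criteria):
    """Return events matching every field=value criterion, in original order."""
    matches = set(range(len(events)))
    for field, value in criteria.items():
        matches &= index.get((field, value), set())
    return [events[i] for i in sorted(matches)]


if __name__ == "__main__":
    # Made-up machine events for illustration.
    events = [
        {"time": "10:00:01", "host": "web-1", "page": "/shoes", "status": 200},
        {"time": "10:00:02", "host": "web-2", "page": "/lingerie", "status": 500},
        {"time": "10:00:05", "host": "web-2", "page": "/login", "status": 401},
        {"time": "10:00:09", "host": "web-2", "page": "/lingerie", "status": 200},
    ]
    index = build_index(events)
    for event in search(events, index, host="web-2", status=500):
        print(event)
```

Building the index once and then answering many different questions against it is the general pattern; a real product adds time ranges, free-text search, and far larger scale.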

A few blocks away from Splunk’s office are the more sedate headquarters of IRhythm Technologies, a medical device startup. IRhythm makes a type of oversize, plastic band-aid called the Zio Patch that helps doctors detect cardiac problems before they become fatal. Patients affix the Zio Patch to their chests for two weeks to measure their heart activity. The patients then mail the devices back to IRhythm’s offices, where a technician feeds the information into Amazon’s cloud computing service. Patients typically wear rivals’ much chunkier devices for just a couple of days and remove them when they sleep or shower—which happen to be when heart abnormalities often manifest. The upside of the waterproof Zio Patch is the length of time that people wear it—but 14 days is a whole lot of data.

IRhythm’s Hadoop system chops the 14-day periods into chunks and analyzes them with algorithms. Unusual activity gets passed along to technicians who flag worrisome patterns to doctors. For quality control of the device itself, IRhythm uses Splunk. The system monitors the strength of the Zio Patch’s recording signals, whether hot weather affects its adhesiveness to the skin, or how long a patient actually wore the device. On the Zio Patch manufacturing floor, IRhythm discovered that operations at some workstations were taking longer than expected. It used Splunk to go back to the day when the problems cropped up and discovered a computer glitch that was hanging up the operation.

Mark Day, IRhythm’s vice-president of research and development, says he’s able to fine-tune his tiny startup’s operations the way a world-class manufacturer like Honda Motor (HMC) or Dell could a couple years ago. Even if he could have afforded the old-line data warehouses, they were too inflexible to provide much help. “The problem with those systems was that you don’t know ahead of time what problems you will face,” Day says. “Now, we just adapt as things come up.”

At SecureAlert, Florek says that despite the much-improved tools, extracting useful meaning from data still requires effort—and in his line of work, sensitivity. If some ankle-cuff-wearing parolee wanders out-of-bounds, there’s a human in the process to make a judgment call. “We are constantly tuning our system to achieve a balance between crying wolf and catching serious situations,” he says. “Sometimes a guy just goes to a location because he got a new girlfriend.”

Monday, September 12. 2011

Narrative Science Is a Computer Program That Can Write

Via Nexus 404

by J Angelo Racoma

-----

Automatic content generators are the scourge of most legitimate writers and publishers, especially when they take original content, spin it around, and generate a mashup that is obviously based on something else. An app made by computer science and journalism experts, though, uses artificial intelligence to write like a human.

Developers at Northwestern University’s Intelligent Information Laboratory have come up with a program called Narrative Science, which composes articles and reports based on the data, facts, and styles plugged in. The application is the product of more than 10 years’ work by Kris Hammond and Larry Birnbaum, who are both professors of journalism and computer science at the university.
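How Narrative Science actually turns data into prose is not described here, so the following Python sketch should be read only as the simplest possible version of the general idea: pick words based on the numbers, then fill in a sentence template. The function names (`describe_margin`, `game_recap`), the margin thresholds, and the sample box score are all invented for the example.

```python
def describe_margin(margin):
    """Pick a verb that matches how lopsided the game was."""
    if margin >= 20:
        return "routed"
    if margin >= 10:
        return "beat"
    if margin >= 4:
        return "edged past"
    return "squeaked by"


def game_recap(game):
    """Turn a dict of box-score facts into a short recap."""
    (team_a, score_a), (team_b, score_b) = game["scores"].items()
    if score_a == score_b:
        return f"{team_a} and {team_b} tied {score_a}-{score_b} on {game['date']}."
    winner, loser = (team_a, team_b) if score_a > score_b else (team_b, team_a)
    high, low = max(score_a, score_b), min(score_a, score_b)
    verb = describe_margin(high - low)
    sentence = f"{winner} {verb} {loser} {high}-{low} on {game['date']}."
    star = game.get("top_scorer")
    if star:
        sentence += f" {star['name']} led all scorers with {star['points']} points."
    return sentence


if __name__ == "__main__":
    # Made-up box score for illustration.
    game = {
        "date": "Saturday",
        "scores": {"Wildcats": 78, "Badgers": 65},
        "top_scorer": {"name": "J. Smith", "points": 24},
    }
    print(game_recap(game))
```

A template this rigid would read as machine-written after a few articles; whatever the real system does to vary style and tone is where the ten years of work presumably went.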

Artificial vs Human Intelligence

Narrative Science is currently being used by 20 companies. Examples of its work include post-game reports for collegiate athletics events and articles for medical journals, and the software can compose an entire, unique article in about 60 seconds or so. What’s striking is that even language experts say you won’t know the difference between the software and an actual human in terms of style, tone and usage. The developers have recently received $6 million in venture capital, which indicates that the technology has potential in business and revenue-generating applications.

AI today is becoming increasingly sophisticated at understanding and generating language. It has come a long way from spewing out pre-encoded responses from a list of sentences and words in reaction to keywords. Narrative Science can actually compose paragraphs using a human-like voice. The question here is whether the technology will undermine traditional journalism. Will AI simply assist humans in doing research and delivering content? Or, will AI eventually replace human beings in reporting the news, generating editorials and even communicating with other people?

What Does it Mean for Journalism and the Writing Profession?

Perhaps the main indicator here will be cost. Narrative Science charges about $10 per 500-word article, which is not really far from what human copywriters might charge for content. Once this technology becomes popular with newspapers and other publications, will this mean writers and journalists, tech bloggers included, need to find a new career? It seems it’s not just manufacturing jobs that are prone to being replaced by machines. Maybe we can just input a few keywords like iPhone, iOS, Jailbreak, Touchscreen, Apple and the like, and the Narrative Science app will be able to create an entirely new rumor about the upcoming iPhone 5, for instance!

The potential is great, although the possibility for abuse is also there. Think of spammers and scammers using the software to create more appealing emails that recipients are more likely to act on. Still, with tools like these, it’s up to us humans to up the ante in terms of quality.

And yes, in case you’re wondering, a real human did write this post.

-----

Personal comments:

One book to read about this: "Exemplaire de démonstration" by Philippe Vasset

Friday, August 19. 2011

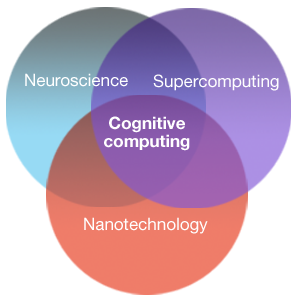

IBM unveils cognitive computing chips, combining digital 'neurons' and 'synapses'

Via Kurzweil

-----

IBM researchers unveiled today a new generation of experimental computer chips designed to emulate the brain’s abilities for perception, action and cognition.

In a sharp departure from traditional von Neumann computing concepts in designing and building computers, IBM’s first neurosynaptic computing chips recreate the phenomena between spiking neurons and synapses in biological systems, such as the brain, through advanced algorithms and silicon circuitry.

The technology could use many orders of magnitude less power and space than today’s computers, the researchers say. IBM’s first two prototype chips have already been fabricated and are currently undergoing testing.

Called cognitive computers, systems built with these chips won’t be programmed the same way traditional computers are today. Rather, cognitive computers are expected to learn through experiences, find correlations, create hypotheses, and remember (and learn from) the outcomes, mimicking the brain’s structural and synaptic plasticity.

“This is a major initiative to move beyond the von Neumann paradigm that has been ruling computer architecture for more than half a century,” said Dharmendra Modha, project leader for IBM Research.

“Future applications of computing will increasingly demand functionality that is not efficiently delivered by the traditional architecture. These chips are another significant step in the evolution of computers from calculators to learning systems, signaling the beginning of a new generation of computers and their applications in business, science and government.”

Neurosynaptic chips

IBM is combining principles from nanoscience, neuroscience, and supercomputing as part of a multi-year cognitive computing initiative. IBM’s long-term goal is to build a chip system with ten billion neurons and one hundred trillion synapses, while consuming merely one kilowatt of power and occupying less than two liters of volume.

While they contain no biological elements, IBM’s first cognitive computing prototype chips use digital silicon circuits inspired by neurobiology to make up a “neurosynaptic core” with integrated memory (replicated synapses), computation (replicated neurons) and communication (replicated axons).

IBM has two working prototype designs. Both cores were fabricated in 45 nm SOI-CMOS and contain 256 neurons. One core contains 262,144 programmable synapses and the other contains 65,536 learning synapses. The IBM team has successfully demonstrated simple applications like navigation, machine vision, pattern recognition, associative memory and classification.
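To put the quoted figures in perspective, here is a small back-of-the-envelope calculation. It only divides numbers stated in this article (the per-core neuron and synapse counts, and the long-term goal of ten billion neurons, one hundred trillion synapses and one kilowatt). The variable and function names are made up for illustration, and the ratios say nothing about how IBM actually wires the chips.

```python
# Figures quoted in the article (prototype cores).
NEURONS_PER_CORE = 256
PROGRAMMABLE_SYNAPSES = 262_144   # core with programmable synapses
LEARNING_SYNAPSES = 65_536        # core with learning synapses

# Figures quoted in the article (long-term goal).
GOAL_NEURONS = 10_000_000_000           # ten billion
GOAL_SYNAPSES = 100_000_000_000_000     # one hundred trillion
GOAL_POWER_WATTS = 1_000                # one kilowatt


def synapses_per_neuron(synapses: int, neurons: int) -> float:
    """Average number of synapses per neuron (plain division)."""
    return synapses / neurons


programmable_ratio = synapses_per_neuron(PROGRAMMABLE_SYNAPSES, NEURONS_PER_CORE)
learning_ratio = synapses_per_neuron(LEARNING_SYNAPSES, NEURONS_PER_CORE)
goal_ratio = synapses_per_neuron(GOAL_SYNAPSES, GOAL_NEURONS)

# Average power budget per neuron if the goal were met.
goal_watts_per_neuron = GOAL_POWER_WATTS / GOAL_NEURONS

print(f"programmable core: {programmable_ratio:.0f} synapses per neuron")
print(f"learning core:     {learning_ratio:.0f} synapses per neuron")
print(f"long-term goal:    {goal_ratio:.0f} synapses per neuron")
print(f"long-term goal:    {goal_watts_per_neuron * 1e9:.0f} nW per neuron")
```

In other words, the prototype cores average 1,024 and 256 synapses per neuron, while the long-term goal implies roughly 10,000 synapses per neuron on a budget of about 100 nanowatts each.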

IBM’s overarching cognitive computing architecture is an on-chip network of lightweight cores, creating a single integrated system of hardware and software. It represents a potentially more power-efficient architecture that has no set programming, integrates memory with processor, and mimics the brain’s event-driven, distributed and parallel processing.
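For readers unfamiliar with spiking neurons, the event-driven idea can be sketched with a textbook leaky integrate-and-fire model. This is not IBM’s circuit or algorithm, which the article does not describe. The class name, the leak factor, the threshold and the weights below are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LIFNeuron:
    """Textbook leaky integrate-and-fire neuron (illustrative only)."""
    threshold: float = 1.0   # assumed firing threshold
    leak: float = 0.9        # assumed fraction of potential kept per step
    potential: float = 0.0   # membrane potential, local state ("memory")

    def step(self, weighted_input: float) -> bool:
        # Decay the stored potential, then add the incoming weighted input.
        self.potential = self.potential * self.leak + weighted_input
        if self.potential >= self.threshold:
            self.potential = 0.0   # reset after firing
            return True
        return False


def run(neuron: LIFNeuron, weights: List[float],
        spike_trains: List[List[int]]) -> List[bool]:
    """Feed one list of 0/1 input spikes per time step through the neuron."""
    output = []
    for spikes in spike_trains:
        # Event-driven: only synapses whose input actually spiked contribute.
        total = sum(w for w, s in zip(weights, spikes) if s)
        output.append(neuron.step(total))
    return output


# Two hypothetical synapses with weights 0.6 and 0.5, four time steps.
demo_output = run(LIFNeuron(), [0.6, 0.5], [[1, 0], [0, 1], [0, 0], [1, 1]])
print(demo_output)
```

In this toy run the neuron stays silent on the first input, fires on the second once the accumulated potential crosses the threshold, stays silent when nothing arrives, and fires again when both inputs spike together. The state lives with the neuron and work happens only when spikes arrive, which is the sense in which such a design integrates memory with processing.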

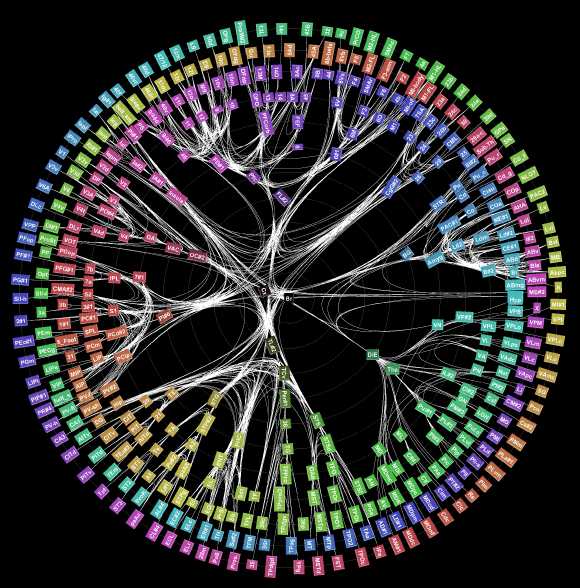

Visualization of the long distance network of a monkey brain (credit: IBM Research)

SyNAPSE

The company and its university collaborators also announced they have been awarded approximately $21 million in new funding from the Defense Advanced Research Projects Agency (DARPA) for Phase 2 of the Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) project.

The goal of SyNAPSE is to create a system that not only analyzes complex information from multiple sensory modalities at once, but also dynamically rewires itself as it interacts with its environment — all while rivaling the brain’s compact size and low power usage.

For Phase 2 of SyNAPSE, IBM has assembled a world-class multi-dimensional team of researchers and collaborators to achieve these ambitious goals. The team includes Columbia University; Cornell University; University of California, Merced; and University of Wisconsin, Madison.

Why Cognitive Computing

Future chips will be able to ingest information from complex, real-world environments through multiple sensory modes and act through multiple motor modes in a coordinated, context-dependent manner.

For example, a cognitive computing system monitoring the world’s water supply could contain a network of sensors and actuators that constantly record and report metrics such as temperature, pressure, wave height, acoustics and ocean tide, and issue tsunami warnings based on its decision making.

Similarly, a grocer stocking shelves could use an instrumented glove that monitors sights, smells, texture and temperature to flag bad or contaminated produce. Making sense of real-time input flowing at an ever-dizzying rate would be a Herculean task for today’s computers, but would be natural for a brain-inspired system.

“Imagine traffic lights that can integrate sights, sounds and smells and flag unsafe intersections before disaster happens or imagine cognitive co-processors that turn servers, laptops, tablets, and phones into machines that can interact better with their environments,” said Dr. Modha.

IBM has a rich history in the area of artificial intelligence research going all the way back to 1956 when IBM performed the world’s first large-scale (512 neuron) cortical simulation. Most recently, IBM Research scientists created Watson, an analytical computing system that specializes in understanding natural human language and provides specific answers to complex questions at rapid speeds.
