Tuesday, August 09. 2011

The Subjectivity of Natural Scrolling

Via Slash Gear

-----

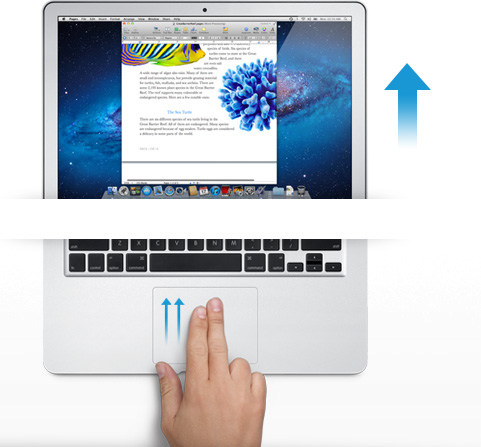

Apple released its new OS X Lion for Mac computers recently, and there was one controversial change that had the technorati chatting nonstop. In the new Lion OS, Apple changed the direction of scrolling. I use a MacBook Pro (among other machines; I’m OS agnostic). On my MacBook, I scroll by placing two fingers on the trackpad and moving them up or down. On the old system, moving my fingers down meant the object on the screen moved up. My fingers were controlling the scroll bars. Moving down meant I was pulling the scroll bars down, revealing more of the page below what was visible. So, the object moved upwards. On the new system, moving my fingers down means the object on screen moves down. My fingers are now controlling the object. If I want the object to move up, and reveal more of what is beneath, I move my fingers up, and content rises on screen.

The scroll bars are still there, but Apple has, by default, hidden them in many apps. You can make them reappear by hunting through the settings menu and turning them back on, but when they do come back, they are much thinner than they used to be, without the arrows at the top and bottom. They are also a bit buggy at the moment. If I try to click and drag the scrolling indicator, the page often jumps around, as if I had missed and clicked on the empty space above or below the scroll bar instead of directly on it. This doesn’t always happen, but it happens often enough that I have trained myself to avoid using the scroll bars this way.

So, the scroll bars, for now, are simply a visual indicator of where my view is located on a long or wide page. Clearly Apple does not think this information is terribly important, or else scroll bars would be turned on by default. As with the scroll bars, you can also hunt through the settings menu to turn off the new, so-called “natural scrolling.” This will bring you back to the method preferred on older Apple OSes, and also on Windows machines.

Some disclosure: my day job is working for Samsung. We make Windows computers that compete with Macs. I work in the phones division, but my work machine is a Samsung laptop running Windows. My MacBook is a holdover from my days as a tech journalist. When you become a tech journalist, you are issued a MacBook by force and stripped of whatever you were using before.

I am not criticizing or endorsing Apple’s new natural scrolling in this column. In fact, in my own usage, there are times when I like it, and times when I don’t. Those emotions are usually found in direct proportion to the amount of NyQuil I took the night before and how hot it was outside when I walked my dog. I have found no other correlation.

The new natural scrolling method will probably seem familiar to those of you not frozen in an iceberg since World War II. It is the same direction you use for scrolling on most touchscreen phones, and most tablets. Not all, of course. Some phones and tablets still use styli, and these phones often let you scroll by dragging scroll bars with the pointer. But if you have an Android or an iPhone or a Windows Phone, you’re familiar with the new method.

My real interest here is to examine how the user is placed in the conversation between your fingers and the object on screen. I have heard the argument that the new method tries, and perhaps fails, to emulate the touchscreen experience by manipulating objects as if they were physical. On touchscreen phones, this is certainly the case. When we touch something on screen, like an icon or a list, we expect it to react in a physical way. When I drag my finger to the right, I want the object beneath to move with my finger, just as a piece of paper would move with my finger when I drag it.

This argument postulates a problem with Apple’s natural scrolling because of the literal distance between your fingers and the objects on screen. Also, the angle has changed. The plane of your hands and the surface on which they rest are at an oblique angle of more than 90 degrees from the screen and the object at hand.

Think of a magic wand. When you wave a magic wand with the tip facing out before you, do you imagine the spell shooting forth parallel to the ground, or do you imagine the spell shooting directly upward? In our imagination, we do want a direct correlation between the position of our hands and the reaction on screen, this is true. However, is this what we were getting before? Not really.

The difference between classic scrolling and ‘natural’ scrolling seems to be the difference between manipulating a concept and manipulating an object. Scroll bars are not real, or at least they do not correspond to any real thing that we would experience in the physical world. When you read a tabloid, you do not scroll down to see the rest of the story. You move your eyes. If the paper will not fit comfortably in your hands, you fold it. But scrolling is not like folding. It is smoother. It is continuous. Folding is a way of breaking the object into two conceptual halves. Ask a print newspaper reporter (and I will refrain from old media mockery here) about the part of the story that falls “beneath the fold.” That part better not be as important as the top half, because it may never get read.

Natural scrolling correlates more strongly to moving an actual object. It is like reading a newspaper on a table. Some of the newspaper may extend over the edge of the table and bend downward, making it unreadable. When you want to read it, you move the paper upward. In the same way, when you want to read more of the NYTimes.com site, you move your fingers upward.

The argument should not be over whether one is more natural than the other. Let us not forget that we are using an electronic machine. This is not a natural object. The content onscreen is only real insofar as pixels light up and are arranged into a recognizable pattern. Those words are not real; they are the absence of light, in varying degrees if you have anti-aliasing cranked up, around recognizable patterns that our eyes and brain interpret as letters and words.

The argument should be over which is the more successful design for a laptop or desktop operating system. Is it better to create objects on screen that appropriate the form of their physical world counterparts? Should a page in Microsoft Word look like a piece of paper? Should an icon for a hard disk drive look like a hard disk? What percentage of people using a computer have actually seen a hard disk drive? What if your new ultraportable laptop uses a set of interconnected solid state memory chips instead? Does the drive icon still look like a drive?

Or is it better to create objects on screen that do not hew to the physical world? Certainly their form should suggest their function in order to be intuitive and useful, but they do not have to be photorealistic interpretations. They can suggest function through a more abstract image, or simply by their placement and arrangement.

In the former system, the computer interface becomes a part of the user’s world. The interface tries to fit in with symbols that are already familiar. I know what a printer looks like, so when I want to set up my new printer, I find the picture of the printer and I click on it. My email icon is a stamp. My music player icon is a CD. Wait, where did my CD go? I can’t find my CD?! What happened to my music!?!? Oh, there it is. Now it’s just a circle with a musical note. I guess that makes sense, since I hardly use CDs any more.

In the latter system, the user becomes part of the interface. I have to learn the language of the interface design. This may sound like it is automatically more difficult than the former method of photorealism, but that may not be true. After all, when I want to change the brightness of my display, will my instinct really be to search for a picture of a cog and gears? And how should we represent a Web browser, a feature that has no counterpart in real life? Are we wasting processing power and time trying to create objects that look three-dimensional on a two-dimensional screen?

I think the photorealistic approach, and Apple’s new natural scrolling, may be the more personal way to design an interface. Apple is clearly thinking of the intimate relationship between the user and the objects that we touch. It is literally a sensual relationship, in that we use a variety of our senses. We touch. We listen. We see.

But perhaps I do not need, nor do I want, to have this relationship with my work computer. I carry my phone with me everywhere. I keep my tablet very close to me when I am using it. With my laptop, I keep some distance. I am productive. We have a lot to get done.

DNA circuits used to make neural network, store memories

Via ars technica

By Kyle Niemeyer

-----

Even as some scientists and engineers develop improved versions of current computing technology, others are looking into drastically different approaches. DNA computing offers the potential of massively parallel calculations with low power consumption and at small sizes. Research in this area has been limited to relatively small systems, but a group from Caltech recently constructed DNA logic gates using over 130 different molecules and used the system to calculate the square roots of numbers. Now, the same group has published a paper in Nature that shows an artificial neural network, consisting of four neurons, created using the same DNA circuits.

The artificial neural network approach taken here is based on the perceptron model, also known as a linear threshold gate. This models the neuron as having many inputs, each with its own weight (or significance). The neuron is fired (or the gate is turned on) when the sum of each input times its weight exceeds a set threshold. These gates can be used to construct compact Boolean logical circuits, and other circuits can be constructed to store memory.
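As a rough illustration of the linear threshold gate described above, here is a minimal software sketch in Python. It models only the arithmetic, not the DNA chemistry, and the function names, weights, and thresholds are assumptions chosen for illustration rather than values from the paper.

```python
from typing import Sequence


def linear_threshold_gate(
    inputs: Sequence[float], weights: Sequence[float], threshold: float
) -> bool:
    """Return True (the gate fires) when sum(input * weight) exceeds the threshold."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return weighted_sum > threshold


# Assumed weights and thresholds that make a single gate act as Boolean logic.
def and_gate(a: int, b: int) -> bool:
    # Both inputs must be 1 for the sum (2) to exceed 1.5.
    return linear_threshold_gate([a, b], [1, 1], threshold=1.5)


def or_gate(a: int, b: int) -> bool:
    # Either input being 1 pushes the sum above 0.5.
    return linear_threshold_gate([a, b], [1, 1], threshold=0.5)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, and_gate(a, b), or_gate(a, b))
```

Changing only the threshold turns the same two-input gate from AND into OR, which is one way to see why these gates make compact logic circuits.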

As we described in the last article on this approach to DNA computing, the authors represent their implementation with an abstraction called "seesaw" gates. This allows them to design circuits where each element is composed of two base-paired DNA strands, and the interactions between circuit elements occur as new combinations of DNA strands pair up. The ability of strands to displace each other at a gate (based on things like concentration) creates the seesaw effect that gives the system its name.

In order to construct a linear threshold gate, three basic seesaw gates are needed to perform different operations. Multiplying gates combine a signal and a set weight in a seesaw reaction that uses up fuel molecules as it converts the input signal into output signal. Integrating gates combine multiple inputs into a single summed output, while thresholding gates (which also require fuel) send an output signal only if the input exceeds a designated threshold value. Results are read using reporter gates that fluoresce when given a certain input signal.

To test their designs with a simple configuration, the authors first constructed a single linear threshold circuit with three inputs and four outputs—it compared the value of a three-bit binary number to four numbers. The circuit output the correct answer in each case.
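One way to picture that test circuit in software is to read the three input bits as a number and feed it to four threshold comparisons, one per output. The sketch below assumes this reading of the experiment; the four comparison values are hypothetical placeholders, since the article does not say which numbers the authors used.

```python
from typing import Tuple

BIT_WEIGHTS = (4, 2, 1)  # weights that read three bits as a binary number
COMPARISON_VALUES = (1, 3, 5, 6)  # hypothetical; not taken from the paper


def compare_three_bit(bits: Tuple[int, int, int]) -> Tuple[bool, ...]:
    """Return one output per comparison value: True when the number exceeds it."""
    value = sum(bit * weight for bit, weight in zip(bits, BIT_WEIGHTS))
    return tuple(value > limit for limit in COMPARISON_VALUES)


if __name__ == "__main__":
    print(compare_three_bit((1, 0, 0)))  # binary 100 is 4
```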

For the primary demonstration on their setup, the authors had their linear threshold circuit play a computer game that tests memory. They used their approach to construct a four-neuron Hopfield network, where all the neurons are connected to the others and, after training (tuning the weights and thresholds), patterns can be stored or remembered. The memory game consists of three steps: 1) the human chooses a scientist from four options (in this case, Rosalind Franklin, Alan Turing, Claude Shannon, and Santiago Ramon y Cajal); 2) the human “tells” the memory network the answers to one or more of four yes/no (binary) questions used to identify the scientist (such as “Did the scientist study neural networks?” or “Was the scientist British?”); and 3) after eight hours of thinking, the DNA memory guesses the answer and reports it through fluorescent signals.

They played this game 27 times in total, out of 81 possible question/answer combinations (3^4). You may be wondering why there are three options to a yes/no question. The state of each answer is actually stored using two bits, so that the neuron can be unsure about answers (those that the human hasn't provided, for example) using a third state. Out of the 27 experimental cases, the neural network was able to correctly guess all but six, and these were all cases where two or more answers were not given.
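The count of 81 can be checked by enumerating every way to answer four questions when each answer is yes, no, or not given. A small sketch, with the state labels chosen here purely for illustration:

```python
from itertools import product

ANSWER_STATES = ("yes", "no", "unknown")  # labels are illustrative
NUM_QUESTIONS = 4

# Every possible assignment of one state to each of the four questions.
combinations = list(product(ANSWER_STATES, repeat=NUM_QUESTIONS))

if __name__ == "__main__":
    print(len(combinations))  # 3 ** 4
```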

In the best cases, the neural network was able to correctly guess with only one answer and, in general, it was successful when two or more answers were given. Like the human brain, this network was able to recall memory using incomplete information (and, as with humans, that may have been a lucky guess). The network was also able to determine when inconsistent answers were given (i.e. answers that don’t match any of the scientists).
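Recall from partial information can be sketched with a tiny software Hopfield network. This is a generic textbook version with Hebbian weights, not the trained weights and thresholds from the paper. The two stored patterns are hypothetical (+1 for yes, -1 for no), 0 stands for an answer that was not given, and only two patterns are stored to keep the toy example well-behaved.

```python
from typing import List, Sequence

# Hypothetical stored memories: one row per pattern, one entry per question.
PATTERNS = [
    [1, 1, -1, -1],
    [1, -1, 1, -1],
]


def train_hebbian(patterns: Sequence[Sequence[int]]) -> List[List[int]]:
    """Build symmetric weights by summing products of pattern entries (no self-links)."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += pattern[i] * pattern[j]
    return weights


def recall(weights: Sequence[Sequence[int]], cue: Sequence[int], max_sweeps: int = 10) -> List[int]:
    """Update neurons one at a time until the state stops changing."""
    state = list(cue)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(state)):
            field = sum(w * s for w, s in zip(weights[i], state))
            if field == 0:
                continue  # no evidence either way, so leave this neuron as it is
            new_value = 1 if field > 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


weights = train_hebbian(PATTERNS)

if __name__ == "__main__":
    # Two answers given, two left unknown (0).
    print(recall(weights, [1, 1, 0, 0]))
```

Given two of the four answers, this toy network fills in the other two to complete a stored pattern, which is the same kind of behavior the article describes for the DNA version.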

These results are exciting—simulating the brain using biological computing. Unlike traditional electronics, DNA computing components can easily interact and cooperate with our bodies or other cells—who doesn’t dream of being able to download information into your brain (or anywhere in your body, in this case)? Even the authors admit that it’s difficult to predict how this approach might scale up, but I would expect to see a larger demonstration from this group or another in the near future.

Help CERN in the hunt for the Higgs Boson

Via bit-tech

-----

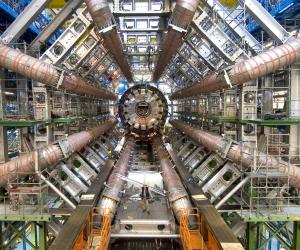

The Citizen Cyberscience Centre, based at CERN, launched a new version of LHC@home today.

You've probably heard of Folding@Home or Seti@Home - both are distributed computing programs designed to harness the power of your average home PC to number crunch data, aiding real scientific research.

LHC@home, as science fans will probably have already guessed, is a similar venture for the Large Hadron Collider (LHC). The latest version of the Citizen Cyberscience Centre's software, called LHC@home 2.0, simulates collisions between two beams of protons travelling at close to the speed of light in the LHC.

Professor Dave Britton of the University of Glasgow is Project Leader of the GridPP project that provides Grid computing for particle physics throughout the UK. He is a member of the ATLAS collaboration, one of the experiments at the LHC, and had this to say about LHC@home 2.0 and the Citizen Cyberscience Centre:

'Scientists like me are trying to answer fundamental questions about the structure and origin of the Universe. Through the Citizen Cyberscience Centre and its volunteers around the world, the Grid computing tools and techniques that I use every day are available to scientists in developing countries, giving them access to the latest computing technology and the ability to solve the problems that they are facing, such as providing clean water.

Whether you’re interested in finding the Higgs boson, playing a part in humanitarian aid or advancing knowledge in developing countries, this is a great project to get involved with.'

You can check out the LHC@home home page here. Have you taken part in Seti or Folding? Maybe you're already in the hunt for the Higgs Boson?
