Tuesday, August 30. 2011

Automatic spelling corrections on Github

By Holden Karau

-----

English has never been one of my strong points (as is fairly obvious from reading my blog), so my latest side project might surprise you a bit. Inspired by the results of Tarsnap's bug bounty and the first pull request received for a new project (Slashem, a type-safe Rogue-like DSL for querying Solr in Scala), I decided to write a bot for GitHub to fix spelling mistakes.

The code itself is very simple (albeit not very good; it was written after I got back from clubbing at JWZ's club, DNA Lounge). There is something about a lack of sleep that makes Perl code and regexes seem like a good idea. If, despite the previous warnings, you still want to look at the code, https://github.com/holdenk/holdensmagicalunicorn is the place to go. It works by doing a GitHub search for all the README files in Markdown format and then running a limited spell checker on them. Documents with a known misspelled word are flagged and output to a file. Thanks to the wonderful GitHub API, the next steps are easy: the bot forks the repo, clones it locally, performs the spelling correction, commits, pushes, and submits a pull request.

The spelling correction is based on Pod::Spell::CommonMistakes, which works from a very restricted mapping of misspelled words to their corrections.
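To make the idea concrete, here is a minimal Python sketch of that kind of restricted, dictionary-driven correction. It is not the bot's Perl code: the handful of entries in `COMMON_MISTAKES` are hypothetical samples standing in for the Pod::Spell::CommonMistakes word list, and `find_mistakes` and `correct` are illustrative names.

```python
import re

# Hypothetical sample entries; the real bot relied on the (much larger)
# word list shipped with Perl's Pod::Spell::CommonMistakes.
COMMON_MISTAKES = {
    "recieve": "receive",
    "seperate": "separate",
    "occured": "occurred",
    "definately": "definitely",
}

WORD_RE = re.compile(r"[A-Za-z]+")


def _fix_word(match: re.Match) -> str:
    word = match.group(0)
    fixed = COMMON_MISTAKES.get(word.lower())
    if fixed is None:
        return word  # not a known misspelling: leave it untouched
    # Preserve a leading capital, e.g. "Seperate" -> "Separate".
    if word[0].isupper():
        fixed = fixed[0].upper() + fixed[1:]
    return fixed


def find_mistakes(text: str) -> list[str]:
    """Return the known misspellings in text (used to flag a README)."""
    return [w for w in WORD_RE.findall(text) if w.lower() in COMMON_MISTAKES]


def correct(text: str) -> str:
    """Replace every known misspelling with its correction."""
    return WORD_RE.sub(_fix_word, text)
```

For example, `correct("Seperate the files if an error occured.")` returns `"Separate the files if an error occurred."`. Because only words in the table are touched, unusual project jargon is left alone; the cost is that any misspelling missing from the table is silently ignored, which is why extending the dictionary matters.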

Writing a “future directions” section always seems like such a cliché, but here it is anyway. The code as it stands is really simple: for example, it only handles one repo of a given name, the dictionary is small, etc. The next version should probably also try to submit corrections only against the canonical repo. Other future plans include extending the dictionary. In the longer term, I think it would be awesome to attempt to detect really simple bugs in actual code (things like memcpy(dest,0,0)).

You can follow the bot on Twitter at holdensunicorn.

Comments, suggestions, and patches are always appreciated. -holdenkarau (although I’m going to be AFK at Burning Man for a while; you can find me @ 6:30 & D)

Monday, August 29. 2011

Google TV set to launch in Europe

Via TechSpot

-----

During his keynote speech at the annual Edinburgh Television Festival last Friday, Google Executive Chairman Eric Schmidt announced plans to launch Google's TV service in Europe starting early next year.

The original launch in the United States back in October 2010 received rather poor reviews, with questions raised about the lack of available content. This saw prices of Logitech set-top boxes slashed to as low as $99 in July, with similarly poor sales of the integrated Sony Bravia Google TV models.

How much of a success Google TV will become in Europe remains to be seen, with UK broadcasters especially concerned about the damage it could do to their existing business models. Strong competition from established companies like Sky and Virgin Media could further complicate matters for the Internet search giant.

During the MacTaggart lecture, Schmidt sought to calm the fears of the broadcasting elite, taking full advantage of the fact he was the first non-TV executive to ever be invited to present the prestigious keynote lecture at the festival.

"We seek to support the content industry by providing an open platform for the next generation of TV to evolve, the same way Android is an open platform for the next generation of mobile," said Schmidt. "Some in the US feared we aimed to compete with broadcasters or content creators. Actually our intent is the opposite…"

Much like in the United States, Google faces a long battle to convince broadcasters and major media players of its intent to help evolve TV platforms with open technologies, rather than topple them.

Google seems to have firmly set its sights on a slice of the estimated $190bn TV advertising market, which will do little to calm fears, especially considering that Google's online advertising figures for 2010 were just $28.9bn by comparison.

Friday, August 26. 2011

StarCraft: Coming to a sports bar near you

-----

SAN FRANCISCO—One Sunday afternoon last month, a hundred boisterous patrons crowded into Mad Dog in the Fog, a British sports bar here, to watch a live broadcast.

Half the flat-screen TVs were tuned to a blood-filled match between two Korean competitors, "MC" and "Puma." The crowd erupted in chants of "M-C! M-C!" when the favorite started a comeback.

The pub is known for showing European soccer and other sports, but Puma and MC aren't athletes. They are 20-year-old professional videogame players who were leading computerized armies of humans and aliens in a science-fiction war game called "Starcraft II" from a Los Angeles convention center. The Koreans were fighting over a tournament prize of $50,000.

This summer, "Starcraft II" has become the newest barroom spectator sport. Fans organize so-called Barcraft events, taking over pubs and bistros from Honolulu to Florida and switching big-screen TV sets to Internet broadcasts of professional game matches happening often thousands of miles away.

Fans of 'Starcraft II' watch a live game broadcast in Washington, D.C.

As they root for their on-screen superstars, "Starcraft" enthusiasts can sow confusion among regular patrons. Longtime Mad Dog customers were taken aback by the young men fist-pumping while digital swarms of an insect-like race called "Zerg" battled the humanoid "Protoss" on the bar's TVs.

"I thought I'd come here for a quiet beer after a crazy day at work," said Michael McMahan, a 59-year-old carpenter who is a 17-year veteran of the bar, over the sound of noisy fans as he sipped on a draught pint.

But for sports-bar owners, "Starcraft" viewers represent a key new source of revenue from a demographic—self-described geeks—they hadn't attracted before.

"It was unbelievable," said Jim Biddle, a manager of Bistro 153 in Beaverton, Ore., which hosted its first Barcraft in July. The 50 gamers in attendance "doubled what I'd normally take in on a normal Sunday night."

For "Starcraft" fans, watching in bars fulfills their desire to share the love of a game that many watched at home alone before. During a Barcraft at San Francisco's Mad Dog in July, Justin Ng, a bespectacled 29-year-old software engineer, often rose to his feet during pivotal clashes of a match.

"This feels like the World Cup," he said. "You experience the energy and screams of everyone around you when a player makes an amazing play."

Millions of Internet users already tune in each month on their PCs to watch live "eSports" events featuring big-name stars like MC, who is Jang Min Chul in real life, or replays of recent matches.

In the U.S., fervor for "Starcraft II" is spilling into public view for the first time, as many players now prefer to watch the pros. In mid-July, during the first North American Star League tournament in Los Angeles, 85,000 online viewers watched Puma defeat MC in the live championship match on Twitch.tv, said Emmett Shear, who runs the recently launched site.

The "Starcraft" franchise is more popular in Korea, where two cable TV stations, MBC Game and Ongamenet, provide dedicated coverage. The cable channels and Web networks broadcast other war games such as "Halo," "Counter-Strike," and "Call of Duty." But "Starcraft II" is often the biggest draw.

The pros, mostly in their teens and 20s, get prize money and endorsements. Professional leagues in the U.S. and Korea have sprouted since "Starcraft II" launched last year. Pro-match broadcasts often include breathless play-by-play announcers who cover each move like a wrestling match. (A typical commentary: "It's a drone genocide! Flaming drone carcasses all over the place!")

Barcraft goers credit a Seattle bar, Chao Bistro, for launching the Barcraft fad this year. Glen Bowers, a 35-year-old Chao patron and "Starcraft" fan, suggested to owner Hyung Chung that he show professional "Starcraft" matches. Seeing that customers were ignoring Mariners baseball broadcasts on the bar's TVs, Mr. Chung, a videogame fan, OK'ed the experiment.

In mid-May Mr. Bowers configured Chao's five TVs to show Internet feeds and posted an online notice to "Starcraft" devotees. About 150 people showed up two days later. Since then, Mr. Bowers has organized twice-a-week viewings; attendance has averaged between 40 and 50 people, including employees of Amazon.com Inc. and Microsoft Corp., he said.

The trend ended up spreading to more than a dozen Barcrafts across the country, including joints in Raleigh, N.C., and Boston.

The "Starcraft II" game lends itself to sports bars because it "was built from the ground up as a spectator sport," said Bob Colayco, a publicist for the game's publisher, Activision Blizzard Inc. Websites like Twitch.tv have boosted "Starcraft's" spectator-sport appeal by letting players "stream" live games.

Two University of Washington graduate students recently published a research paper seeking to scientifically pinpoint "Starcraft's" appeal as a spectator sport. The paper posits that "information asymmetry," in which one party has more information than the other, is the "fundamental source of entertainment."

MicroGen Chips to Power Wireless Sensors Through Environmental Vibrations

Via Daily Tech

-----

MicroGen Systems says its chips differ from other vibrational energy-harvesting devices because they have low manufacturing costs and use nontoxic material instead of PZT, which contains lead.

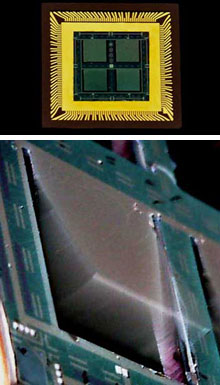

(TOP) Prototype wireless sensor battery with four energy-scavenging chips. (BOTTOM) One chip with a vibrating cantilever (Source: MicroGen Systems)

MicroGen Systems is in the midst of creating energy-scavenging chips that will convert environmental vibrations into electricity to power wireless sensors.

The chip's core consists of an array of small silicon cantilevers that measures one square centimeter and is located on a "postage-stamp-sized" thin-film battery. These cantilevers oscillate when the chip is shaken, and at their base is piezoelectric material that produces an electrical potential when strained by vibrations.

The current travels from the piezoelectric array through an electrical device that converts it into a form compatible with the battery. When jostled by the vibrations of a rotating tire, for example, the chip can generate 200 microwatts of power.

Critics such as David Culler, chair of computer science at the University of California, have said that 200 microwatts may be useful at a small size, but that other harvesting techniques based on solar, light, heat, etc. are more competitive technologies because they can either store the electrical energy in a battery or use it right away.

But according to Robert Andosca, founder and president of Ithaca, New York-based MicroGen Systems, his chips differ from other vibrational energy-harvesting devices because they have low manufacturing costs and use nontoxic material instead of PZT, which contains lead.

Most piezoelectric materials must be assembled by hand and can be quite large. But MicroGen's chips can be made small and inexpensively because they are based on silicon microelectromechanical systems (MEMS). The chips can be made on the same machines used to make computer chips.

MicroGen Systems hopes to use these energy-harvesting chips to power wireless sensors like those that monitor tire pressure. The chips could eliminate the need to replace the sensors' batteries.

"It's a pain in the neck to replace those batteries," said Andosca.

MicroGen Systems plans to sell these chips for $1 apiece, depending on volume, and hopes to begin selling them in about a year.

Wednesday, August 24. 2011

The First Industrial Evolution

Via Big Think

by Dominic Basulto

-----

If the first industrial revolution was all about mass manufacturing and machine power replacing manual labor, the First Industrial Evolution will be about the ability to evolve your personal designs online and then print them using popular 3D printing technology. Once these 3D printing technologies enter the mainstream, they could lead to a fundamental change in the way that individuals - even those without any design or engineering skills - are able to create beautiful, state-of-the-art objects on demand in their own homes. It is, quite simply, the democratization of personal manufacturing on a massive scale.

At the Cornell Creative Machines Lab, it's possible to glimpse what's next for the future of personal manufacturing. Researchers led by Hod Lipson created the website Endless Forms (a clever allusion to the famous last line of Charles Darwin’s On the Origin of Species) to "evolve" everyday objects and then bring them to life using 3D printing technologies. Even without any technical or design expertise, it's possible to create and print forms ranging from lamps to mushrooms to butterflies. You literally "evolve" printable 3D objects through a process that echoes the principles of evolutionary biology. In fact, to create this technology, the Cornell team studied how living things like oak trees and elephants evolve over time.
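The "evolve" step can be pictured as a simple mutate-and-select loop. The Python sketch below is an illustration under stated assumptions, not Endless Forms' implementation: an object is reduced to a hypothetical list of numeric traits (say, a lamp's height, width and curvature), each generation breeds mutated copies of the current design, and a `choose` callback stands in for the person clicking the variant they like best.

```python
import random
from typing import Callable, List

# Hypothetical genome: e.g. [height, width, curvature] of a printable lamp.
Genome = List[float]


def mutate(genome: Genome, rng: random.Random, sigma: float = 0.1) -> Genome:
    """Copy the parent, adding a small Gaussian 'mutation' to each trait."""
    return [g + rng.gauss(0.0, sigma) for g in genome]


def evolve(
    seed_genome: Genome,
    choose: Callable[[List[Genome]], int],
    generations: int = 10,
    brood_size: int = 8,
    rng_seed: int = 0,
) -> Genome:
    """Each generation, breed a brood of mutated offspring from the parent;
    `choose` (a stand-in for the user's pick) returns the index of the
    offspring that becomes the next parent."""
    rng = random.Random(rng_seed)
    parent = list(seed_genome)
    for _ in range(generations):
        brood = [mutate(parent, rng) for _ in range(brood_size)]
        parent = brood[choose(brood)]
    return parent


def tallest(brood: List[Genome]) -> int:
    """Example automated chooser: always prefer the tallest design."""
    return max(range(len(brood)), key=lambda i: brood[i][0])
```

Calling `evolve([1.0, 0.5, 0.2], tallest)` drifts the first trait upward generation by generation, even though no single step "designs" a tall lamp; selection alone does the work. In a site like Endless Forms, that selection comes from people rather than from a fixed rule.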

3D printing capabilities, once limited to the laboratory, are now hitting the mainstream. Consider the fact that MakerBot Industries just landed $10 million from VC investors. In the future, each of us may have a personal 3D printer in the home, ready to print out personal designs on demand.

At the same time, there’s been a radical rethinking of how products are designed and brought to market. Take On the Origin of Tepees, a book by British scientist Jonnie Hughes, which presents a highly provocative thesis: What if design evolves the same way that humans do? What if cultural ideas evolve the way humans do? One example cited by Hughes is the simple cowboy hat from the American Wild West.

What if an object like the cowboy hat “evolved” itself, using cowboys and ranch hands simply as a unique “selective environment” so that it could evolve over time?

Wait a second, what's going on here? Objects using humans to evolve themselves? 3D Printers? Someone's been drinking the Kool-Aid, right?

But if you’ve read Richard Dawkins' bestseller The Selfish Gene, you can see where Hughes is headed with his ideas. Dawkins coined the term “meme” back in 1976 to express the concept of thoughts and ideas having a life of their own, free to mutate and adapt, while cleverly using their human “hosts” as a reproductive vehicle to pass on these memes to others. Memes functioned like genes, in that their only goal was to reproduce for the next generation. In such a way, evolved designs are a form of “teme”, a term first popularized by Susan Blackmore, author of The Meme Machine, to denote ideas that are replicated via technology.

What if that gorgeous iPad 2 you’re holding in your hand was actually “evolved” and not “designed”? What if it is the object that controls the design, and not the designer that controls the object? Hod Lipson, an expert on self-aware robots and a pioneer of the 3D printing movement, has claimed that we are on the brink of the second industrial revolution. However, if objects really do "evolve," is it more accurate to say that we are on the brink of The First Industrial Evolution?

The final frontier, of course, is not the ability of humans to print out beautifully evolved objects on demand using 3D printers in their homes (although that’s quite cool). The final frontier is the ability of self-aware objects to independently “evolve” humans and then print them out as they need them. Sound far-fetched? Well, it’s now possible to print 3D human organs and 3D human skin. When machine intelligence progresses to a certain point, what’s to stop independent, self-aware machines from printing human organs? The implications – for both atheists and true believers – are perhaps too overwhelming even to consider.

Google TV add-on for Android SDK gives developers a path to the big screen

Via ars technica

-----

At the Google I/O conference earlier this year, Google revealed that the Android Market would come to the Google TV set-top platform. Some evidence of the Honeycomb-based Google TV refresh surfaced in June when screenshots from developer hardware were leaked. Google TV development is now being opened to a broader audience.

In a post on the official Google TV blog, the search giant has announced the availability of a Google TV add-on for the Android SDK. The add-on is an early preview that will give third-party developers an opportunity to start porting their applications to Google TV.

The SDK add-on currently works only on Linux desktop systems because it relies on Linux's native KVM virtualization system to provide a Google TV emulator. Google says that other environments will be supported in the future. Unlike the conventional phone and tablet versions of Android, which are largely designed to run on ARM devices, the Google TV reference hardware is x86-based. The architecture difference might account for the lack of support in Android's traditional emulator.

We are planning to put the SDK add-on to the test later this week so we can report some hands-on findings. We suspect that the KVM-based emulator will offer better performance than the conventional Honeycomb emulator that Google's SDK currently provides for tablet development.

In addition to the SDK add-on, Google has also published a detailed user interface design guideline document that offers insight into best practices for building a 10-foot interface that will work well on Google TV hardware. The document addresses a wide range of issues, including D-pad navigation and television color variance.

The first iteration of Google TV flopped in the market and didn't see much consumer adoption. Introducing support for third-party applications could make Google TV significantly more compelling to consumers. The ability to trivially run applications like Plex could make Google TV a lot more useful. It's also worth noting that Android's recently added support for game controllers and other similar input devices could make Google TV hardware serve as a casual gaming console.

Tuesday, August 23. 2011

Your Two Things

Via KK

-----

Ten years from now, how many gadgets will people carry?

Apple would like you to carry three things today: the iPad, iPhone and MacBook. Once, they would have been happy if you carried one. What do they have in mind for the next decade? Ten?

I claim that what technology wants is to specialize, so I predict that every device we have today will spawn yet more specialized devices in the future. That means there will be hundreds of new devices in the coming years. Are we going to carry them all? Will we have a daypack full of devices? Will every pocket have its own critter?

I think the answer for the average person is 2. We'll carry two devices in the next decade. Over the long term, say 100 years, we may carry no devices.

The two devices we'll carry (on average) will be 1) a close-to-body handheld thingie, and 2) a larger tablet thingie at arm's length. The handheld will be our wallet, purse, camera, phone, navigator, watch, and Swiss Army knife combo. The tablet will be a bigger screen with multi-sensor input. It may unfold, or unroll, or expand, or be just a plain plank. Different folks will have different sizes.

But there are caveats. First, we'll wear a lot of devices -- which is not the same as carrying them. We'll have devices built into belts, wristbands, necklaces, clothes, or more immediately into glasses or worn on our ears, etc. We wear a watch; we don't carry it. We wear necklaces, or Fitbits, rather than carry them. The main difference is that a device attached to us is harder to lose (or lose track of) and is always intimate. This will be particularly true of quantified self-tracking devices. If we ask the question "How many devices will you wear in ten years?", the answer may be ten.

Secondly, the two devices you carry may not always be the same devices. You may switch them out depending on the location, mode (vacation or work), task at hand. Some days you may need a bigger screen than others.

More importantly, the devices may depend on your vocation. Some jobs want a small text-based device (programmers), others a large screen (filmmakers), others a blindingly bright display (contractors), and still others a flexible, collapsible device (salespeople).

The law of technology is that a specialized tool will always be superior to a general-purpose tool. No matter how great the built-in camera in your phone gets, the best single-purpose camera will be better. No matter how great the navigator in your handheld combo gets, the best dedicated navigator will be a lot better. Professionals and ardent enthusiasts will continue to use the best tools, which will mean the specialized tools.

Just to be clear, the combo is a specialized tool itself, just as a Swiss Army knife is a specialized knife -- it specializes in the combo. It does everything okay.

So another way to restate the equation: the 2 devices each person will carry are one general purpose combination device, and one specialized device (per your major interests and style).

Of course, some folks will carry more than two, like the New York City police officer in the image above (taken in Times Square a few weeks ago). That may be because of their job, or vocation. But they won't carry them all the time. Even when they are "off" they will carry at least one device, and maybe two.

But I predict that in the longer term we will tend not to carry any devices at all. That's because we will have so many devices around us, both handheld and built-in, and each will be capable of recognizing us and displaying to us our own personal interface, so that they in effect become ours for the duration of our use. Not too long ago no one carried their own phone. You just used the nearest phone at hand. You borrowed it and did not need to carry your own personal phone around. Carrying one would have seemed absurd in 1960. But of course not every room had a phone, not every store had one, not every street had one. So we wanted our own cell phones. But what if almost any device made could be borrowed and used as a communication device? You pick up a camera, or tablet, or remote and talk into it. Then you might not need to carry your own phone again. What if every screen could be hijacked for your immediate purposes? Why carry a screen of your own?

This will not happen in 10 years. But I believe that in the fullness of time the highly evolved person will not carry anything.

At the same time the attraction of a totem object, or something to hold in your hands, particularly a gorgeous object, will not diminish. We may remain with one single object that we love, that does most of what we need okay, and that in some ways comes to represent us. Perhaps the highly evolved person carries one distinctive object -- which will be buried with them when they die.

At the very least, I don't think we'll normally carry more than a couple of things at once, on an ordinary day. The number of devices will proliferate, but each will occupy a smaller and smaller niche. There will be a long tail distribution of devices.

50 years from now a very common ritual upon the meeting of old friends will be the mutual exchange and cross-examination of the lovely personal things they have in their pockets or purses. You'll be able to tell a lot about a person by what they carry.

-----

Friday, August 19. 2011

IBM unveils cognitive computing chips, combining digital 'neurons' and 'synapses'

Via Kurzweil

-----

IBM researchers unveiled today a new generation of experimental computer chips designed to emulate the brain’s abilities for perception, action and cognition.

In a sharp departure from traditional von Neumann computing concepts in designing and building computers, IBM’s first neurosynaptic computing chips recreate the phenomena between spiking neurons and synapses in biological systems, such as the brain, through advanced algorithms and silicon circuitry.

The technology could yield many orders of magnitude less power consumption and space than used in today’s computers, the researchers say. Its first two prototype chips have already been fabricated and are currently undergoing testing.

Called cognitive computers, systems built with these chips won’t be programmed the same way traditional computers are today. Rather, cognitive computers are expected to learn through experiences, find correlations, create hypotheses, and remember — and learn from — the outcomes, mimicking the brain’s structural and synaptic plasticity.

“This is a major initiative to move beyond the von Neumann paradigm that has been ruling computer architecture for more than half a century,” said Dharmendra Modha, project leader for IBM Research.

“Future applications of computing will increasingly demand functionality that is not efficiently delivered by the traditional architecture. These chips are another significant step in the evolution of computers from calculators to learning systems, signaling the beginning of a new generation of computers and their applications in business, science and government.”

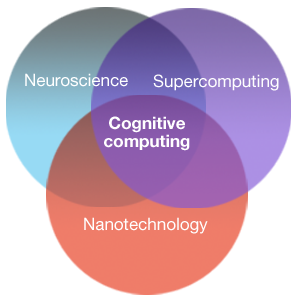

Neurosynaptic chips

IBM is combining principles from nanoscience, neuroscience, and supercomputing as part of a multi-year cognitive computing initiative. IBM’s long-term goal is to build a chip system with ten billion neurons and a hundred trillion synapses, while consuming merely one kilowatt of power and occupying less than two liters of volume.

While they contain no biological elements, IBM’s first cognitive computing prototype chips use digital silicon circuits inspired by neurobiology to make up a “neurosynaptic core” with integrated memory (replicated synapses), computation (replicated neurons) and communication (replicated axons).

IBM has two working prototype designs. Both cores were fabricated in 45 nm SOI-CMOS and contain 256 neurons each. One core contains 262,144 programmable synapses and the other contains 65,536 learning synapses. The IBM team has successfully demonstrated simple applications like navigation, machine vision, pattern recognition, associative memory and classification.
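The synapse counts are consistent with a crossbar layout, in which every input line crosses every neuron once: 256 × 256 = 65,536, and 1,024 × 256 = 262,144. Reading the larger core as 1,024 inputs by 256 neurons is an inference from the arithmetic, not something the article states. The toy Python sketch below shows how such a core could turn input spikes into output spikes; the leaky integrate-and-fire neuron model, the threshold and leak values, and the fixed binary weights (no learning) are all assumptions for illustration, not IBM's design.

```python
from typing import List


def crossbar_synapses(n_axons: int, n_neurons: int) -> int:
    """A full crossbar has one synapse per (input axon, neuron) pair."""
    return n_axons * n_neurons


class LIFCrossbarCore:
    """Toy core: binary synapse crossbar feeding leaky integrate-and-fire neurons.
    The neuron model and parameters are illustrative assumptions."""

    def __init__(self, weights: List[List[int]], threshold: float = 2.0, leak: float = 0.5):
        self.weights = weights  # weights[a][n] in {0, 1}: does axon a connect to neuron n?
        self.n_neurons = len(weights[0])
        self.threshold = threshold
        self.leak = leak  # subtracted from every membrane potential each tick
        self.potential = [0.0] * self.n_neurons

    def step(self, axon_spikes: List[int]) -> List[int]:
        """Advance one tick; return 1 for each neuron that fires, else 0."""
        out = []
        for n in range(self.n_neurons):
            # Integrate: count input spikes that reach neuron n through a synapse.
            drive = sum(s * row[n] for s, row in zip(axon_spikes, self.weights))
            v = max(0.0, self.potential[n] + drive - self.leak)
            if v >= self.threshold:
                out.append(1)
                v = 0.0  # reset the potential after firing
            else:
                out.append(0)
            self.potential[n] = v
        return out
```

Nothing happens between spikes except the leak, which is the sense in which such hardware is event-driven: computation is triggered by incoming spikes rather than by a program counter stepping through instructions.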

IBM’s overarching cognitive computing architecture is an on-chip network of lightweight cores, creating a single integrated system of hardware and software. It represents a potentially more power-efficient architecture that has no set programming, integrates memory with processor, and mimics the brain’s event-driven, distributed and parallel processing.

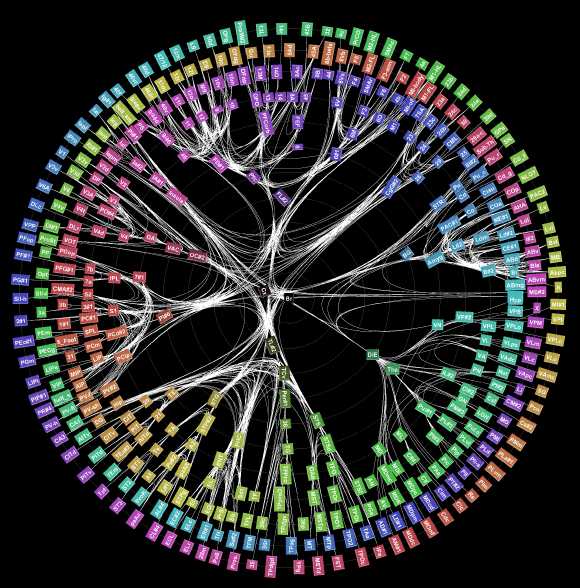

Visualization of the long distance network of a monkey brain (credit: IBM Research)

SyNAPSE

The company and its university collaborators also announced they have been awarded approximately $21 million in new funding from the Defense Advanced Research Projects Agency (DARPA) for Phase 2 of the Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE) project.

The goal of SyNAPSE is to create a system that not only analyzes complex information from multiple sensory modalities at once, but also dynamically rewires itself as it interacts with its environment — all while rivaling the brain’s compact size and low power usage.

For Phase 2 of SyNAPSE, IBM has assembled a world-class multi-dimensional team of researchers and collaborators to achieve these ambitious goals. The team includes Columbia University; Cornell University; University of California, Merced; and University of Wisconsin, Madison.

Why Cognitive Computing

Future chips will be able to ingest information from complex, real-world environments through multiple sensory modes and act through multiple motor modes in a coordinated, context-dependent manner.

For example, a cognitive computing system monitoring the world’s water supply could contain a network of sensors and actuators that constantly record and report metrics such as temperature, pressure, wave height, acoustics and ocean tide, and issue tsunami warnings based on its decision making.

Similarly, a grocer stocking shelves could use an instrumented glove that monitors sights, smells, texture and temperature to flag bad or contaminated produce. Making sense of real-time input flowing at an ever-dizzying rate would be a Herculean task for today’s computers, but would be natural for a brain-inspired system.

“Imagine traffic lights that can integrate sights, sounds and smells and flag unsafe intersections before disaster happens or imagine cognitive co-processors that turn servers, laptops, tablets, and phones into machines that can interact better with their environments,” said Dr. Modha.

IBM has a rich history in the area of artificial intelligence research going all the way back to 1956 when IBM performed the world’s first large-scale (512 neuron) cortical simulation. Most recently, IBM Research scientists created Watson, an analytical computing system that specializes in understanding natural human language and provides specific answers to complex questions at rapid speeds.

---

Thursday, August 18. 2011

Wi-Fi Security: Cracking WPA With CPUs, GPUs, And The Cloud

Is your network safe? Almost all of us prefer the convenience of Wi-Fi over the hassle of a wired connection. But what does that mean for security? Our tests tell the whole story. We go from password cracking on the desktop to hacking in the cloud.

We hear about security breaches with such increasing frequency that it's easy to assume the security world is losing its battle to protect our privacy. The idea that our information is safe is what enables so many online products and services; without it, life online would be so very different than it is today. And yet, there are plenty of examples where someone (or a group of someones) circumvents the security that even large companies put in place, compromising our identities and shaking our confidence to the core.

Understandably, then, we're interested in security, and how our behaviors and hardware can help improve it. It's not just the headache of replacing a credit card or choosing a new password when a breach happens that irks us. Rather, it's that feeling of violation when you log into your banking account and discover that someone spent funds out of it all day.

In Harden Up: Can We Break Your Password With Our GPUs?, we took a look at archive security and identified the potential weaknesses of encrypted data on your hard drive. Although the data was useful (and indeed served to scare plenty of people who were previously using insufficient protection on files they really thought were secure), that story was admittedly limited in scope. Most of us don't encrypt the data that we hold dear.

At the same time, most of us are vulnerable in other ways. For example, we don't run on LAN-only networks. We're generally connected to the Internet, and for many enthusiasts, that connectivity is extended wirelessly through our homes and businesses. They say a chain is only as strong as its weakest link. In many cases, that weak link is the password protecting your wireless network.

There is plenty of information online about wireless security. Sorting through it all can be overwhelming. The purpose of this piece is to provide clarification, and then apply our lab's collection of hardware to the task of testing wireless security's strength. We start by breaking WEP and end with distributed WPA cracking in the cloud. By the end, you'll have a much better idea of how secure your Wi-Fi network really is.
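Why does WPA cracking call for GPUs and cloud instances? In WPA/WPA2-Personal, the 256-bit pre-shared key is derived from the passphrase with PBKDF2-HMAC-SHA1, using the network's SSID as the salt and 4,096 iterations, so every guess in an offline dictionary attack costs thousands of SHA-1 computations. The Python sketch below shows that derivation with the standard library. `try_wordlist` and its `check` callback are hypothetical: `check` stands in for verifying a candidate key against a captured four-way handshake, a step not shown here.

```python
import hashlib
from typing import Callable, Iterable, Optional


def wpa_psk(passphrase: str, ssid: str) -> bytes:
    """WPA/WPA2-Personal passphrase-to-key mapping:
    PBKDF2-HMAC-SHA1, salted with the SSID, 4,096 iterations, 32-byte output."""
    return hashlib.pbkdf2_hmac("sha1", passphrase.encode(), ssid.encode(), 4096, 32)


def try_wordlist(
    candidates: Iterable[str],
    ssid: str,
    check: Callable[[bytes], bool],
) -> Optional[str]:
    """Offline dictionary-attack skeleton: derive the key for each guess and
    hand it to `check` (hypothetical handshake verifier). Returns the matching
    passphrase, or None if the wordlist is exhausted."""
    for word in candidates:
        # WPA passphrases must be 8 to 63 characters, so skip anything else.
        if 8 <= len(word) <= 63 and check(wpa_psk(word, ssid)):
            return word
    return None
```

Because the SSID is the salt, precomputed tables only help against common network names, and the per-guess cost is fixed by the standard; what actually defeats the wordlist is a long, random passphrase that no dictionary contains.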

-----

Complete article/survey @tomshardware.com

Thursday, August 11. 2011

Does Facial Recognition Technology Mean the End of Privacy?

Via big think

by Dominic Basulto

-----

At the Black Hat security conference in Las Vegas, researchers from Carnegie Mellon demonstrated how the same facial recognition technology used to tag Facebook photos could be used to identify random people on the street. This facial recognition technology, when combined with geo-location, could fundamentally change our notions of personal privacy. In Europe, facial recognition technology has already stirred up its share of controversy, with German regulators threatening to sue Facebook for up to half a million dollars for violating European privacy rules. But it's not only Facebook - both Google (with PittPatt) and Apple (with Polar Rose) are also putting the finishing touches on new facial recognition technologies that could make it easier than ever before to connect our online and offline identities. If the eyes are the window to the soul, then your face is the window to your personal identity.

And it's for that reason that privacy advocates in both Europe and the USA are up in arms about the new facial recognition technology. What seems harmless at first - the ability to identify your friends in photos - could become something much more dangerous in the hands of anyone other than your friends, for one simple reason: your face is the key to linking your online and offline identities. It's one thing for law enforcement officials to have access to this technology, but what if your neighbor suddenly has the ability to snoop on you?

The researchers at Carnegie Mellon showed how a combination of simple technologies - a smart phone, a webcam and a Facebook account - was enough to identify people after only a three-second visual search. Once hackers can put together a face and the basics of a personal profile, like a birthday and hometown, they can start piecing together details like your Social Security Number and bank account information.

And the Carnegie Mellon technology used to show this? You guessed it - it's based on PittPatt (for Pittsburgh Pattern Recognition Technology), which was acquired by Google, meaning that you may soon be hearing the Pitter Patter of small facial recognition bots following you around any of Google's Web properties. The photo in your Google+ Profile, connected seamlessly to video clips of you from YouTube, effortlessly linked to photos of your family and friends in a Picasa album - all of these could be used to identify you and uncover your private identity. Thankfully, Google is not evil.

Forget being fingerprinted, it could be far worse to be Faceprinted. It's like the scene from The Terminator, where Arnold Schwarzenegger is able to identify his targets by employing a futuristic form of facial recognition technology. Well, the future is here.

Imagine a complete stranger taking a photo of you, immediately connecting that photo to every element of your personal identity, and using that to stalk you (or your wife or your daughter). It happened to reality TV star Adam Savage: when he uploaded a photo of his SUV parked outside his home to his Twitter page, he didn't realize that it included geo-tagging metadata. Within hours, people knew the exact location of his home. Or imagine walking into a store, and the sales floor staff doing a quick visual search with a smart phone camera, finding out what your likes and interests are via Facebook or Google, and then tailoring their sales pitch accordingly. It's targeted advertising, taken to the extreme.

Which is not to say that everything about facial recognition technology is scary and creepy. Gizmodo ran a great piece explaining all the "advantages" of being recognized online. (Yet, two days later, Gizmodo also ran a piece explaining how military spies could track you down almost instantly with facial recognition technology, no matter where you are in the world.)

Which raises the important question: Is Privacy a Right or a Privilege? Now that we're all celebrities in the Internet age, it doesn't take much to extrapolate that soon we'll all have the equivalent of Internet paparazzi incessantly snapping photos of us and intruding into our daily lives. Cookies, spiders, bots and spyware will seem positively Old School by then. The people with money and privilege and clout will be the people who will be able to erect barriers around their personal lives, living behind the digital equivalent of a gated community. The rest of us? We'll live our lives in public.
