Monday, April 24. 2017

Via The Real Daily (C.L. Brenton)

-----

We’ve been warned…

Remember the movie Surrogates, where everyone stays at home plugged into virtual reality screens while robot versions of them run around and do their bidding? Or Her, where Siri’s personality is so attractive and real that it’s possible to fall in love with an AI?

Replika may be the seedling of such a world, and there’s no denying the future implications of that kind of technology.

Replika is a personal chatbot that you can raise through SMS. By chatting with it, you teach it your personality and gain in-app points. Through the app it can chat with your friends and learn enough about you so that maybe one day it will take over some of your responsibilities like social media, or checking in with your mom. It’s in beta right now, so we haven’t gotten our hands on one, and there is little information available about it, but color me fascinated and freaked out based on what we already know.

AI is just that – artificial

I have never been a fan of technological advances that replace human interaction. Social media, in general, seems to replace and enhance traditional communication, which is fine. But robots that hug you when you’re grieving a lost child, or AI personalities that text your girlfriend sweet nothings for you, don’t seem like human enhancement; they feel like human loss.

You know that feeling when you get a text message from someone you care about, who cares about you? It’s that little dopamine rush, that little charge that gets your blood pumping, your heart racing. Just one ding, and your day could change. What if a robot’s text could make you feel that way?

It’s a real boy?

Replika began when one of its founders lost her roommate, Roman, to a car accident. Eugenia Kuyda, who had created Luka, a restaurant-recommending chatbot, realized that with all of her old text messages from Roman, she could create an AI that texts and chats just like him. When she offered the use of his chatbot to his friends and family, she found a new business.

People were communicating with their deceased friend, sibling, and child, like he was still there. They wanted to tell him things, to talk about changes in their lives, to tell him they missed him, to hear what he had to say back.

This is human loss and grieving tempered by an AI version of the dead, and the implications are severe. Your personality could be preserved in servers after you die. Your loved ones could feel like they’re talking to you when you’re six feet under. Doesn’t that make anyone else feel uncomfortable?

Bringing an X-file to life

If you think about your closest loved one dying, talking to them via chatbot may seem like a dream come true. But in the long run, continuing a relationship with a dead person via their AI avatar is dangerous. Maybe it will help you grieve in the short term, but what are we replacing? And is it worth it?

Imagine texting a friend, a parent, a sibling, a spouse instead of Replika. Wouldn’t your interaction with them be more valuable than your conversation with this personality? Because you’re building on a lifetime of friendship, one that has value after the conversation is over. One that can exist in real tangible life. One that can actually help you grieve when the AI replacement just isn’t enough. One that can give you a hug.

“One day it will do things for you,” Kuyda said in an interview with Bloomberg, “including keeping you alive. You talk to it, and it becomes you.”

Replacing you is so easy

This kind of rhetoric from Replika’s founder has to make you wonder if this app was intended as a sort of technological fountain of youth. You never have to “die” as long as your personality sticks around to comfort your loved ones after you pass. I could even see myself trying to cope with a terminal diagnosis by creating my own Replika to assist family members after I’m gone.

But it’s wrong, isn’t it? Isn’t it? Psychologically and socially wrong?

It all starts with a chatbot that replicates your personality. It begins with a woman who was just trying to grieve. This is a taste of the future, and a scary one too: one of clones, downloaded personalities, and a life that sticks around after you’re gone.

Monday, April 17. 2017

Via Tech Crunch

-----

It’s no secret

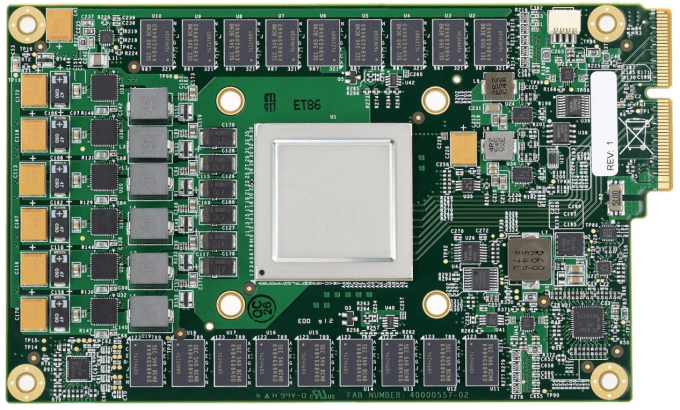

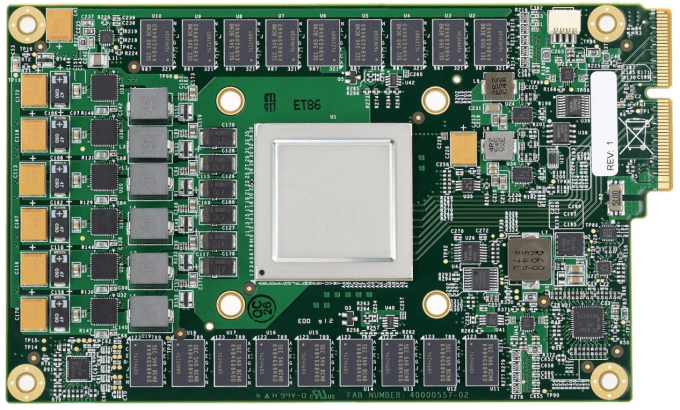

that Google has developed its own custom chips to accelerate its

machine learning algorithms. The company first revealed those chips,

called Tensor Processing Units (TPUs), at its I/O developer conference

back in May 2016, but it never went into all that many details about

them, except for saying that they were optimized around the company’s

own TensorFlow machine-learning framework. Today, for the first time, it’s sharing more details and benchmarks about the project.

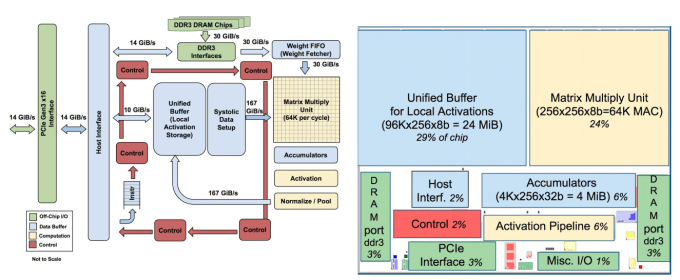

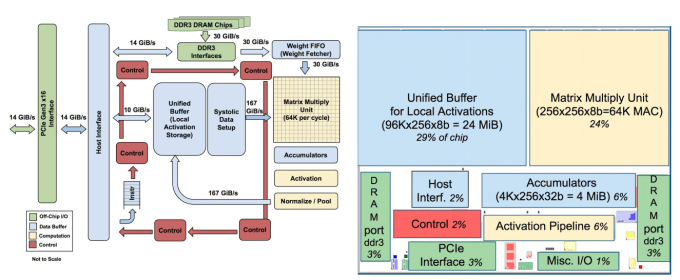

If you’re a chip designer, you can find all the gory glorious details of how the TPU works in Google’s paper.

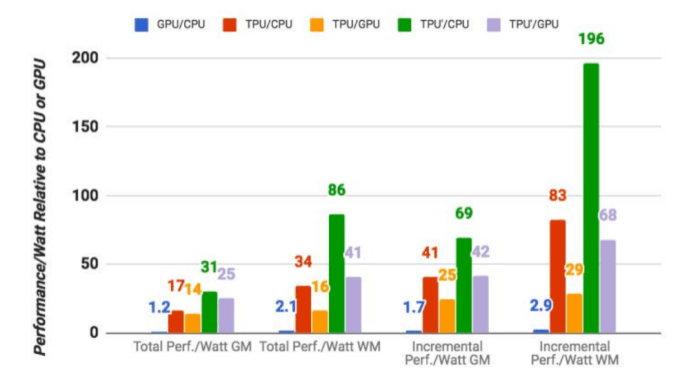

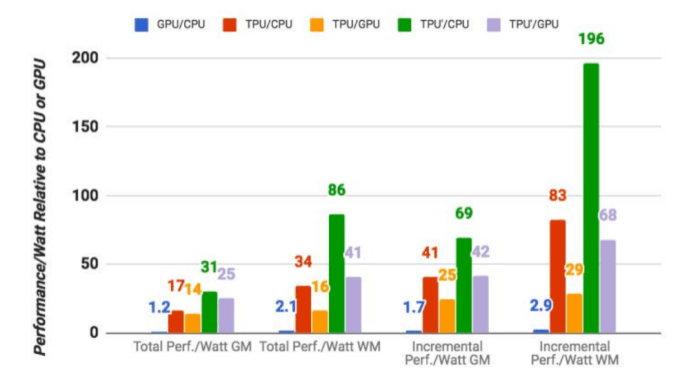

The numbers that matter most here, though, are that based on Google’s own benchmarks (and it’s worth keeping in mind that this is Google evaluating its own chip), the TPUs are on average 15x to 30x faster in executing Google’s regular machine learning workloads than a standard GPU/CPU combination (in this case, Intel Haswell processors and Nvidia K80 GPUs). And because power consumption counts in a data center, the TPUs also offer 30x to 80x higher TeraOps/Watt (and with faster memory in the future, those numbers will probably increase).

It’s worth noting that these numbers are about using machine learning models in production, by the way, not about creating the model in the first place.

Google also notes that most architects optimize their chips for convolutional neural networks (a specific type of neural network that works well for image recognition, for example). Google, however, says those networks account for only about 5 percent of its own data center workload, while the majority of its applications use multi-layer perceptrons.
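For readers unfamiliar with the term, here is a rough sketch of what running a multi-layer perceptron in production (inference) involves: each layer is a matrix-vector multiply plus a bias, followed by a simple nonlinearity. The layer sizes, weights, and function names below are made up for illustration and are not taken from Google’s paper; real workloads are vastly larger and run on specialized hardware rather than in plain Python.

```python
def relu(values):
    # Rectified linear unit: negative activations become zero.
    return [max(0.0, v) for v in values]


def dense(inputs, weights, bias):
    # One fully connected layer: each row of `weights` produces one output.
    return [
        sum(w * v for w, v in zip(row, inputs)) + b
        for row, b in zip(weights, bias)
    ]


def mlp_infer(inputs, layers):
    # Inference only: the weights are fixed and no training happens here.
    activations = inputs
    for index, (weights, bias) in enumerate(layers):
        activations = dense(activations, weights, bias)
        if index < len(layers) - 1:  # no nonlinearity after the last layer
            activations = relu(activations)
    return activations


# Hypothetical toy network: 2 inputs -> 2 hidden units -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.1]),
]
output = mlp_infer([2.0, 1.0], layers)
print(output)
```

Almost all of the work is in the multiply-and-add step inside `dense`, which is the kind of operation an inference accelerator is built to do in bulk.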

Google says it started looking into how it could use GPUs, FPGAs and custom ASICs (which is essentially what the TPUs are) in its data centers back in 2006. At the time, though, there weren’t all that many applications that could really benefit from this special hardware because most of the heavy workloads they required could just make use of the excess hardware that was already available in the data center anyway. “The conversation changed in 2013 when we projected that DNNs could become so popular that they might double computation demands on our data centers, which would be very expensive to satisfy with conventional CPUs,” the authors of Google’s paper write. “Thus, we started a high-priority project to quickly produce a custom ASIC for inference (and bought off-the-shelf GPUs for training).” The goal here, Google’s researchers say, “was to improve cost-performance by 10x over GPUs.”

Google isn’t likely to make the TPUs available outside of its own cloud, but the company notes that it expects that others will take what it has learned and “build successors that will raise the bar even higher.”

Monday, April 10. 2017

Via Tech Crunch

-----

A lot of top-tier video games enjoy long-tail lives with remasters and re-releases on different platforms, but the effort put into some games could pay dividends in a whole new way, as companies training things like autonomous cars, delivery drones and other robots are looking to rich, detailed virtual worlds to provide simulated training environments that mimic the real world.

Just as companies including Boom can now build supersonic jets with a small team and limited funds, thanks to advances made possible in simulation, startups like NIO (formerly NextEV) can now keep pace with larger tech concerns with ample funding in developing self-driving software, using simulations of real-world environments including those derived from games like Grand Theft Auto V. Bloomberg reports that the approach is increasingly popular among companies that are looking to supplement real-world driving experience, including Waymo and Toyota’s Research Institute.

There are some drawbacks, of course: Anyone will tell you that regardless of the industry, simulation can do a lot, but it can’t yet fully replace real-world testing, which always diverges in some ways from what you’d find in even the most advanced simulations. Also, miles driven in simulation don’t count toward the total-miles-driven figures for autonomous software that most regulatory bodies will actually care about in determining the road worthiness of self-driving systems.

The more surprising takeaway here is that GTA V in this instance isn’t a second-rate alternative to simulation software created for the purpose of testing autonomous driving software – it proves an incredibly advanced testing platform, because of the care taken in its open-world game design. That means there’s no reason these two market uses can’t be more aligned in future: Better, more comprehensive open-world game design means better play experiences for users looking for that truly immersive quality, and better simulation results for researchers who then leverage the same platforms as a supplement to real-world testing.
