Tuesday, October 29. 2013

Surprisingly simple scheme for self-assembling robots

Via MIT

-----

Small cubes with no exterior moving parts can propel themselves forward, jump on top of each other, and snap together to form arbitrary shapes.

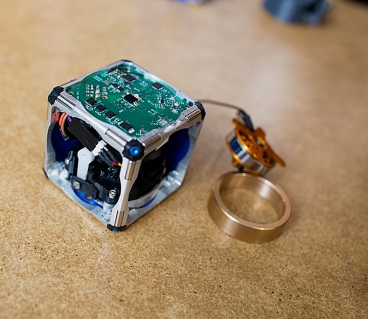

A prototype of a new modular robot, with its innards exposed and its flywheel (which gives it the ability to move independently) pulled out.

Photo: M. Scott Brauer

In 2011, when an MIT senior named John Romanishin proposed a new design for modular robots to his robotics professor, Daniela Rus, she said, “That can’t be done.”

Two years later, Rus showed her colleague Hod Lipson, a robotics researcher at Cornell University, a video of prototype robots, based on Romanishin’s design, in action. “That can’t be done,” Lipson said.

In November, Romanishin (now a research scientist in MIT’s Computer Science and Artificial Intelligence Laboratory, or CSAIL), Rus, and postdoc Kyle Gilpin will establish once and for all that it can be done, when they present a paper describing their new robots at the IEEE/RSJ International Conference on Intelligent Robots and Systems.

Known as M-Blocks, the robots are cubes with no external moving parts. Nonetheless, they’re able to climb over and around one another, leap through the air, roll across the ground, and even move while suspended upside down from metallic surfaces.

Inside each M-Block is a flywheel that can reach speeds of 20,000 revolutions per minute; when the flywheel is braked, it imparts its angular momentum to the cube. On each edge of an M-Block, and on every face, are cleverly arranged permanent magnets that allow any two cubes to attach to each other.
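To put the flywheel’s 20,000 rpm in physical terms, a short Python sketch converts it to angular velocity and computes the angular momentum L = Iω. The article gives no flywheel mass or size, so the 50 g, 2 cm solid disc used here is purely hypothetical, as is the solid-disc formula for its moment of inertia.

```python
import math

def rpm_to_rad_per_s(rpm: float) -> float:
    """Convert revolutions per minute to angular velocity in rad/s."""
    return rpm * 2.0 * math.pi / 60.0

def flywheel_angular_momentum(mass_kg: float, radius_m: float, rpm: float) -> float:
    """Angular momentum L = I * omega, modelling the flywheel as a solid disc.

    I = m * r**2 / 2 is an assumption; the real M-Block flywheel geometry
    is not given in the article.
    """
    inertia = 0.5 * mass_kg * radius_m ** 2
    return inertia * rpm_to_rad_per_s(rpm)

omega = rpm_to_rad_per_s(20_000)  # top speed quoted in the article
# Hypothetical flywheel: 50 g solid disc with a 2 cm radius.
momentum = flywheel_angular_momentum(0.05, 0.02, 20_000)
print(f"omega = {omega:.1f} rad/s, L = {momentum:.4f} kg*m^2/s")
```

The speed alone works out to roughly 2,094 rad/s. When the brake stops the flywheel abruptly, that angular momentum is handed to the cube (less friction and other losses), which is what makes the cube move.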

“It’s one of these things that the [modular-robotics] community has been trying to do for a long time,” says Rus, a professor of electrical engineering and computer science and director of CSAIL. “We just needed a creative insight and somebody who was passionate enough to keep coming at it, despite being discouraged.”

Embodied abstraction

As Rus explains, researchers studying reconfigurable robots have long used an abstraction called the sliding-cube model. In this model, if two cubes are face to face, one of them can slide up the side of the other and, without changing orientation, slide across its top.
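The sliding-cube model is easy to state on a grid. This minimal Python sketch assumes a 2D vertical slice of the lattice and ignores gravity and the global connectivity rules that full self-assembly planners enforce. It lists the cells a cube can reach with one straight slide along a flat surface, or with one convex “up the side and across the top” move around a neighbour’s corner. The function and helper names are hypothetical.

```python
from typing import Set, Tuple

Cell = Tuple[int, int]  # (x, z) lattice coordinates in a 2D vertical slice

DIRECTIONS = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def add(a: Cell, b: Cell) -> Cell:
    return (a[0] + b[0], a[1] + b[1])

def sliding_cube_moves(occupied: Set[Cell], cube: Cell) -> Set[Cell]:
    """Destinations reachable by one primitive move in a 2D sliding-cube model.

    Two primitives are modelled:
      * straight slide: move one cell in direction d along a flat surface
        formed by two neighbours on the same side (cube+n and cube+d+n);
      * convex transition: pivot around the corner of a single neighbour at
        cube+n, ending at cube+d+n (up the side, then across the top).
    Gravity and global connectivity are deliberately ignored.
    """
    moves: Set[Cell] = set()
    for d in DIRECTIONS:
        # the two directions perpendicular to d
        for n in ((d[1], d[0]), (-d[1], -d[0])):
            if add(cube, n) not in occupied:
                continue  # no neighbour on this side to slide along
            ahead = add(cube, d)
            diagonal = add(ahead, n)
            if ahead in occupied:
                continue  # blocked straight ahead
            if diagonal in occupied:
                moves.add(ahead)     # straight slide along a flat surface
            else:
                moves.add(diagonal)  # convex move around the neighbour's corner
    return moves

print(sliding_cube_moves({(0, 0), (1, 0)}, (0, 0)))
```

For two cubes side by side, the left cube can only roll over its neighbour’s corner, so the call returns `{(1, 1), (1, -1)}` (the second cell appears only because gravity is ignored). Real planners add the constraint that the whole structure stays connected after every move.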

The sliding-cube model simplifies the development of self-assembly algorithms, but the robots that implement them tend to be much more complex devices. Rus’ group, for instance, previously developed a modular robot called the Molecule, which consisted of two cubes connected by an angled bar and had 18 separate motors. “We were quite proud of it at the time,” Rus says.

According to Gilpin, existing modular-robot systems are also “statically stable,” meaning that “you can pause the motion at any point, and they’ll stay where they are.” What enabled the MIT researchers to drastically simplify their robots’ design was giving up on the principle of static stability.

“There’s a point in time when the cube is essentially flying through the air,” Gilpin says. “And you are depending on the magnets to bring it into alignment when it lands. That’s something that’s totally unique to this system.”

That’s also what made Rus skeptical about Romanishin’s initial proposal. “I asked him to build a prototype,” Rus says. “Then I said, ‘OK, maybe I was wrong.’”

Sticking the landing

To compensate for its static instability, the researchers’ robot relies on some ingenious engineering. On each edge of a cube are two cylindrical magnets, mounted like rolling pins. When two cubes approach each other, the magnets naturally rotate, so that north poles align with south, and vice versa. Any face of any cube can thus attach to any face of any other.

The cubes’ edges are also beveled, so when two cubes are face to face, there’s a slight gap between their magnets. When one cube begins to flip on top of another, the bevels, and thus the magnets, touch. The connection between the cubes becomes much stronger, anchoring the pivot. On each face of a cube are four more pairs of smaller magnets, arranged symmetrically, which help snap a moving cube into place when it lands on top of another.

As with any modular-robot system, the hope is that the modules can be miniaturized: the ultimate aim of most such research is hordes of swarming microbots that can self-assemble, like the “liquid steel” androids in the movie “Terminator II.” And the simplicity of the cubes’ design makes miniaturization promising.

But the researchers believe that a more refined version of their system could prove useful even at something like its current scale. Armies of mobile cubes could temporarily repair bridges or buildings during emergencies, or raise and reconfigure scaffolding for building projects. They could assemble into different types of furniture or heavy equipment as needed. And they could swarm into environments hostile or inaccessible to humans, diagnose problems, and reorganize themselves to provide solutions.

Strength in diversity

The researchers also imagine that among the mobile cubes could be special-purpose cubes, containing cameras, or lights, or battery packs, or other equipment, which the mobile cubes could transport. “In the vast majority of other modular systems, an individual module cannot move on its own,” Gilpin says. “If you drop one of these along the way, or something goes wrong, it can rejoin the group, no problem.”

“It’s one of those things that you kick yourself for not thinking of,” Cornell’s Lipson says. “It’s a low-tech solution to a problem that people have been trying to solve with extraordinarily high-tech approaches.”

“What they did that was very interesting is they showed several modes of locomotion,” Lipson adds. “Not just one cube flipping around, but multiple cubes working together, multiple cubes moving other cubes: a lot of other modes of motion that really open the door to many, many applications, much beyond what people usually consider when they talk about self-assembly. They rarely think about parts dragging other parts, this kind of cooperative group behavior.”

In ongoing work, the MIT researchers are building an army of 100 cubes, each of which can move in any direction, and designing algorithms to guide them. “We want hundreds of cubes, scattered randomly across the floor, to be able to identify each other, coalesce, and autonomously transform into a chair, or a ladder, or a desk, on demand,” Romanishin says.

Monday, October 28. 2013

Cuttable, Foldable Sensors Can Add Multi-Touch To Any Device

Via TechCrunch

-----

Researchers at the MIT Media Lab and the Max Planck Institutes have created a foldable, cuttable multi-touch sensor that works no matter how you cut it, allowing multi-touch input on nearly any surface.

In traditional sensors the connections are laid out in a grid, and when one part of the grid is damaged you lose sensitivity across a wide swathe of other sensors. This system lays the sensors out like a star, which means that cutting away part of the sensor only affects the parts further down the line. For example, you can cut the corners off a square and still get the sensor to work, or even cut all the way down to the main, central connector array and, as long as there are still sensors on the surface, it will pick up input.
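A toy reachability model shows why a star (tree) wiring layout fails gracefully. Each electrode’s trace is modelled as a chain of parent links back to a central connector, and an electrode keeps working only if nothing on that path has been cut away. The node names are hypothetical and the model is a simplification, not the layout from the paper; a daisy chain, in which electrodes share one trace, stands in for a shared-line design.

```python
from typing import Dict, Optional, Set

def connected_electrodes(parent: Dict[str, Optional[str]], removed: Set[str]) -> Set[str]:
    """Electrodes that still have an intact wiring path to the connector.

    `parent` maps each node to the next node on its trace toward the central
    connector (None marks the connector itself). A node is lost if it, or any
    node between it and the connector, has been cut away.
    """
    alive: Set[str] = set()
    for node in parent:
        current: Optional[str] = node
        intact = True
        while current is not None:
            if current in removed:
                intact = False
                break
            current = parent[current]
        if intact and parent[node] is not None:  # skip the connector itself
            alive.add(node)
    return alive

# Hypothetical layouts: every electrode wired straight to the hub (star),
# versus electrodes strung one after another on a shared trace (chain).
star = {"hub": None, "e1": "hub", "e2": "hub", "e3": "hub", "e4": "hub"}
chain = {"hub": None, "e1": "hub", "e2": "e1", "e3": "e2", "e4": "e3"}
print(sorted(connected_electrodes(star, {"e2"})))   # ['e1', 'e3', 'e4']
print(sorted(connected_electrodes(chain, {"e2"})))  # ['e1']
```

Cutting one electrode from the star costs only that electrode, while the same cut on the chain also disables everything downstream of it.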

The team that created it, Simon Olberding, Nan-Wei Gong, John Tiab, Joseph A. Paradiso, and Jürgen Steimle, write:

This very direct manipulation allows the end-user to easily make real-world objects and surfaces touch interactive, to augment physical prototypes and to enhance paper craft. We contribute a set of technical principles for the design of printable circuitry that makes the sensor more robust against cuts, damages and removed areas. This includes novel physical topologies and printed forward error correction.

You can read the research paper here but this looks to be very useful in the DIY hacker space as well as for flexible, wearable projects that require some sort of multi-touch input. While I can’t imagine we need shirts made of this stuff, I could see a sleeve with lots of inputs or, say, a watch with a multi-touch band.

Don’t expect this to hit the next iWatch any time soon – it’s still very much in prototype stages but definitely looks quite cool.

Thursday, October 24. 2013

IBM unveils computer fed by 'electronic blood'

Via BBC

-----

Is liquid fuel the key to zettascale computing? Dr Patrick Ruch with IBM's test kit

IBM has unveiled a prototype of a new brain-inspired computer powered by what it calls "electronic blood".

The firm says it is learning from nature by building computers fuelled and cooled by a liquid, like our minds.

The human brain packs phenomenal computing power into a tiny space and runs on only about 20 watts of power - an efficiency IBM is keen to match.

Its new "redox flow" system pumps an electrolyte "blood" through a computer, carrying power in and taking heat out.

A very basic model was demonstrated this week at the technology giant's Zurich lab by Dr Patrick Ruch and Dr Bruno Michel.

Their vision is that by 2060, a one-petaflop computer that would fill half a football field today will fit on your desktop.

"We want to fit a supercomputer inside a sugarcube. To do that, we need a paradigm shift in electronics - we need to be motivated by our brain," says Michel.

"The human brain is 10,000 times more dense and efficient than any computer today.

"That's possible because it uses only one - extremely efficient - network of capillaries and blood vessels to transport heat and energy - all at the same time."

IBM's brainiest computer to date is Watson, which famously trounced two champions of the US TV quiz show Jeopardy.

The victory was hailed as a landmark for cognitive computing - machine had surpassed man.

The future of computing? IBM's model uses a liquid to deliver power and remove heat

But the contest was unfair, says Michel. The brains of Ken Jennings and Brad Rutter ran on only 20 watts of power, whereas Watson needed 85,000 watts.

Energy efficiency - not raw computing power - is the guiding principle for the next generation of computer chips, IBM believes.

Our current 2D silicon chips, which for half a century have doubled in power through Moore's Law, are approaching a physical limit where they cannot shrink further without overheating.

Bionic vision

"The computer industry uses $30bn of energy and throws it out of the window. We're creating hot air for $30bn," says Michel.

"Ninety-nine per cent of a computer's volume is devoted to cooling and powering. Only 1% is used to process information. And we think we've built a good computer?"

"The brain uses 40% of its volume for functional performance - and only 10% for energy and cooling."

Michel's vision is for a new "bionic" computing architecture, inspired by one of the laws of nature - allometric scaling - under which an animal's metabolic power grows with its body size, but more slowly than its mass.

An elephant, for example, weighs as much as a million mice. But it consumes roughly 30 times less energy than all those mice combined, and can perform a task even a million mice cannot accomplish.
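The "30 times" figure is consistent with Kleiber's law, an empirical allometric rule (not named in the article) under which metabolic power scales roughly as body mass to the 3/4 power. A quick Python check, assuming that exponent:

```python
def kleiber_ratio(mass_ratio: float, exponent: float = 0.75) -> float:
    """How many times less power one big animal needs than many small ones.

    Assumes metabolic power P is proportional to M**exponent (Kleiber's law
    uses roughly 3/4). One animal mass_ratio times heavier than a mouse draws
    mass_ratio**exponent "mouse units"; mass_ratio mice draw mass_ratio units.
    """
    one_big_animal = mass_ratio ** exponent
    many_small_animals = mass_ratio
    return many_small_animals / one_big_animal

print(f"{kleiber_ratio(1_000_000):.1f}")  # 31.6
```

Under this assumption the elephant draws about 31,600 "mouse units" of power against 1,000,000 for the mice, a ratio of about 31.6. The 3/4 exponent is itself an approximation, and fitted values vary between groups of animals.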

The same principle holds true in computing, says Michel, whose bionic vision has three core design features.

The first is 3D architecture, with chips stacked high, and memory storage units interwoven with processors.

"It's the difference between a low-rise building, where everything is spread out flat, and a high rise building. You shorten the connection distances," says Matthias Kaiserswerth, director of IBM Zurich.

But there is a very good reason today's chips are gridiron pancakes - exposure to the air is critical to dissipate the intense heat generated by ever-smaller transistors.

Piling chips on top of one another locks this heat inside - a major roadblock to 3D computing.

IBM's solution is integrated liquid cooling - where chips are interlayered with tiny water pipes.

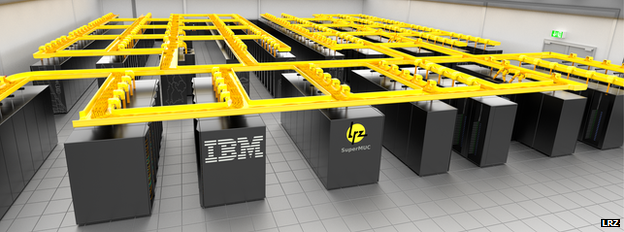

The art of liquid cooling has been demonstrated by Aquasar and put to work inside the German supercomputer SuperMUC which - perversely - harnesses warm water to cool its circuits.

SuperMUC consumes 40% less electricity as a result.

Liquid engineering

But for IBM to truly match the marvels of the brain, there is a third evolutionary step it must achieve - simultaneous liquid fuelling and cooling.

Just as blood delivers sugar with one hand and takes away heat with the other, IBM is looking for a fluid that can multitask.

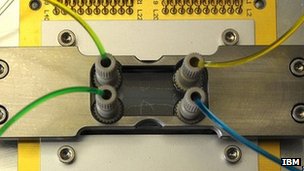

Vanadium is the best performer in their current laboratory test system - a type of redox flow unit - similar to a simple battery.

First a liquid - the electrolyte - is charged via electrodes, then pumped into the computer, where it discharges energy to the chip.
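The "charge, pump, discharge" cycle can be put into numbers with Faraday's law: each mole of vanadium ions that changes oxidation state by one step moves one mole of electrons (about 96,485 coulombs). This Python sketch assumes a 1.6 mol/L vanadium electrolyte and a 1.26 V cell voltage, typical textbook-style values for vanadium flow cells rather than figures from IBM, and computes the theoretical energy per litre and the power carried by a given flow rate.

```python
FARADAY = 96_485.33  # coulombs per mole of electrons

def energy_wh_per_litre(conc_mol_per_l: float, cell_voltage: float) -> float:
    """Theoretical energy in one litre of a one-electron redox electrolyte."""
    charge_c = conc_mol_per_l * FARADAY    # one electron per vanadium ion
    return charge_c * cell_voltage / 3600  # joules -> watt-hours

def power_w(conc_mol_per_l: float, cell_voltage: float, flow_l_per_s: float) -> float:
    """Upper bound on power if every ion pumped in is fully discharged."""
    return conc_mol_per_l * FARADAY * flow_l_per_s * cell_voltage

CONC = 1.6      # mol/L, assumed
VOLTAGE = 1.26  # volts, assumed
print(f"{energy_wh_per_litre(CONC, VOLTAGE):.1f} Wh per litre")
print(f"{power_w(CONC, VOLTAGE, 0.001):.0f} W at 1 mL/s")
```

That comes to about 54 Wh per litre of one electrolyte and roughly 195 W for each millilitre per second of flow, both theoretical upper bounds. A flow cell also needs a second electrolyte, so real systems store considerably less per litre of total liquid, and losses reduce the delivered power further.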

SuperMUC uses liquid cooling instead of air - a model for future computer designs

Redox flow is far from a new technology, and neither is it especially complex.

But IBM is the first to stake its chips on this "electronic blood" as the food of future computers - and will attempt to optimise it over the coming decades to achieve zettascale computing.

"To power a zettascale computer today would take more electricity than is produced in the entire world," says Michel.

He is confident that the design hurdles in his bionic model can be surmounted - not least that a whole additional unit is needed to charge the liquid.

And while other labs are betting on spintronics, quantum computing, or photonics to take us beyond silicon, the Zurich team believes the real answer lies right behind our eyes.

"Just as computers help us understand our brains, if we understand our brains we'll make better computers," says director Matthias Kaiserswerth.

He would like to see a future Watson win Jeopardy on a level playing field.

A redox flow test system - the different coloured liquids have different oxidation states

Other experts in computing agree that IBM's 3D principles are sound. But as to whether bionic computing will be the breakthrough technology, the jury is out.

"The idea of using a fluid to both power and cool strikes me as very novel engineering - killing two birds with one stone," says Prof Alan Woodward, of the University of Surrey's computing department.

"But every form of future computing has its champions - whether it be quantum computing, DNA computing or neuromorphic computing.

"There is a long way to go from the lab to having one of these sitting under your desk."

Prof Steve Furber, leader of the SpiNNaker project, agrees that "going into the third dimension" has more to offer than continually shrinking transistors.

"The big issue with 3D computing is getting the heat out - and liquid cooling could be very effective if integrated into 3D systems as proposed here," he told the BBC.

"But all of the above will not get electronics down to the energy-efficiency of the brain.

"That will require many more changes, including a move to analogue computation instead of digital.

"It will also involve breakthroughs in new non-Turing models of computation, for example based on an understanding of how the brain processes information."

Tuesday, October 15. 2013

Qualcomm Neural Processing Units to mimic human brains for your phone

Via SlashGear

-----

Qualcomm is readying a new kind of artificial brain chip, dubbed neural processing units (NPUs), modeling human cognition and opening the door to phones, computers, and robots that could be taught in the same ways that children learn. The first NPUs are likely to go into production by 2014, CTO Matt Grob confirmed at the MIT Technology Review EmTech conference, with Qualcomm in talks with companies about using the specialist chips for artificial vision, more efficient and contextually-aware smartphones and tablets, and even potentially brain implants.

According to Grob, the advantage of NPUs over traditional chips like Qualcomm’s own Snapdragon range will be in how they can be programmed. Instead of explicitly instructing the chips in how processing should take place, developers would be able to teach the chips by example.

“This ‘neuromorphic’ hardware is biologically inspired – a completely different architecture – and can solve a very different class of problems that conventional architecture is not good at,” Grob explained of the NPUs. “It really uses physical structures derived from real neurons – parallel and distributed.” As a result, “this is a kind of machine that can learn, and be programmed without software – be programmed the way you teach your kid,” Grob predicted.

However, it’s not only learning robots that will benefit from the NPUs, Qualcomm says. “We want to make it easier for researchers to make a part of the brain,” Grob said, bringing abilities like classification and prediction to a new generation of electronics. That might mean computers that are better able to filter large quantities of data to suit the particular needs of the user at any one time, smartphone assistants like Google Now with supercharged contextual intuition, and autonomous cars that can dynamically recognize and understand potential perils in the road ahead.

The first partnerships actually implementing NPUs in that way are likely to come in 2014, Grob confirmed, with Qualcomm envisaging hugely parallel arrays of the chips being put into practice to model how humans might handle complex problems.
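Qualcomm hasn’t described the NPUs’ programming interface, so what follows is only a generic illustration of “teaching by example”. A classic perceptron, written in plain Python, is never told the rule for logical OR; it adjusts its weights from labelled examples until it stops making mistakes. Neuromorphic chips are far more elaborate, and nothing here reflects Qualcomm’s actual design.

```python
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[int], int]

def predict(w: List[float], b: float, x: Sequence[int]) -> int:
    """Fire (1) if the weighted sum of inputs plus bias is positive."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(examples: List[Example], epochs: int = 20,
                     lr: float = 1.0) -> Tuple[List[float], float]:
    """Learn weights from labelled examples with the perceptron rule."""
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in examples:
            err = target - predict(w, b, x)
            if err:  # only adjust on a wrong answer
                mistakes += 1
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
        if mistakes == 0:  # every example is now classified correctly
            break
    return w, b

inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
or_examples = list(zip(inputs, [0, 1, 1, 1]))
w, b = train_perceptron(or_examples)
print([predict(w, b, x) for x in inputs])  # [0, 1, 1, 1]
```

The same training function learns AND from a different set of labels with no change to the code; only the examples differ, which is the essence of programming by example.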

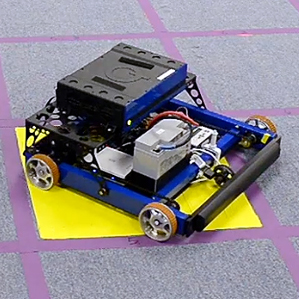

In fact, Qualcomm already has a learning machine in its labs that uses the same sort of biologically-inspired programming system that the NPUs will enable. A simple wheeled robot, it’s capable of rediscovering a goal location after being told just once that it’s reached the right point.


Monday, October 14. 2013

MIT's 'Kinect of the future' looks through walls with X-ray like vision

Via PCWorld

-----

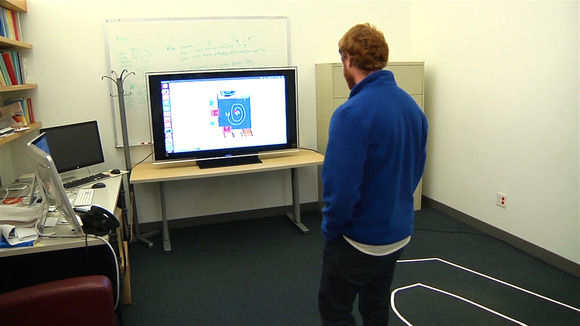

Massachusetts Institute of Technology researchers have developed a device that can see through walls and pinpoint a person with incredible accuracy. They call it the “Kinect of the future,” after Microsoft’s Xbox 360 motion-sensing camera.

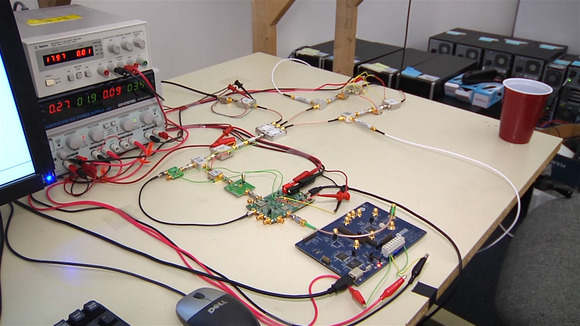

Shown publicly this week for the first time, the project from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) used three radio antennas spaced about a meter apart and pointed at a wall.

Photo: Nick Barber


A desk cluttered with wires and circuits generated and interpreted the radio waves. On the other side of the wall, a person walked around the room and the system represented that person as a red dot on a computer screen. The system tracked the movements with an accuracy of plus or minus 10 centimeters, which is about the width of an adult hand.

Fadel Adib, a Ph.D. student on the project, said that gaming could be one use for the technology, but that localization is also very important. He said that Wi-Fi localization, or determining someone’s position based on Wi-Fi, requires the user to hold a transmitter, such as a smartphone.

“What we’re doing here is localization through a wall without requiring you to hold any transmitter or receiver [and] simply by using reflections off a human body,” he said. “What is impressive is that our accuracy is higher than even state of the art Wi-Fi localization.”
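The article doesn’t detail the signal processing, so this is a generic illustration rather than the MIT team’s algorithm. If each antenna measures the round-trip time of a radio signal reflected off the body, that time gives a distance, and distances from three antennas constrain the person’s position. This 2D Python sketch assumes co-located transmit and receive at each antenna, antennas on a line parallel to the wall, and a person on the far side; all positions are hypothetical.

```python
import math
from typing import List, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def round_trip_to_range(t_seconds: float) -> float:
    """Distance to the reflector from a round-trip time of flight."""
    return SPEED_OF_LIGHT * t_seconds / 2.0

def locate_2d(antenna_x: List[float], ranges: List[float]) -> Tuple[float, float]:
    """Position (x, y) of a reflector from its ranges to antennas on y = 0.

    Assumes the target is on the far side of the antenna line (y > 0).
    """
    a0, r0 = antenna_x[0], ranges[0]
    # Subtracting the first circle equation from each of the others leaves a
    # linear equation c * x = b; solve them together by least squares.
    num = den = 0.0
    for a, r in zip(antenna_x[1:], ranges[1:]):
        c = 2.0 * (a - a0)
        b = (a * a - a0 * a0) - (r * r - r0 * r0)
        num += c * b
        den += c * c
    x = num / den
    # Average the y^2 estimates from every antenna, clamping noise below zero.
    y_sq = sum(r * r - (x - a) ** 2 for a, r in zip(antenna_x, ranges)) / len(ranges)
    return x, math.sqrt(max(y_sq, 0.0))

antennas = [-1.0, 0.0, 1.0]  # hypothetical positions, about a meter apart
person = (1.3, 4.0)          # hypothetical true position, meters
times = [2 * math.hypot(person[0] - a, person[1]) / SPEED_OF_LIGHT for a in antennas]
ranges = [round_trip_to_range(t) for t in times]
print(locate_2d(antennas, ranges))  # approximately (1.3, 4.0)
```

For scale, a 10 cm error in range corresponds to a round-trip timing difference of only about 0.67 nanoseconds (0.2 m divided by the speed of light), which is why this kind of tracking needs very precise timing.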

He said that he hopes further iterations of the project will offer a real-time silhouette rather than just a red dot.

In the room where users walked around there was white tape on the floor in a circular design. The tape was also shown in the virtual representation of the room on the computer screen. It wasn’t being used as an aid to the technology; rather, it showed onlookers just how accurate the system was. As testers walked along the floor design, their actions were mirrored on the computer screen.

One of the drawbacks of the system is that it can only track one moving person at a time and the area around the project needs to be completely free of movement. That meant that when the group wanted to test the system they would need to leave the room with the transmitters as well as the surrounding area; only the person being tracked could be nearby.

At the CSAIL facility the researchers had the system set up between two offices that shared an interior wall. To operate it, onlookers needed to stand about a meter or two outside both offices so as not to create interference for the system.


The system can only track one person at a time, but that doesn’t mean two people can’t be in the same room at once. As long as one person is relatively still the system will only track the person that is moving.

The group is working on making the system even more precise. “We now have an initial algorithm that can tell us if a person is just standing and breathing,” Adib said. He also showed how raising an arm could be tracked using radio signals: the red dot would move slightly to the side where the arm was raised.

Adib also said that unlike previous versions of the project that used Wi-Fi, the new system allows for 3D tracking and could be useful in telling when someone has fallen at home.

The system now is quite bulky. It takes up an entire desk that is strewn with wires and then there’s also the space used by the antennas.

“We can put a lot of work into miniaturizing the hardware,” said researcher Zach Kabelac, a master’s student at MIT. He said that the antennas don’t need to be as far apart as they are now.

“We can actually bring these closer together to the size of a Kinect [sensor] or possibly smaller,” he said. That would mean that the system would “lose a little bit of accuracy,” but that it would be minimal.

The researchers filed a patent this week and while there are no immediate plans for commercialization the team members were speaking with representatives from major wireless and component companies during the CSAIL open house.

Wednesday, October 09. 2013

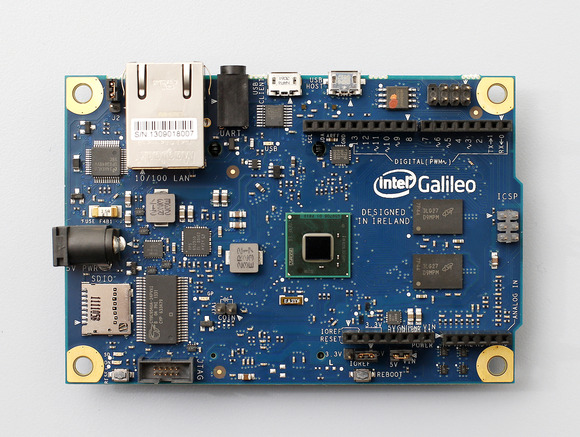

Intel outfits open-source Galileo DIY computer with new Quark chip

Via pcworld

-----

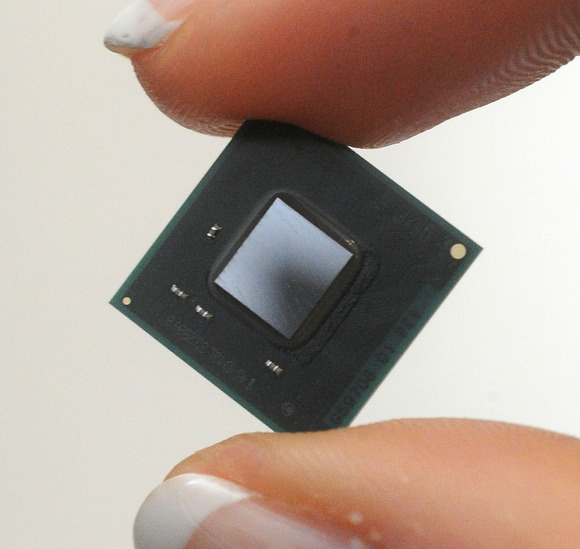

With its first computer based on the extremely low-power Quark processor, Intel is tapping into the 'maker' community to figure out ways the new chip could be best used.

The chip maker announced the Galileo computer -- which is a board without a case -- with the Intel Quark X1000 processor on Thursday. The board is targeted at the community of do-it-yourself enthusiasts who make computing devices ranging from robots and health monitors to home media centers and PCs.

The Galileo board should become widely available for under $60 by the end of November, said Mike Bell, vice president and general manager of the New Devices Group at Intel.

Bell hopes the maker community will use the board to build prototypes and debug devices. The Galileo board will be open-source, and the schematics will be released over time so it can be replicated by individuals and companies.

Bell's New Devices Group is investigating business opportunities in the emerging markets of wearable devices and the "Internet of things." The chip maker launched the extremely low-power Quark processor for such devices last month.

"People want to be able to use our chips to do creative things," Bell said. "All of the coolest devices are coming from the maker community."

But at around $60, the Galileo will be more expensive than the popular Raspberry Pi, which is based on an ARM processor and sells for $25. The Raspberry Pi can also render 1080p graphics, which Intel's Galileo can't match.

Making inroads in the enthusiast community

Questions also remain on whether Intel's overtures will be accepted by the maker community, which embraces the open-source ethos of a community working together to tweak hardware designs. Intel has made a lot of contributions to the Linux OS, but has kept its hardware designs secret. Intel's efforts to reach out to the enthusiast community are recent; the company's first open-source PC went on sale in July.

Intel is committed long-term to the enthusiast community, Bell said.

Intel also announced a partnership with Arduino, which provides a software development environment for the Galileo motherboard. The enthusiast community has largely relied on Arduino microcontrollers and boards with ARM processors to create interactive computing devices.

The Galileo is equipped with a 32-bit Quark SoC X1000 CPU, which has a clock speed of 400MHz and is based on the x86 Pentium Instruction Set Architecture. The Galileo board supports Linux OS and the Arduino development environment. It also supports standard data transfer and networking interfaces such as PCI-Express, Ethernet and USB 2.0.

Intel has demonstrated its Quark chip running in eyewear and a medical patch to check for vitals. The company has also talked about the possibility of using the chip in personalized medicine, sensor devices and cars.

Intel hopes creating interactive computing devices with Galileo will be easy. Writing applications for the board is as simple as writing programs for standard microcontrollers, thanks to its support for the Arduino development environment.

"Essentially it's transparent to the development," Bell said.

Intel is shipping out 50,000 Galileo boards for free to students at over 1,000 universities over the next 18 months.

Friday, October 04. 2013

The First Carbon Nanotube Computer: The Hyper-Efficient Future Is Here

Via Gizmodo

-----

Coming just a year after the creation of the first carbon nanotube computer chip, scientists have just built the very first actual computer with a central processor centered entirely around carbon nanotubes. Which means the future of electronics just got tinier, more efficient, and a whole lot faster.

Built by a group of engineers at Stanford University, the computer itself is relatively basic compared to what we're used to today. In fact, according to Subhasish Mitra, an electrical engineer at Stanford and project co-leader, its capabilities are comparable to an Intel 4004, Intel's first microprocessor, released in 1971. It can switch between basic tasks (like counting and organizing numbers) and send data back to an external memory, but that's pretty much it. Of course, the slowness is partially due to the fact that the computer wasn't exactly built under the best conditions, as MIT Technology Review explains:

"Don't let that fool you, though—this is just the first step. Last year, IBM proved that carbon nanotube transistors can run about three times as fast as the traditional silicon variety, and we'd already managed to arrange over 10,000 carbon nanotubes onto a single chip. They just hadn't connected them in a functioning circuit. But now that we have, the future is looking awfully bright."

Theoretically, carbon nanotube computing would be an order of magnitude faster than what we've seen thus far with any material. And since carbon nanotubes naturally dissipate heat at an incredible rate, computers made out of the stuff could hit blinding speeds without even breaking a sweat. So the speed limits we have using silicon, which doesn't do so well with heat, would be effectively obliterated.

The future of breakneck computing doesn't come without its little speedbumps, though. One of the problems with nanotubes is that they grow in a generally haphazard fashion, and some of them even come out with metallic properties, short-circuiting whatever transistor they end up in. To overcome these challenges, the researchers at Stanford had to use electricity to vaporize any metallic nanotubes that cropped up, and they formulated design algorithms that would be able to function regardless of any nanotube alignment problems. Now it's just a matter of scaling their lab-grown methods to an industrial level, which is easier said than done.
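The metallic-tube problem compounds quickly. Assuming, as is commonly cited for randomly grown tubes, that about one tube in three comes out metallic, and that tubes are independent, the chance that a transistor built from n parallel tubes contains no metallic tube at all is (2/3)^n. A quick Python sketch of that arithmetic; the probability and the independence are modelling assumptions, not figures from the Stanford work.

```python
def chance_no_metallic(n_tubes: int, p_metallic: float = 1 / 3) -> float:
    """Probability that none of n independently grown tubes is metallic.

    p_metallic = 1/3 is an assumed, commonly cited figure for tubes grown
    with random chirality; independence is also an assumption.
    """
    return (1.0 - p_metallic) ** n_tubes

for n in (1, 5, 10, 20):
    print(f"{n:>2} tubes: {chance_no_metallic(n):.4f}")
```

With ten tubes the chance of a clean transistor is only about 1.7%, which is why actively removing metallic tubes after growth, rather than hoping to avoid them, matters.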

Still, despite the limitations at hand, this huge advancement just put another nail in silicon's ever-looming coffin.

Image: Norbert von der Groeben/Stanford

Wednesday, October 02. 2013

Scientists want to turn smartphones into earthquake sensors

Via The Verge

-----

For years, scientists have struggled to collect accurate real-time data on earthquakes, but a new article published today in the Bulletin of the Seismological Society of America may have found a better tool for the job, using the same accelerometers found in most modern smartphones. The article finds that the MEMS accelerometers in current smartphones are sensitive enough to detect earthquakes of magnitude five or higher when located near the epicenter. Because the devices are so widely used, scientists speculate future smartphone models could be used to create an "urban seismic network," transmitting real-time geological data to authorities whenever a quake takes place.

The authors pointed as inspiration to Stanford's Quake-Catcher Network, which connects seismographic equipment to volunteer computers to create a similar network. But smartphone accelerometers would be cheaper, and the phones are easier to carry into extreme environments. The sensor will need to become more sensitive before it can be used in the field, but the authors say once technology catches up, a smartphone accelerometer could be the perfect earthquake research tool. As one researcher told The Verge, "right from the start, this technology seemed to have all the requirements for monitoring earthquakes — especially in extreme environments, like volcanoes or underwater sites."
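The article doesn't say how phones would decide that a quake is under way. One standard seismological trigger is the STA/LTA ratio: compare a short-term average of signal energy with a long-term average, and flag an event when the ratio jumps. This Python sketch implements one common variant in which both windows end at the current sample; the window lengths, threshold and synthetic trace are illustrative assumptions, not values from the paper.

```python
from typing import List, Optional

def sta_lta_trigger(samples: List[float], sta_len: int, lta_len: int,
                    threshold: float) -> Optional[int]:
    """Index of the first sample where STA/LTA of signal energy reaches threshold.

    Both averaging windows end at the current sample (one common variant);
    returns None if the ratio never reaches the threshold.
    """
    if not 0 < sta_len < lta_len:
        raise ValueError("need 0 < sta_len < lta_len")
    energy = [s * s for s in samples]
    for i in range(lta_len - 1, len(energy)):
        sta = sum(energy[i - sta_len + 1:i + 1]) / sta_len
        lta = sum(energy[i - lta_len + 1:i + 1]) / lta_len
        if lta > 0 and sta / lta >= threshold:
            return i
    return None

# Synthetic accelerometer trace: quiet background, then strong shaking.
quiet = [0.01] * 200
shaking = [1.0] * 50
print(sta_lta_trigger(quiet + shaking, sta_len=5, lta_len=50, threshold=4.0))
```

Here the trigger fires at index 200, the first shaking sample. A phone's accelerometer also measures gravity, so in practice the constant offset would be removed (for example by high-pass filtering) before computing energy.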

Monday, September 30. 2013

The $400 Deltaprintr Is A Cheap Way To Make Really Big 3D Prints

Via TechCrunch

-----

Another day, another 3D printer. This time we have a model that comes from SUNY Purchase College where they are working on a laser-cut, compact 3D printer that can make extra tall models simply by swapping out a few pieces.

The printer pumps out plastic at a 100-micron layer height, a more than acceptable resolution, and uses very few moving parts. You’ll notice that the print head rides up three rails. This would allow you to add longer bars or extensions to print objects taller than the standard build height.
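The article doesn't describe the motion system beyond the three rails, but a head carried by three vertical rails is the classic delta arrangement, which the printer's name suggests. Assuming idealized linear-delta kinematics, each carriage's height for a target nozzle position follows from the Pythagorean theorem, and the tallest printable point grows one-for-one with rail length, which is why longer rails mean taller prints. The tower radius, arm length and rail lengths are hypothetical, and effector and carriage offsets are ignored.

```python
import math
from typing import List

def carriage_heights(x: float, y: float, z: float,
                     tower_radius: float, arm_length: float) -> List[float]:
    """Inverse kinematics for an idealized linear delta printer.

    Towers sit at 90, 210 and 330 degrees on a circle of tower_radius; each
    carriage is joined to the nozzle by a rigid arm of arm_length. Effector
    and carriage offsets are ignored (an assumption for clarity).
    """
    heights = []
    for angle_deg in (90.0, 210.0, 330.0):
        a = math.radians(angle_deg)
        tx, ty = tower_radius * math.cos(a), tower_radius * math.sin(a)
        horizontal_sq = (x - tx) ** 2 + (y - ty) ** 2
        if horizontal_sq > arm_length ** 2:
            raise ValueError("point is out of reach of the arms")
        heights.append(z + math.sqrt(arm_length ** 2 - horizontal_sq))
    return heights

def max_print_height(rail_length: float, tower_radius: float,
                     arm_length: float) -> float:
    """Highest nozzle z at the bed centre before a carriage hits the rail top."""
    return rail_length - math.sqrt(arm_length ** 2 - tower_radius ** 2)

# Hypothetical geometry in millimetres.
print(carriage_heights(0.0, 0.0, 10.0, tower_radius=100.0, arm_length=200.0))
print(max_print_height(500.0, 100.0, 200.0), max_print_height(800.0, 100.0, 200.0))
```

In this model, swapping 500 mm rails for 800 mm ones raises the maximum print height by exactly 300 mm.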

Created by students Shai Schechter, Andrey Kovalev, Yasick Nemenov and Eugene Sokolov, the project is currently in pre-beta and they aim to launch a crowdfunding campaign in November. You can sign up for updates here.

The team hopes to make the product completely open source and because it uses very few expensive parts they’re able to price it very aggressively. While I love projects like these, I’m anxious to see how they build their software – one of the most important parts of a 3D printing package. As long as it’s solid I’d totally be down with this cool rig.

Monday, September 23. 2013

Qualcomm joins Wireless Power Consortium board, sparks hope for A4WP and Qi unification

Via Engadget

-----

Qualcomm, a founding member of the Alliance for Wireless Power (A4WP), made a surprise move today by joining the management board of the rival Wireless Power Consortium (WPC), the group behind the already commercially available Qi standard. This is quite an interesting development considering that both alliances have been openly critical of each other, and yet now there's a chance of seeing a single standard that gets the best of both worlds. That, of course, depends on Qualcomm's real intentions in joining the WPC.

While Qi is now a well-established ecosystem backed by 172 companies, its current "first-gen" inductive charging method is somewhat sensitive to the alignment of the devices on the charging mats (though there has been a recent breakthrough). Another issue is that Qi can currently provide only up to 5W of power (which depends on both the quality of the coil and the operating frequency), and this may not be sufficient for charging large devices at a reasonable pace. For instance, even with the 10W USB adapter, the iPad takes hours to fully charge, let alone with just half of that power.
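The iPad example is simple arithmetic: ideal charge time is battery energy divided by the power actually delivered. A Python sketch, assuming a hypothetical 42.5 Wh tablet battery and, by default, a lossless link (the article gives neither figure):

```python
def ideal_charge_hours(battery_wh: float, charger_w: float,
                       efficiency: float = 1.0) -> float:
    """Lower-bound charge time: energy needed / power actually delivered.

    efficiency < 1.0 models coil and conversion losses; the default of 1.0
    is the idealized, lossless case.
    """
    if charger_w <= 0 or not 0 < efficiency <= 1:
        raise ValueError("charger power must be positive and efficiency in (0, 1]")
    return battery_wh / (charger_w * efficiency)

BATTERY_WH = 42.5  # hypothetical tablet battery, not from the article

for watts in (10.0, 5.0):
    print(f"{watts:>4} W -> {ideal_charge_hours(BATTERY_WH, watts):.2f} h")
```

Halving the power doubles the ideal time, from 4.25 to 8.5 hours in this example; real charging takes longer because of conversion losses and the tapering current near full charge.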

Looking ahead, both the WPC and the 63-strong A4WP are already working on their own magnetic resonance implementations to enable longer range charging. Additionally, A4WP's standard has also been approved for up to 24W of output, whereas the WPC is already developing medium power (from 15W) Qi specification for the likes of laptops and power tools.

Here's where the two standards differ. A4WP's implementation allows simultaneous charging, on the same pad, of devices with different power requirements, thus offering more spatial freedom. On the other hand, Qi follows a one-to-one control design to maximize efficiency: the power transfer depends entirely on how much energy the device needs, and it can even switch off completely once the device is charged.

What remains unclear is whether Qualcomm has other motives behind its participation in the WPC's board of management. While the WPC folks "encourage competitors to join" for the sake of "open development of Qi," this could also hamper the development of their new standard. Late last year, we spoke to the WPC's co-chair Camille Tang (the name "Qi" was actually her idea; plus she's also the president and co-founder of Hong Kong-based Convenient Power), and she expressed concern over the potential disruption from the new wireless power groups.

"The question to ask is: why are all these groups now coming out and saying they're doing a standard? It's possible that some people might say they really are a standard, but they may actually not intend to put products out there," said Tang.

"For example, there's one company with their technology and one thing that's rolling out in infrastructure. They don't have any devices, it's not compatible with anything else, so you think: why do they do that?

"It's about money. Not just licensing money, but other types of money as well."

At least on the surface, Qualcomm appears keen to move things forward in everyone's best interest. In a statement we received from a spokesperson earlier, the company implied that joining the WPC's board is "the logical step to grow the wireless power industry beyond the current first generation products and towards next generation, loosely coupled technology." However, Qualcomm still "believes the A4WP represents the most mature and best implementation of resonant charging."
