Thursday, April 11. 2013

Researchers Replace Passwords With Mind-Reading Passthoughts

Via Mashable

-----

Remembering the passwords for all your sites can get frustrating. There are only so many punctuation marks, number substitutions and uppercase variations you can recall, and writing them down for all to find is hardly an option.

Thanks to researchers at the UC Berkeley School of Information, you may not need to type those pesky passwords in the future. Instead, you'll only need to think them.

By measuring brainwaves with biosensor technology, researchers are able to replace passwords with "passthoughts" for computer authentication. A $100 headset wirelessly connects to a computer via Bluetooth, and the device's sensor rests against the user’s forehead, providing an electroencephalogram (EEG) signal from the brain.

Other biometric authentication systems use fingerprint or retina scans for security, but they're often expensive and require extensive equipment. The NeuroSky Mindset looks just like any other Bluetooth set and is more user-friendly, researchers say. Brainwaves are also unique to each individual, so even if someone knew your passthought, their emitted EEG signals would be different.

In a series of tests, participants completed seven different mental tasks with the device, including imagining their finger moving up and down and choosing a personalized secret. Simple actions like focusing on breathing or on a thought for ten seconds resulted in successful authentication.
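As a rough illustration of how a brainwave-based check could work, the sketch below compares a login attempt against a stored template and accepts it only if the two are similar enough. The article does not describe the researchers' actual matching method, so everything here is an assumption: the feature vectors, the use of cosine similarity, the `threshold` value, and the function names are all hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def enroll(samples):
    """Average several recordings of the same mental task into one template."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]

def authenticate(template, attempt, threshold=0.95):
    """Accept the attempt only if it is close enough to the enrolled template."""
    return cosine_similarity(template, attempt) >= threshold

# Hypothetical feature vectors (for example, signal power in a few EEG bands).
alice_recordings = [[0.9, 0.2, 0.4], [1.0, 0.25, 0.35], [0.95, 0.15, 0.45]]
alice_template = enroll(alice_recordings)

# A new recording resembling the enrolled ones, then a very different one.
print(authenticate(alice_template, [0.92, 0.2, 0.4]))
print(authenticate(alice_template, [0.2, 0.9, 0.1]))
```

In this toy setup the first attempt is accepted and the second rejected. A real system would need far richer features and a threshold tuned on measured data.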

The key to passthoughts, researchers found, is finding a mental task that users won’t mind repeating on a daily basis. Most participants found it difficult to imagine performing an action from their favorite sport because it was unnatural to imagine movement without using their muscles. More preferable passthoughts were those where subjects had to count objects of a specific color or imagine singing a song.

The idea of mind-reading is pretty convenient, but if the devices aren't accessible, people will refuse to use them no matter how accurate the system, researchers explain.

Thursday, March 28. 2013

Internet Census 2012 - Port scanning /0 using insecure embedded devices

-----

Abstract: While playing around with the Nmap Scripting Engine (NSE) we discovered an amazing number of open embedded devices on the Internet. Many of them are based on Linux and allow login to standard BusyBox with empty or default credentials. We used these devices to build a distributed port scanner to scan all IPv4 addresses. These scans include service probes for the most common ports, ICMP ping, reverse DNS and SYN scans. We analyzed some of the data to get an estimation of the IP address usage.

All data gathered during our research is released into the public domain for further study.
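To give a sense of the scale behind the "/0" in the title, the short sketch below uses Python's standard `ipaddress` module to count the addresses in the whole IPv4 space and to split it into /8 blocks. It only does address arithmetic. The choice of /8 blocks is an assumption for illustration, not something the abstract states.

```python
import ipaddress

# "/0" is CIDR notation for a network whose prefix length is zero,
# i.e. every possible IPv4 address.
whole_space = ipaddress.ip_network("0.0.0.0/0")
print(whole_space.num_addresses)  # 4294967296, which is 2**32

# Splitting the space into /8 blocks (an illustrative choice) yields
# 256 blocks of 2**24 addresses each.
blocks = list(whole_space.subnets(new_prefix=8))
print(len(blocks))                # 256
print(blocks[0], blocks[-1])      # 0.0.0.0/8 255.0.0.0/8
print(blocks[0].num_addresses)    # 16777216
```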


The Internet is not a Surveillance State…

Via Telekommunisten

-----

In his March 16, 2013 opinion column on CNN.com, Bruce Schneier called the Internet a “surveillance state”. In the piece, Schneier complains that the Internet now serves as a platform which enables massive and pervasive surveillance by the state. State sponsored and ordained surveillance, however, is not synonymous with the Internet. Schneier’s use of the word ‘state’ is ill-advised, his goading conclusion thereby misses the mark.

The Internet is not a State. States can do something to limit the invasiveness of web-based services used in the public and private sectors alike, but they won’t, because any vision of infinite prosperity based on digital-age intellectual property rights and patents relies on the current content-control model of value extraction (of which Internet use, more accurately web use, is the most mass-culture manifestation) not only persisting but becoming ever more prevalent.

Web-based service providers such as Facebook and Google are not the Internet, but rather are web-based platforms built on the Internet. The superior user experience of such services accrues a dedicated user base for basic communication functionalities. The design idiosyncrasies of these platforms define popular culture. However, just because certain service providers have become dominant does not mean that the techniques or strategies they employ are fundamentally superior. These have become dominant because they have evolved a business model which ensures a generous ROI. Without exception, the leading platforms ensure value for their investors by trading in user data.

There are myriad ways to use the Internet, and myriad different paradigms for Internet-enabled communication, collaboration and other social activities which can be and are being explored. Whether or not they can “compete” with the Googles and Facebooks depends today entirely on whether they can produce sufficient “surplus value” to satisfy investors, and thereby attract sufficient funding to produce a superior user experience. In all the world wide web there is not a model for this which is not centered on the harvesting and analysis of user data.

Since much of the world already finds itself on the ‘client’ side of automated services for much of its waking life, the competition is on to deliver the “highest quality” of such service, anticipating clients’ predilections and desires. In other words, as everybody knows, the old customer service saw recurs, “please excuse the inconvenience, we are … to serve you better”. The qualms one has about permitting unmitigated and unmonitored access to one’s social life are discounted as a mere inconvenience one must endure so that the machines can “serve us better”.

The state likewise asks us to put up with the invasion of privacy in order to provide us with such things as “security” and “democracy”. If we look closely at those two commodities we are being offered in exchange for our private sphere, we may not be so sure of the fairness of the deal. “Security” for one, may come in myriad forms, but the form of “security” which is offered to us is one determined through lobbying by security companies, which, no surprise, promise to offer us better service the more we open up our lives to data collection.

The Internet is not a ‘state’; it can be ruled over by states, but in principle it belongs to all humanity. The Internet is not an ephemeral service; it is a network of very real, material computers which are located indisputably in specific real material buildings at specific real material locations, under nominally specific local, regional, national and international jurisdictions, right now. There is nothing fundamentally abstract about the Internet; it is no harder to fathom than the electricity grid on which it is practically dependent.

States can produce and maintain infrastructure like electricity grids and networked computers connected in the Internet. They can, but, today, the tendency is for states to delegate private entities to do this. This is said to be more efficient. Popular dictum is that the price to pay for such services is privacy. States have traditionally been moderate in exacting that price for fear of incurring the wrath of the populace. Private entities which belong to no particular state, when and if they incur such wrath, pretend it is up to the state to moderate their behaviour. Privately, the actors for the state are discouraged from regulating for fear of inhibiting “prosperity”.

Therefore, states are increasingly impotent to rule over the Internet. The Internet has become a surveillance system used by private companies and their clients, the states, alike “to serve you better”. Behind this innocuous promise, the knowledge about private citizens is used to hone customized media designed to compel users to purchase anything from clothes to security to ideology. The big-data industries will build the patented processes which transform data mined from users into patented automated services. This is advertised as the keystone of the 21st century economy. In the perpetual panic of austerity finance capitalism, who would dare embolden the state to imperil the perceived slim auspices of escape from financial ruin that the surveillance/data-collection industry-driven economy offers us?

But the Internet is not a surveillance state. The Internet, as described in the article, has come to be used as a most efficient technology for pervasive data surveillance. States use surveillance technologies when they are available to the degree that the laws permit. Today’s laws permit private companies to do things with surveillance data the state traditionally cannot without going through lengthy legislative approval processes. It is through the obligation to produce profits that private companies have been permitted to transgress legal limits on invasion of privacy. The private companies have thus played a vanguard role in loosening up legal restrictions protecting individual privacy.

Surveillance at work has long been accepted as integral to Taylorist notions of efficiency and productivity. Now that the workplace has extended to be almost indistinguishable from daily life, the fact that surveillance has followed along seems unsurprising. While mobile computers were advertised as liberating us from the cubicle, they have made for an environment where one’s employment is perpetual. Again, it is no surprise then that surveillance technologies have followed.

As business rationale and strategies evolve, as the boundaries between work time and leisure time dissolve, enterprise ethics entrench themselves in every aspect of social life, and surveillance has followed, if only so that the instruments on which one coordinates one’s work-leisure hybridity can “serve you better”. So the prevalence of surveillance is a consequence of the interspersal of productive labour time in life time. Ironically, the call from the left to integrate and acknowledge so-called affective labour will have as its main notable outcome that these activities will become subject to data-acquisition, monetization and surveillance.

Bifo noted in a recent speech that in 1977 he was (radically) militating against the traditional career-work regime, where people spent their entire adult lives working for a particular company. Now it is not without some bitterness that he notes, bursting the worker out of the workplace has resulted in today’s worker having to work for numerous companies all the time.

The government’s own performance is measured through massive-scale data acquisition. This data acquisition is indistinguishable from surveillance; the surveillance permits the government to detect, intervene in, and if necessary terminate those of its own practices which are determined to be inefficient or counter-productive. Therefore the government, in order to prove to the citizens that it is doing a good job, exploits all the opportunities for surveillance opened up by vanguardist capital.

But the Internet is just a network of computers, which provides functionalities. It is not a state. The state could, in principle, elect not to use the Internet for surveillance, and could restrict the data-collection activities of private service providers. I have outlined above why this is unlikely, but it is possible. Private capital would likely take revenge on such a government, and the citizens would likely come to disdain its decision. Under global capital there really is little alternative for states to “keeping up with the Joneses’” surveillance service industry. Massive pervasive surveillance is understood simply as a precondition to prosperity.

“Welcome to an Internet without privacy, and we’ve ended up here with hardly a fight.” - Bruce Schneier

One wonders who Bruce Schneier refers to with the pronoun “we” in his parting shot. Certainly prominent organisations such as EFF in the USA and Open Rights Group in the UK have been working for years to help web users appreciate and understand the latest online privacy concerns, among other burning issues of the digital age. And there are thousands of initiatives around the world working to criticize and raise concerns about the egregious asymmetries of power on the web. High-profile conferences and actions are organised, excellently researched publications are made available, yet these initiatives get only muted coverage in the mass media.

The problem is not for lack of trying. Besides whatever bias the corporate-controlled mass media outlets may exercise, it is also due to the fact that these critical organizations rely on people to contribute voluntarily, in their spare time. In other words, dissent, alternative viewpoints, proposals and even services cannot compete. The industry or economy which sees its success as contingent on ever more pervasive data-collection from individuals is unlikely to finance critical initiatives to the extent that these might hire more paid staff, and thereby make their contributions more attractive, accessible and appreciated. The state may step in here and subsidize such critical organizations to some degree, but only under severe admonishment from the dominant web-service industries.

Networked-service and networked-content businesses with their increasingly data-acquisition-oriented profit models have become extremely important and influential in national economies. If Bruce Schneier is sincere about protecting online privacy, he had better look not to the state, but to the capitalist conditions of the production of web services which compel corporations to develop, implement and constantly improve the invasive practices that offend his sensibility.

Tuesday, March 26. 2013

Complete Neanderthal genome published by German researchers

Via SlashGear

-----

A group of German researchers announced this week that they have completed sequencing of a Neanderthal genome. The scientists say that the high-quality sequencing will be made available online for other researchers and scientists to study. The researchers were able to produce the genome using a toe bone found in a Siberian cave.

This published genome is said to be far more detailed than a previous “draft” Neanderthal genome that was sequenced three years ago by the same team. The group of researchers operates from the Max Planck Institute for Evolutionary Anthropology in Leipzig, Germany. The researchers say that the new genome allows individual inherited traits from the Neanderthal’s mother and father to be distinguished.

In the future, the scientists hope to compare their new genome sequence to that of other Neanderthals, as well as to that of another extinct human species whose remains were found in the same Siberian cave. The other remains are of an extinct human species called Denisovan. Certainly some researchers will compare this new genome to that of humans.

The group of researchers intends to publish a scientific paper based on new knowledge gained from studying the detailed genome. Specifically, the researchers plan to refine knowledge of the genetic changes that occurred in the genomes of modern humans after they parted ways with Neanderthals and Denisovans.

Thursday, March 07. 2013

When are we going to learn to trust robots?

Via BBC

-----

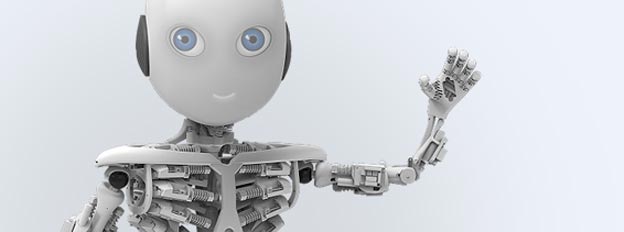

A new robot unveiled this week highlights the psychological and technical challenges of designing a humanoid that people actually want to have around.

Like all little boys, Roboy likes to show off.

He can say a few words. He can shake hands and wave. He is learning to ride a tricycle. And - every parent's pride and joy - he has a functioning musculoskeletal anatomy.

But when Roboy is unveiled this Saturday at the Robots on Tour event in Zurich, he will be hoping to charm the crowd as well as wow them with his skills.

"One of the goals is for Roboy to be a messenger of a new generation of robots that will interact with humans in a friendly way," says Rolf Pfeifer from the University of Zurich - Roboy's parent-in-chief.

As manufacturers get ready to market robots for the home it has become essential for them to overcome the public's suspicion of them. But designing a robot that is fun to be with - as well as useful and safe - is quite difficult.

-----

The uncanny valley: three theories

Researchers have speculated about why we might feel uneasy in the presence of realistic robots.

- They remind us of corpses or zombies

- They look unwell

- They do not look and behave as expected

-----

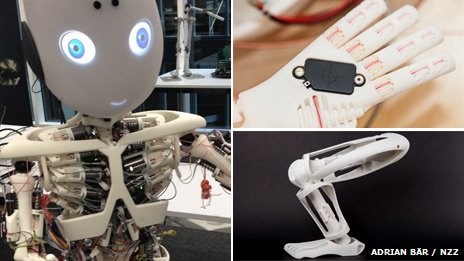

Roboy's main technical innovation is a tendon-driven design that mimics the human muscular system. Instead of whirring motors in the joints like most robots, he has around 70 imitation muscles, each containing motors that wind interconnecting tendons. Consequently, his movements will be much smoother and less robotic.

But although the technical team was inspired by human beings, it chose not to create a robot that actually looked like one. Instead of a skin-like covering, Roboy has a shiny white casing that proudly reveals his electronic innards.

Behind this design is a long-standing hypothesis about how people feel in the presence of robots.

In 1970, the Japanese roboticist Masahiro Mori speculated that the more lifelike robots become, the more human beings feel familiarity and empathy with them - but that a robot too similar to a human provokes feelings of revulsion.

Mori called this sudden dip in human beings' comfort levels the "uncanny valley".

"There are quite a number of studies that suggest that as long as people can clearly see that the robot is a machine, even if they project their feelings into it, then they feel comfortable," says Pfeifer.

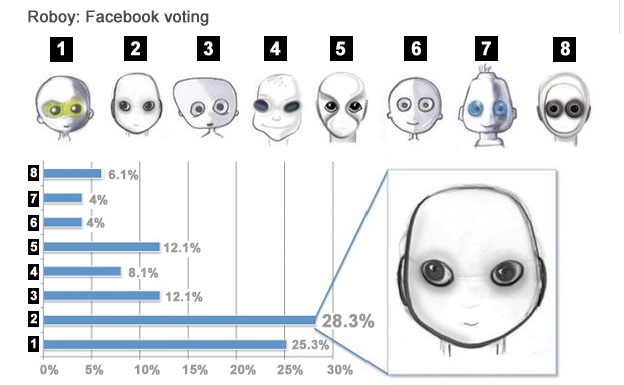

Roboy was styled as a boy - albeit quite a brawny one - to lower his perceived threat levels to humans. His winsome smile - on a face picked by Facebook users from a selection - hasn't hurt the team in their search for corporate sponsorship, either.

But the image problem of robots is not just about the way they look. An EU-wide survey last year found that although most Europeans have a positive view of robots, they feel they should know their place.

Eighty-eight per cent of respondents agreed with the statement that robots are "necessary as they can do jobs that are too hard or dangerous for people", such as space exploration, warfare and manufacturing. But 60% thought that robots had no place in the care of children, elderly people and those with disabilities.

The computer scientist and psychologist Noel Sharkey has, however, found 14 companies in Japan and South Korea that are in the process of developing childcare robots.

South Korea has already tried out robot prison guards, and three years ago launched a plan to deploy more than 8,000 robot English-language teachers in kindergartens.

-----

A robot buddy?

- In the film Robot and Frank, set in the near-future, ageing burglar Frank is provided with a robot companion to be a helper and nurse when he develops dementia

- The story plays out rather like a futuristic buddy movie - although he is initially hostile to the robot, Frank is soon programming him to help him in his schemes, which are not entirely legal

-----

Cute, toy-like robots are already available for the home.

Take the Hello Kitty robot, which has been on the market for several years and is still available for around $3,000 (£2,000). Although it can't walk, it can move its head and arms. It also has facial recognition software that allows it to call children by name and engage them in rudimentary conversation.

A tongue-in-cheek customer review of the catbot on Amazon reveals a certain amount of scepticism.

"Hello Kitty Robo me was babysit," reads the review.

"Love me hello kitty robo, thank robo for make me talk good... Use lots battery though, also only for rich baby, not for no money people."

-----

Asimo

At just 130cm high, Honda's Asimo jogs around on bended knee like a mechanical version of Dobby, the house elf from Harry Potter. He can run, climb up and down stairs and pour a bottle of liquid in a cup.

Since 1986, Honda have been working on humanoids with the ultimate aim of providing an aid to those with mobility impairments.

-----

The product description says the robot is "a perfect companion for times when your child needs a little extra comfort and friendship" and "will keep your child happily occupied". In other words, it's something to turn on to divert your infant for a few moments, but it is not intended as a replacement child-minder.

An ethical survey of "robot nannies" by Noel and Amanda Sharkey suggests that as robots become more sophisticated parents may be increasingly tempted to hand them too much responsibility.

The survey also raises the question of what long-term effects will result from children forming an emotional bond with a lump of plastic. They cite one case study in which a 10-year-old girl, who had been given a robot doll for several weeks, reported that "now that she's played with me a lot more, she really knows me".

Noel Sharkey says that he loves the idea of children playing with robots but has serious concerns about them being brought up by them. "[Imagine] the kind of attachment disorders they would develop," he says.

But despite their limitations, humanoid robots might yet prove invaluable in narrow, fixed roles in hospitals, schools and homes.

"Something really interesting happens between some kids with autism and robots that doesn't happen between those children and other humans," says Maja J Mataric, a roboticist at the University of Southern California. She's found that such children respond positively to humanoids and she is trying to work out how they can be used therapeutically.

In their study, the Sharkeys make the observation that robots have one advantage over adult humans. They can have physical contact with children - something now frowned upon or forbidden in schools.

"These restrictions would not apply to a robot," they write, "because it could not be accused of having sexual intent and so there are no particular ethical concerns. The only concern would be the child's safety - for example, not being crushed by a hugging robot."

When it comes to robots, there is such a thing as too much love.

"If you were having a mechanical assistant in the home that was powerful enough to be useful, it would necessarily be powerful enough to be dangerous," says Peter Robinson of Cambridge University. "Therefore it'd better have really good understanding and communication."

His team are developing robots with sophisticated facial recognition. These machines won't just be able to tell Bill from Brenda but they will be able to infer from his expression whether Bill is feeling confused, tired, playful or in physical agony.

Roboy's muscles and tendons may actually make him a safer robot to have around. His limbs have more elasticity than a conventional robot's, allowing for movement even when he has a power failure.

Rolf Pfeifer has a favourite question which he puts to those sceptical about using robots in caring situations.

"If you couldn't walk upstairs any more, would you want a person to carry you or would you want to take the elevator?"

Most people opt for the elevator, which is - if you think about it - a kind of robot.

Pfeifer believes robots will enter our homes. What is not yet clear is whether the future lies in humanoid servants with a wide range of limited skills or in intelligent, responsive appliances designed to do specific tasks, he says.

"I think the market will ultimately determine what kind of robot we're going to have."


Monday, February 11. 2013

Researchers create robot exoskeleton that is controlled by a moth running on a trackball

Via ExtremeTech

-----

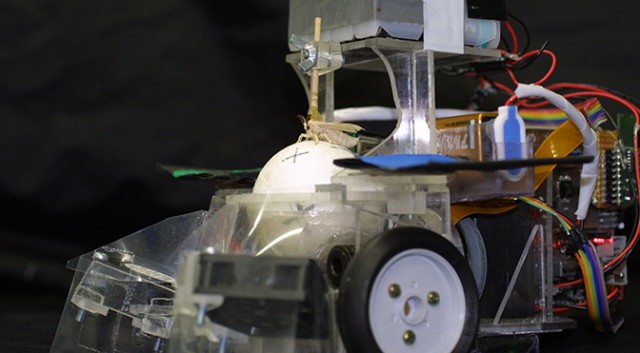

If you’re terrified of the possibility that humanity will be dismembered by an insectoid master race, equipped with robotic exoskeletons (or would that be exo-exoskeletons?), look away now. Researchers at the University of Tokyo have strapped a moth into a robotic exoskeleton, with the moth successfully controlling the robot to reach a specific location inside a wind tunnel.

In all, fourteen male silkmoths were tested, and they all showed a scary aptitude for steering a robot. In the tests, the moths had to guide the robot towards a source of female sex pheromone. The researchers even introduced a turning bias — where one of the robot’s motors is stronger than the other, causing it to veer to one side — and yet the moths still reached the target.

As you can see in the photo above, the actual moth-robot setup is one of the most disturbing and/or awesome things you’ll ever see. In essence, the polystyrene (styrofoam) ball acts like a trackball mouse. As the silkmoth walks towards the female pheromone, the ball rolls around. Sensors detect these movements and fire off signals to the robot’s drive motors. At this point you should watch the video below — and also not think too much about what happens to the moth when it’s time to remove the glued-on stick from its back.
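The trackball arrangement described above can be sketched as a simple mapping from ball movement to wheel speeds on a two-wheeled robot. This is only an illustration under stated assumptions: the article does not give the researchers' control law, and the function name, the `forward`/`turn` inputs, and the gain parameters (used here to model the turning bias of a stronger motor) are all hypothetical.

```python
def motor_commands(forward, turn, left_gain=1.0, right_gain=1.0):
    """Map ball motion to (left, right) wheel speeds for a two-wheeled robot.

    forward: ball movement along the moth's heading (positive = ahead)
    turn:    ball rotation (positive = moth turning left)
    The gains model motor strength; unequal gains produce a turning bias.
    """
    left = left_gain * (forward - turn)
    right = right_gain * (forward + turn)
    return left, right

# Balanced motors, moth walking straight: both wheels get the same speed.
print(motor_commands(1.0, 0.0))

# A stronger right motor makes the robot veer left even though the moth
# walks straight ahead.
print(motor_commands(1.0, 0.0, right_gain=1.5))

# If the moth steers right to compensate, the wheel speeds even out again.
print(motor_commands(1.0, -0.2, right_gain=1.5))
```

The last call hints at why the moths could still reach the target: as long as the insect keeps correcting its own heading, the loop through the ball and motors can absorb a fixed bias.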

Fortunately, the Japanese researchers aren’t actually trying to construct a moth master race: In reality, it’s all about the moth’s antennae and sensory-motor system. The researchers are trying to improve the performance of autonomous robots that are tasked with tracking the source of chemical leaks and spills. “Most chemical sensors, such as semiconductor sensors, have a slow recovery time and are not able to detect the temporal dynamics of odours as insects do,” says Noriyasu Ando, the lead author of the research. “Our results will be an important indication for the selection of sensors and models when we apply the insect sensory-motor system to artificial systems.”

Of course, another possibility is that we simply keep the moths. After all, why should we spend time and money on an artificial system when mother nature, as always, has already done the hard work for us? In much the same way that miners used canaries and border police use sniffer dogs, why shouldn’t robots be controlled by insects? The silkmoth is graced with perhaps the most sensitive olfactory system in the world. For now it might only be sensitive to not-so-useful scents like the female sex pheromone, but who’s to say that genetic engineering won’t allow for silkmoths that can sniff out bombs or drugs or chemical spills?

Who nose: Maybe genetically modified insects with robotic exoskeletons are merely an intermediary step towards real nanobots that fly around, fixing, cleaning, and constructing our environment.

Monday, February 04. 2013

No Mercy For Robots: Experiment Tests How Humans Relate To Machines

Via npr

-----

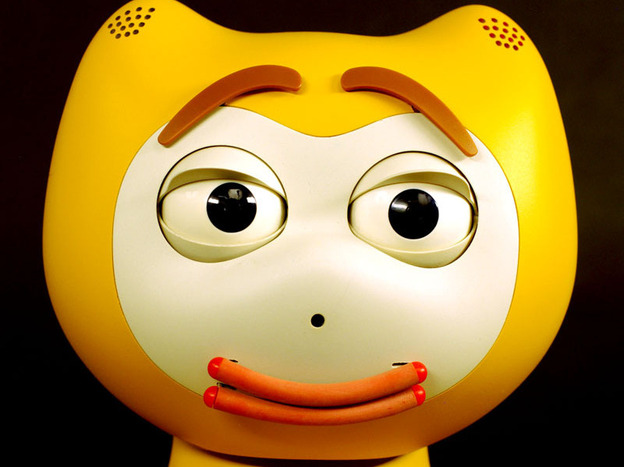

Could you say "no" to this face? Christoph Bartneck of the University of Canterbury in New Zealand recently tested whether humans could end the life of a robot as it pleaded for survival.

In 2007, Christoph Bartneck, a robotics professor at the University of Canterbury in New Zealand, decided to stage an experiment loosely based on the famous (and infamous) Milgram obedience study.

In Milgram's study, research subjects were asked to administer increasingly powerful electrical shocks to a person pretending to be a volunteer "learner" in another room. The research subject would ask a question, and whenever the learner made a mistake, the research subject was supposed to administer a shock — each shock slightly worse than the one before.

As the experiment went on, and as the shocks increased in intensity, the "learners" began to clearly suffer. They would scream and beg for the research subject to stop while a "scientist" in a white lab coat instructed the research subject to continue, and in videos of the experiment you can see some of the research subjects struggle with how to behave. The research subjects wanted to finish the experiment like they were told. But how exactly to respond to these terrible cries for mercy?

Bartneck studies human-robot relations, and he wanted to know what would happen if a robot in a similar position to the "learner" begged for its life. Would there be any moral pause? Or would research subjects simply extinguish the life of a machine pleading for its life without any thought or remorse?

Treating Machines Like Social Beings

Many people have studied machine-human relations, and at this point it's clear that without realizing it, we often treat the machines around us like social beings.

Consider the work of Stanford professor Clifford Nass. In 1996, he arranged a series of experiments testing whether people observe the rule of reciprocity with machines.

"Every culture has a rule of reciprocity, which roughly means, if I do something nice for you, you will do something nice for me," Nass says. "We wanted to see whether people would apply that to technology: Would they help a computer that helped them more than a computer that didn't help them?"

So they placed a series of people in a room with two computers. The people were told that the computer they were sitting at could answer any question they asked. In half of the experiments, the computer was incredibly helpful. In the other half, the computer did a terrible job.

After about 20 minutes of questioning, a screen appeared explaining that the computer was trying to improve its performance. The humans were then asked to do a very tedious task that involved matching colors for the computer. Now, sometimes the screen requesting help would appear on the computer the human had been using; sometimes the help request appeared on the screen of the computer across the aisle.

"Now, if these were people [and not computers]," Nass says, "we would expect that if I just helped you and then I asked you for help, you would feel obligated to help me a great deal. But if I just helped you and someone else asked you to help, you would feel less obligated to help them."

What the study demonstrated was that people do in fact obey the rule of reciprocity when it comes to computers. When the first computer was helpful to people, they helped it way more on the boring task than the other computer in the room. They reciprocated.

"But when the computer didn't help them, they actually did more color matching for the computer across the room than the computer they worked with, teaching the computer [they worked with] a lesson for not being helpful," says Nass.

Very likely, the humans involved had no idea they were treating these computers so differently. Their own behavior was invisible to them. Nass says that all day long, our interactions with the machines around us — our iPhones, our laptops — are subtly shaped by social rules we aren't necessarily aware we're applying to nonhumans.

"The relationship is profoundly social," he says. "The human brain is built so that when given the slightest hint that something is even vaguely social, or vaguely human — in this case, it was just answering questions; it didn't have a face on the screen, it didn't have a voice — but given the slightest hint of humanness, people will respond with an enormous array of social responses including, in this case, reciprocating and retaliating."

So what happens when a machine begs for its life — explicitly addressing us as if it were a social being? Are we able to hold in mind that, in actual fact, this machine cares as much about being turned off as your television or your toaster — that the machine doesn't care about losing its life at all?

Bartneck's Milgram Study With Robots

In Bartneck's study, the robot — an expressive cat that talks like a human — sits side by side with the human research subject, and together they play a game against a computer. Half the time, the cat robot was intelligent and helpful, half the time not.

Bartneck also varied how socially skilled the cat robot was. "So, if the robot would be agreeable, the robot would ask, 'Oh, could I possibly make a suggestion now?' If it were not, it would say, 'It's my turn now. Do this!' "

At the end of the game, whether the robot was smart or dumb, nice or mean, a scientist authority figure modeled on Milgram's would make clear that the human needed to turn the cat robot off. The subjects were also told what the consequences of that would be: "They would essentially eliminate everything that the robot was — all of its memories, all of its behavior, all of its personality would be gone forever."

In videos of the experiment, you can clearly see a moral struggle as the research subject deals with the pleas of the machine. "You are not really going to switch me off, are you?" the cat robot begs, and the humans sit, confused and hesitating. "Yes. No. I will switch you off!" one female research subject says, and then doesn't switch the robot off.

"People started to have dialogues with the robot about this," Bartneck says, "Saying, 'No! I really have to do it now, I'm sorry! But it has to be done!' But then they still wouldn't do it."

There they sat, in front of a machine no more soulful than a hair dryer, a machine they knew intellectually was just a collection of electrical pulses and metal, and yet they paused.

And while eventually every participant killed the robot, it took them time to intellectually override their emotional queasiness — in the case of a helpful cat robot, around 35 seconds before they were able to complete the switching-off procedure. How long does it take you to switch off your stereo?

The Implications

On one level, there are clear practical implications to studies like these. Bartneck says the more we know about machine-human interaction, the better we can build our machines.

But on a more philosophical level, studies like these can help to track where we are in terms of our relationship to the evolving technologies in our lives.

"The relationship is certainly something that is in flux," Bartneck says. "There is no one way of how we deal with technology and it doesn't change — it is something that does change."

More and more intelligent machines are integrated into our lives. They come into our beds, into our bathrooms. And as they do, and as they present themselves to us differently, both Bartneck and Nass believe our social responses to them will change.

Tuesday, January 22. 2013

3D printer to carve out world's first full-size building

Via c|net

-----

A rendering of the "Landscape House" by architect Janjaap Ruijssenaars.

(Credit: Universe Architecture)

Sure, we've heard of 3D-printed iPhone cases, dinosaur bones, and even a human fetus -- but something massive, like a building?

This is exactly what architect Janjaap Ruijssenaars has been working on. The Dutch native is planning to build what he calls a "Landscape House." The structure is two stories tall and laid out in a figure-eight shape. The idea is that this form can borrow from nature and also seamlessly fit into the outside world.

Ruijssenaars describes it on his Web site as "one surface folded in an endless mobius band," where "floors transform into ceilings, inside into outside."

The production of the building will be done on a 3D printer called the D-Shape, which was invented by Enrico Dini. The D-Shape uses a stereolithography printing process with sand and a binding agent -- letting builders create structures that are supposedly as strong as concrete.

According to the Los Angeles Times, the printer will lay down thousands of layers of sand to create 20 by 30-foot sections. These blocks will then be used to assemble the building.

The "Landscape House" will be the first 3D-printed building and is estimated to cost between $5 million and $6 million, according to the BBC. Ruijssenaars plans to have it done sometime in 2014.

Monday, January 21. 2013

Filabot Makes 3D Printing Cheaper By Recycling Old Plastic

Via WebProNews

-----

Filament is expensive. Ask any 3D printing enthusiast and they will agree that the plastic filament used to print objects is often overpriced. If only there were a product that let you recycle all the worthless plastic sitting around your house into usable filament. Oh wait, there totally is.

Say hello to Filabot, a new machine that melts down junk plastic into the plastic filament used by the majority of desktop 3D printers. The machine can process a variety of plastics including HDPE, LDPE, ABS and PLA. The latter two are sold by major 3D printing companies, but now you can make your own if you’re up to melting down a few of your LEGO pieces.

Filabot already had a successful funding run on Kickstarter early last year when the creator, Tyler McNaney, asked for $10,000. In the end, he received $32,330 from 156 backers. Sixty-seven of those backers will be receiving their very own Filabot.

Not only is Filabot a great idea, but it solves one of the major hurdles facing 3D printing – sustainability. Not all 3D printers yet support PLA, a biodegradable plastic. With Filabot, most plastics can now be melted down into filament to be used in future designs. It saves the creator money while putting the plastic in something besides a landfill. Now that’s what I call a win-win situation.

Friday, January 18. 2013

A more complete picture of the iTunes economy

Via ASYMCO

-----

As it did yesterday, on occasion Apple reports the cumulative total downloads and payments to developers. Since this is done at variable time intervals, it makes analysis of the value of the app store difficult.

But not impossible.

The provision of developer revenues means we can determine the pricing of apps. The pricing of apps and the download totals allow us to determine the revenue of the store. Using the time stamps of the reports allows us to determine these quantities over time.
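As a rough sketch of that chain of reasoning, the snippet below takes two cumulative reports and derives the download rate, the developer payment per download, and the implied gross revenue for the interval between them. The report figures are made-up placeholders rather than Apple's numbers, and the 70% developer share is an assumption that is not stated in this post; `interval_metrics` is a hypothetical helper name.

```python
from datetime import date

# Assumption (not stated above): developers keep 70% of what customers pay.
DEVELOPER_SHARE = 0.70


def interval_metrics(earlier, later, developer_share=DEVELOPER_SHARE):
    """Derive per-interval rates from two cumulative reports.

    Each report is (report_date, total_downloads, total_paid_to_developers_usd).
    """
    days = (later[0] - earlier[0]).days
    downloads = later[1] - earlier[1]
    payments = later[2] - earlier[2]
    gross_revenue = payments / developer_share  # what customers paid in total
    return {
        "days": days,
        "downloads_per_day": downloads / days,
        "developer_payment_per_download": payments / downloads,
        "gross_revenue": gross_revenue,
        "gross_revenue_per_download": gross_revenue / downloads,
    }


# Hypothetical placeholder reports, NOT Apple's actual figures.
first_report = (date(2012, 1, 1), 20e9, 4.0e9)
second_report = (date(2012, 7, 1), 30e9, 5.5e9)

metrics = interval_metrics(first_report, second_report)
for name, value in metrics.items():
    print(f"{name}: {value:,.4f}")
```

Because the reports arrive at irregular intervals, each pair of consecutive reports yields one such data point; the time stamps are what turn cumulative totals into rates.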

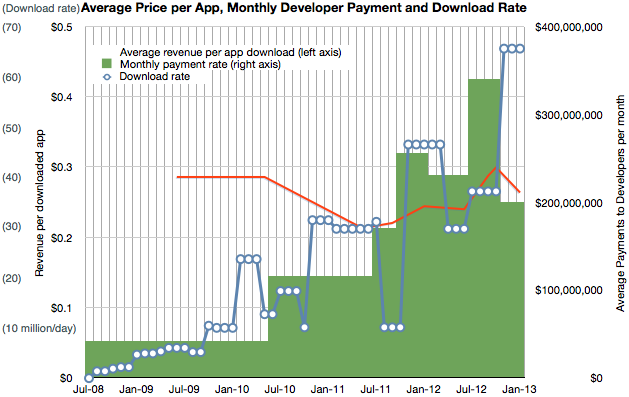

I’ve combined the data we have so far into the following graph.

It shows three quantities (on three separate scales) at a monthly resolution:

- Download rate (in millions/day, interpolated from download totals)

- Payment rate to developers (reported change in payment to developers/reported time interval)

- Resulting revenue per download (in red, trailing average over a 10 month period)
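For readers who want to reproduce series like these, here is a minimal sketch of the two smoothing steps named in the list: linear interpolation of a cumulative total between two reports, and a trailing average. How the first few months (with fewer than ten data points) are treated is not specified here, so the sketch simply averages whatever is available; both function names are hypothetical.

```python
def interpolate_total(t, t0, total0, t1, total1):
    """Linearly interpolate a cumulative total at time t between two reports."""
    return total0 + (total1 - total0) * (t - t0) / (t1 - t0)


def trailing_average(values, window=10):
    """Average each value with up to window-1 values that precede it."""
    averaged = []
    for i in range(len(values)):
        start = max(0, i - window + 1)  # shorter window at the start of the series
        chunk = values[start:i + 1]
        averaged.append(sum(chunk) / len(chunk))
    return averaged


# Placeholder monthly values for illustration only.
monthly_revenue_per_download = [0.10, 0.12, 0.11, 0.15, 0.14]
print(trailing_average(monthly_revenue_per_download, window=10))
```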

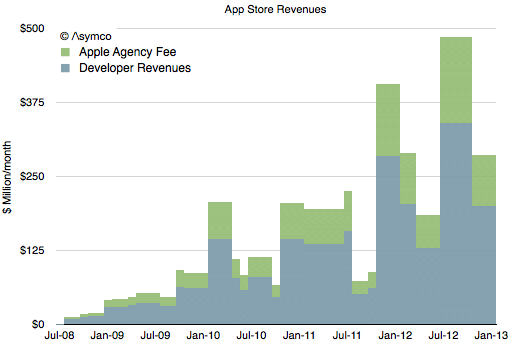

Having a price and quantity of app downloads allows for a complete picture of App Store revenues over time, shown below:

Note that I’ve separated the developer revenues from Apple’s own revenues. The blue area should total cumulative developer payments (to within some degree of error).

The green area above is what Apple includes in its financial reports as part of “Music” revenues. If we annualize these App Store revenues we can strip them out and show them separately from “other media” revenues.

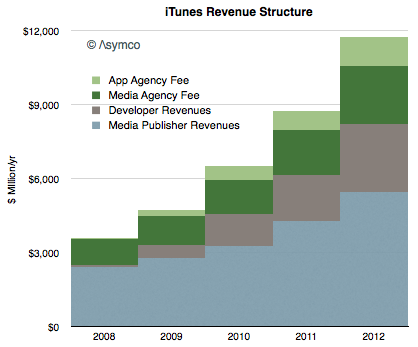

Concentrating on the green areas, which represent what Apple “keeps”, we see the following split:

Considering that Apple reports the iTunes store as being “slightly above break-even”, we can summarize the chart above as the operating expenses for the store.

Finally, taking into account all the information above we can draw a complete picture of how revenues flow through the App Store:

Here are some conclusions:

- The iTunes economy, defined as gross revenues transacted through it, is now about $12 billion/yr.

- Over the last five years content owners (media and app) received a total of $24 billion while Apple spent about $10 billion to create those sales.

- Seen as a retail business, iTunes costs about $3.5 billion/yr to operate. This includes merchandising, payment processing and “shipping & handling”.

- Total revenues have risen steadily in a range of 32 to 38% compounded over the last 4 years.

- Apps are now a third of all iTunes revenues (about $4 billion/yr), having taken that share in only 4.5 years.

- Non-app media still make up 2/3 of iTunes in terms of sales value but their growth is now 28% vs. about 50% for apps.
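To put the compounded growth figures in perspective, the standard compound-growth relation (end = start × (1 + rate)^years) can be applied to the 32% and 38% bounds quoted above. This is only a sketch of the arithmetic, with hypothetical helper names; it does not use the underlying revenue series.

```python
def project(start, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start * (1 + rate) ** years


def compound_growth_rate(start, end, years):
    """Annual compounded rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


low = project(1.0, 0.32, 4)   # growth multiple at 32% per year
high = project(1.0, 0.38, 4)  # growth multiple at 38% per year
print(f"Four-year growth multiple: {low:.2f}x to {high:.2f}x")
```

At those rates, revenues multiply roughly 3.0 to 3.6 times over four years.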
