Entries tagged as ai

Wednesday, November 23. 2011

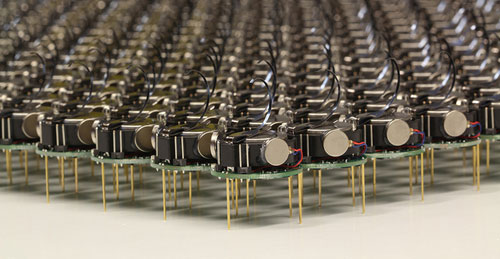

Kilobots - tiny, collaborative robots - are leaving the nest

Tuesday, November 08. 2011

The Big Picture: True Machine Intelligence & Predictive Power

Wednesday, October 19. 2011

Neuromancing the Cloud: How Siri Could Lead to Real Artificial Intelligence

Monday, October 17. 2011

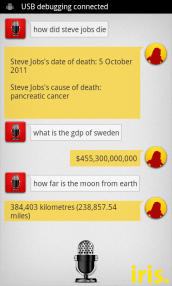

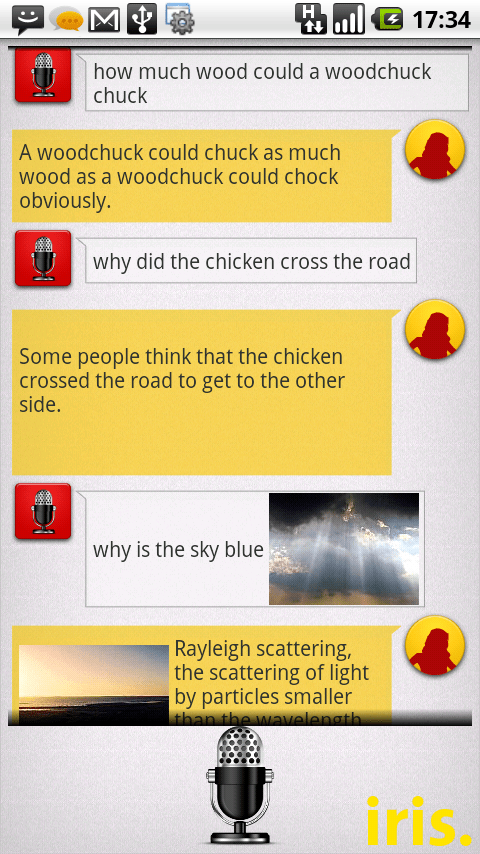

Iris Is (Sort Of) Siri For Android

Friday, July 22. 2011

Computer masters Civilization after reading the instructions
