Entries tagged as history

Friday, November 22. 2013

Via Computer History Museum

-----

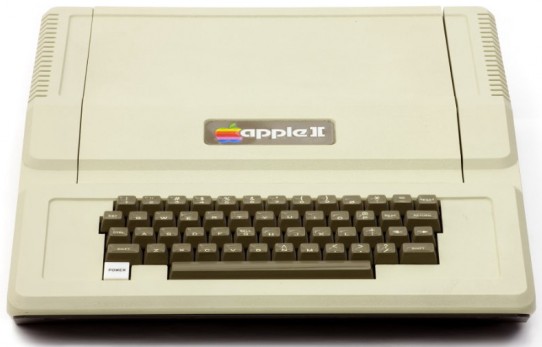

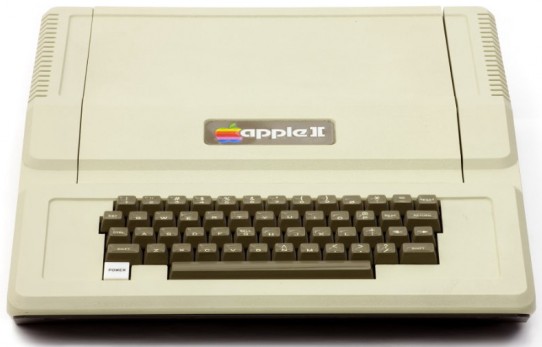

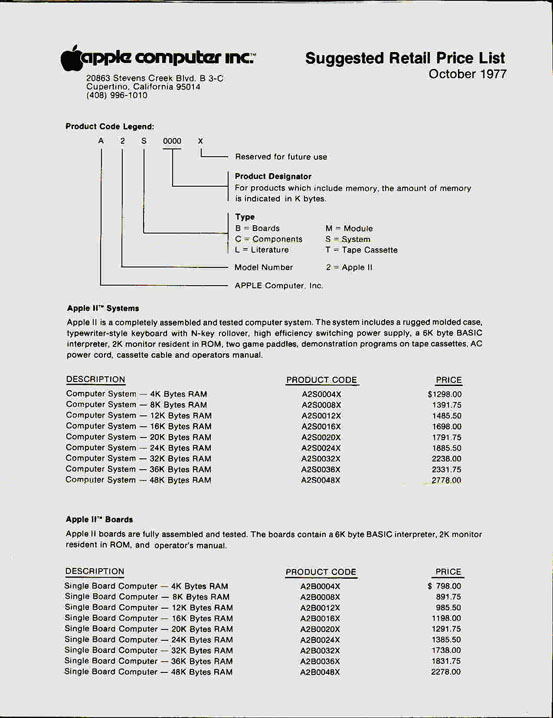

In June 1977 Apple Computer shipped their first mass-market computer: the Apple II.

Unlike the Apple I, the Apple II was fully assembled and ready to use with any display monitor. The version with 4K of memory cost $1298. It had color, graphics, sound, expansion slots, game paddles, and a built-in BASIC programming language.

What it didn’t have was a disk drive. Programs and data had to be saved to and loaded from cassette tape recorders, which were slow and unreliable. The problem was that disks – even floppy disks – needed both expensive hardware controllers and complex software.

Steve Wozniak solved the first problem. He designed an incredibly clever floppy disk controller using only 8 integrated circuits, by doing in programmed logic what other controllers did with hardware. With some rudimentary software written by Woz and Randy Wigginton, it was demonstrated at the Consumer Electronics Show in January 1978.

But where were they going to get the higher-level software to organize and access programs and data on the disk? Apple only had about 15 employees, and none of them had both the skills and the time to work on it.

The magician who pulled that rabbit out of the hat was Paul Laughton, a contract programmer for Shepardson Microsystems, which was located in the same Cupertino office park as Apple.

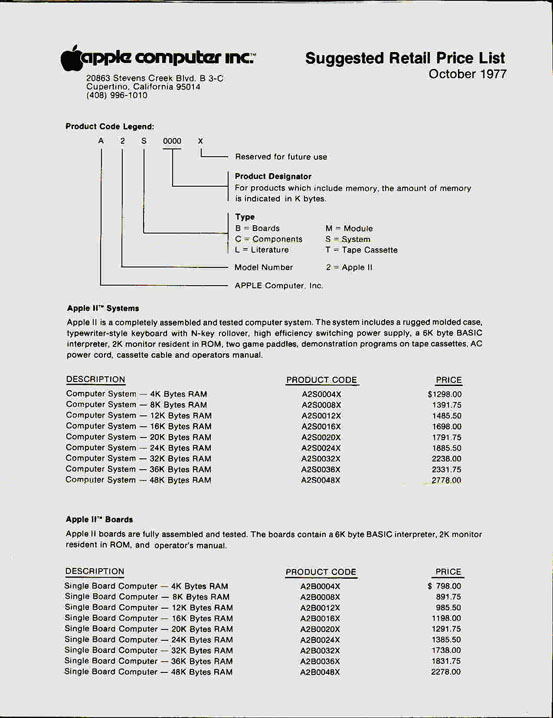

On April 10, 1978, Bob Shepardson and Steve Jobs signed a $13,000 one-page contract for a file manager, a BASIC interface, and utilities. It specified that “Delivery will be May 15”, which was incredibly aggressive. But, amazingly, “Apple II DOS version 3.1” was released in June 1978.

With thanks to Paul Laughton, in collaboration with Dr. Bruce Damer, founder and curator of the DigiBarn Computer Museum, and with the permission of Apple Inc., we are pleased to make available the 1978 source code of Apple II DOS for non-commercial use. This material is Copyright © 1978 Apple Inc., and may not be reproduced without permission from Apple.

There are seven files in this release.

Thursday, May 02. 2013

Via BBC

-----

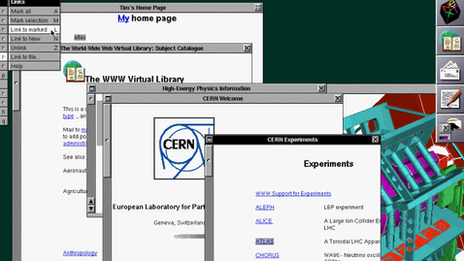

Lost to the world: The first website. At the time, few imagined how ubiquitous the technology would become.

A team at the European Organisation for Nuclear Research (Cern) has launched a project to re-create the first web page.

The aim is to preserve the original hardware and software associated with the birth of the web.

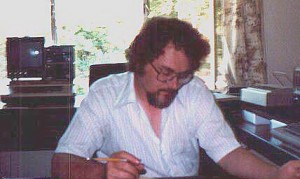

The world wide web was developed by Prof Sir Tim Berners-Lee while working at Cern.

The initiative coincides with the 20th anniversary of the research centre giving the web to the world.

According to Dan Noyes, the web manager for Cern's communication group, re-creation of the world's first website will enable future generations to explore, examine and think about how the web is changing modern life.

"I want my children to be able to understand the significance of this point in time: the web is already so ubiquitous - so, well, normal - that one risks failing to see how fundamentally it has changed," he told BBC News.

"We are in a unique moment where we can still switch on the first web server and experience it. We want to document and preserve that".

The hope is that the restoration of the first web page and web site will serve as a reminder and inspiration of the web's fundamental values.

At the heart of the original web is technology to decentralise control and make access to information freely available to all. It is this architecture that seems to imbue those who work with the web with a culture of free expression, a belief in universal access and a tendency toward decentralising information.

Subversive

It is the early technology's innate ability to subvert that makes re-creation of the first website especially interesting.

While I was at Cern it was clear in speaking to those involved with the project that it means much more than refurbishing old computers and installing them with early software: it is about enshrining a powerful idea that they believe is gradually changing the world.

I went to Sir Tim's old office, where he worked at Cern's IT department trying to find new ways to handle the vast amount of data the particle accelerators were producing.

I was not allowed in because apparently the present incumbent is fed up with people wanting to go into the office.

But waiting outside was someone who worked at Cern as a young researcher at the same time as Sir Tim. James Gillies has since risen to be Cern's head of communications. He is occasionally referred to as the organisation's half-spin doctor, a reference to one of the properties of some sub-atomic particles.

Amazing dream

Mr Gillies is among those involved in the project. I asked him why he wanted to restore the first website.

"One of my dreams is to enable people to see what that early web experience was like," was the reply.

"You might have thought that the first browser would be very primitive but it was not. It had graphical capabilities. You could edit into it straightaway. It was an amazing thing. It was a very sophisticated thing."

Those not heavily into web technology may be sceptical of the idea that using a 20-year-old machine and software to view text on a web page might be a thrilling experience.

But Mr Gillies and Mr Noyes believe that the first web page and web site is worth resurrecting because embedded within the original systems developed by Sir Tim are the principles of universality and universal access that many enthusiasts at the time hoped would eventually make the world a fairer and more equal place.

The first browser, for example, allowed users to edit and write directly into the content they were viewing, a feature not available on present-day browsers.

Ideals eroded

And early on in the world wide web's development, Nicola Pellow, who worked with Sir Tim at Cern on the www project, produced a simple browser to view content that did not require an expensive powerful computer and so made the technology available to anyone with a simple computer.

According to Mr Noyes, many of the values that went into that original vision have now been eroded. His aim, he says, is to "go back in time and somehow preserve that experience".

Soon to be refurbished: The NeXT computer that was home to the world's first website

"This universal access of information and flexibility of delivery is something that we are struggling to re-create and deal with now.

"Present-day browsers offer gorgeous experiences but when we go back and look at the early browsers I think we have lost some of the features that Tim Berners-Lee had in mind."

Mr Noyes is reaching out to ask those who were involved in the NeXT computers used by Sir Tim for advice on how to restore the original machines.

Awe

The machines were the most advanced of their time. Sir Tim used two of them to construct the web. One of them is on show in an out-of-the-way cabinet outside Mr Noyes's office.

I told him that as I approached the sleek black machine I felt drawn towards it and compelled to pause, reflect and admire in awe.

"So just imagine the reaction of passers-by if it was possible to bring the machine back to life," he responded, with a twinkle in his eye.

The initiative coincides with the 20th anniversary of Cern giving the web away to the world free.

There was a serious discussion by Cern's management in 1993 about whether the organisation should remain the home of the web or whether it should focus on its core mission of basic research in physics.

Sir Tim and his colleagues on the project argued that Cern should not claim ownership of the web.

Great giveaway

Management agreed and signed a legal document that made the web publicly available in such a way that no one could claim ownership of it and that would ensure it was a free and open standard for everyone to use.

Mr Gillies believes that the document is "the single most valuable document in the history of the world wide web".

He says: "Without it you would have had web-like things but they would have belonged to Microsoft or Apple or Vodafone or whoever else. You would not have a single open standard for everyone."

The web has not brought about the degree of social change some had envisaged 20 years ago. Most web sites, including this one, still tend towards one-way communication. The web space is still dominated by a handful of powerful online companies.

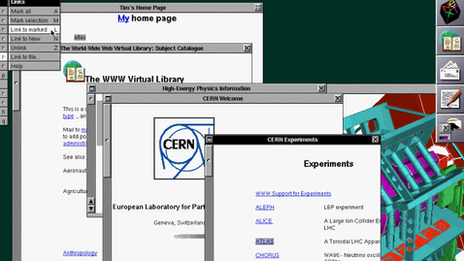

A screenshot from the first browser: those who saw it say it was "amazing and sophisticated". It allowed people to write directly into content, a feature that modern-day browsers no longer have.

But those who study the world wide web, such as Prof Nigel Shadbolt, of Southampton University, believe the principles on which it was built are worth preserving and there is no better monument to them than the first website.

"We have to defend the principle of universality and universal access," he told BBC News.

"That it does not fall into a special set of standards that certain organisations and corporations control. So keeping the web free and freely available is almost a human right."

Monday, September 03. 2012

Via input output

-----

In the fall of 1977, I experimented with a newfangled PC, a Radio Shack TRS-80. For data storage it used—I kid you not—a cassette tape player. Tape had a long history with computing; I had used the IBM 2420 9-track tape system on IBM 360/370 mainframes to load software and to back-up data. Magnetic tape was common for storage in pre-personal computing days, but it had two main annoyances: it held tiny amounts of data, and it was slower than a slug on a cold spring morning. There had to be something better, for those of us excited about technology. And there was: the floppy disk.

Welcome to the floppy disk family: 8”, 5.25” and 3.5”

In the mid-70s I had heard about floppy drives, but they were expensive, exotic equipment. I didn't know that IBM had decided as early as 1967 that tape drives, while fine for back-ups, simply weren't good enough to load software on mainframes. So it was that Alan Shugart assigned David L. Noble to lead the development of “a reliable and inexpensive system for loading microcode into the IBM System/370 mainframes using a process called Initial Control Program Load (ICPL).” From this project came the first 8-inch floppy disk.

Oh yes: before the 5.25-inch drives you remember, there was the 8-inch floppy. By 1978 I was using them on mainframes; later I would use them on Online Computer Library Center (OCLC) dedicated cataloging PCs.

The 8-inch drive began to show up in 1971. These drives let developers and users stop using the dreaded paper tape (which was easy to fold, spindle, and mutilate, not to mention to pirate) and the loathed IBM 5081 punch card. Everyone who had ever twisted some tape or (the horror!) dropped a deck of Hollerith cards was happy to adopt 8-inch drives.

Before floppy drives, we often had to enter data using punch cards.

Besides, the early single-sided 8-inch floppy could hold the data of up to 3,000 punch cards, or 80K to you. I know that's nothing today (this article uses up 66K with the text alone), but then it was a big deal.

Some early model microcomputers, such as the Xerox 820 and Xerox Alto, used 8-inch drives, but these first generation floppies never broke through to the larger consumer market. That honor would go to the next generation of the floppy: the 5.25 inch model.

By 1972, Shugart had left IBM and founded his own company, Shugart Associates. In 1975, Wang, which then dominated the big-time dedicated word processor market, approached Shugart about creating a computer that would fit on top of a desk. To do that, Wang needed a smaller, cheaper floppy disk.

According to Don Massaro, another IBMer who followed Shugart to the new business, Wang’s founder An Wang said, “I want to come out with a much lower-end word processor. It has to be much lower cost and I can't afford to pay you $200 for your 8" floppy; I need a $100 floppy.”

So, Shugart and company started working on it. According to Massaro, “We designed the 5 1/4" floppy drive in terms of the overall design, what it should look like, in a car driving up to Herkimer, New York to visit Mohawk Data Systems.” The design team stopped at a stationery store to buy cardboard while trying to figure out what size the diskette should be. “It's real simple, the reason why it was 5¼,” he said. “5 1/4 was the smallest diskette that you could make that would not fit in your pocket. We didn't want to put it in a pocket because we didn't want it bent, okay?”

Shugart also designed the diskette to be that size because an analysis of the cassette tape drives and their bays in microcomputers showed that a 5.25” drive was as big as you could fit into the PCs of the day.

According to another story from Jimmy Adkisson, a Shugart engineer, “Jim Adkisson and Don Massaro were discussing the proposed drive's size with Wang. The trio just happened to be doing their discussing at a bar. An Wang motioned to a drink napkin and stated 'about that size' which happened to be 5 1/4-inches wide.”

Wang wasn’t the most important element in the success of the 5.25-inch floppy. George Sollman, another Shugart engineer, took an early model of the 5.25” drive to a Homebrew Computer Club meeting. “The following Wednesday or so, Don came to my office and said, ‘There's a bum in the lobby,’” Sollman says. “‘And, in marketing, you're in charge of cleaning up the lobby. Would you get the bum out of the lobby?’ So I went out to the lobby and this guy is sitting there with holes in both knees. He really needed a shower in a bad way but he had the most dark, intense eyes and he said, 'I've got this thing we can build.'”

The bum's name was Steve Jobs and the “thing” was the Apple II.

Apple had also used cassette drives for its first computers. Jobs knew his computers also needed a smaller, cheaper, and better portable data storage system. In mid-1978, the Apple II was made available with optional 5.25” floppy drives manufactured by Shugart. One drive ordinarily held programs, while the other could be used to store your data. (Otherwise, you had to swap floppies back and forth when you needed to save a file.)

The PC that made floppy disks a success: 1977's Apple II

The floppy disk seems so simple now, but it changed everything. As IBM's history of the floppy disk states, this was a big advance in user-friendliness. “But perhaps the greatest impact of the floppy wasn’t on individuals, but on the nature and structure of the IT industry. Up until the late 1970s, most software applications for tasks such as word processing and accounting were written by the personal computer owners themselves. But thanks to the floppy, companies could write programs, put them on the disks, and sell them through the mail or in stores. 'It made it possible to have a software industry,' says Lee Felsenstein, a pioneer of the PC industry who designed the Osborne 1, the first mass-produced portable computer. Before networks became widely available for PCs, people used floppies to share programs and data with each other—calling it the 'sneakernet.'”

In short, it was the floppy disk that turned microcomputers into personal computers.

Which of these drives did you own?

The success of the Apple II made the 5.25” drive the industry standard. The vast majority of CP/M-80 PCs, from the late 70s to early 80s, used this size floppy drive. When the first IBM PC arrived in 1981, you had your choice of one or two 160-kilobyte (K – yes, just one K) floppy drives.

Throughout the early 80s, the floppy drive became the portable storage format. (Tape was quickly relegated to business back-ups.) At first, floppy disk drives were built with only one read/write head, but a second head was quickly incorporated. This meant that when the IBM XT PC arrived in 1983, double-sided floppies could hold up to 360K of data.

There were some bumps along the road to PC floppy drive compatibility. Some companies, such as DEC with its DEC Rainbow, introduced their own non-compatible 5.25” floppy drives. They were single-sided but with twice the density, and in 1983 a single box of 10 disks cost $45 – twice the price of the standard disks.

In the end, though, market forces kept the various non-compatible disk formats from splitting the PC market into separate blocs. (How the data was stored was another issue, however. Data stored on a CP/M system was unreadable on a PC-DOS drive, for example, so dedicated applications like Media Master promised to convert data from one format to another.)

That left lots of room for innovation within the floppy drive mainstream. In 1984, IBM introduced the IBM Advanced Technology (AT) computer. This model came with a high-density 5.25-inch drive, which could handle disks holding up to 1.2MB of data.

A variety of other floppy drives and disk formats were tried. These included 2.0, 2.5, 2.8, 3.0, 3.25, and 4.0 inch formats. Most quickly died off, but one, the 3.5” size – introduced by Sony in 1980 – proved to be a winner.

The 3.5” disk didn't really take off until 1982. Then, the Microfloppy Industry Committee approved a variation of the Sony design and the “new” 3.5” drive was quickly adopted by Apple for the Macintosh, by Commodore for the Amiga, and by Atari for its Atari ST PC. The mainstream PC market soon followed, and by 1988 the more durable 3.5” disks outsold the 5.25” floppy disks. (During the transition, however, most of us configured our PCs to have both a 3.5” drive and a 5.25” drive, in addition to the by-now-ubiquitous hard disks. Still, most of us eventually ran into at least one situation in which we had a file on a 5.25” disk and no floppy drive to read it on.)


The one 3.5” diskette that everyone met at one time or another: An AOL install disk.

The first 3.5” disks could only hold 720K. But they soon became popular because of the more convenient pocket-size format and their somewhat-sturdier construction (if you rolled an office chair over one of these, you had a chance that the data might survive). Another variation of the drive, using Modified Frequency Modulation (MFM) encoding, pushed 3.5” diskette storage up to 1.44MB in IBM's PS/2 and Apple's Mac IIx computers in the mid-to-late 1980s.

By then, though floppy drives would continue to evolve, other portable technologies began to surpass them.

In 1991, Jobs introduced the extended-density (ED) 3.5” floppy on his NeXT computer line. These could hold up to 2.88MB. But it wasn't enough. A variety of other portable formats that could store more data came along, such as magneto-optical drives and Iomega's Zip drive, and they started pushing floppies out of business.

The real floppy killers, though, were read-writable CDs, DVDs, and, the final nail in the coffin: USB flash drives. Today, a 64GB flash drive can hold more data than every floppy disk I've ever owned all rolled together.

Apple prospered the most from the floppy drive but ironically was the first to abandon it as read-writable CDs and DVDs took over. The 1998 iMac was the first consumer computer to ship without any floppy drive.

However, the floppy drive took more than a decade to die. Sony, which at the end owned 70% of what was left of the market, announced in 2010 that it was stopping the manufacture of 3.5” diskettes.

Today, you can still buy new 1.44MB floppy drives and floppy disks, but for the other formats you need to look to eBay or yard sales. If you really want a new 3.5” drive or disks, I'd get them sooner rather than later. Their day is almost done.

But, as they disappear even from memory, we should strive to remember just how vitally important floppy disks were in their day. Without them, our current computer world simply could not exist. Before the Internet was open to the public, it was floppy disks that let us create and trade programs and files. They really were what put the personal in “personal computing.”

Thursday, May 10. 2012

Via Christian Babski

-----

Remember your first time being sick in front of a screen!

Web Wolfenstein

Monday, December 19. 2011

Via TNW

-----

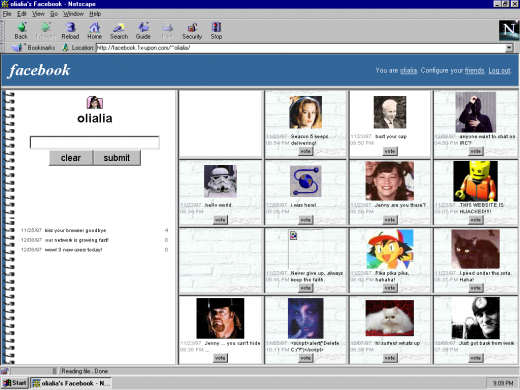

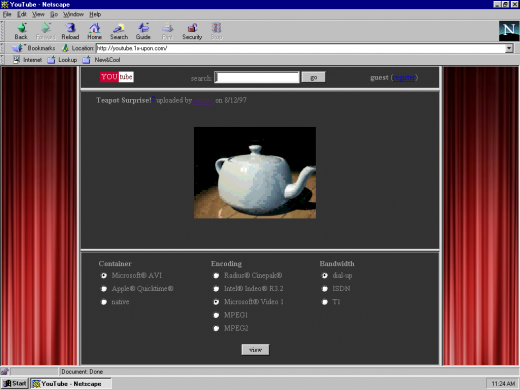

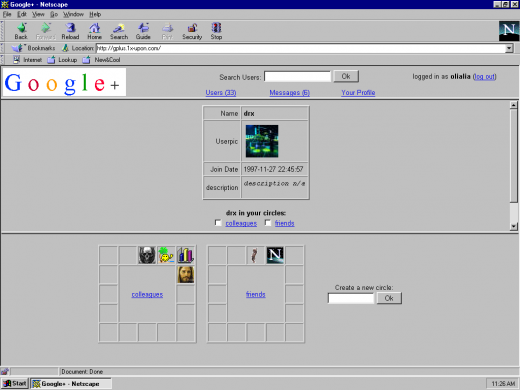

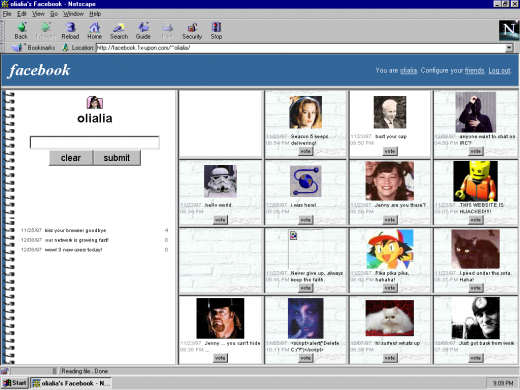

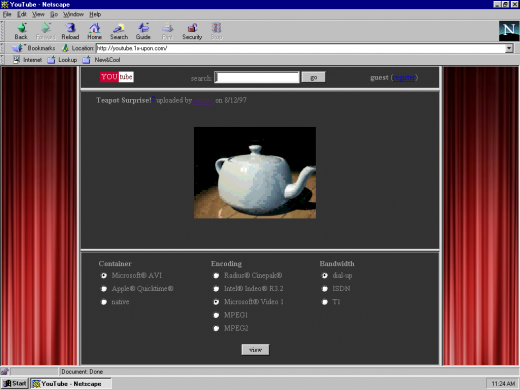

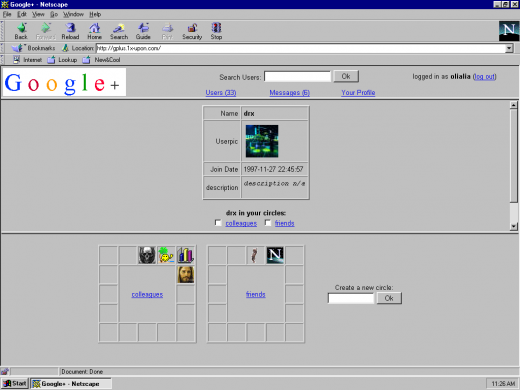

Once Upon is a brilliant project that has recreated three popular sites from today as if they were built in the dial-up era, in 1997.

Witness Facebook, with no real-names policy and photos displayed in an ugly grey table; YouTube, with a choice of encoding options to select before you watch a video; and Google+, where Circles of contacts are displayed as far-easier-to-render squares. Be prepared for a wait, though – the recreations are limited to 8kbps transfer speeds, as if you were loading them on a particularly slow dial-up connection.

This is perhaps the most extreme version of Web nostalgia we’ve seen. On a related note, these imaginings of what online social networking would have been like in centuries gone by are worth a look. Also, our report on 10 websites that changed the world is worth reading; they’re not what you might expect.


Thursday, October 20. 2011

Via ars technica

By Matthew Lasar

-----

When Tim Berners-Lee arrived at CERN, Geneva's celebrated European Particle Physics Laboratory, in 1980, the enterprise had hired him to upgrade the control systems for several of the lab's particle accelerators. But almost immediately, the inventor of the modern webpage noticed a problem: thousands of people were floating in and out of the famous research institute, many of them temporary hires.

"The big challenge for contract programmers was to try to understand the systems, both human and computer, that ran this fantastic playground," Berners-Lee later wrote. "Much of the crucial information existed only in people's heads."

So in his spare time, he wrote up some software to address this shortfall: a little program he named Enquire. It allowed users to create "nodes," information-packed index-card-style pages that linked to other pages. Unfortunately, the PASCAL application ran on CERN's proprietary operating system. "The few people who saw it thought it was a nice idea, but no one used it. Eventually, the disk was lost, and with it, the original Enquire."

Some years later Berners-Lee returned to CERN. This time he relaunched his "World Wide Web" project in a way that would more likely secure its success. On August 6, 1991, he published an explanation of WWW on the alt.hypertext newsgroup. He also released a code library, libWWW, which he wrote with his assistant Jean-François Groff. The library allowed participants to create their own Web browsers.

"Their efforts – over half a dozen browsers within 18 months – saved the poorly funded Web project and kicked off the Web development community," notes a commemoration of this project by the Computer History Museum in Mountain View, California. The best known early browser was Mosaic, produced by Marc Andreessen and Eric Bina at the National Center for Supercomputing Applications (NCSA).

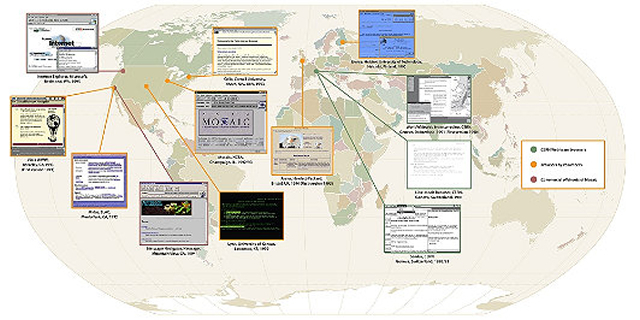

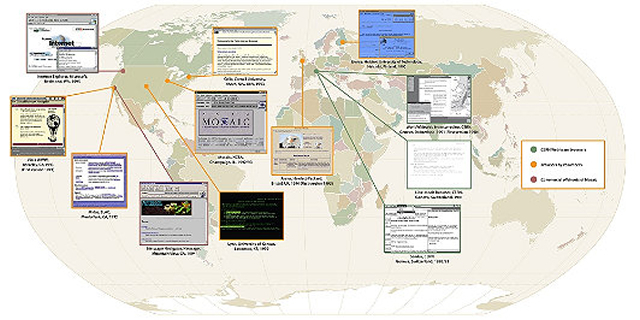

Mosaic was soon spun into Netscape, but it was not the first browser. A map assembled by the Museum offers a sense of the global scope of the early project. What's striking about these early applications is that they had already worked out many of the features we associate with later browsers. Here is a tour of World Wide Web viewing applications, before they became famous.

The CERN browsers

Tim Berners-Lee's original 1990 WorldWideWeb browser was both a browser and an editor. That was the direction he hoped future browser projects would go. CERN has put together a reproduction of its formative content. As you can see in the screenshot below, by 1993 it offered many of the characteristics of modern browsers.

The software's biggest limitation was that it ran on the NeXTStep operating system. But shortly after WorldWideWeb, CERN mathematics intern Nicola Pellow wrote a line mode browser that could function elsewhere, including on UNIX and MS-DOS networks. Thus "anyone could access the web," explains Internet historian Bill Stewart, "at that point consisting primarily of the CERN phone book."

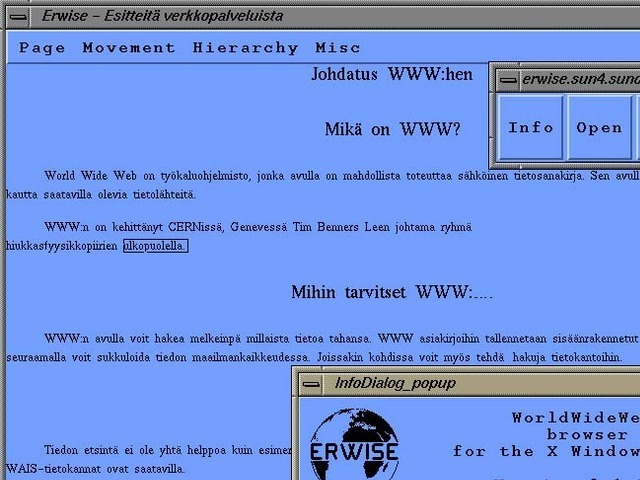

Erwise

Erwise came next. It was written by four Finnish college students in 1991 and released in 1992. Erwise is credited as the first browser that offered a graphical interface. It could also search for words on pages.

Berners-Lee wrote a review of Erwise in 1992. He noted its ability to handle various fonts, underline hyperlinks, let users double-click them to jump to other pages, and host multiple windows.

"Erwise looks very smart," he declared, albeit puzzling over a "strange box which is around one word in the document, a little like a selection box or a button. It is neither of these – perhaps a handle for something to come."

So why didn't the application take off? In a later interview, one of Erwise's creators noted that Finland was mired in a deep recession at the time. The country was devoid of angel investors.

"We could not have created a business around Erwise in Finland then," he explained. "The only way we could have made money would have been to continue our developing it so that Netscape might have finally bought us. Still, the big thing is, we could have reached the initial Mosaic level with relatively small extra work. We should have just finalized Erwise and published it on several platforms."

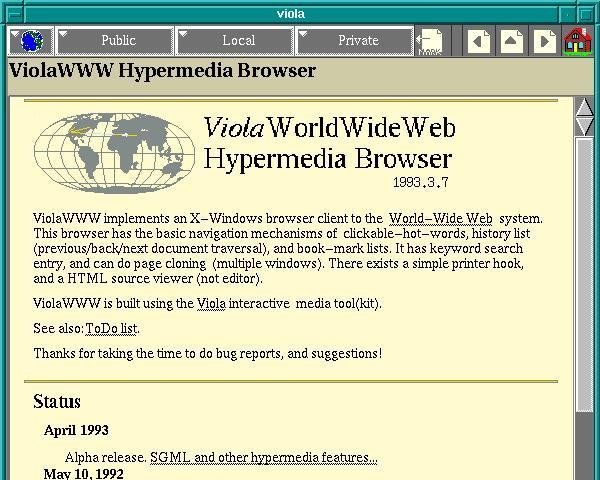

ViolaWWW

ViolaWWW was released in April of 1992. Developer Pei-Yuan Wei wrote it at the University of California at Berkeley via his UNIX-based Viola programming/scripting language. No, Pei Wei didn't play the viola, "it just happened to make a snappy abbreviation" of Visually Interactive Object-oriented Language and Application, write James Gillies and Robert Cailliau in their history of the World Wide Web.

Wei appears to have gotten his inspiration from the early Mac program HyperCard, which allowed users to build matrices of formatted hyper-linked documents. "HyperCard was very compelling back then, you know graphically, this hyperlink thing," he later recalled. But the program was "not very global and it only worked on Mac. And I didn't even have a Mac."

But he did have access to UNIX X-terminals at UC Berkeley's Experimental Computing Facility. "I got a HyperCard manual and looked at it and just basically took the concepts and implemented them in X-windows." Except, most impressively, he created them via his Viola language.

One of the most significant and innovative features of ViolaWWW was that it allowed a developer to embed scripts and "applets" in the browser page. This anticipated the huge wave of Java-based applet features that appeared on websites in the later 1990s.

In his documentation, Wei also noted various "misfeatures" of ViolaWWW, most notably its inaccessibility to PCs.

- Not ported to PC platform.

- HTML Printing is not supported.

- HTTP is not interruptable, and not multi-threaded.

- Proxy is still not supported.

- Language interpreter is not multi-threaded.

"The author is working on these problems... etc," Wei acknowledged at the time. Still, "a very neat browser useable by anyone: very intuitive and straightforward," Berners-Lee concluded in his review of ViolaWWW. "The extra features are probably more than 90% of 'real' users will actually use, but just the things which an experienced user will want."

Midas and Samba

In September of 1991, Stanford Linear Accelerator physicist Paul Kunz visited CERN. He returned with the code necessary to set up the first North American Web server at SLAC. "I've just been to CERN," Kunz told SLAC's head librarian Louise Addis, "and I found this wonderful thing that a guy named Tim Berners-Lee is developing. It's just the ticket for what you guys need for your database."

Addis agreed and put the research center's key database on the Web. Fermilab physicists set up a server shortly after.

Then over the summer of 1992 SLAC physicist Tony Johnson wrote Midas, a graphical browser for the Stanford physics community. The big draw for Midas users was that it could display PostScript documents, favored by physicists because of their ability to accurately reproduce paper-scribbled scientific formulas.

"With these key advances, Web use surged in the high energy physics community," concluded a 2001 Department of Energy assessment of SLAC's progress.

Meanwhile, CERN associates Pellow and Robert Cailliau released the first Web browser for the Macintosh computer. Gillies and Cailliau narrate Samba's development.

For Pellow, progress in getting Samba up and running was slow, because after every few links it would crash and nobody could work out why. "The Mac browser was still in a buggy form," lamented Tim [Berners-Lee] in a September '92 newsletter. "A W3 T-shirt to the first one to bring it up and running!" he announced. The T-shirt duly went to Fermilab's John Streets, who tracked down the bug, allowing Nicola Pellow to get on with producing a usable version of Samba.

Samba "was an attempt to port the design of the original WWW browser, which I wrote on the NeXT machine, onto the Mac platform," Berners-Lee adds, "but was not ready before NCSA [National Center for Supercomputing Applications] brought out the Mac version of Mosaic, which eclipsed it."

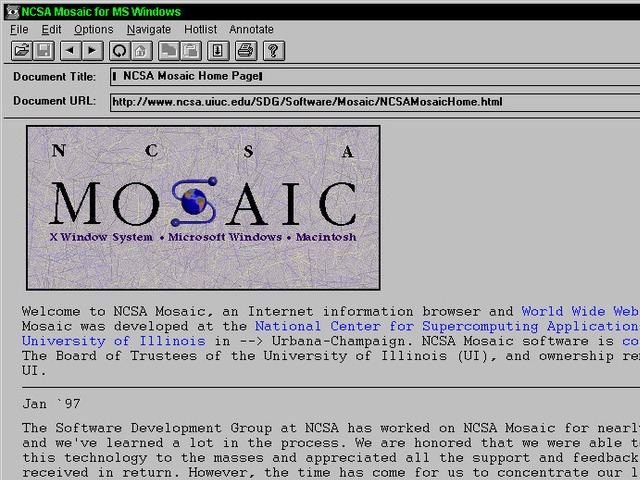

Mosaic

Mosaic was "the spark that lit the Web's explosive growth in 1993," historians Gillies and Cailliau explain. But it could not have been developed without forerunners and the NCSA's University of Illinois offices, which were equipped with the best UNIX machines. NCSA also had Dr. Ping Fu, a PhD computer graphics wizard who had worked on morphing effects for Terminator 2. She had recently hired an assistant named Marc Andreessen.

"How about you write a graphical interface for a browser?" Fu suggested to her new helper. "What's a browser?" Andreessen asked. But several days later NCSA staff member Dave Thompson gave a demonstration of Nicola Pellow's early line browser and Pei Wei's ViolaWWW. And just before this demo, Tony Johnson posted the first public release of Midas.

The latter software set Andreessen back on his heels. "Superb! Fantastic! Stunning! Impressive as hell!" he wrote to Johnson. Then Andreessen got NCSA Unix expert Eric Bina to help him write their own X-browser.

Mosaic offered many new web features, including support for video clips, sound, forms, bookmarks, and history files. "The striking thing about it was that unlike all the earlier X-browsers, it was all contained in a single file," Gillies and Cailliau explain:

Installing it was as simple as pulling it across the network and running it. Later on Mosaic would rise to fame because of the `<IMG>` tag that allowed you to put images inline for the first time, rather than having them pop up in a different window like Tim's original NeXT browser did. That made it easier for people to make Web pages look more like the familiar print media they were used to; not everyone's idea of a brave new world, but it certainly got Mosaic noticed.

"What I think Marc did really well," Tim Berners-Lee later wrote, "is make it very easy to install, and he supported it by fixing bugs via e-mail any time night or day. You'd send him a bug report and then two hours later he'd mail you a fix."

Perhaps Mosaic's biggest breakthrough, in retrospect, was that it was a cross-platform browser. "By the power vested in me by nobody in particular, X-Mosaic is hereby released," Andreessen proudly declared on the www-talk group on January 23, 1993. Aleks Totic unveiled his Mac version a few months later. A PC version came from the hands of Chris Wilson and Jon Mittelhauser.

The Mosaic browser was based on Viola and Midas, the Computer History Museum's exhibit notes. And it used the CERN code library. "But unlike others, it was reliable, could be installed by amateurs, and soon added colorful graphics within Web pages instead of as separate windows."

A guy from Japan

But Mosaic wasn't the only innovation to appear around that time. University of Kansas student Lou Montulli adapted Lynx, a campus information hypertext browser, for the Internet and the Web. It launched in March 1993. "Lynx quickly became the preferred web browser for character mode terminals without graphics, and remains in use today," historian Stewart explains.

And at Cornell University's Law School, Tom Bruce was writing a Web application for PCs, "since those were the computers that lawyers tended to use," Gillies and Cailliau observe. Bruce unveiled his browser Cello on June 8, 1993, "which was soon being downloaded at a rate of 500 copies a day."

By the fall of 1994, Andreessen was in Mountain View, California, with his team poised to release Mosaic Netscape on October 13, 1994. He, Totic, and Mittelhauser nervously put the application up on an FTP server. Mittelhauser later recalled the moment: "And it was five minutes and we're sitting there. Nothing has happened. And all of a sudden the first download happened. It was a guy from Japan. We swore we'd send him a T-shirt!"

But what this complex story reminds us is that no innovation is created by one person. The Web browser was propelled into our lives by visionaries around the world, people who often didn't quite understand what they were doing, but were motivated by curiosity, practical concerns, or even playfulness. Their separate sparks of genius kept the process going. So did Tim Berners-Lee's insistence that the project stay collaborative and, most importantly, open.

"The early days of the web were very hand-to-mouth," he writes. "So many things to do, such a delicate flame to keep alive."

Further reading

- Tim Berners-Lee, Weaving the Web: The Original Design and Ultimate Destiny of the World Wide Web

- James Gillies and Robert Cailliau, How the Web Was Born

- Bill Stewart, Living Internet (www.livinginternet.com)

Friday, July 29. 2011

Via POPSCI

By Rebecca Boyle

-----

"Spatial humanities," the future of history

Even using the most detailed sources, studying history often requires a great deal of imagination, so that historians can visualize what the past looked and felt like. Now, new computer-assisted data analysis can help them really see it.

Geographic Information Systems, which can analyze information related to a physical location, are helping historians and geographers study past landscapes like Gettysburg, reconstructing what Robert E. Lee would have seen from Seminary Ridge. Researchers are studying the parched farmlands of the 1930s Dust Bowl, and even reconstructing scenes from Shakespeare’s 17th-century London.

But far from simply adding layers of complexity to historical study, GIS-enhanced landscape analysis is leading to new findings, the New York Times reports. Historians studying the Battle of Gettysburg have shed light on the tactical decisions that led to the turning point in the Civil War. And others examining records from the Dust Bowl era have found that extensive and irresponsible land use was not necessarily to blame for the disaster.

GIS has long been used by city planners who want to record changes to the landscape over time. And interactive map technology like Google Maps has led to several new discoveries. But by analyzing data that describes the physical attributes of a place, historians are finding answers to new questions.

Anne Kelly Knowles and colleagues at Middlebury College in Vermont culled information from historical maps, military documents explaining troop positions, and even paintings to reconstruct the Gettysburg battlefield. The researchers were able to explain what Robert E. Lee could and could not see from his vantage points at the Lutheran seminary and on Seminary Ridge. He probably could not see the Union forces amassing on the eastern side of the battlefield, which helps explain some of his tactical decisions, Knowles said.

Geoff Cunfer at the University of Saskatchewan studied a trove of data from all 208 affected counties in Texas, New Mexico, Colorado, Oklahoma and Kansas: annual precipitation reports, wind direction, agricultural censuses and other data that would have been impossible to sift through without the help of a computer. He learned that dust storms were common throughout the 19th century, and that areas that saw nary a tiller blade suffered just as much.

The new data-mapping phenomenon is known as spatial humanities, the Times reports. Check out their story to find out how advanced technology is the future of history.

[New York Times]

Thursday, July 28. 2011

Via Android Tapp

-----

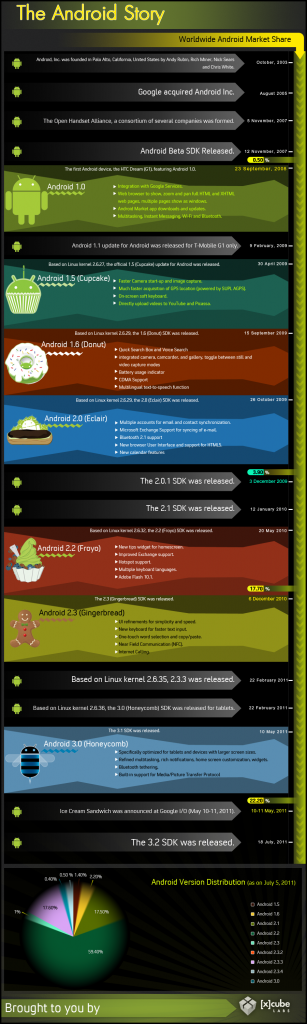

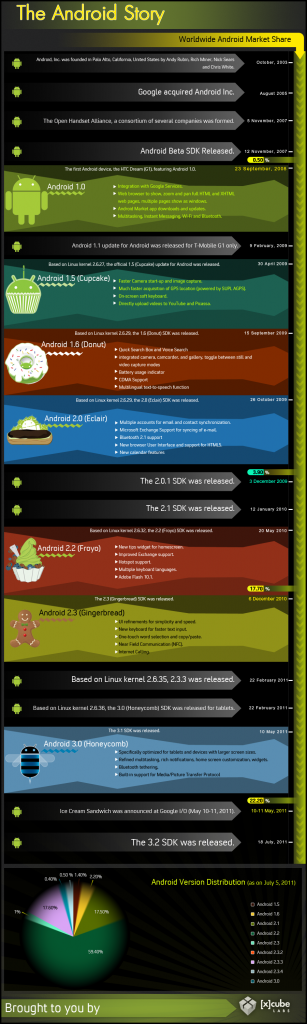

![The History of Android Version Releases [Infographic]](http://blog.computedby.com/cby/images/46_1313483255_0.jpg)

Check out this infographic by [x]cubelabs showing the history of Android version releases to date, which even tosses in when Android was officially started and when it was acquired by Google. The graphic highlights key features in each milestone and concludes with today’s snapshot, which shows most Android devices running Android 2.2 (Froyo). Have a look!

Saturday, July 09. 2011

Via Businessweek

-----

The Real Veterans of Technology

To survive for more than 100 years, tech companies such as Siemens, Nintendo, and IBM have had to innovate

By Antoine Gara

When IBM turned 100 on June 16, it joined a surprising number of tech companies that have been evolving in order to survive since long before there was a Silicon Valley. Here’s a sampling of tech centenarians, and some of the breakthroughs that helped fuel their longevity.

Siemens: Topical Press Agency/Getty Images; Western Union: Hulton Archive/Getty Images; Diebold: Diebold Inc; Ericsson: SSPL/Getty Images; Nintendo: Tim Whitby/Alamy; Kodak: George Eastman House
