Entries tagged as mobile

Tuesday, June 24. 2014

New open-source router firmware opens your Wi-Fi network to strangers

ComputedBy - The idea of sharing a WiFi access point is far from new (it is obviously as old as the WiFi access point itself), but previous solutions did not address many issues (including the legal ones) that this proposal finally seems to take seriously. It may really succeed in transforming a ridiculously endless utopia into something tangible!

Now, Internet providers (including mobile networks) may have a word to say about that. Just by changing their terms of service, they can make this practice illegal... as business rhymes neither with effectiveness (yes, I know, that is strange!) nor with objectivity. It took some time, but geographical boundaries were raised over the Internet (an achievement as impressive as it is ridiculous when you think about it), so I'm pretty sure 'they' can find a workaround to make this idea impossible, or get their hands on it.

Via ars technica

-----

We’ve often heard security folks explain their belief that one of the best ways to protect Web privacy and security on one's home turf is to lock down one's private Wi-Fi network with a strong password. But a coalition of advocacy organizations is calling such conventional wisdom into question.

Members of the “Open Wireless Movement,” including the Electronic Frontier Foundation (EFF), Free Press, Mozilla, and Fight for the Future, are advocating that we open up our private Wi-Fi networks (or at least a small slice of our available bandwidth) to strangers. They claim that such a random act of kindness can actually make us safer online while simultaneously facilitating a better allocation of finite broadband resources.

The OpenWireless.org website explains the group’s initiative. “We are aiming to build technologies that would make it easy for Internet subscribers to portion off their wireless networks for guests and the public while maintaining security, protecting privacy, and preserving quality of access," its mission statement reads. "And we are working to debunk myths (and confront truths) about open wireless while creating technologies and legal precedent to ensure it is safe, private, and legal to open your network.”

One such technology, which EFF plans to unveil at the Hackers on Planet Earth (HOPE X) conference next month, is open-sourced router firmware called Open Wireless Router. This firmware would enable individuals to share a portion of their Wi-Fi networks with anyone nearby, password-free, as Adi Kamdar, an EFF activist, told Ars on Friday.

Home network sharing tools are not new, and the EFF has been touting the benefits of open-sourcing Web connections for years, but Kamdar believes this new tool marks the second phase in the open wireless initiative. Unlike previous tools, he claims, EFF’s software will be free for all, will not require any sort of registration, and will actually make surfing the Web safer and more efficient.

Open Wi-Fi initiative members have argued that the act of providing wireless networks to others is a form of “basic politeness… like providing heat and electricity, or a hot cup of tea” to a neighbor, as security expert Bruce Schneier described it.

Walled off

Kamdar said that the new firmware utilizes smart technologies that prioritize the network owner's traffic over others', so good samaritans won't have to wait for Netflix to load because of strangers using their home networks. What's more, he said, "every connection is walled off from all other connections," so as to decrease the risk of unwanted snooping.
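One way to picture the owner-first behavior Kamdar describes is a strict-priority queue. The sketch below is purely illustrative: the `PriorityScheduler` class, the `OWNER`/`GUEST` traffic classes, and the packet strings are hypothetical names, and nothing here is taken from the Open Wireless Router firmware, whose actual mechanism the article does not describe.

```python
import heapq
from itertools import count

# Hypothetical traffic classes: a lower number is served first.
OWNER, GUEST = 0, 1


class PriorityScheduler:
    """Strict-priority packet queue: owner packets always leave before guest packets."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # tie-breaker that keeps arrival order within a class

    def enqueue(self, traffic_class, packet):
        heapq.heappush(self._heap, (traffic_class, next(self._seq), packet))

    def dequeue(self):
        """Return the next packet to send, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, packet = heapq.heappop(self._heap)
        return packet


scheduler = PriorityScheduler()
scheduler.enqueue(GUEST, "guest-1")
scheduler.enqueue(OWNER, "owner-1")
scheduler.enqueue(GUEST, "guest-2")
scheduler.enqueue(OWNER, "owner-2")

order = []
packet = scheduler.dequeue()
while packet is not None:
    order.append(packet)
    packet = scheduler.dequeue()

print(order)  # ['owner-1', 'owner-2', 'guest-1', 'guest-2']
```

A real router would more likely lean on the operating system's traffic-shaping facilities and would also have to keep guest traffic from starving entirely; this sketch ignores both.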

Additionally, EFF hopes that opening one’s Wi-Fi network will, in the long run, make it more difficult to tie an IP address to an individual.

“From a legal perspective, we have been trying to tackle this idea that law enforcement and certain bad plaintiffs have been pushing, that your IP address is tied to your identity. Your identity is not your IP address. You shouldn't be targeted by a copyright troll just because they know your IP address," said Kamdar.

This isn’t an abstract problem, either. Consider the case of the Californian who, after allowing a friend access to his home Wi-Fi network, found his home turned inside-out by police officers asking tough questions about child pornography. The man later learned that his houseguest had downloaded illicit materials, thus subjecting the homeowner to police interrogation. Should a critical mass begin to open private networks to strangers, the practice of correlating individuals with IP addresses would prove increasingly difficult and therefore might be reduced.

While the EFF firmware will initially be compatible with only one specific router, the organization would like to eventually make it compatible with other routers and even, perhaps, develop its own router. “We noticed that router software, in general, is pretty insecure and inefficient," Kamdar said. “There are a few major players in the router space. Even though various flaws have been exposed, there have not been many fixes.”

Wednesday, April 16. 2014

Qualcomm is getting high on 64-bit chips

Via PCWorld

-----

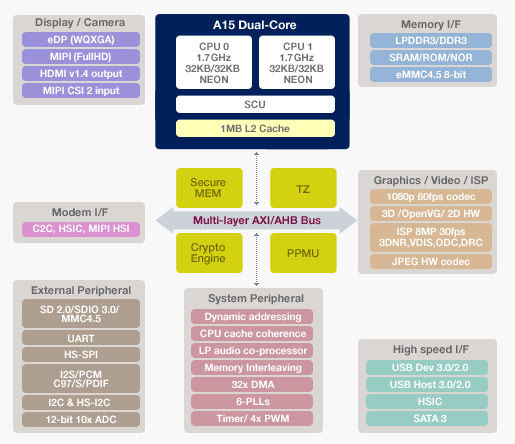

Qualcomm is getting high on 64-bit chips with its fastest ever Snapdragon processor, which will render 4K video, support LTE Advanced and could run the 64-bit Android OS.

The new Snapdragon 810 is the company’s “highest performing” mobile chip for smartphones and tablets, Qualcomm said in a statement. Mobile devices with the 64-bit chip will ship in the first half of next year, and be faster and more power-efficient. Snapdragon chips are used in handsets with Android and Windows Phone operating systems, which are not available in 64-bit form yet.

The Snapdragon 810 is loaded with the latest communication and graphics technologies from Qualcomm. The graphics processor can render 4K (3840 x 2160 pixel) video at 30 frames per second, and 1080p video at 120 frames per second. The chip also has an integrated modem that supports LTE and its successor, LTE-Advanced, which is emerging.
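The two video modes quoted above can be compared with a quick back-of-the-envelope calculation. This is only a sketch: it counts raw pixels per second, assumes 1080p means 1920 x 1080, and says nothing about how the Adreno GPU actually schedules its work.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixel throughput for a given resolution and frame rate."""
    return width * height * fps


uhd_30 = pixels_per_second(3840, 2160, 30)    # 4K at 30 fps (figures from the article)
fhd_120 = pixels_per_second(1920, 1080, 120)  # 1080p at 120 fps (1920 x 1080 assumed)

print(uhd_30, fhd_120, uhd_30 == fhd_120)  # 248832000 248832000 True
```

Under these assumptions the two modes move the same number of pixels per second, which would explain why they are quoted together.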

The 810 also is among the first mobile chips to support the latest low-power LPDDR4 memory, which will allow programs to run faster while consuming less power. This will be beneficial, especially for tablets, as 64-bit chips allow mobile devices to have more than 4GB of memory, which is the limit on current 32-bit chips.
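The 4GB figure follows from the width of a memory address. As a minimal sketch, assuming a flat, byte-addressable address space with no extensions that widen physical addressing (the function name is illustrative):

```python
GIB = 2 ** 30  # bytes in one gibibyte


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct byte addresses an address of this width can name."""
    return 2 ** address_bits


print(addressable_bytes(32) // GIB)  # 4
print(addressable_bytes(64) // GIB)  # 17179869184
```

In practice, 64-bit chips expose far fewer physical address lines than 64, so the second number is a theoretical ceiling rather than a memory size any device will ship with.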

The layout of the Snapdragon 810 chip.

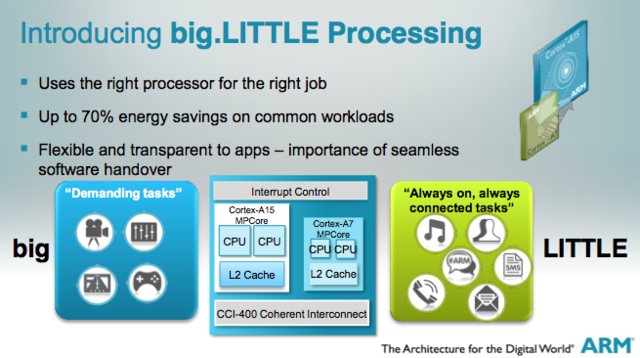

The eight-core chip has a mix of high-power ARM Cortex-A57 CPU cores for demanding tasks and low-power A53 CPU cores for mundane tasks like taking calls, messaging and MP3 playback. The multiple cores ensure more power-efficient use of the chip, which helps extend the battery life of mobile devices.

The company also introduced a Snapdragon 808 six-core 64-bit chip. The chips will be among the first made using the latest 20-nanometer manufacturing process, which is an advance from the 28-nm process used to make Snapdragon chips today.

Qualcomm now has to wait for Google to release a 64-bit version of Android for ARM-based mobile devices. Intel has already shown mobile devices running 64-bit Android with its Merrifield chip, but most mobile products today run on ARM processors. Qualcomm licenses Snapdragon processor architecture and designs from ARM.

Work for 64-bit Android is already underway, and applications like the Chrome browser are already being developed for the OS. Google has not officially commented on when 64-bit Android would be released, but industry observers believe it could be announced at the Google I/O conference in late June.

Qualcomm spokesman Jon Carvill declined to comment on support for 64-bit Android. But the chips are “further evidence of our commitment to deliver top-to-bottom mobile 64-bit leadership across product tiers for our customers,” Carvill said in an email.

Qualcomm’s chips are used in some of the world’s top smartphones, and will appear in Samsung’s Galaxy S5. A Qualcomm executive in October last year called Apple’s A7, the world’s first 64-bit mobile chip, a “marketing gimmick,” but the company has moved on and now has five 64-bit chips coming to medium-priced and premium smartphones and tablets. Still, no 64-bit Android smartphones are available yet; Apple has a head start and remains the only company selling a 64-bit smartphone with its iPhone 5S.

The 810 supports HDMI 1.4 for 4K video output, and the Adreno 430 graphics processor is 30 percent faster on graphics performance and 20 percent more power efficient than the older Adreno 420 GPU. The graphics processor will support 55-megapixel sensors, Qualcomm said. Other chip features include 802.11ac Wi-Fi with built-in technology for faster wireless data transfers, Bluetooth 4.1 and a processing core for location services.

The six-core Snapdragon 808 is a notch down on performance compared to the 810, and also has fewer features. The 808 supports LTE-Advanced, but it supports displays only up to 2560 x 1600 pixels and uses LPDDR3 memory. The chip has two Cortex-A57 CPU cores and four Cortex-A53 cores.

The chips will ship out to device makers for testing in the second half of this year.

Monday, February 24. 2014

Qloudlab

Qloudlab is the inventor and patent holder of the world’s first touchscreen-based biosensor. We are developing a cost-effective technology that turns your smartphone touchscreen into a medical device for multiple blood diagnostic tests: no plug-in required, just a simple disposable. Our innovation is at the convergence of smartphones, healthcare, and cloud solutions. The development is supported by EPFL (Prof. Philippe Renaud, Microsystems Laboratory) and by a major industrial player in cutting-edge touchscreen solutions for consumer, industrial and automotive products.

Wednesday, October 30. 2013

The Matterhorn like you've never seen it

Via EPFL

-----

15.10.13 - Two EPFL spin-offs, senseFly and Pix4D, have modeled the Matterhorn in 3D, at a level of detail never before achieved. It took senseFly’s ultralight drones just six hours to snap the high altitude photographs that were needed to build the model.

They weigh less than a kilo each, but they’re as agile as eagles in the high mountain air. These “ebees,” flying robots developed by senseFly, a spin-off of EPFL’s Intelligent Systems Laboratory (LIS), took off in September to photograph the Matterhorn from every conceivable angle. The drones are completely autonomous, requiring nothing more than a computer-conceived flight plan before being launched by hand into the air to complete their mission.

Three of them were launched from a 3,000m “base camp,” and the fourth made the final assault from the summit of the stereotypical Swiss landmark, at 4,478m above sea level. In their six-hour flights, the completely autonomous flying machines took more than 2,000 high-resolution photographs. The only remaining task was for software developed by Pix4D, another EPFL spin-off from the Computer Vision Lab (CVLab), to assemble them into an impressive 300-million-point 3D model. The model was presented last weekend to participants of the Drone and Aerial Robots Conference (DARC), in New York, by Henri Seydoux, CEO of the French company Parrot, majority shareholder in senseFly.

All-terrain and even in swarms

“We want above all to demonstrate what our devices are capable of achieving in the extreme conditions that are found at high altitudes,” explains Jean-Christophe Zufferey, head of senseFly. In addition to the challenges of altitude and atmospheric turbulence, the drones also had to take into consideration, for the first time, the volume of the object being photographed. Up to this point they had only been used to survey relatively flat terrain.

Last week the dynamic Swiss company – which has just moved into new, larger quarters in Cheseaux-sur-Lausanne – also announced that it had made software improvements enabling drones to avoid colliding with each other in flight; now a swarm of drones can be launched simultaneously to undertake even more rapid and precise mapping missions.

Wednesday, October 02. 2013

Scientists want to turn smartphones into earthquake sensors

Via The Verge

-----

For years, scientists have struggled to collect accurate real-time data on earthquakes, but a new article published today in the Bulletin of the Seismological Society of America may have found a better tool for the job, using the same accelerometers found in most modern smartphones. The article finds that the MEMS accelerometers in current smartphones are sensitive enough to detect earthquakes of magnitude five or higher when located near the epicenter. Because the devices are so widely used, scientists speculate future smartphone models could be used to create an "urban seismic network," transmitting real-time geological data to authorities whenever a quake takes place.

The authors pointed to Stanford's Quake-Catcher Network as an inspiration, which connects seismographic equipment to volunteer computers to create a similar network. But using smartphone accelerometers would be cheaper and easier to carry into extreme environments. The sensor will need to become more sensitive before it can be used in the field, but the authors say once technology catches up, a smartphone accelerometer could be the perfect earthquake research tool. As one researcher told The Verge, "right from the start, this technology seemed to have all the requirements for monitoring earthquakes — especially in extreme environments, like volcanoes or underwater sites."
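To illustrate how a stream of accelerometer readings might be turned into a quake alert, here is a minimal sketch of a short-term-average over long-term-average (STA/LTA) trigger, a technique commonly used in seismology. It is an assumption for illustration, not the method from the paper; the function name, window sizes, threshold, and sample values are all hypothetical.

```python
def sta_lta_triggers(samples, short_n, long_n, threshold):
    """Return sample indices where the STA/LTA ratio exceeds the threshold.

    samples: acceleration readings with gravity already removed (assumption)
    short_n: length of the short-term window, in samples
    long_n:  length of the long-term window, in samples
    """
    if not 0 < short_n <= long_n:
        raise ValueError("need 0 < short_n <= long_n")

    triggers = []
    for end in range(long_n, len(samples) + 1):
        # Both windows end at the same, most recent sample.
        lta = sum(abs(x) for x in samples[end - long_n:end]) / long_n
        sta = sum(abs(x) for x in samples[end - short_n:end]) / short_n
        if lta > 0 and sta / lta > threshold:
            triggers.append(end - 1)
    return triggers


# Hypothetical trace: 50 samples of sensor noise, then 5 samples of strong shaking.
readings = [0.01] * 50 + [1.0] * 5
print(sta_lta_triggers(readings, short_n=5, long_n=50, threshold=3.0))
```

The ratio makes the trigger relative to each phone's own background noise, which matters when the sensors are as varied and as noisy as consumer handsets.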

Monday, September 23. 2013

Qualcomm joins Wireless Power Consortium board, sparks hope for A4WP and Qi unification

Via Engadget

-----

Qualcomm, the founding member of the Alliance for Wireless Power (or A4WP for short), made a surprise move today by joining the management board of the rival Wireless Power Consortium (or WPC), the group behind the already commercially available Qi standard. This is quite an interesting development considering how both alliances have been openly critical of each other, and yet now there's a chance of seeing just one standard getting the best of both worlds. That is, of course, dependent on Qualcomm's real intentions behind joining the WPC.

While Qi is now a well-established ecosystem backed by 172 companies, its current "first-gen" inductive charging method is somewhat sensitive to the alignment of the devices on the charging mats (though there has been a recent breakthrough). Another issue is that Qi can currently provide only up to 5W of power (which is dependent on both the quality of the coil and the operating frequency), and this may not be sufficient for charging up large devices at a reasonable pace. For instance, even with the 10W USB adapter, the iPad takes hours to fully juice up, let alone with just half of that power.
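The iPad comparison can be made concrete with a rough estimate of charge time as battery energy divided by charger power. This is only a sketch: the 42.5 Wh battery size is an assumption (roughly the rating of a full-size iPad of that era, not a figure from the article), and real charging tapers off near full, so actual times are longer.

```python
def charge_hours(battery_wh: float, charger_watts: float, efficiency: float = 1.0) -> float:
    """Idealized time to charge from empty, in hours."""
    if charger_watts <= 0 or not 0 < efficiency <= 1:
        raise ValueError("charger_watts must be positive and efficiency in (0, 1]")
    return battery_wh / (charger_watts * efficiency)


IPAD_BATTERY_WH = 42.5  # assumed battery capacity, not taken from the article

for watts in (10, 5):  # 10W USB adapter vs. the 5W Qi limit
    print(f"{watts} W: {charge_hours(IPAD_BATTERY_WH, watts):.2f} h")
```

Halving the power doubles the idealized time, and any losses in the wireless link (the `efficiency` parameter) push it out further.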

Looking ahead, both the WPC and the 63-strong A4WP are already working on their own magnetic resonance implementations to enable longer-range charging. Additionally, A4WP's standard has been approved for up to 24W of output, whereas the WPC is already developing a medium-power (from 15W) Qi specification for the likes of laptops and power tools.

Here's where the two standards differ. A4WP's implementation allows simultaneous charging of devices with different power requirements on the same pad, thus offering more spatial freedom. On the other hand, Qi follows a one-to-one control design to maximize efficiency: the power transfer depends entirely on how much juice the device needs, and it can even switch off completely once the device is charged.

What remains unclear is whether Qualcomm has other motives behind its participation in the WPC's board of management. While the WPC folks "encourage competitors to join" for the sake of "open development of Qi," this could also hamper the development of their new standard. Late last year, we spoke to the WPC's co-chair Camille Tang (the name "Qi" was actually her idea; plus she's also the president and co-founder of Hong Kong-based Convenient Power), and she expressed concern over the potential disruption from the new wireless power groups.

"The question to ask is: why are all these groups now coming out and saying they're doing a standard? It's possible that some people might say they really are a standard, but they may actually not intend to put products out there," said Tang.

"For example, there's one company with their technology and one thing that's rolling out in infrastructure. They don't have any devices, it's not compatible with anything else, so you think: why do they do that?

"It's about money. Not just licensing money, but other types of money as well."

At least on the surface, Qualcomm is showing its keen side to get things going for everyone's best interest. In a statement we received from a spokesperson earlier, the company implied that joining the WPC's board is "the logical step to grow the wireless power industry beyond the current first generation products and towards next generation, loosely coupled technology." However, Qualcomm still "believes the A4WP represents the most mature and best implementation of resonant charging."

Monday, September 09. 2013

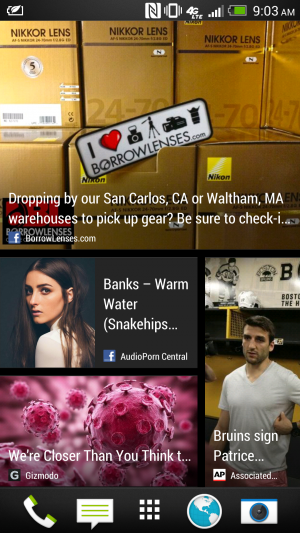

Why cards are the future of the web

-----

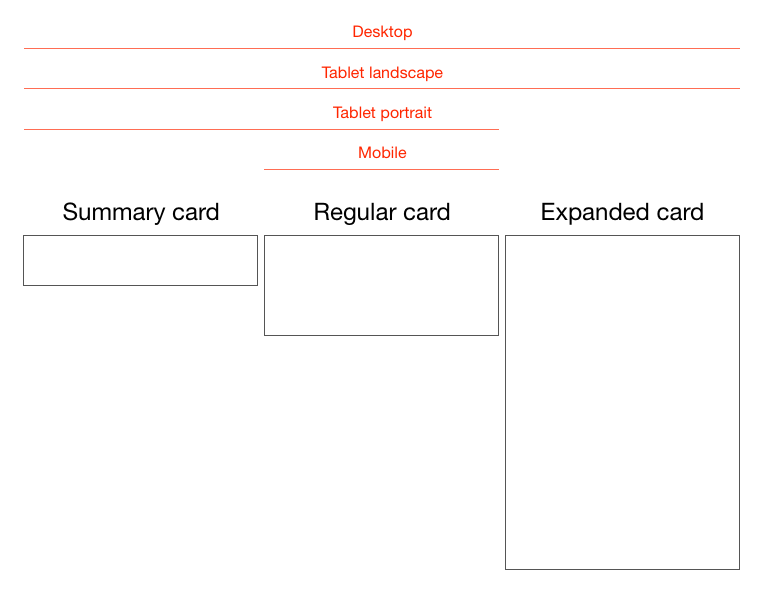

Cards are fast becoming the best design pattern for mobile devices.

We are currently witnessing a re-architecture of the web, away from pages and destinations, towards completely personalised experiences built on an aggregation of many individual pieces of content. Content being broken down into individual components and re-aggregated is the result of the rise of mobile technologies, billions of screens of all shapes and sizes, and unprecedented access to data from all kinds of sources through APIs and SDKs. This is driving the web away from many pages of content linked together, towards individual pieces of content aggregated together into one experience.

The aggregation depends on:

- The person consuming the content and their interests, preferences, behaviour.

- Their location and environmental context.

- Their friends’ interests, preferences and behaviour.

- The targeted advertising ecosystem.

If the predominant medium of our time is set to be the portable screen (think phones and tablets), then the predominant design pattern is set to be cards. The signs are already here…

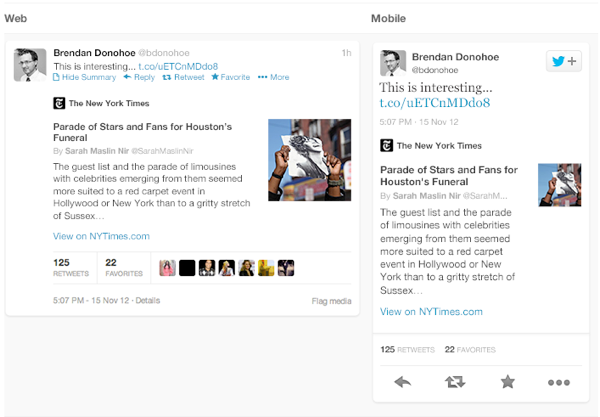

Twitter is moving to cards

Twitter recently launched Cards, a way to attach multimedia inline with tweets. Now the NYT should care more about how their story appears on the Twitter card (right hand in image above) than on their own web properties, because the likelihood is that the content will be seen more often in card format.

Google is moving to cards

With Google Now, Google is rethinking information distribution, away from search, to personalised information pushed to mobile devices. Their design pattern for this is cards.

Everyone is moving to cards

Pinterest (above left) is built around cards. The new Discover feature on Spotify (above right) is built around cards. Much of Facebook now represents cards. Many parts of iOS7 are now card based, for example the app switcher and Airdrop.

The list goes on. The most exciting thing is that despite these many early card based designs, I think we’re only getting started. Cards are an incredible design pattern, and they have been around for a long time.

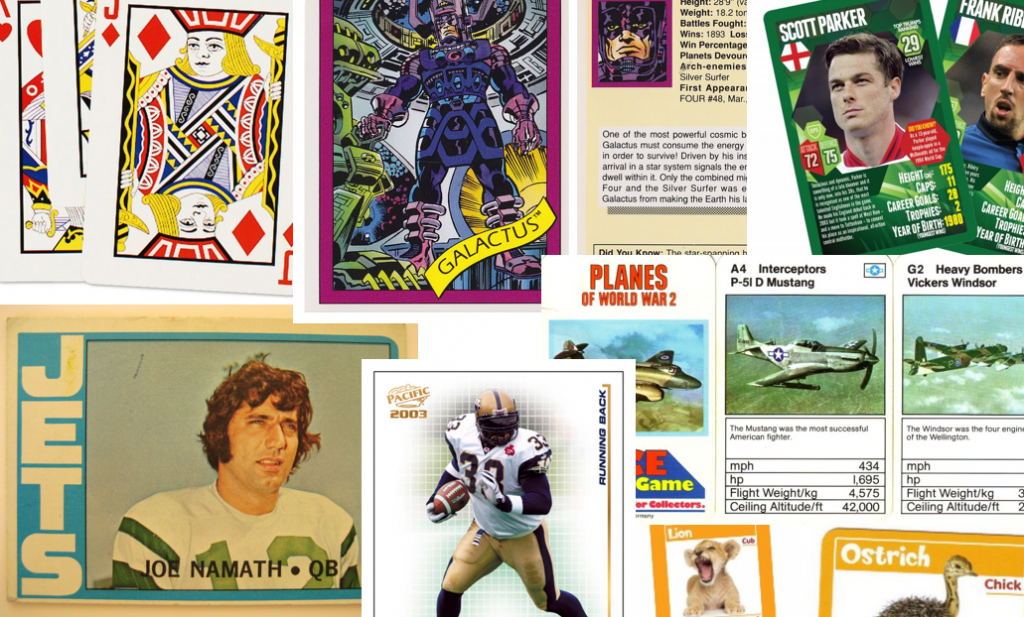

Cards give bursts of information

Cards as an information dissemination medium have been around for a very long time. Imperial China used them in the 9th century for games. Trade cards in 17th century London helped people find businesses. In 18th century Europe footmen of aristocrats used cards to introduce the impending arrival of the distinguished guest. For hundreds of years people have handed around business cards.

We send birthday cards, greeting cards. My wallet is full of debit cards, credit cards, my driving licence card. During my childhood, I was surrounded by games with cards. Top Trumps, Pokemon, Panini sticker albums and swapsies. Monopoly, Cluedo, Trivial Pursuit. Before computer technology, air traffic controllers used cards to manage the planes in the sky. Some still do.

Cards are a great medium for communicating quick stories. Indeed the great (and terrible) films of our time are all storyboarded using a card-like format. Each card representing a scene. Card, Card, Card. Telling the story. Think about flipping through printed photos, each photo telling its own little tale. When we travelled we sent back postcards.

What about commerce? Cards are the predominant pattern for coupons. Remember cutting out the corner of the breakfast cereal box? Or being handed coupon cards as you walk through a shopping mall? Circulars, sent out to hundreds of millions of people every week are a full page aggregation of many individual cards. People cut them out and stick them to their fridge for later.

Cards can be manipulated

In addition to their reputable past as an information medium, the most important thing about cards is that they are almost infinitely manipulatable. See the simple example above from Samuel Couto. Think about cards in the physical world. They can be turned over to reveal more, folded for a summary and expanded for more details, stacked to save space, sorted, grouped, and spread out to survey more than one.

When designing for screens, we can take advantage of all these things. In addition, we can take advantage of animation and movement. We can hint at what is on the reverse, or that the card can be folded out. We can embed multimedia content, photos, videos, music. There are so many new things to invent here.

Cards are perfect for mobile devices and varying screen sizes. Remember, mobile devices are the heart and soul of the future of your business, no matter who you are and what you do. On mobile devices, cards can be stacked vertically, like an activity stream on a phone. They can be stacked horizontally, adding a column as a tablet is turned 90 degrees. They can be a fixed or variable height.
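The stacking behaviour described here can be sketched in a few lines. This is a minimal illustration, not how any of the apps mentioned actually lay out their cards; the function names, the 320-pixel minimum card width, and the screen widths are all assumptions.

```python
def column_count(screen_width: int, min_card_width: int = 320) -> int:
    """How many card columns fit; there is always at least one."""
    return max(1, screen_width // min_card_width)


def layout(cards, columns):
    """Deal cards into columns round-robin, preserving stream order."""
    stacks = [[] for _ in range(columns)]
    for i, card in enumerate(cards):
        stacks[i % columns].append(card)
    return stacks


cards = ["a", "b", "c", "d", "e", "f"]

print(layout(cards, column_count(360)))   # phone: one vertical stream
print(layout(cards, column_count(768)))   # tablet in portrait: two columns
print(layout(cards, column_count(1024)))  # same tablet turned 90 degrees: three columns
```

Because each card is self-contained, the same list of cards can be re-dealt whenever the screen width changes, which is what makes the pattern suit varying screen sizes.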

Cards are the new creative canvas

It’s already clear that product and interaction designers will heavily use cards. I think the same is true for marketers and creatives in advertising. As social media continues to rise, and continues to fragment into many services, taking up more and more of our time, marketing dollars will inevitably follow. The consistent thread through these services, the predominant canvas for creativity, will be card based. Content consumption on Facebook, Twitter, Pinterest, Instagram, Line, you name it, is all built on the card design metaphor.

I think there is no getting away from it. Cards are the next big thing in design and the creative arts. To me that’s incredibly exciting.

Wednesday, August 07. 2013

On Batteries and Innovation

Via O'Reilly Radar

----

Lately there’s been a spate of articles about breakthroughs in battery technology. Better batteries are important, for any of a number of reasons: electric cars, smoothing out variations in the power grid, cell phones, and laptops that don’t need to be recharged daily.

All of these nascent technologies are important, but some of them leave me cold, and in a way that seems important. It’s relatively easy to invent new technology, but a lot harder to bring it to market. I’m starting to understand why. The problem isn’t just commercializing a new technology — it’s everything that surrounds that new technology.

Take an article like Battery Breakthrough Offers 30 Times More Power, Charges 1,000 Times Faster. For the purposes of argument, let’s assume that the technology works; I’m not an expert on the chemistry of batteries, so I have no reason to believe that it doesn’t. But then let’s take a step back and think about what a battery does. When you discharge a battery, you’re using a chemical reaction to create electrical current (which is moving electrical charge). When you charge a battery, you’re reversing that reaction: you’re essentially taking the current and putting that back in the battery.

So, if a battery is going to store 30 times as much power and charge 1,000 times faster, that means that the wires that connect to it need to carry 30,000 times more current. (Let’s ignore questions like “faster than what?,” but most batteries I’ve seen take between two and eight hours to charge.) It’s reasonable to assume that a new battery technology might be able to store electrical charge more efficiently, but the charging process is already surprisingly efficient: on the order of 50% to 80%, but possibly much higher for a lithium battery. So improved charging efficiency isn’t going to help much — if charging a battery is already 50% efficient, making it 100% efficient only improves things by a factor of two. How big are the wires for an automobile battery charger? Can you imagine wires big enough to handle thousands of times as much current? I don’t think Apple is going to make any thin, sexy laptops if the charging cable is made from 0000 gauge wire (roughly 1/2 inch thick, capacity of 195 amps at 60 degrees C). And I certainly don’t think, as the article claims, that I’ll be able to jump-start my car with the battery in my cell phone — I don’t have any idea how I’d connect a wire with the current-handling capacity of a jumper cable to any cell phone I’d be willing to carry, nor do I want a phone that turns into an incendiary firebrick when it’s charged, even if I only need to charge it once a year.
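The arithmetic behind that 30,000 figure can be spelled out as a short sketch. It assumes the charging voltage stays fixed, so that current scales with charge moved per unit time; the 2-amp baseline charger is a hypothetical number chosen only to make the scale visible, while the 195-amp rating for 0000 gauge wire is the one quoted above.

```python
def charging_current_scale(capacity_factor: float, speed_factor: float) -> float:
    """Multiplier on charging current at a fixed voltage.

    Moving capacity_factor times the charge in 1/speed_factor of the time
    needs capacity_factor * speed_factor times the current.
    """
    return capacity_factor * speed_factor


scale = charging_current_scale(30, 1000)

BASELINE_AMPS = 2.0    # hypothetical present-day charger current (assumption)
AWG_0000_AMPS = 195.0  # capacity quoted in the text for 0000 gauge wire

required_amps = BASELINE_AMPS * scale
cables_needed = required_amps / AWG_0000_AMPS

# Efficiency gains cannot close the gap: going from 50% to 100% is only 2x.
efficiency_gain = 1.0 / 0.5

print(f"current multiplier: {scale:,.0f}x")
print(f"required current:   {required_amps:,.0f} A")
print(f"0000 gauge cables:  {cables_needed:.0f}")
print(f"best-case efficiency gain: {efficiency_gain:.0f}x")
```

Even with a modest baseline, the required current lands in the tens of thousands of amps, several hundred times what a half-inch cable is rated for.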

Here’s an older article that’s much more in touch with reality: Battery breakthrough could bring electric cars to all. The claims are much more limited: these new batteries deliver 2.5 times as much energy with roughly the same weight as current batteries. But more than that, look at the picture. You don’t get a sense of the scale, but notice that the tabs extending from the batteries (no doubt the electrical contacts) are relatively large in relation to the battery’s body, certainly larger in relation to the battery’s size than the terminal posts on a typical auto battery. Even more, the terminals are flat, which maximizes surface area, and that in turn improves both heat dissipation (a big issue at high current) and contact area (to transfer power more efficiently). That’s what I like to see, and that’s what makes me think that this is a breakthrough that, while less dramatic, isn’t being over-hyped by irresponsible reporting.

I’m not saying that the problems presented by ultra-high capacity batteries aren’t solvable. I’m sure that the researchers are well aware of the issues. Sadly, I’m not so surprised that the reporters who wrote about the research didn’t understand the issues, resulting in some rather naive claims about what the technology could accomplish. I can imagine that there are ways to distribute current within the batteries that might solve some of the current carrying issues. (For example, high terminal voltages with an internal voltage divider network that distributes current to a huge number of cells). As we used to say in college, “That’s an engineering problem” — but it’s an engineering problem that’s certainly not trivial.

This argument isn’t intended to dump cold water on battery research, nor is it really to complain about the press coverage (though it was relatively uninformed, to put it politely, about the realities of moving electrical charge around). There’s a bigger point here: innovation is hard. It’s not just about the conceptual breakthrough that lets you put 30 times as much charge in one battery, or 64 times as many power-hungry CPUs on one Google Glass frame. It’s about everything else that surrounds the breakthrough: supplying power, dissipating heat, connecting wires, and more. The cost of innovation has plummeted in recent years, and that will allow innovators to focus more on the hard problems and less on peripheral issues like design and manufacturing. But the hard problems remain hard.

Wednesday, July 24. 2013

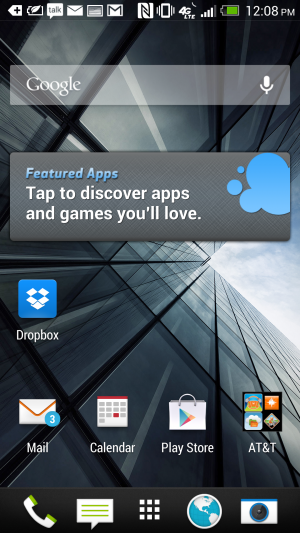

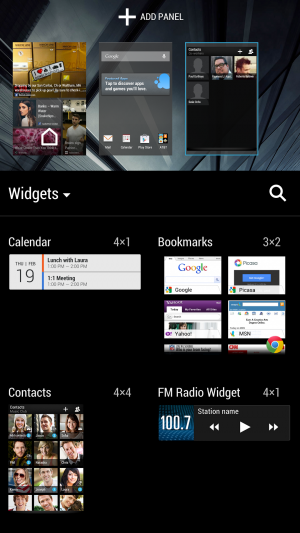

The great Ars Android interface shootout

Via ars technica

-----

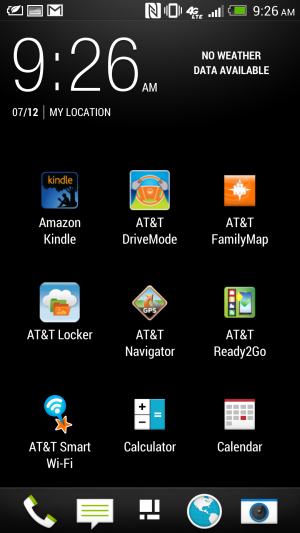

It’s been quite a year of surprises from Google. Before the company’s annual developer conference in May, we anticipated at least an incremental version of Android to hit the scene. Instead, we encountered a different game plan—Google not only started offering stock features like its keyboard as separate, downloadable apps for other Android handset users, but it’s also offering stock Android versions of non-Nexus-branded hardware like Samsung's Galaxy S4 and the HTC One in the Google Play store. So if you’d rather not deal with OEM overlays and carrier restrictions, you can plop down some cash and purchase unlocked, untainted Android hardware.

But the OEM-tied handsets aren't all bad. Sometimes the manufacturer’s Android offerings tack on a little extra something to the device that stock or Nexus Android hardware might not. These perks include things like software improvements and hardware enhancements—sometimes even thoughtful little extra touches. We’ll take a look at four of the major manufacturer overlays available right now to compare how they stack up to stock Android. Sometimes the differences are obvious, especially when it comes to the interface and user experience. You may be wondering what the overall benefit is to sticking with a manufacturer’s skin. The reasons for doing so can be very compelling.

A brief history of OEM interfaces

Why do OEM overlays happen in the first place? iOS and Windows Phone 8 don’t have to deal with this nonsense, so what's the deal with Android? Well, Android was unveiled in 2007 alongside the Open Handset Alliance (a consortium of hardware makers, software companies, and carriers formed to help further advance open standards for mobile devices). The mission was to keep the operating system open and accessible to all so users could mostly do whatever they wanted to do with it. As Samsung VP of Product Planning and Marketing Nick DiCarlo told Gizmodo, “Google has induced a system where some of the world's largest companies (the biggest handset manufacturer and a bunch of other really big ones) are also investing huge money behind their ecosystem. It's a really powerful and honestly pretty brilliant business model.”

The main issue with all of these different companies using the same software for their hardware is one of differentiation—how does Samsung or HTC or LG or Sony make an Android phone that doesn't have the same look, feel, and features as the competition's similarly specced phones? Putting their own skins, software, and services on top of Android gives them access to the good parts of Google's ecosystem (in most cases, the Google Play store and the surrounding software ecosystem) while theoretically helping them stand out from other phones on the shelf.

These skins haven't exactly been received with open arms. For many enthusiasts, skinned Android sometimes means that outstanding hardware is bogged down by all the extra offerings that manufacturers think will make their handsets more appealing. But the overlays (or skins, as they’re often referred to) usually change the way the interface looks and acts. Sometimes they introduce new features that don't already exist on Android.

For Samsung, its Android interface domination began with the Samsung Behold II, which ran the first incarnation of Samsung's TouchWiz Android UI. The name previously referred to Samsung's own proprietary operating system for its phones. Reviewers weren't too excited about Samsung's iteration of the Android interface, with sites like CNET writing that the “TouchWiz interface doesn't really add much to the user experience and in fact, at times, hinders it.” Sometimes it still feels that way.

Samsung is notorious for packing in a breadth of features, but its aesthetics are often lacking. But again, that’s subjective, and it all comes down to the user. I’ve been using the last version of TouchWiz on a Galaxy S III. While I’ve had some instances where it was frustrating, I’ve come to appreciate some of the extra perks that shine through.

HTC's Sense UI back in the day

HTC's Android skin is called the Sense UI, and it was introduced in 2009. I’ve had some experience with it in my earlier Android days when I had an HTC Incredible, but it’s come a long way since then. According to Gizmodo, the interface's roots go back to 2007, when HTC had to alter its software for Windows Mobile to make it touch-sensitive so that it wouldn't just be limited to stylus input. It was eventually ported over to the HTC Hero, which was also the first Android device to feature a manufacturer’s interface overlay.

As for LG and Sony, their interfaces have less storied histories (and less prominent branding). Each borrows moves from what the two major players do and then implements the ideas a little better or a little worse. It’s an interesting dynamic, but the big theme here is choice: there is so much to choose from when you’re an Android user that it can be overwhelming if you’re not entirely sure of where to go next. Brand loyalty and past experiences can only go so far as the constant stream of updates and releases means manufacturers seek new directions nonstop.

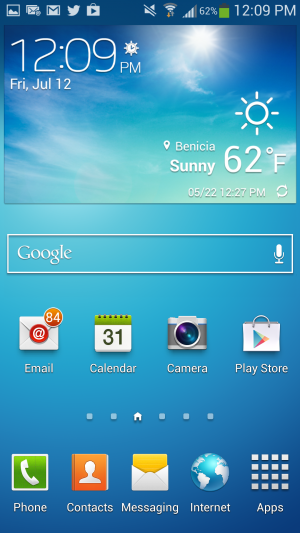

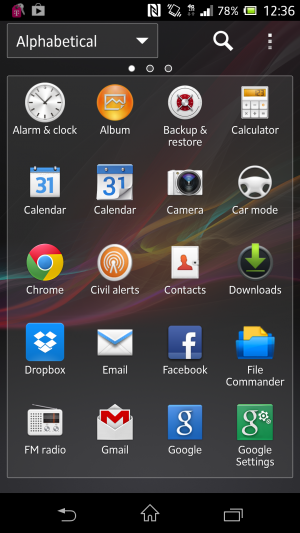

Samsung's TouchWiz Nature UX 2.0 interface overlay.

Each manufacturer puts some flair on its version of Android. Samsung's TouchWiz Nature UX 2.0, for instance, features a bubble blue interface with bright, vibrant colors and drop shadows to accompany every icon. LG’s Optimus UI uses... a similar aesthetic. But both interfaces allow you to customize the font style and size from within the Settings menu, even if the end product could ultimately end up as a garish-looking interface.

Among the selection of manufacturers, Sony and HTC have been the most successful in designing an Android interface that complements the chassis on their respective flagship devices. HTC's Sense UI has always been one of my favorites for its overall sleekness and simplicity. Though it's not as barebones as the stock Android interface, Sense 5.0 now sports a thin, narrow font with modern-looking iconography that pairs well with its latest handset, the HTC One.

Sony's interface doesn't have an official name, but it can be referred to as the Xperia interface.

Sony's interface is not only pleasing to use, it also matches the general design philosophy that the company continues to maintain throughout its lifespan. Though it has no official alias, the Xperia interface showcases clean lines and extra offerings that don’t completely sour the overall user experience.

In this comparison, we're taking a look at recent phones from all of the manufacturers: a Nexus 4 and a Samsung Galaxy S 4 equipped with Android 4.2.2. The HTC One, LG Optimus G Pro, and Sony Xperia Z are all still on Android 4.1.2. We were unable to actually get any hands-on time with the latest Android 4.2 update to the HTC One.

We didn't include Motorola in the gang because the company is undergoing a massive makeover right now. Plus, its last flagship handset was the Droid Razr Maxx HD, which debuted back in October and has relatively dated specifications. We have some high hopes for what might be in store for Motorola’s future, especially with Google’s immediate backing, but we’re waiting to see what’s to come of that acquisition later this year.

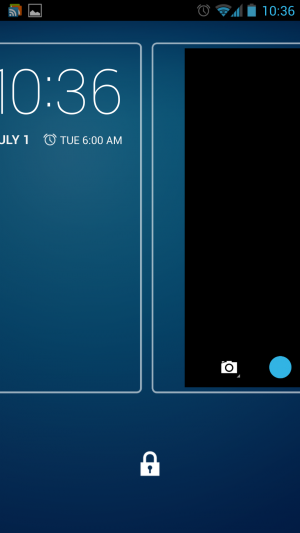

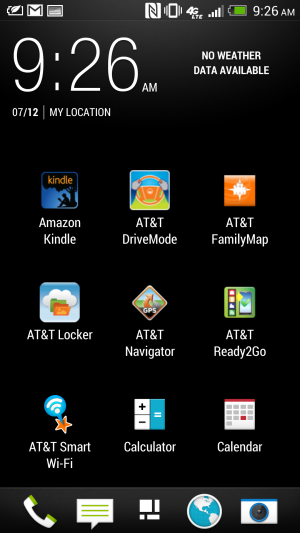

Lock screens, home screens, and settings, too

Home screens and lock screens are perhaps the most important elements of a user interface because they're what the user will deal with the most. Think about it: every time you turn on your phone, you see the lock screen. We need to consider how many swipes it takes to get to the thing you want to do from the time you unlock your phone to the next executable action. (And hopefully there are some shortcuts that we can implement along the way to save time.)

The lock screen on the stock version of Android 4.2.2 Jelly Bean.

On stock Android 4.2.2 Jelly Bean, Google included a plethora of options for users to interact with their phone right from the lock screen. The Jelly Bean update that hit late last year added lock screen widgets and quick, swipe-over access to the camera application. The lock screen can also be disengaged entirely if you would rather have instant access to your applications by hitting the power button.

The home screen on the Galaxy S 4.

The lock screen on Samsung's TouchWiz.

In TouchWiz, Samsung kept the ability to add multiple widgets to the lock screen, including one that provides one-touch access to favorite applications and the ability to swipe over to the camera app. You can also add a clock or a personal message to the lock screen. TouchWiz even takes it a step further through the use of wake-up commands, which let you check for missed calls and messages by simply uttering a phrase at the lock screen. To actually unlock the device, you can choose to swipe your finger across the lock screen or take advantage of common Android features like Face unlock.

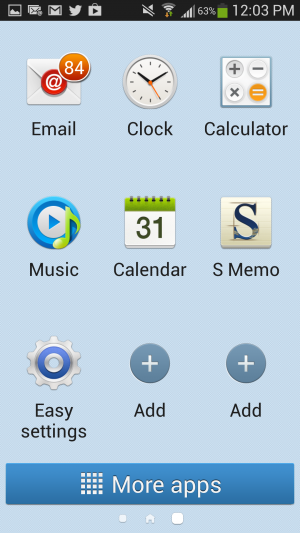

TouchWiz Easy mode is easy to set up...

As for the home screen, Samsung continues to offer the standard Android experience here, except that it tacks on a few extra perks. Hitting the Menu button on the home screen will bring up extra options, like the ability to create a folder or easily edit and remove apps from a particular page. There's also an "Easy" home screen mode, which dials down the interface to a scant few options for those users with limited smartphone experience—like your technophobic parents, for instance. Easy mode will display bigger buttons and limit the interface to three home screens, though basic app functionality remains.

Sense 5's home screen.

Sense 5's lock screen.

The HTC One just recently received an update for Android 4.2.2, though we didn't receive it in time to update our unit for this article. Regardless, HTC's Sense 5 is a far cry from its interface of yore—but that’s not a bad thing. It features a flatter, more condensed design with a new font that makes it appear more futuristic than other interfaces.

And don't forget to choose your lock screen.

Choose your home screen—any home screen.

Rather than implement a lock screen widget feature, HTC lets users choose between five different lock screen modes, including a Photo album mode, music mode, and productivity mode, which lets you glance at your notifications.

HTC Sense's BlinkFeed aggregates information for at-a-glance viewing.

Sense’s home screen can be a bit of a wreck if you’re looking for something simplistic. Its BlinkFeed feature takes up an entire page. Though it’s meant for aggregating news sites and social networks that you set up yourself, you can’t link up your favorite RSS feeds. By default, it’s your home page when you unlock the phone. You can change the home page in Sense 5 so that you don’t have to actually use the feature, but it can’t be entirely removed.

The home screen.

The lock screen for the Optimus UI.

LG's lock screen is just as busy and impacted as Samsung's, with a Settings menu that's almost as disjointed. Like Samsung's, it offers different unlock screen effects, varying clocks and shortcuts, and the ability to display owner information in case you lose your phone. As for the home screen, Optimus UI offers a home backup and restore option, as well as the ability to display the home screen in constant Portrait view.

Sony's Xperia Z handset is currently limited to Android 4.1.2. Its settings are laid out like stock Android, but there is no ability to add widgets or different clocks to the lock screen. We'd expect this sort of thing to become available when the phone is updated to Android 4.2.

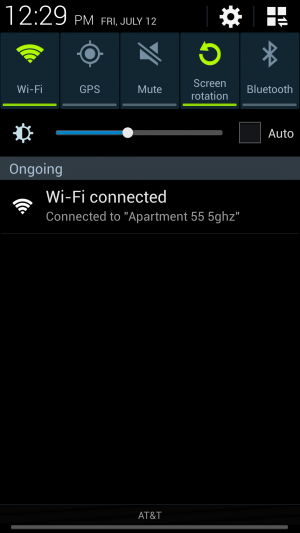

Notifications

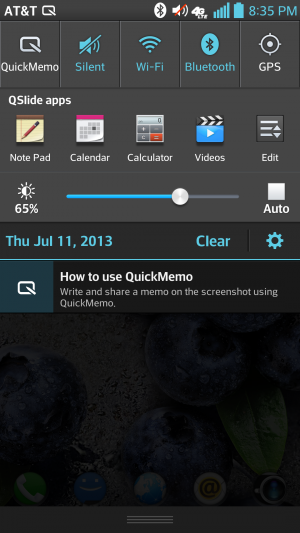

Samsung's TouchWiz notifications panel.

In the latest version of Jelly Bean, Google introduced Quick Settings, meant to provide quick access to frequently used settings. This is one area where the OEMs were ahead of Google—many of the interfaces have integrated at least a few different quick settings options for a while now.

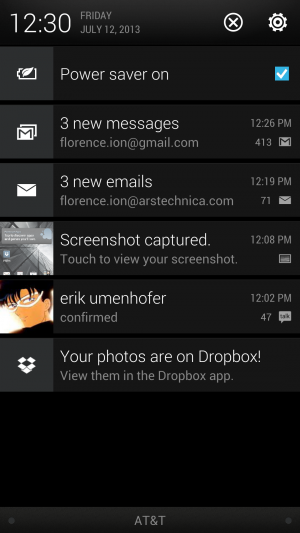

LG's crowded notifications panel.

Samsung's TouchWiz has been the most successful in implementing these features. You can scroll through a carousel of options or display them as a grid by pressing a button. LG’s Optimus UI borrows this same idea but overcrowds the Notifications panel with extra features like Qslide (more on this later). Even on the Optimus G Pro’s 5.5-inch display, the Quick Settings panel feels too congested to quickly find what you want without glancing over everything else first.

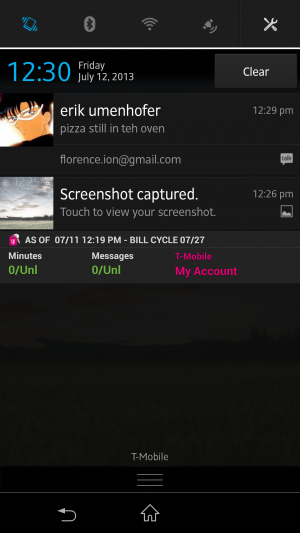

Sense UI's Notifications panel.

Xperia Z's Notification panel.

Sony and HTC kept it simple with their Notifications shade for the most part. Our HTC One and Xperia Z are running Android 4.1.2, so there's no built-in Quick Settings panel to take advantage of. Neither OEM implements its own version of the feature. The One's Android 4.2.2 update reportedly adds quick settings, and we assume that the same will be true of the Xperia Z when it gets its update.

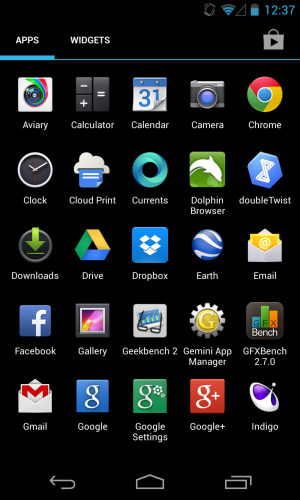

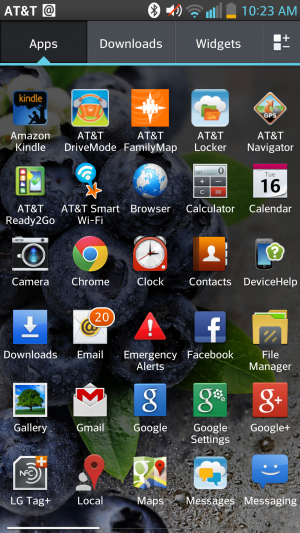

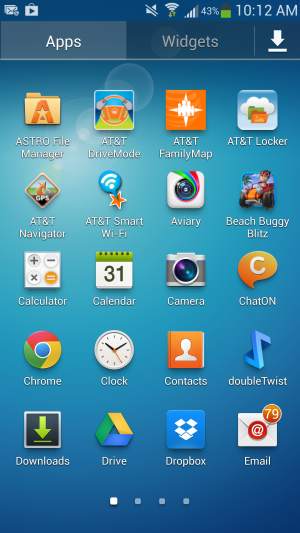

App drawers

Android 4.2.2 stock app drawer.

Sony's Xperia Z app drawer.

The only OEM overlay that keeps it as simplistic and straightforward as stock Android is Sony. You can categorize how you want to display apps and in what order, but beyond that there's not much else between you and your applications. You can uninstall applications by long-pressing them and dragging them to the "remove" icon that appears rather than dragging them to one of the home screens.

LG's app drawer.

Samsung's app drawer.

That's not to say what Samsung and LG provide isn't user friendly. Both manufacturers offer additional options once you head into the applications drawer. LG enables you to sort alphabetically or by download date, and you can increase icon size and change the wallpaper just for the application menu. You can also choose which applications to hide in case you would rather not be reminded of all the bloatware that sometimes comes with handsets.

As for the Galaxy S 4, Samsung offers a quick hit button for the Play Store. You can view your apps by category or by type, and you can share applications, which essentially advertises the Play Store link to various social networks.

Sense 5's app drawer.

HTC's interface falls short when it comes to the application launcher. As Phil Nickinson from Android Central put it, the HTC Sense 5 application drawer makes simple look complicated. The grid size, for instance, appears narrower and takes up precious space. Rather than make good use of the screen resolution, HTC displays the apps in a 3-by-4 icon grid by default, with the weather and clock widget taking up a huge chunk at the top. You also can't long-press on an application to place it on a home screen. Instead, you have to drag it up to the top left corner and then select the shortcut button to finally place it on the home screen—apparently, this feature is fixed in the HTC One’s Android 4.2.2 update (but we've yet to try it ourselves). It’s a bit of an ordeal to make the app drawer feel "normal" as defined by the rest of the Android OEMs. In general, we feel like Sense takes the most work to achieve some level of comfort.

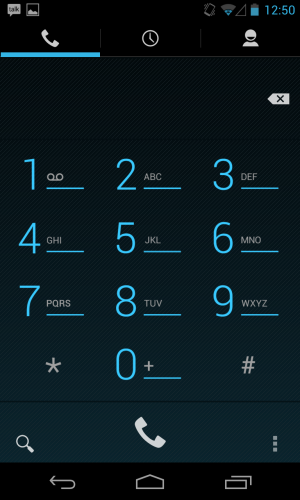

Dialers

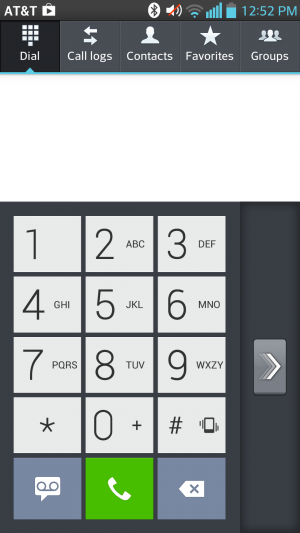

LG's Optimus UI includes a setting that lets you switch the side the dialer is on so that it's easier to use with just one thumb.

The Optimus UI offers an information-dense Dialer app. You can sift through logs, mark certain contacts as favorites, and peruse through your contacts right from within the app. LG offers an option to make the dialer easy to use one-handed.

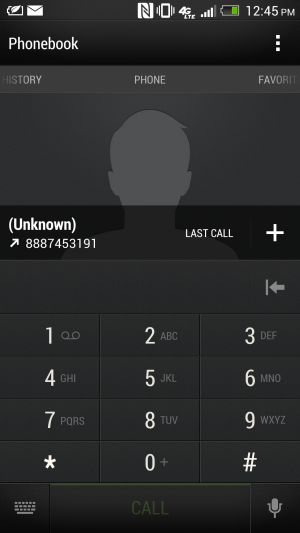

HTC's dialer application.

Although the Sense UI’s dialer layout feels a bit cramped, you can still bring up a contact from your address book by simply typing in a few letters of their name. You can cycle through your favorites, your contacts, and different groups. However, it would be more convenient if the Contacts screen worked like a carousel rather than a back-and-forth type of menu screen. Extra dialer settings also take up quite a bit of space at the top of the screen rather than being nested in menus as they are in other phones. Each screen in the Dialer has a different set of settings, which can be a bit confusing. Sense UI’s dialer app—and its overall interface—has a bit of a learning curve to it, but at least the aesthetic is nice.

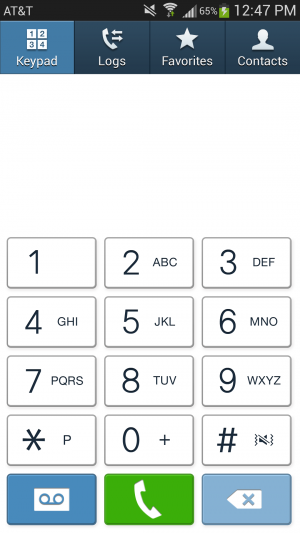

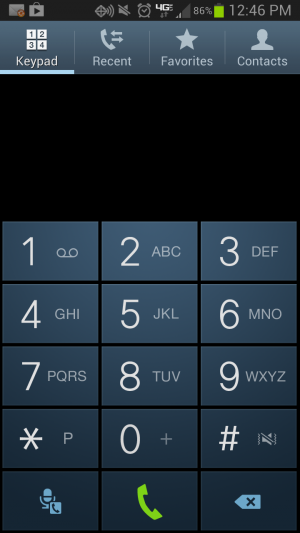

The TouchWiz dialer on a Galaxy S 4 running Android 4.2.2.

The TouchWiz dialer on a Galaxy S III running Android 4.1.2.

Samsung’s Dialer interface also has a favorites list and a separate tab for the keypad, but otherwise it’s much more simplistic looking than the rest of TouchWiz. Sony kept the Dialer relatively untouched too, adding just an extra tab for favorites.

The stock Android dialer app.

In the end, Jelly Bean has the best, cleanest-looking dialer application. While the extra categories are a good idea for users with a hefty number of contacts and groups of people to compartmentalize, sometimes minimizing the number of options is better—especially in the case of an operating system that is wholly barebones to begin with.

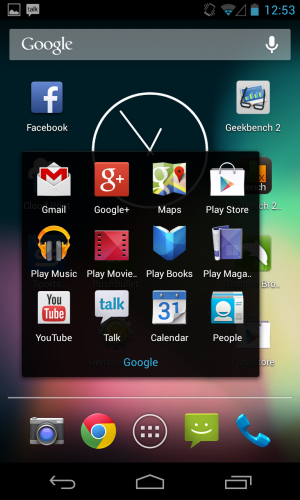

Apps, apps, apps

Google apps as far as the eye can see.

Google packs up a suite of applications with the stock version of Android to perfectly complement the company's offerings. On the Nexus 4 and the Google Play editions of the Samsung Galaxy S 4 and HTC One, you'll encounter applications like Google Calendar, Mail, Currents, Chrome, and Earth. Not all of the manufacturers' handsets come with this whole set of apps out of the box. The regular edition of the Galaxy S 4, for instance, doesn't include Google Earth or Currents, but it does have the Gmail and Maps applications. Some carriers will package up handsets with their own suite of apps, and carriers might also include things like a backup application or an app that lets you check on your minutes and data usage.

For the most part, however, these handsets do include their own versions of calendar and mail apps (though the Google Calendar app is available in the Play store, the non-Gmail email client isn't). They have camera apps with software tweaks that are compatible with the hardware contained on the device. Some handsets also have a bunch of extra features just because; Samsung is especially fond of this.

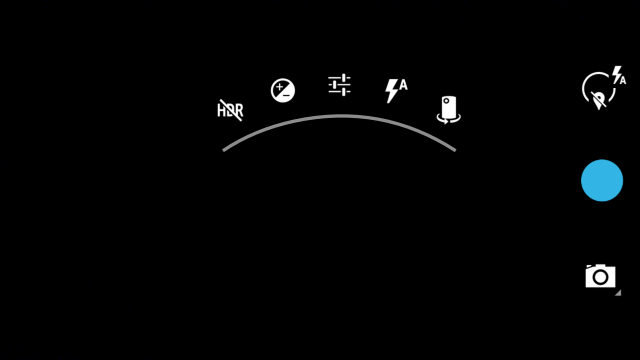

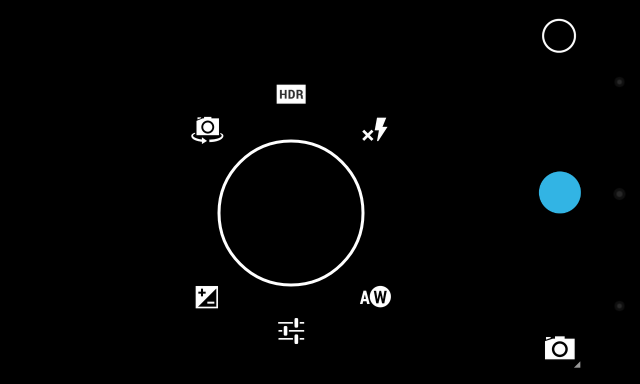

Camera applications

Perhaps the biggest differentiator between interfaces is the camera features and controls. We'll start with the HTC One, with its Ultrapixel camera and myriad features. You can use things like Zoe to stitch together several still images and create a Thrasher-like action photo or just combine two slightly mediocre photos to make one worthy of sharing on social networks. The Ultrapixel camera also automatically adjusts exposure and the like to produce a fine looking photo, as we found out when we tested it out in our comparative review of the Google Play edition of the HTC One and the standard version.

The stock Android camera application isn't totally devoid of features, however. Android 4.2.2's new camera UI has scroll-up controls that make it easy to quickly switch between things like Exposure and Scene settings. And while it could certainly use a little oomph like HTC introduced with its camera application, there is such a thing as too much good stuff—especially when you look at what LG and Samsung have crammed into their camera interfaces.

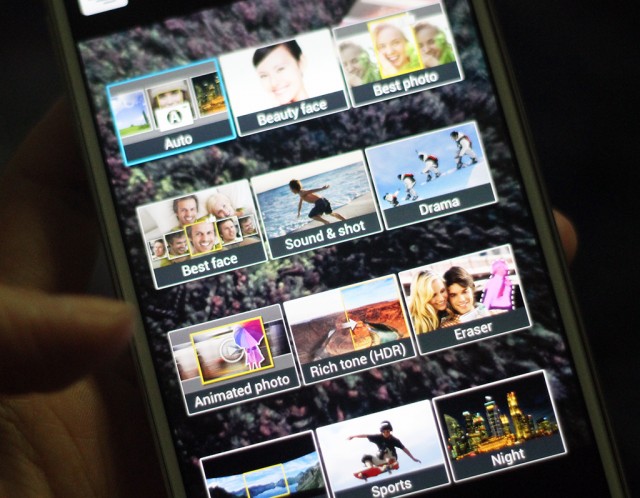

So many different camera modes to choose from on the Galaxy S 4.

Samsung's TouchWiz-provided camera application is a bit of a mess. On the Galaxy S 4, you'll have to deal with buttons and features splattered all over the place. There's a Mode button that lets you cycle between the 12 different camera modes—things like Panorama, HDR, Beauty face (which enhances the facial features of your subject), Sound & shot (which shoots a photo and then some audio to accompany it), and Drama (which works a little like HTC's Zoe feature). Those camera options are all fun to use from time to time, and you can change the menu screen from carousel to grid view so that you're not too overwhelmed by the breadth of options. But there are still so many buttons lining the sides of the viewfinder on the display.

There's also a general Settings button in the bottom corner that will expand out with more icons, taking up even more room on the screen. Below that are two buttons for switching between the front- and rear-facing cameras, as well as the ability to use the dual-camera functionality while snapping a photo. TouchWiz is great at offering a bunch of choices, but it can get a bit exhausting. If there were a more concise way of making these available, it would make the Camera application less intimidating to use.

I'm not entirely sure what all of the symbols in LG's camera application stand for.

LG's Optimus UI camera application isn't any better. Rather than allowing the entire screen to be used as a viewfinder with icons that lie on top of it like with TouchWiz, the menu options take up the top and bottom thirds of the screen. It’s nice that LG offers a little symbol in the preview window to let you know how your battery life is doing and which mode you’re shooting in, but figuring out what symbol does what takes a bit of time. At least with Samsung’s large catalog of offerings, there’s an explicit description about what each camera function does.

Sony's camera interface is easier to use, but the preview window is still surrounded by buttons and things.

Sony’s camera app is a bit easier to navigate. It has found the right medium between simplicity and feature-filled functionality. Unfortunately for its hardware, that doesn't translate over to how well the camera actually performs. But the interface is something that other OEMs should strive for: a straightforward, easy-to-use preview window that’s just what Goldilocks was looking for.

Where the camera application really matters is with handsets like the HTC One. That extra bit of software that HTC packs up with its One is essential for its camera functionality to operate at its prime. As our own Andrew Cunningham put it in his review of the Google Play edition of the HTC One:

...there are the HTC-specific features that the "ultrapixel" camera on the [GPe] One lacks, namely Zoe (which can stitch together several still images to convey action), the ability to stitch together "highlight movies" from short videos on your phone, and pretty much any feature that lets you combine two unsatisfactory photos to get one satisfactory one (like Always Smile or Object Removal). These have been replaced by a slightly tweaked version of the stock Android camera, which we assume will make it to the Nexus phones and tablets in the next release of Android.

Additionally, the Google Play edition One had some image quality issues when it shot in automatic shooting mode. The standard One—which is fueled by HTC's Sense 5—can adjust its exposure based on its surroundings. The Google Play edition—the stock Android 4.2.2 version—doesn't have those same software tweaks in its camera application.

In some ways, this is actually the best argument for why you would consider an OEM-tied Android handset over an unlocked, stock one: the software has been tweaked to work best with that specific handset's internals.

Calendars

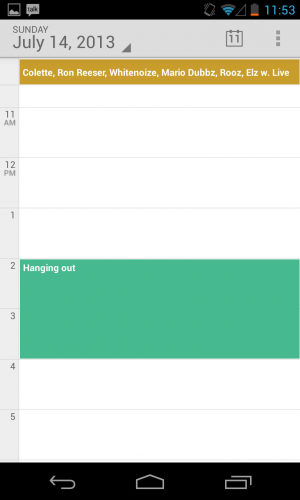

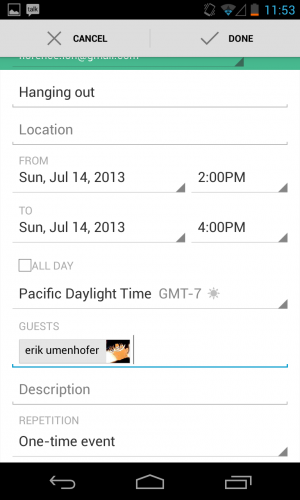

In May, Google Calendar got a makeover, along with color-coding that distinguishes the days and events belonging to each calendar. The new interface was particularly focused on streamlining the design aesthetic across all Google applications, and while the update didn't introduce too many new features, it did make Google's Calendar app a little more palatable.

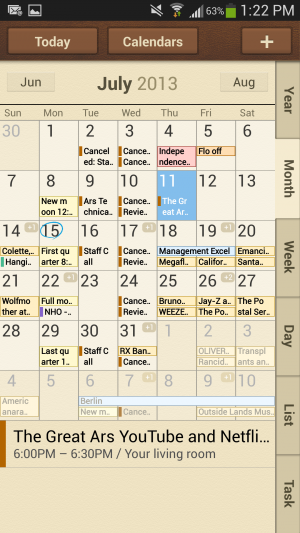

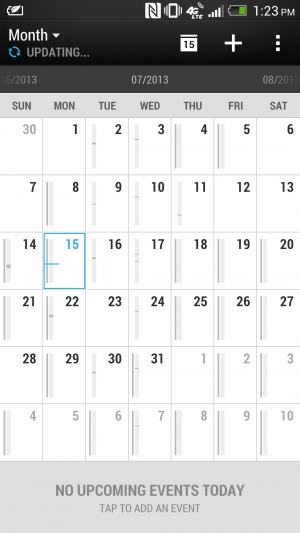

Samsung's TouchWiz calendar application.

Samsung's calendar application is not the prettiest thing to look at, but it's certainly feature-filled. Users can switch between six different calendar view modes and four different view styles, including the ability to view the calendar in a list or pop-up form. There are also a number of minor settings that you can individually adjust, including the ability to select what day your week should start on.

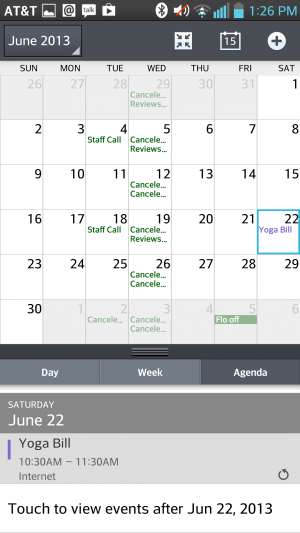

Sense 5's calendar app.

HTC's Sense uses its own proprietary calendar application, too. You can sync your accounts, choose the first day of the week, set the default time zone (and another if you travel frequently), and even display the weather within the calendar app. At the bottom of the screen, Sense will show upcoming events at a glance, and you can tap to add more throughout the day. You can also sort your calendar by meeting invitations.

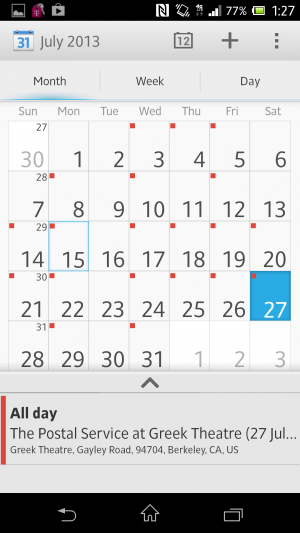

LG's calendar app.

Sony's calendar app.

LG's Optimus UI employs a similar interface to Sense UI’s with the calendar view at the top and tasks for meeting invitations available at the bottom. Still, it doesn't feel as informative as what stock Android and Samsung are putting forward. Sony provides a Calendar app that uses a similar icon to Google's stock app, and while the interface looks the same, it doesn't have the color-coding abilities of Google Cal.

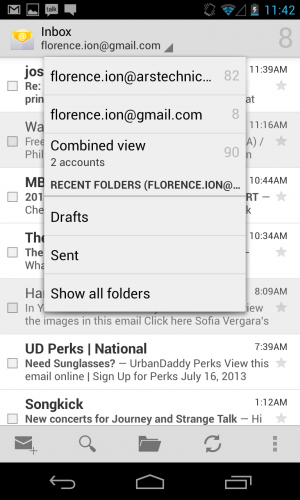

The Nexus 4 and Google Play edition handsets come with their own suite of Google-branded applications, including two e-mail apps: Gmail and a nondescript Email app. While Gmail is a little more feature-filled, with the option to use things like Priority inbox, the Email app's interface appears a little barren. You can add Exchange, Yahoo!, Hotmail, and other POP3/IMAP accounts to it or add your Gmail account to keep them synced up in one app. However, with the app's straightforward nature, there's not much else to it. Mail applications offered by other manufacturers don't veer too far from what's here, though. They essentially offer the same basic functionality and settings across the board.

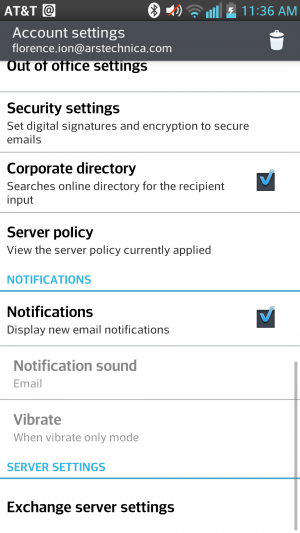

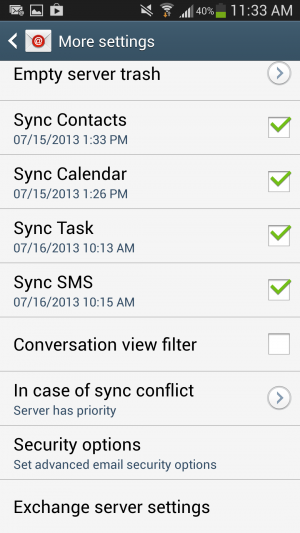

...the server settings are annoyingly featured at the end.

From one interface to the other...

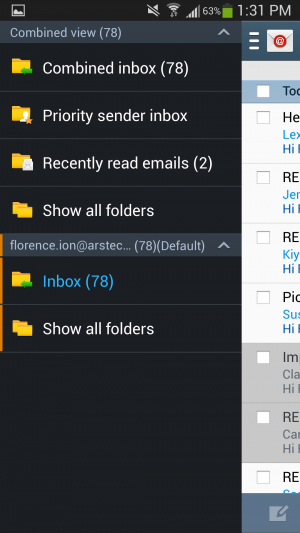

Annoyingly, most of the Mail apps, like Samsung's and LG's, bury server settings at the very end of the settings panel. But all of them take a page out of stock Android's book by providing a combined inbox view, which is especially helpful if you're juggling between a myriad of different accounts.

Samsung features a combined inbox view, just like stock Android's.

Stock Android's combined inbox view.

HTC's e-mail application has the same design as its other OEM-offered proprietary applications, but it's much easier to navigate than other apps. The Settings button resides at the top of the page with a bunch of options, including the ability to add an account or set an "out of office" message. From there, you can even access additional settings. HTC also provides a couple of different sync settings, for instance, to help preserve battery life.

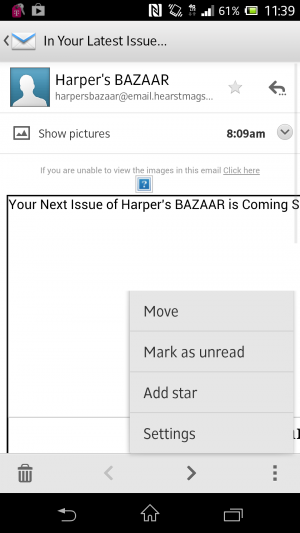

Sony's Mail app is so simple.

Sony's Mail application is nice and easy as well. You can hit the settings button in the bottom right corner to mark a piece as unread, star it, move it to another folder, or access the general settings panel.

Other apps

With each OEM overlay comes a whole set of applications that could prove to be useful in the end. Except Samsung's, that is—the manufacturer has bundled a huge set of features and capabilities that feel redundant and might even suck the life out of your battery if you're not careful.

Samsung includes its own app store with TouchWiz.

The Galaxy S 4 comes preloaded with a bunch of applications, including Samsung's own app store, an entertainment hub, and an app that enables you to access content on your phone from a desktop computer. The only perk of signing up for a Samsung account and going that route is being able to track where your phone is in case you lose or misplace it. Samsung can back up phone data too.

But there's more where this came from. Samsung includes a remote control application for your television called Samsung WatchON, but it's only compatible if you have an active cable or satellite television subscription. There's also S Health, which helps you manage your lifestyle and well-being. And if you're a hands-free type of user, you can take advantage of gesture-based functionality like Air gestures or enable the screen to keep track of your eye movement.

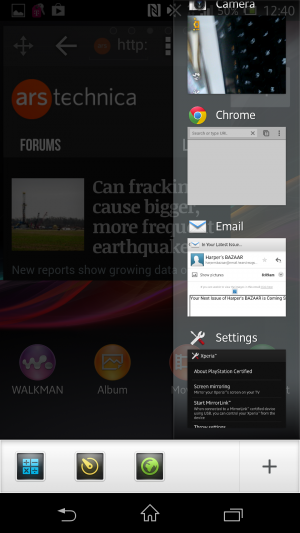

It will pop up in a desktop-like window on your screen.

Select the "small app" you want to use from the running apps screen.

Samsung isn't the only offender when it comes to stuffing applications and features onto its flagship handsets, however. Sony oversaturated its own music and movie store, and it has a Walkman music-playing application alongside Google Music. When you hold down the Menu button, there's a row of "small apps" that gives you quick access to things like the browser, a calculator, and a timer. We covered these briefly in the Xperia Tablet Z review, but they work the same on the Xperia Z handset: once you launch the "small" app, the app will appear in a pop out screen on top of the interface. It's kind of like multitasking.

The Qslide multitasking app lets you do things like take notes while you're doing other stuff.

LG, on the other hand, lets the carrier pack it with apps. LG then includes a multitasking feature called Qslide. You can choose from several Qslide-compatible applications that pop up over an open application in order to do things like leave a note and use the calculator. It also has a setting that turns off the screen when you're not looking.

Among all phones, there are a great number of features and apps to adapt to using. While I don't find things like Samsung's Air gestures and Smart scroll gimmicky, it can get frustrating to venture into the apps drawer only to find it crowded with icons of applications that you will never use. Some of these features and apps are things you'd never find packed up with the stock version of Android—because they're not always necessary.

The future

We're still waiting to hear about Android 4.3 and what it will bring to the mobile platform. Every time Google launches an update, you can bet that the manufacturers will follow suit with their interfaces (you know, eventually). That's what causes the biggest conundrum for Android users. I had things like Quick Settings already available on my Galaxy S III before Google natively implemented them into Android 4.2 Jelly Bean, but for the OEMs that didn't build their own version of this feature, I'm constantly at the mercy of my carrier and the manufacturer. They dictate when I'll receive my update for the latest version of Jelly Bean.

The biggest gripe about OEM overlays is that each company is selling its own brand of Android rather than Google's. Remember the Android update alliance? That didn't work out too well in the end. Carriers and hardware makers aren't keeping their promises, and as that trickles down to the consumer, it eventually confuses the public. As Google attempts to implement interface and performance standards, manufacturers will go ahead and hire a team to make Android look virtually unrecognizable. Samsung's app and media store sort of feels like an insult at times, but it knows that it doesn't have all the clout to make strides with its own mobile operating system.

There is a silver lining to all this, at least for purists: why should you even bother with OEM interfaces when you can now purchase two of the most popular handsets with stock Android on them? Google has already said that it will be working with the providers of Google Play edition phones to provide timely updates, so you certainly don't have to worry about fragmentation or waiting around to get the latest version of Android.

The OEMs have provided several different experiences for both hardcore and novice Android users alike, which has only contributed to the proliferation of the platform. Here at Ars, we prefer the stock version of Android on a Google-backed handset like the Nexus 4 and Google Play editions. Even if they're not chock full of perks and applications, they'll receive the most timely updates from Android headquarters, and their interfaces are mostly free of the cruft you get from the OEMs. For many consumers, it might not matter when Google chooses to update the phone, but for us, we like to know that Google is pushing through the software updates without any setbacks.

In the end, choosing an OEM-branded version of Android means that you're a prisoner of that manufacturer's timeline—an especially unfortunate situation when that manufacturer decides to stop supporting software updates altogether. We've said it time and time again—in the end, it's really your experience that will determine which interface suits you best. So as far as the future of Android goes, it's not just in Google's hands.

Thursday, May 16. 2013

The PC inside your phone: A guide to the system-on-a-chip

Via ars technica

-----

A desktop PC used to need a lot of different chips to make it work. You had the big parts: the CPU that executed most of your code and the GPU that rendered your pretty 3D graphics. But there were a lot of smaller bits too: a chip called the northbridge handled all communication between the CPU, GPU, and RAM, while the southbridge handled communication between the northbridge and other interfaces like USB or SATA. Separate controller chips for things like USB ports, Ethernet ports, and audio were also often required if this functionality wasn't already integrated into the southbridge itself.

As chip manufacturing processes have improved, it's now possible to cram more and more of these previously separate components into a single chip. This not only reduces system complexity, cost, and power consumption, but it also saves space, making it possible to fit a high-end computer from yesteryear into a smartphone that can fit in your pocket. It's these technological advancements that have given rise to the system-on-a-chip (SoC), one monolithic chip that's home to all of the major components that make these devices tick.

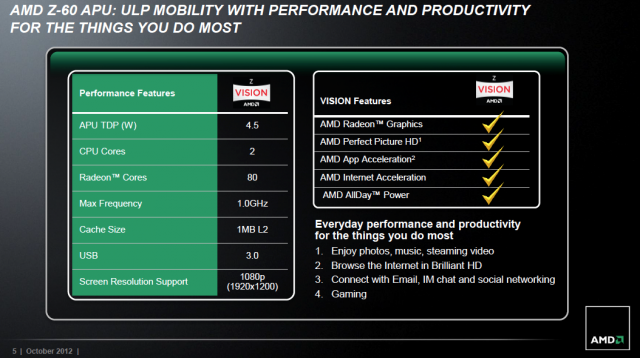

The fact that every one of these chips includes what is essentially an entire computer can make keeping track of an individual chip's features and performance quite time-consuming. To help you keep things straight, we've assembled this handy guide that will walk you through the basics of how an SoC is put together. It will also serve as a guide to most of the current (and future, where applicable) chips available from the big players making SoCs today: Apple, Qualcomm, Samsung, Nvidia, Texas Instruments, Intel, and AMD. There's simply too much to talk about to fit everything into one article of reasonable length, but if you've been wondering what makes a Snapdragon different from a Tegra, here's a start.

Putting a chip together

No discussion of smartphone and tablet chips can happen without a discussion of ARM Holdings, a British company with a long history of involvement in embedded systems. ARM's processors (and the instruction set that they use, also called ARM) are designed to consume very small amounts of power, much less than the Intel or AMD CPUs you might find at the heart of a standard computer. This is one of the reasons why you see ARM chips at the heart of so many phones and tablets today. To better understand how ARM operates (and to explain why so many companies use ARM's CPU designs and instruction sets), we first must talk a bit about Intel.

Intel handles just about everything about its desktop and laptop CPUs in-house: Intel owns the x86 instruction set its processors use, Intel designs its own CPUs and the vast majority of its own GPUs, Intel manufactures its own chips in its own semiconductor fabrication plants (fabs), and Intel handles the sale of its CPUs to both hardware manufacturers and end users. Intel can do all of this because of its sheer size, but it's one of the only companies able to work this way. Even in AMD's heyday, the company was still licensing the x86 instruction set from Intel. More recently, AMD sold off its own fabs—the company now directly handles only the design and sale of its processors, rather than handling everything from start to finish.

ARM's operation is more democratized by design. Rather than making and selling any of its own chips, ARM creates and licenses its own processor designs for other companies to use in their chips—this is where we get things like the Cortex-A9 and the Cortex-A15 that sometimes pop up in Ars phone and tablet reviews. Nvidia's Tegra 3 and 4, Samsung's Exynos 4 and 5, and Apple's A5 processors are all examples of SoCs that use ARM's CPU cores. ARM also licenses its instruction set for third parties to use in their own custom CPU designs. This allows companies to put together CPUs that will run the same code as ARM's Cortex designs but have different performance and power consumption characteristics. Both Apple and Qualcomm (with their A6 and Snapdragon S4 chips, respectively) have made their own custom designs that exceed Cortex-A9's performance but generally use less power than Cortex-A15.

The situation is similar on the graphics side. ARM offers its own "Mali" series GPUs that can be licensed the same way its CPU cores are licensed, or companies can make their own GPUs (Nvidia and Qualcomm both take the latter route). There are also some companies that specialize in creating graphics architectures. Imagination Technologies is probably the biggest player in this space, and it licenses its mobile GPU architectures to the likes of Intel, Apple, and Samsung, among others.

Chip designers take these CPU and GPU bits and marry them to other necessary components—a memory interface is necessary, and specialized blocks for things like encoding and decoding video and processing images from a camera are also frequent additions. The result is a single, monolithic chip called a "system on a chip" (SoC) because of its more-or-less self-contained nature.

There are two things that sometimes don't get integrated into the SoC itself. The first is RAM, which is sometimes a separate chip but is often stacked on top of the main SoC to save space (a method called "package-on-package" or PoP for short). The second is wireless connectivity, which is sometimes handled by a separate chip. In smartphones especially, however, the cellular modem is often incorporated into the SoC itself.

While these different ARM SoCs all run the same basic code, there's a lot of variety between chips from different manufacturers. To make things a bit easier to digest, we'll go through all of the major ARM licensees and discuss their respective chip designs, those chips' performance levels, and products that each chip has shown up in. We'll also talk a bit about each chipmaker's plans for the future, to the extent that we know about them, and about the non-ARM SoCs that are slowly making their way into shipping products. Note that this is not intended to be a comprehensive look at all ARM licensees, but rather a thorough primer on the major players in today's and tomorrow's phones and tablets.

Apple

We'll tackle Apple's chips first, since they show up in a pretty small number of products, all of them Apple's own. We'll start with the oldest models and work our way up.

The Apple A4 is the oldest chip still used by current Apple products, namely the fourth-generation iPod touch and the free-with-contract iPhone 4. This chip marries a single Cortex A8 CPU core to a single-core PowerVR SGX 535 GPU and either 256MB or 512MB of RAM (for the iPod and iPhone, respectively). This chip was originally introduced in early 2010 with the original iPad, so it's quite long in the tooth by SoC standards. Our review of the fifth-generation iPod touch shows just how slow this thing is by modern standards, though Apple's tight control of iOS means that it can be optimized to run reasonably well even on old hardware (the current version of iOS runs pretty well on the nearly four-year-old iPhone 3GS).

Next up is the Apple A5, which despite being introduced two years ago is still used in the largest number of Apple products. The still-on-sale iPad 2, the iPhone 4S, the fifth-generation iPod touch, and the iPad mini all have the A5 at their heart. This chip combines a dual-core Cortex A9 CPU, a dual-core PowerVR SGX 543MP2 GPU, and 512MB of RAM. Along with the aforementioned heavy optimization of iOS, this combination has made for quite a long-lived SoC. The A5 also has the greatest number of variants of any Apple chip: the A5X used the same CPU but included the larger GPU, 1GB of RAM, and wider memory interface necessary to power the third-generation iPad's then-new Retina display, and a new variant with a single-core CPU was recently spotted in the Apple TV.

Finally, the most recent chip: the Apple A6. This chip, which to date has appeared only in the iPhone 5, marries two of Apple's custom-designed "Swift" CPU cores to a triple-core Imagination Technologies PowerVR SGX 543MP3 GPU and 1GB of RAM, roughly doubling the performance of the A5 in every respect. The CPU doubles the A5's performance by increasing both the clock speed and the number of instructions per clock the chip can perform relative to Cortex A9. The GPU gets there by adding another core and increasing clock speeds. As with the A5, the A6 has a special A6X variant used in the full-sized iPad that uses the same dual-core CPU but ups the ante in the graphics department with a quad-core PowerVR SGX 554MP4 and a wider memory interface.

Apple SoCs all prioritize graphics performance over everything else, both to support the large number of games available for the platform and to further Apple's push toward high-resolution display panels. The chips tend to have less CPU horsepower and RAM than the chips used in most high-end Android phones (Apple has yet to ship a quad-core CPU, opting instead to push dual-core chips), but tight control over iOS makes this a non-issue. Apple has a relative handful of iOS devices it needs to support, so it's trivial for Apple and third-party developers to make whatever tweaks and optimizations they need to keep the operating system and its apps running smoothly even if the hardware is a little older. Whatever you think of Apple's policies and its "walled garden" approach to applications, this is where the tight integration between the company's hardware and software pays off.

Knowing what we do about Apple's priorities, we can make some pretty good educated guesses about what we'll see in a hypothetical A7 chip even if the company never gives details about its chips before they're introduced (or even after, since we often have to rely on outfits like Chipworks to take new devices apart before we can say for sure what's in them).

On the CPU side, we'd bet that Apple will focus on squeezing more performance out of Swift, whether by improving the architecture's efficiency or increasing the clock speed. A quad-core version is theoretically possible, but to date Apple has focused on fewer fast CPU cores rather than more, slower ones, most likely out of concern about power consumption and the total die size of the SoC (the larger the chip, the more it costs to produce, and Apple loves its profit margins). As for the GPU, Imagination's next-generation PowerVR Series6 GPUs are right around the corner. Since Apple has used Imagination exclusively in its custom chips up until now, it's not likely to rock this boat.
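To make the die-size point above a little more concrete, here is a rough sketch of why a larger chip costs more to produce. It uses a common first-order estimate of how many rectangular dies fit on a round wafer. The wafer cost and both die areas are hypothetical numbers picked for illustration, not figures for any Apple chip, and the estimate ignores manufacturing defects, which would penalize larger dies even further.

```python
import math


def dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """First-order estimate of gross dies per wafer.

    Wafer area divided by die area, minus a correction for the
    partial dies lost around the circular edge.
    """
    radius = wafer_diameter_mm / 2
    gross = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(gross - edge_loss)


def cost_per_die(wafer_cost: float, wafer_diameter_mm: float,
                 die_area_mm2: float) -> float:
    """Wafer cost spread evenly over every die (defects ignored)."""
    return wafer_cost / dies_per_wafer(wafer_diameter_mm, die_area_mm2)


# Hypothetical inputs, for illustration only.
WAFER_DIAMETER_MM = 300.0
WAFER_COST = 5000.0

for area in (100.0, 150.0):
    count = dies_per_wafer(WAFER_DIAMETER_MM, area)
    cost = cost_per_die(WAFER_COST, WAFER_DIAMETER_MM, area)
    print(f"{area:.0f} mm^2 die: {count} dies per wafer, {cost:.2f} per die")
```

Under these assumptions, a die that is 50 percent larger ends up costing a bit more than 50 percent extra, because fewer whole dies fit near the wafer's edge.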

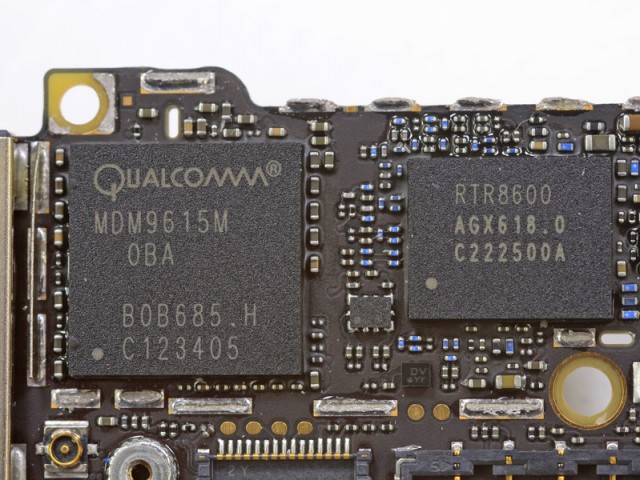

Qualcomm

Qualcomm is hands-down the biggest player in the mobile chipmaking game right now. Even Samsung, a company that makes and ships its own SoCs in the international versions of its phones, often goes with Qualcomm chips in the US. With this popularity comes complexity: Wikipedia lists 19 distinct model numbers in the Snapdragon S4 lineup alone, and those aren't even Qualcomm's newest chips. So we'll pick four of the most prominent to focus on, since these are the ones you're most likely to see in a device you could buy in the next year or so.

Let's start with the basics: Qualcomm is the only company on our list that creates both its own CPU and GPU architectures, rather than licensing one or the other design from ARM or another company. Its current CPU architecture, called "Krait," is faster clock-for-clock than ARM's Cortex A9 but slower than Cortex A15 (the upside is that it's also more power-efficient than A15). Its GPU products are called "Adreno," and they actually have their roots in a mobile graphics division that Qualcomm bought from AMD back in 2009 for a scant $65 million. Both CPU and GPU tend to be among the faster products on the market today, which is one of the reasons why they're so popular.

The real secret to Qualcomm's success, though, is its prowess in cellular modems. For quite a while, Qualcomm was the only company offering chips with an LTE modem integrated into the SoC itself. Plenty of phones make room for separate modems and SoCs, but integrating the modem into the SoC frees up space on the phone's logic board, saves a little bit of power, and keeps OEMs from having to buy yet another chip. Even companies that make their own chips use Qualcomm modems: as we noted, almost all of Samsung's US products come with a Qualcomm chip, and phones like the BlackBerry Z10 use a Qualcomm chip in the US even though they use a Texas Instruments chip abroad. Even Apple's current iPhones use one or another (separate) Qualcomm chip to provide connectivity.

Add these modems to Qualcomm's competitive CPUs and GPUs, and it's no wonder the Snapdragon has been such a success for the company. Qualcomm will finally start to see some real challenge on this front soon: Broadcom, Nvidia, and Intel are all catching up and should be shipping their own LTE modems this year, but for now Qualcomm's solutions are established and mature. Expect Qualcomm to continue to provide connectivity for most devices.

Let's get to the Snapdragon chips themselves, starting with the oldest and working our way up. Qualcomm's Snapdragon S4 Plus, particularly the highest-end model (part number MSM8960), combines two Krait cores running at 1.7GHz with an Adreno 225 GPU. This GPU is roughly comparable to the Imagination Technologies GPU in Apple's A5, while the Krait CPU is somewhere between the A5 and the A6. This chip is practically everywhere: it powers high-end Android phones from a year or so ago (the US version of Samsung's Galaxy S III) as well as high-end phones from other ecosystems (Nokia's Lumia 920 among many other Windows phones, plus BlackBerry's Z10). It's still a pretty popular choice for those who want to make a phone but don't want to spend the money (or provide the larger battery) for Qualcomm's heavy-duty quad-core SoCs. Look for the S4 Plus series to be replaced in mid-range phones by the Snapdragon 400 series chips, which combine the same dual-core Krait CPU with a slightly more powerful Adreno 305 GPU (the HTC First is the first new midrange phone to use it; others will likely follow).

Next up is the Snapdragon S4 Pro (in particular, part number APQ8064). This chip combines a quad-core Krait CPU with a significantly beefed up Adreno 320 GPU. Both CPU and GPU trade blows with Apple's A6 in our standard benchmarks, but the CPU is usually faster as long as all four of its cores are actually being used by your apps. This chip is common in high-end phones released toward the end of last year, including such noteworthy models as LG's Optimus G, the Nexus 4, and HTC's Droid DNA. It's powerful, but it can get a little toasty: if you've been running the SoC full-tilt for a while, the Optimus G's screen brightness will automatically turn down to reduce the heat, and the Nexus 4 will throttle the chip and slow down if it's getting too hot.

The fastest, newest Qualcomm chip that's actually showing up in phones now is the Snapdragon 600, a chip Qualcomm unveiled at CES back in January. Like the S4 Pro, this Snapdragon features a quad-core Krait CPU and Adreno 320 GPU, but that doesn't mean they're the same chip. The Krait in the Snapdragon 600 is a revision called "Krait 300" that both runs at a higher clock speed than the S4 Pro's Krait (1.9GHz compared to 1.7GHz) and includes a number of architectural tweaks that make it faster than the original Krait at the same clock speed. The Snapdragon 600 will be coming to us in high-end phones like the US version of Samsung's Galaxy S4, HTC's One, and LG's Optimus G Pro. Our benchmarks for the latter phone show the Snapdragon 600 outdoing the S4 Pro by 25 to 30 percent in many tests, which is a sizable step up (though the Adreno 320 GPU is the same in both chips).
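As a back-of-the-envelope check on the figures above, the clock-for-clock improvement can be roughly separated from the clock speed bump. The sketch below assumes, as a simplification, that benchmark performance scales with clock speed multiplied by per-clock throughput. Real benchmarks also depend on memory, software, and (in graphics tests) the unchanged GPU, so treat the result as an estimate only. The function name is ours.

```python
def implied_per_clock_gain(overall_speedup: float,
                           old_clock_ghz: float,
                           new_clock_ghz: float) -> float:
    """Per-clock speedup left over once the clock increase is factored out.

    Assumes performance ~ clock speed x per-clock throughput.
    """
    clock_ratio = new_clock_ghz / old_clock_ghz
    return overall_speedup / clock_ratio


# Clock speeds and benchmark range quoted in the text.
S4_PRO_CLOCK_GHZ = 1.7
SNAPDRAGON_600_CLOCK_GHZ = 1.9

for overall in (1.25, 1.30):
    gain = implied_per_clock_gain(overall, S4_PRO_CLOCK_GHZ,
                                  SNAPDRAGON_600_CLOCK_GHZ)
    print(f"overall speedup {overall:.2f}x -> per-clock gain of about {gain:.2f}x")
```

Under this simple model, the higher clock accounts for a little under 12 percent of the improvement, and the rest would be down to the Krait 300's architectural tweaks.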

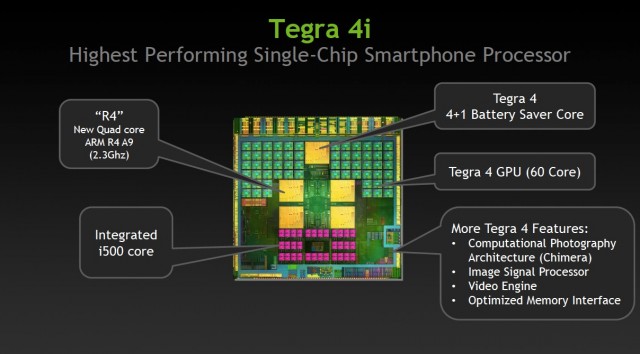

Finally, look ahead to the future and you'll see the Snapdragon 800, Qualcomm's next flagship chip that's due in the second quarter of this year. This chip's quad-core Krait 400 CPU again introduces a few mild tweaks that should make it faster clock-for-clock than the Krait 300, and it also runs at a speedier 2.3GHz. The chip sports an upgraded Adreno 330 GPU that supports a massive 3840×2160 resolution, and it also has a 64-bit memory interface (everything we've discussed up until now has used a 32-bit interface). All of this extra hardware suggests that this chip is destined for tablets rather than smartphones (a market segment where Qualcomm is less prevalent), but this doesn't necessarily preclude its use in high-end smartphones. We'll know more once the first round of Snapdragon 800-equipped devices is announced.
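To put the resolution and memory interface numbers in perspective, here is a small sketch of the arithmetic. The 4 bytes per pixel and the memory transfer rate are our assumptions for illustration, not Qualcomm specifications. The only point being made is that a 3840×2160 frame holds four times the pixels of a 1920×1080 one, and that doubling the bus width doubles peak bandwidth at a given transfer rate.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in one frame."""
    return width * height


def framebuffer_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed frame (assumes 4 bytes per pixel by default)."""
    return pixel_count(width, height) * bytes_per_pixel


def peak_bandwidth_bytes(bus_width_bits: int, transfers_per_second: int) -> int:
    """Theoretical peak memory bandwidth: bytes per transfer x transfers per second."""
    return (bus_width_bits // 8) * transfers_per_second


ratio = pixel_count(3840, 2160) / pixel_count(1920, 1080)
print(f"3840x2160 has {ratio:.0f}x the pixels of 1920x1080")
print(f"One 3840x2160 frame: {framebuffer_bytes(3840, 2160) / 2**20:.1f} MiB")

# Hypothetical transfer rate, used only to compare the two bus widths.
ASSUMED_TRANSFERS_PER_SECOND = 1_000_000_000
for width_bits in (32, 64):
    bandwidth = peak_bandwidth_bytes(width_bits, ASSUMED_TRANSFERS_PER_SECOND)
    print(f"{width_bits}-bit interface: {bandwidth / 1e9:.1f} GB/s peak")
```

Pushing four times as many pixels needs correspondingly more memory traffic, which is one plausible reason a wider memory interface accompanies the higher supported resolution.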

Qualcomm is in a good position. Its chips are widely used, and its roadmap evolves at a brisk and predictable pace. Things may look less rosy for the company when competing LTE modems start to become more common, but for now it's safe to say that most of the US' high-end phones are going to keep using Qualcomm chips.

Samsung