Entries tagged as network

Tuesday, October 01. 2013

France sanctions Google for European privacy law violations

Via pcworld

-----

Google faces financial sanctions in France after failing to comply with an order to alter how it stores and shares user data to conform to the nation's privacy laws.

The enforcement follows an analysis led by European data protection authorities of a new privacy policy that Google enacted in 2012, France's privacy watchdog, the Commission Nationale de L'Informatique et des Libertes, said Friday on its website.

Google was ordered in June by the CNIL to comply with French data protection laws within three months. But Google had not changed its policies to comply with French laws by a deadline on Friday, because the company said that France's data protection laws did not apply to users of certain Google services in France, the CNIL said.

The company "has not implemented the requested changes," the CNIL said.

As a result, "the chair of the CNIL will now designate a rapporteur for the purpose of initiating a formal procedure for imposing sanctions, according to the provisions laid down in the French data protection law," the watchdog said. Google could be fined a maximum of €150,000 ($202,562), or €300,000 for a second offense, and could in some circumstances be ordered to refrain from processing personal data in certain ways for three months.

What bothers France

The CNIL took issue with several areas of Google's data policies, in particular how the company stores and uses people's data. How Google informs users about data that it processes and obtains consent from users before storing tracking cookies were cited as areas of concern by the CNIL.

In a statement, Google said that its privacy policy respects European law. "We have engaged fully with the CNIL throughout this process, and we'll continue to do so going forward," a spokeswoman said.

Google is also embroiled with European authorities in an antitrust case for allegedly breaking competition rules. The company recently submitted proposals to avoid fines in that case.

Monday, September 09. 2013

Why cards are the future of the web

-----

Cards are fast becoming the best design pattern for mobile devices.

We are currently witnessing a re-architecture of the web, away from pages and destinations, towards completely personalised experiences built on an aggregation of many individual pieces of content. Content being broken down into individual components and re-aggregated is the result of the rise of mobile technologies, billions of screens of all shapes and sizes, and unprecedented access to data from all kinds of sources through APIs and SDKs. This is driving the web away from many pages of content linked together, towards individual pieces of content aggregated together into one experience.

The aggregation depends on:

- The person consuming the content and their interests, preferences, behaviour.

- Their location and environmental context.

- Their friends’ interests, preferences and behaviour.

- The targeted advertising ecosystem.

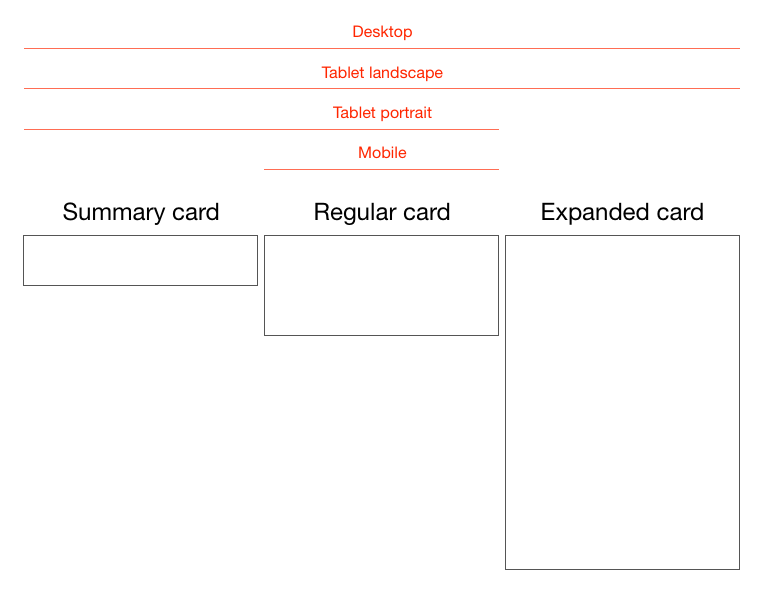

If the predominant medium of our time is set to be the portable screen (think phones and tablets), then the predominant design pattern is set to be cards. The signs are already here…

Twitter is moving to cards

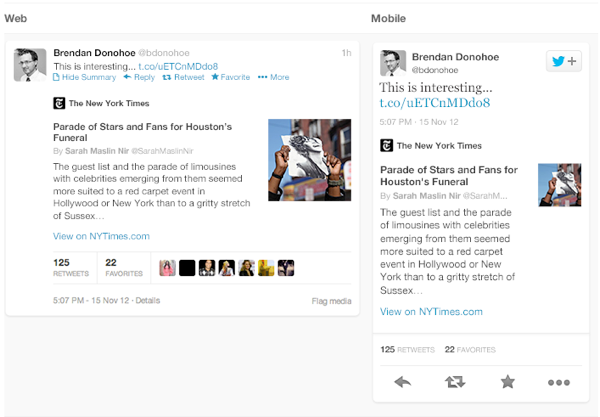

Twitter recently launched Cards, a way to attach multimedia inline with tweets. Now the NYT should care more about how their story appears on the Twitter card (right hand in image above) than on their own web properties, because the likelihood is that the content will be seen more often in card format.

Google is moving to cards

With Google Now, Google is rethinking information distribution, away from search, to personalised information pushed to mobile devices. Their design pattern for this is cards.

Everyone is moving to cards

Pinterest (above left) is built around cards. The new Discover feature on Spotify (above right) is built around cards. Much of Facebook now represents cards. Many parts of iOS7 are now card based, for example the app switcher and Airdrop.

The list goes on. The most exciting thing is that despite these many early card based designs, I think we’re only getting started. Cards are an incredible design pattern, and they have been around for a long time.

Cards give bursts of information

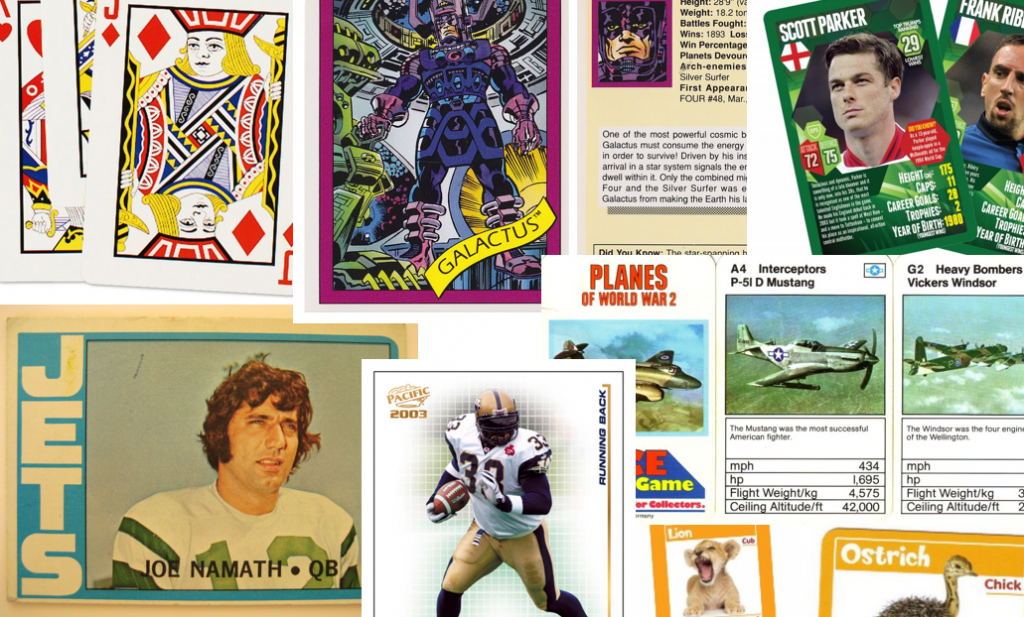

Cards as an information dissemination medium have been around for a very long time. Imperial China used them in the 9th century for games. Trade cards in 17th century London helped people find businesses. In 18th century Europe footmen of aristocrats used cards to introduce the impending arrival of the distinguished guest. For hundreds of years people have handed around business cards.

We send birthday cards, greeting cards. My wallet is full of debit cards, credit cards, my driving licence card. During my childhood, I was surrounded by games with cards. Top Trumps, Pokemon, Panini sticker albums and swapsies. Monopoly, Cluedo, Trivial Pursuit. Before computer technology, air traffic controllers used cards to manage the planes in the sky. Some still do.

Cards are a great medium for communicating quick stories. Indeed the great (and terrible) films of our time are all storyboarded using a card-like format. Each card representing a scene. Card, Card, Card. Telling the story. Think about flipping through printed photos, each photo telling its own little tale. When we travelled we sent back postcards.

What about commerce? Cards are the predominant pattern for coupons. Remember cutting out the corner of the breakfast cereal box? Or being handed coupon cards as you walk through a shopping mall? Circulars, sent out to hundreds of millions of people every week are a full page aggregation of many individual cards. People cut them out and stick them to their fridge for later.

Cards can be manipulated.

In addition to their reputable past as an information medium, the most important thing about cards is that they are almost infinitely manipulatable. See the simple example above from Samuel Couto. Think about cards in the physical world. They can be turned over to reveal more, folded for a summary and expanded for more details, stacked to save space, sorted, grouped, and spread out to survey more than one.

When designing for screens, we can take advantage of all these things. In addition, we can take advantage of animation and movement. We can hint at what is on the reverse, or that the card can be folded out. We can embed multimedia content, photos, videos, music. There are so many new things to invent here.

Cards are perfect for mobile devices and varying screen sizes. Remember, mobile devices are the heart and soul of the future of your business, no matter who you are and what you do. On mobile devices, cards can be stacked vertically, like an activity stream on a phone. They can be stacked horizontally, adding a column as a tablet is turned 90 degrees. They can be a fixed or variable height.

Cards are the new creative canvas

It’s already clear that product and interaction designers will heavily use cards. I think the same is true for marketers and creatives in advertising. As social media continues to rise, and continues to fragment into many services, taking up more and more of our time, marketing dollars will inevitably follow. The consistent thread through these services, the predominant canvas for creativity, will be card based. Content consumption on Facebook, Twitter, Pinterest, Instagram, Line, you name it, is all built on the card design metaphor.

I think there is no getting away from it. Cards are the next big thing in design and the creative arts. To me that’s incredibly exciting.

Wednesday, September 04. 2013

The wireless network with a mile-wide range that the “internet of things” could be built on

Via quartz

-----

Robotics engineer Taylor Alexander needed to lift a nuclear cooling tower off its foundation using 19 high-strength steel cables, and the Android app that was supposed to accomplish it, for which he’d just paid a developer $20,000, was essentially worthless. Undaunted and on deadline—the tower needed a new foundation, and delays meant millions of dollars in losses—he re-wrote the app himself. That’s when he discovered just how hard it is to connect to sensors via the standard long-distance industrial wireless protocol, known as Zigbee.

It took him months of hacking just to create a system that could send him a single number—which represented the strain on each of the cables—from the sensors he was using. Surely, he thought, there must be a better way. And that’s when he realized that the solution to his problem would also unlock the potential of what’s known as the “internet of things” (the idea that every object we own, no matter how mundane, is connected to the internet and can be monitored and manipulated via the internet, whether it’s a toaster, a lightbulb or your car).

The result is an in-the-works project called Flutter. It’s what Taylor calls a “second network”—an alternative to Wi-Fi that can cover 100 times as great an area, with a range of 3,200 feet, using relatively little power, and is either the future of the way that all our connected devices will talk to each other or a reasonable prototype for it.

“We have Wi-Fi in our homes, but it’s not a good network for our things,” says Taylor. Wi-Fi was designed for applications that require fast connections, like streaming video, but it’s vulnerable to interference and has a limited range—often, not enough even to cover an entire house.

For applications with a very limited range—for example anything on your body that you might want to connect with your smartphone—Bluetooth, the wireless protocol used by keyboards and smart watches, is good enough. For industrial applications, the Zigbee standard has been in use for at least a decade. But there are two problems with Zigbee: the first is that, as Alexander discovered, it’s difficult to use. The second is that the Zigbee devices are not open source, which makes them difficult to integrate with the sort of projects that hardware startups might want to create.

Flutter’s nearest competitors, Spark Core and Electric Imp, both use Wi-Fi, which limits their usability to home-bound projects like adding your eggs to the internet of things and klaxons that tell you when your favorite Canadian hockey team has scored a goal.

Flutter’s other differentiator is cost; a Flutter radio costs just $20, which still allows Taylor a healthy margin above the $6 in parts that comprise the Flutter.

Making Flutter cheap means that hobbyists can connect that many more devices—say, all the lights in a room, or temperature and moisture sensors in a greenhouse. No one is quite sure what the internet of things will lead to because the enabling technologies, including cheap wireless radios like Flutter, have yet to become widespread. The present day internet of things is a bit like where personal computers were around the time Steve Wozniak and Steve Jobs were showing off their Apple I at the Palo Alto home-brew computer club: It’s mostly hobbyists, with a few big corporations sniffing around the periphery.

“I think the internet of things is not going to start with products, but projects,” says Taylor. His goal is to use the current crowd-funding effort for Flutter to pay for the coding of the software protocol that will run Flutter, since the microchips it uses are already available from manufacturers. The resulting software will allow Flutter to create a “mesh network,” which would allow individual Flutter radios to re-transmit data from any other Flutter radio that’s in range, potentially giving hobbyists or startups the ability to cover whole cities with networks of Flutter radios and their attached sensors.
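The re-transmission idea can be illustrated with a minimal sketch. This is not Flutter's protocol, which the article says had yet to be written; it is a simple flooding model in which every radio passes a message on to any radio within the quoted 3,200-foot range. The radio names and positions are hypothetical.

```python
from collections import deque
from math import dist

RANGE_FEET = 3200  # range quoted in the article


def reachable(radios, source, radio_range=RANGE_FEET):
    """Return the names of all radios a message from `source` can reach
    when every radio re-transmits to each radio within range."""
    seen = {source}
    queue = deque([source])
    while queue:
        current = queue.popleft()
        for name, position in radios.items():
            if name not in seen and dist(radios[current], position) <= radio_range:
                seen.add(name)
                queue.append(name)
    return seen


# Hypothetical layout: (x, y) positions in feet along a street.
radios = {
    "a": (0, 0),
    "b": (3000, 0),
    "c": (6000, 0),
    "d": (12000, 0),  # out of range of every other radio
}

print(sorted(reachable(radios, "a")))  # ['a', 'b', 'c']
```

Radio "c" is 6,000 feet from "a", well beyond a single hop, yet it is reached through "b". That is how a mesh extends coverage past the range of any one radio.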

Saturday, June 29. 2013

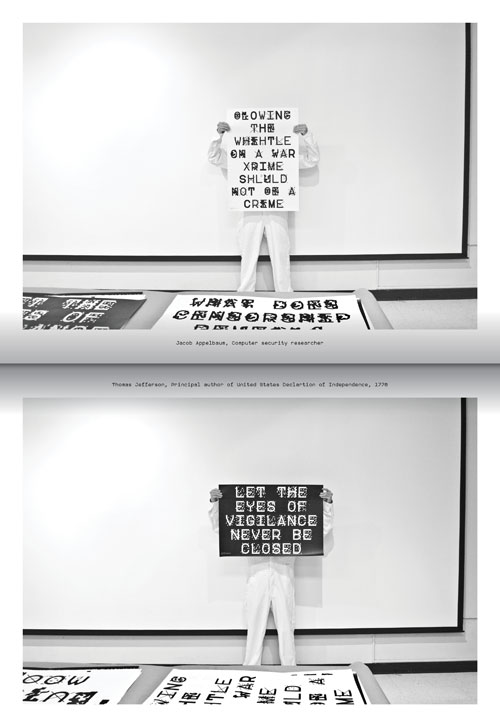

Jumbled Up, No Detection Font Prevents Infringement of Privacy

Via TAXI

-----

Fed up with the NSA’s infringement of privacy, an internet user by the name of Sang Mun has developed a font which cannot be read by computers.

Called ‘ZXX’, after the code the Library of Congress uses to state that a document has “no linguistic content”, the font is garbled in such a way that computers using Optical Character Recognition (OCR) will not be able to recognize it.

Available in four “disguises”, this font uses camouflage techniques to trick the computers of governments and corporations into thinking that no useful information can be collated from people, while remaining readable to the human eye.

The font developer urges users to fight against this infringement of privacy, and has made this font free for all users on his website.

Friday, May 17. 2013

Landlords Double as Energy Brokers

-----

Equinix’s data center in Secaucus is highly coveted space for financial traders, given its proximity to the servers that move trades for Wall Street.

The trophy high-rises on Madison, Park and Fifth Avenues in Manhattan have long commanded the top prices in the country for commercial real estate, with yearly leases approaching $150 a square foot. So it is quite a Gotham-size comedown that businesses are now paying rents four times that in low, bland buildings across the Hudson River in New Jersey.

Why pay $600 or more a square foot at unglamorous addresses like Weehawken, Secaucus and Mahwah? The answer is still location, location, location — but of a very different sort.

Companies are paying top dollar to lease space there in buildings called data centers, the anonymous warrens where more and more of the world’s commerce is transacted, all of which has added up to a tremendous boon for the business of data centers themselves.

The centers provide huge banks of remote computer storage, and the enormous amounts of electrical power and ultrafast fiber optic links that they demand.

Prices are particularly steep in northern New Jersey because it is also where data centers house the digital guts of the New York Stock Exchange and other markets. Bankers and high-frequency traders are vying to have their computers, or servers, as close as possible to those markets. Shorter distances make for quicker trades, and microseconds can mean millions of dollars made or lost.

When the centers opened in the 1990s as quaintly termed “Internet hotels,” the tenants paid for space to plug in their servers with a proviso that electricity would be available. As computing power has soared, so has the need for power, turning that relationship on its head: electrical capacity is often the central element of lease agreements, and space is secondary.

A result, an examination shows, is that the industry has evolved from a purveyor of space to an energy broker — making tremendous profits by reselling access to electrical power, and in some cases raising questions of whether the industry has become a kind of wildcat power utility.

Even though a single data center can deliver enough electricity to power a medium-size town, regulators have granted the industry some of the financial benefits accorded the real estate business and imposed none of the restrictions placed on the profits of power companies.

Some of the biggest data center companies have won or are seeking Internal Revenue Service approval to organize themselves as real estate investment trusts, allowing them to eliminate most corporate taxes. At the same time, the companies have not drawn the scrutiny of utility regulators, who normally set prices for delivery of the power to residences and businesses.

While companies have widely different lease structures, with prices ranging from under $200 to more than $1,000 a square foot, the industry’s performance on Wall Street has been remarkable. Digital Realty Trust, the first major data center company to organize as a real estate trust, has delivered a return of more than 700 percent since its initial public offering in 2004, according to an analysis by Green Street Advisors.

The stock price of another leading company, Equinix, which owns one of the prime northern New Jersey complexes and is seeking to become a real estate trust, more than doubled last year to over $200.

“Their business has grown incredibly rapidly,” said John Stewart, a senior analyst at Green Street. “They arrived at the scene right as demand for data storage and growth of the Internet were exploding.”

Push for Leasing

While many businesses own their own data centers — from stacks of servers jammed into a back office to major stand-alone facilities — the growing sophistication, cost and power needs of the systems are driving companies into leased spaces at a breakneck pace.

The New York metro market now has the most rentable square footage in the nation, at 3.2 million square feet, according to a recent report by 451 Research, an industry consulting firm. It is followed by the Washington and Northern Virginia area, and then by San Francisco and Silicon Valley.

A major orthopedics practice in Atlanta illustrates how crucial these data centers have become.

With 21 clinics scattered around Atlanta, Resurgens Orthopaedics has some 900 employees, including 170 surgeons, therapists and other caregivers who treat everything from fractured spines to plantar fasciitis. But its technological engine sits in a roughly 250-square-foot cage within a gigantic building that was once a Sears distribution warehouse and is now a data center operated by Quality Technology Services.

Eight or nine racks of servers process and store every digital medical image, physician’s schedule and patient billing record at Resurgens, said Bradley Dick, chief information officer at the company. Traffic on the clinics’ 1,600 telephones is routed through the same servers, Mr. Dick said.

“That is our business,” Mr. Dick said. “If those systems are down, it’s going to be a bad day.”

The center steadily burns 25 million to 32 million watts, said Brian Johnston, the chief technology officer for Quality Technology. That is roughly the amount needed to power 15,000 homes, according to the Electric Power Research Institute.

Mr. Dick said that 75 percent of Resurgens’s lease was directly related to power — essentially for access to about 30 power sockets. He declined to cite a specific dollar amount, but two brokers familiar with the operation said that Resurgens was probably paying a rate of about $600 per square foot a year, which would mean it is paying over $100,000 a year simply to plug its servers into those jacks.
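The "over $100,000" figure follows from the numbers quoted above. A quick check, assuming the brokers' estimated rate applies to the whole 250-square-foot cage (the per-socket figure is an illustrative derivation, not one given in the article):

```python
cage_area_sq_ft = 250        # "roughly 250-square-foot cage"
rate_per_sq_ft_year = 600    # brokers' estimate, dollars per square foot per year
power_share = 0.75           # share of the lease directly related to power
power_sockets = 30           # "about 30 power sockets"

annual_lease = cage_area_sq_ft * rate_per_sq_ft_year
annual_power_cost = annual_lease * power_share
cost_per_socket = annual_power_cost / power_sockets

print(f"Annual lease:       ${annual_lease:,}")            # $150,000
print(f"Power-related part: ${annual_power_cost:,.0f}")    # $112,500
print(f"Per socket:         ${cost_per_socket:,.0f}")      # $3,750
```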

While lease arrangements are often written in the language of real estate, “these are power deals, essentially,” said Scott Stein, senior vice president of the data center solutions group at Cassidy Turley, a commercial real estate firm. “These are about getting power for your servers.”

One key to the profit reaped by some data centers is how they sell access to power. Troy Tazbaz, a data center design engineer at Oracle who previously worked at Equinix and elsewhere in the industry, said that behind the flat monthly rate for a socket was a lucrative calculation. Tenants contract for access to more electricity than they actually wind up needing. But many data centers charge tenants as if they were using all of that capacity — in other words, full price for power that is available but not consumed.

Since tenants on average tend to contract for around twice the power they need, Mr. Tazbaz said, those data centers can effectively charge double what they are paying for that power. Generally, the sale or resale of power is subject to a welter of regulations and price controls. For regulated utilities, the average “return on equity” — a rough parallel to profit margins — was 9.25 percent to 9.7 percent for 2010 through 2012, said Lillian Federico, president of Regulatory Research Associates, a division of SNL Energy.
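The doubling Mr. Tazbaz describes is simple arithmetic. A small sketch, using made-up capacity and price figures purely for illustration:

```python
def effective_rate(contracted_kw, used_kw, rate_per_contracted_kw):
    """Price paid per kW actually consumed when the tenant is billed
    for all contracted capacity, used or not."""
    return contracted_kw * rate_per_contracted_kw / used_kw


# Hypothetical tenant: contracts for 10 kW at $100 per kW, uses only 5 kW.
print(effective_rate(contracted_kw=10, used_kw=5, rate_per_contracted_kw=100))  # 200.0
```

A tenant that contracts for twice what it uses pays twice the nominal rate for each unit of power it actually consumes.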

Regulators Unaware

But the capacity pricing by data centers, which emerged in interviews with engineers and others in the industry as well as an examination of corporate documents, appears not to have registered with utility regulators.

Interviews with regulators in several states revealed widespread lack of understanding about the amount of electricity used by data centers or how they profit by selling access to power.

Bernie Neenan, a former utility official now at the Electric Power Research Institute, said that an industry operating outside the reach of utility regulators and making profits by reselling access to electricity would be a troubling precedent. Utility regulations “are trying to avoid a landslide” of other businesses doing the same.

Some data center companies, including Digital Realty Trust and DuPont Fabros Technology, charge tenants for the actual amount of electricity consumed and then add a fee calculated on capacity or square footage. Those deals, often for larger tenants, usually wind up with lower effective prices per square foot.

Regardless of the pricing model, Chris Crosby, chief executive of the Dallas-based Compass Datacenters, said that since data centers also provided protection from surges and power failures with backup generators, they could not be viewed as utilities. That backup equipment “is why people pay for our business,” Mr. Crosby said.

Melissa Neumann, a spokeswoman for Equinix, said that in the company’s leases, “power, cooling and space are very interrelated.” She added, “It’s simply not accurate to look at power in isolation.”

Ms. Neumann and officials at the other companies said their practices could not be construed as reselling electrical power at a profit and that data centers strictly respected all utility codes. Alex Veytsel, chief strategy officer at RampRate, which advises companies on data center, network and support services, said tenants were beginning to resist flat-rate pricing for access to sockets.

“I think market awareness is getting better,” Mr. Veytsel said. “And certainly there are a lot of people who know they are in a bad situation.”

The Equinix Story

The soaring business of data centers is exemplified by Equinix. Founded in the late 1990s, it survived what Jason Starr, director of investor relations, called a “near death experience” when the Internet bubble burst. Then it began its stunning rise.

Equinix’s giant data center in Secaucus is mostly dark except for lights flashing on servers stacked on black racks enclosed in cages. For all its eerie solitude, it is some of the most coveted space on the planet for financial traders. A few miles north, in an unmarked building on a street corner in Mahwah, sit the servers that move trades on the New York Stock Exchange; an almost equal distance to the south, in Carteret, are Nasdaq’s servers.

The data center’s attraction for tenants is a matter of physics: data, which is transmitted as light pulses through fiber optic cables, can travel no faster than about a foot every billionth of a second. So being close to so many markets lets traders operate with little time lag.
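To put that physical limit in concrete terms, here is a rough lower bound on one-way delay using the article's figure of about one foot per billionth of a second. The distances are hypothetical, and real fiber routes are slower and longer than a straight line.

```python
FEET_PER_NANOSECOND = 1.0  # the article's approximate upper limit
FEET_PER_MILE = 5280


def min_one_way_delay_us(miles):
    """Lower bound on one-way signal delay, in microseconds."""
    nanoseconds = miles * FEET_PER_MILE / FEET_PER_NANOSECOND
    return nanoseconds / 1000


for miles in (1, 10):  # hypothetical distances
    print(f"{miles} mile(s): at least {min_one_way_delay_us(miles):.2f} microseconds")
```

Each extra mile adds at least about five microseconds each way, which is why traders pay to be as close as possible to the exchanges' servers.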

As Mr. Starr said: “We’re beachfront property.”

Standing before a bank of servers, Mr. Starr explained that they belonged to one of the lesser-known exchanges located in the Secaucus data center. Multicolored fiber-optic cables drop from an overhead track into the cage, which allows servers of traders and other financial players elsewhere on the floor to monitor and react nearly instantaneously to the exchange. It all creates a dense and unthinkably fast ecosystem of postmodern finance.

Quoting some lyrics by Soul Asylum, Mr. Starr said, “Nothing attracts a crowd like a crowd.” By any measure, Equinix has attracted quite a crowd. With more than 90 facilities, it is the top data center leasing company in the world, according to 451 Research. Last year, it reported revenue of $1.9 billion and $145 million in profits.

But the ability to expand, according to the company’s financial filings, is partly dependent on fulfilling the growing demands for electricity. The company’s most recent annual report said that “customers are consuming an increasing amount of power per cabinet,” its term for data center space. It also noted that given the increase in electrical use and the age of some of its centers, “the current demand for power may exceed the designed electrical capacity in these centers.”

To enhance its business, Equinix has announced plans to restructure itself as a real estate investment trust, or REIT, which, after substantial transition costs, would eventually save the company more than $100 million in taxes annually, according to Colby Synesael, an analyst at Cowen & Company, an investment banking firm.

Congress created REITs in the early 1960s, modeling them on mutual funds, to open real estate investments to ordinary investors, said Timothy M. Toy, a New York lawyer who has written about the history of the trusts. Real estate companies organized as investment trusts avoid corporate taxes by paying out most of their income as dividends to investors.

Equinix is seeking a so-called private letter ruling from the I.R.S. to restructure itself, a move that has drawn criticism from tax watchdogs.

“This is an incredible example of how tax avoidance has become a major business strategy,” said Ryan Alexander, president of Taxpayers for Common Sense, a nonpartisan budget watchdog. The I.R.S., she said, “is letting people broaden these definitions in a way that they kind of create the image of a loophole.”

Equinix, some analysts say, is further from the definition of a real estate trust than other data center companies operating as trusts, like Digital Realty Trust. As many as 80 of its 97 data centers are in buildings it leases, Equinix said. The company then, in effect, sublets the buildings to numerous tenants.

Even so, Mr. Synesael said the I.R.S. has been inclined to view recurring revenue like lease payments as “good REIT income.”

Ms. Neumann, the Equinix spokeswoman, said, “The REIT framework is designed to apply to real estate broadly, whether owned or leased.” She added that converting to a real estate trust “offers tax efficiencies and disciplined returns to shareholders while also allowing us to preserve growth characteristics of Equinix and create significant shareholder value.”

Wednesday, May 15. 2013

Researchers track megacity carbon footprints using mounted sensors

Via SlashGear

-----

Researchers with the NASA Jet Propulsion Laboratory have undertaken a large project that will allow them to measure the carbon footprint of megacities – those with millions of residents, such as Los Angeles and Paris. Such an endeavour is achieved using sensors mounted in high locations above the cities, such as a peak in the San Gabriel Mountains and a high-up level on the Eiffel Tower that is closed to tourist traffic.

The sensors are designed to detect a variety of greenhouse gases, including methane and carbon dioxide, augmenting other stations that are already located in various places globally that measure greenhouse gases. These particular sensors are designed to achieve two purposes: monitor the specific carbon footprint effects of large cities, and as a by-product of that information to show whether such large cities are meeting – or are even capable of meeting – their green initiative goals.

Such measuring efforts will be intensified this year. In Los Angeles, for example, scientists working on the project will add a dozen gas analyzers to various rooftop locations throughout the city, as well as to a Prius that will be driven throughout the city and a research aircraft that will be flown to “methane hotspots.” The data gathered from all these sensors, both present and slated for installation, is then analyzed using software that looks at whether levels have increased, decreased, or are stable, and determines where the gases originated.

One of the examples given is vehicle emissions: using this data, scientists can determine the effects of switching to green vehicles over more traditional ones, and whether the results indicate that the switch is worth pursuing or needs to be further analyzed for potential effectiveness. The Associated Press reported that three years ago California saw 58 percent of its carbon dioxide come from gasoline-powered cars.

California is looking to reduce its emissions levels to a sub-35-percent level over 1990 by the year 2030, a rather ambitious goal. In 2010, it was responsible for producing 408 million tons of carbon dioxide, which outranks just about every country on the planet, putting it about on par with all of Spain. Thus far into the project, the United States and France have each spent approximately $3 million on it.

Thursday, May 02. 2013

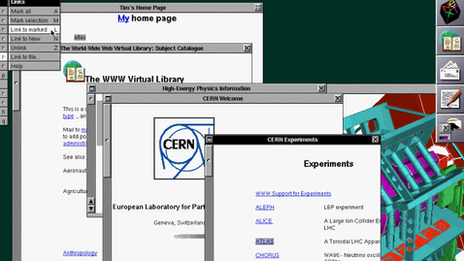

Cern re-creating first web page to revere early ideals

Via BBC

-----

Lost to the world: The first website. At the time, few imagined how ubiquitous the technology would become

A team at the European Organisation for Nuclear Research (Cern) has launched a project to re-create the first web page.

The aim is to preserve the original hardware and software associated with the birth of the web.

The world wide web was developed by Prof Sir Tim Berners-Lee while working at Cern.

The initiative coincides with the 20th anniversary of the research centre giving the web to the world.

According to Dan Noyes, the web manager for Cern's communication group, re-creation of the world's first website will enable future generations to explore, examine and think about how the web is changing modern life.

"I want my children to be able to understand the significance of this point in time: the web is already so ubiquitous - so, well, normal - that one risks failing to see how fundamentally it has changed," he told BBC News.

"We are in a unique moment where we can still switch on the first web server and experience it. We want to document and preserve that".

The hope is that the restoration of the first web page and web site will serve as a reminder and inspiration of the web's fundamental values.

At the heart of the original web is technology to decentralise control and make access to information freely available to all. It is this architecture that seems to imbue those that work with the web with a culture of free expression, a belief in universal access and a tendency toward decentralising information.

Subversive

It is the early technology's innate ability to subvert that makes re-creation of the first website especially interesting.

While I was at Cern it was clear in speaking to those involved with the project that it means much more than refurbishing old computers and installing them with early software: it is about enshrining a powerful idea that they believe is gradually changing the world.

I went to Sir Tim's old office where he worked at Cern's IT department trying to find new ways to handle the vast amount of data the particle accelerators were producing.

I was not allowed in because apparently the present incumbent is fed up with people wanting to go into the office.

But waiting outside was someone who worked at Cern as a young researcher at the same time as Sir Tim. James Gillies has since risen to be Cern's head of communications. He is occasionally referred to as the organisation's half-spin doctor, a reference to one of the properties of some sub-atomic particles.

Amazing dream

Mr Gillies is among those involved in the project. I asked him why he wanted to restore the first website.

"One of my dreams is to enable people to see what that early web experience was like," was the reply.

"You might have thought that the first browser would be very primitive but it was not. It had graphical capabilities. You could edit into it straightaway. It was an amazing thing. It was a very sophisticated thing."

Those not heavily into web technology may be sceptical of the idea that using a 20-year-old machine and software to view text on a web page might be a thrilling experience.

But Mr Gillies and Mr Noyes believe that the first web page and web site are worth resurrecting because embedded within the original systems developed by Sir Tim are the principles of universality and universal access that many enthusiasts at the time hoped would eventually make the world a fairer and more equal place.

The first browser, for example, allowed users to edit and write directly into the content they were viewing, a feature not available on present-day browsers.

Ideals eroded

And early on in the world wide web's development, Nicola Pellow, who worked with Sir Tim at Cern on the www project, produced a simple browser to view content that did not require an expensive powerful computer and so made the technology available to anyone with a simple computer.

According to Mr Noyes, many of the values that went into that original vision have now been eroded. His aim, he says, is to "go back in time and somehow preserve that experience".

Soon to be refurbished: The NeXT computer that was home to the world's first website

"This universal access of information and flexibility of delivery is something that we are struggling to re-create and deal with now.

"Present-day browsers offer gorgeous experiences but when we go back and look at the early browsers I think we have lost some of the features that Tim Berners-Lee had in mind."

Mr Noyes is reaching out to those who were involved with the NeXT computers used by Sir Tim to ask for advice on how to restore the original machines.

Awe

The machines were the most advanced of their time. Sir Tim used two of them to construct the web. One of them is on show in an out-of-the-way cabinet outside Mr Noyes's office.

I told him that as I approached the sleek black machine I felt drawn towards it and compelled to pause, reflect and admire in awe.

"So just imagine the reaction of passers-by if it was possible to bring the machine back to life," he responded, with a twinkle in his eye.

The initiative coincides with the 20th anniversary of Cern giving the web away to the world free.

There was a serious discussion by Cern's management in 1993 about whether the organisation should remain the home of the web or whether it should focus on its core mission of basic research in physics.

Sir Tim and his colleagues on the project argued that Cern should not claim ownership of the web.

Great giveaway

Management agreed and signed a legal document that made the web publicly available in such a way that no one could claim ownership of it and that would ensure it was a free and open standard for everyone to use.

Mr Gillies believes that the document is "the single most valuable document in the history of the world wide web".

He says: "Without it you would have had web-like things but they would have belonged to Microsoft or Apple or Vodafone or whoever else. You would not have a single open standard for everyone."

The web has not brought about the degree of social change some had envisaged 20 years ago. Most web sites, including this one, still tend towards one-way communication. The web space is still dominated by a handful of powerful online companies.

A screen shot from the first browser: Those who saw it say it was "amazing and sophisticated". It allowed people to write directly into content, a feature that modern-day browsers no longer have

But those who study the world wide web, such as Prof Nigel Shadbolt, of Southampton University, believe the principles on which it was built are worth preserving and there is no better monument to them than the first website.

"We have to defend the principle of universality and universal access," he told BBC News.

"That it does not fall into a special set of standards that certain organisations and corporations control. So keeping the web free and freely available is almost a human right."

Tuesday, April 23. 2013

In a Cyber War Is It OK to Kill Enemy Hackers?

Via Big Think

-----

The new Tallinn Manual on the International Law Applicable to Cyber Warfare, which lays out 95 core rules on how to conduct a cyber war, may end up being one of the most dangerous books ever written. Reading through the Tallinn Manual, it's possible to come to the conclusion that - under certain circumstances - nations have the right to use “kinetic force” (real-world weapons like bombs or armed drones) to strike back against enemy hackers. Of course, this doesn’t mean that a bunch of hackers in Shanghai are going to be taken out by a Predator Drone strike anytime soon – but it does mean that a nation abiding by international law conventions – such as the United States – would now have the legal cover to deal with enemy hackers in a considerably more muscular way that goes well beyond just jawboning a foreign government.

Welcome to the brave new world of cyber warfare.

The nearly 300-page Tallinn Manual, which was created by an independent group of twenty international law experts at the request of the NATO Cooperative Cyber Defense Center of Excellence, works through a number of different cyber war scenarios, being careful to base its legal logic on international conventions of war that already exist. As a result, there's a clear distinction between civilians and military combatants and a lot of clever thinking about everything -- from what constitutes a "Cyber Attack" (Rule #30) to what comprises a "Cyber Booby Trap" (Rule #44).

So what, exactly, would justify the killing of an enemy hacker by a sovereign state?

First, you’d have to determine if the cyber attack violated a state’s sovereignty. Most cyber attacks directed against the critical infrastructure or the command-and-control systems of another state would meet that standard. Then, you’d have to determine whether the cyber attack was of sufficient scope and intensity so as to constitute a “use of force” against that sovereign state. Shutting down the power grid for a few hours just for the lulz probably would not be a “use of force,” but if that attack happened to cause death, destruction, and mayhem, then it would presumably meet that threshold and would escalate the legal situation to one of "armed conflict." In such cases, warns the Tallinn Manual, sovereign states should first attempt diplomacy and all other measures before engaging in a retaliatory cyber-strike of proportional scale and scope.

But here's where it gets tricky - once we're in an "armed conflict," hackers could be re-classified as military targets rather than civilian targets, opening them up to military reprisals. They could then be targeted by whatever "kinetic force" we have available.

For now, enemy hackers in places like China can breathe easy. Most of what passes for a cyber attack today – “acts of cyber intelligence gathering and cyber theft” or “cyber operations that involve brief or periodic interruption of non-essential cyber services” – would not fall into the “armed attack” category. Even cyber attacks on, say, a power grid would have to have catastrophic consequences before they justified a lethal military response. As Nick Kolakowski of Slashdot points out:

"In theory, that means a nation under cyber-attack that reaches a certain level—the “people are dying and infrastructure is destroyed” level—can retaliate with very real-world weapons, although the emphasis is still on using cyber-countermeasures to block the incoming attack."

That actually opens up a big legal loophole, and that's what makes the Tallinn Manual potentially so dangerous. Even the lead author of the Tallinn Manual (Michael Schmitt, chairman of the international law department at the U.S. Naval War College) admits that there's actually very little in the manual that specifically references the word "hacker" (and a quick check of the manual's glossary didn't turn up a single entry for "hacker").

Theoretically, a Stuxnet-like hacker attack on a nuclear reactor that spun out of control and resulted in a Fukushima-type scenario could immediately be classified as an act of war, putting the U.S. into "armed conflict." Once we reach that point, anything is fair game. We're already at the point where the U.S. Air Force is re-classifying some of its cyber tools as weapons and preparing its own rules of engagement for dealing with the growing cyber threat from China. It's unclear which, if any, of these "cyber-weapons" would meet the Tallinn Manual's definitional requirement of a cyber counter-attack.

The Tallinn Manual’s recommendations (i.e. the 95 rules) are not binding, but they will likely be considered by the Obama Administration as it orchestrates its responses against escalating hacker threats from China. Rational voices would seem to tell us that the "kinetic force" scenario could never occur, that a state like China would never let things escalate beyond a certain point, and that the U.S. would never begin targeting hackers around the world. Yet, the odds of a catastrophic cyber attack are no longer microscopically small. As a result, will the day ever come when sovereign states take out enemy hackers the same way the U.S. takes out foreign terrorists abroad, and then hide behind the rules of international law embodied within the Tallinn Manual?

Tuesday, March 05. 2013

Google Spectrum Database hits public FCC trial

Via Slash Gear

-----

Google will be conducting a 45-day public trial with the FCC to create a centralized database containing information on free spectrum. The Google Spectrum Database will analyze TV white spaces, which are unused spectrum between TV stations, that can open many doors for possible wireless spectrum expansion in the future. By unlocking these white spaces, wireless providers will be able to provide more coverage in places that need it.

The public trial brings Google one step closer to becoming a certified database administrator for white spaces. Currently the only database administrators are Spectrum Bridge, Inc. and Telcordia Technologies, Inc. Many other companies are applying to be certified, including a big dog like Microsoft. With companies like Google and Microsoft becoming certified, discovery of white spaces should increase monumentally.

Google’s trial allows all industry stakeholders, including broadcasters, cable, wireless microphone users, and licensed spectrum holders, to provide feedback to the Google Spectrum Database. It also allows anyone to track how much TV white space is available in their given area. This entire process is known as dynamic spectrum sharing.
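As a rough illustration of what "tracking how much TV white space is available" could mean, the sketch below treats white space as the set of TV channels in a band that no station in an area occupies. It is not Google's database or API: the function names, the example channel numbers and the list of occupied channels are all hypothetical, and the 6 MHz channel width is an assumption based on US TV channel spacing.

```python
from typing import Iterable, List

# Assumption: each TV channel is 6 MHz wide (US channel spacing).
CHANNEL_WIDTH_MHZ = 6


def free_channels(all_channels: Iterable[int], occupied: Iterable[int]) -> List[int]:
    """Return the channels in the band that no station occupies, sorted."""
    occupied_set = set(occupied)
    return sorted(ch for ch in set(all_channels) if ch not in occupied_set)


def white_space_mhz(all_channels: Iterable[int], occupied: Iterable[int]) -> int:
    """Total unused spectrum in MHz for the given band and occupied channels."""
    return len(free_channels(all_channels, occupied)) * CHANNEL_WIDTH_MHZ


# Hypothetical area: the band covers channels 14-20, stations use 15 and 18.
band = range(14, 21)
in_use = [15, 18]
print(free_channels(band, in_use))
print(white_space_mhz(band, in_use))
```

A real spectrum database would also have to account for location, protected users such as wireless microphones, and interference margins, none of which this sketch models.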

Google’s trial, as well as the collective help of all the other spectrum data administrators, will help unlock more wireless spectrum. It’s a necessity as there is an increasing number of people who are wirelessly connecting to the internet via smartphones, laptops, tablets, and other wireless devices. This trial will open new doors to more wireless coverage (especially in dead zones), Wi-Fi hotspots, and other “wireless technologies”.

Wednesday, December 12. 2012

Why the world is arguing over who runs the internet

Via NewScientist

-----

The ethos of freedom from control that underpins the web is facing its first serious test, says Wendy M. Grossman

WHO runs the internet? For the past 30 years, pretty much no one. Some governments might call this a bug, but to the engineers who designed the protocols, standards, naming and numbering systems of the internet, it's a feature.

The goal was to build a network that could withstand damage and would enable the sharing of information. In that, they clearly succeeded - hence the oft-repeated line from John Gilmore, co-founder of the digital rights group Electronic Frontier Foundation: "The internet interprets censorship as damage and routes around it." These pioneers also created a robust platform on which a guy in a dorm room could build a business that serves a billion people.

But perhaps not for much longer. This week, 2000 people have gathered for the World Conference on International Telecommunications (WCIT) in Dubai in the United Arab Emirates to discuss, in part, whether they should be in charge.

The stated goal of the Dubai meeting is to update the obscure International Telecommunication Regulations (ITRs), last revised in 1988. These relate to the way international telecom providers operate. In charge of this process is the International Telecommunication Union (ITU), an agency set up in 1865 with the advent of the telegraph. Its $200 million annual budget is mainly funded by membership fees from 193 countries and about 700 companies. Civil society groups are only represented if their governments choose to include them in their delegations. Some do, some don't. This is part of the controversy: the WCIT is effectively a closed shop.

Vinton Cerf, Google's chief internet evangelist and co-inventor of the TCP/IP internet protocols, wrote in May that decisions in Dubai "have the potential to put government handcuffs on the net".

The need to update the ITRs isn't surprising. Consider what has happened since 1988: the internet, Wi-Fi, broadband, successive generations of mobile telephony, international data centres, cloud computing. In 1988, there were a handful of telephone companies - now there are thousands of relevant providers.

Controversy surrounding the WCIT gathering has been building for months. In May, 30 digital and human rights organisations from all over the world wrote to the ITU with three demands: first, that it publicly release all preparatory documents and proposals; second, that it open the process to civil society; and third that it ask member states to solicit input from all interested groups at national level. In June, two academics at George Mason University in Virginia - Jerry Brito and Eli Dourado - set up the WCITLeaks site, soliciting copies of the WCIT documents and posting those they received. There were still gaps in late November when .nxt, a consultancy firm and ITU member, broke ranks and posted the lot on its own site.

The issue entered the mainstream when Greenpeace and the International Trade Union Confederation (ITUC) launched the Stop the Net Grab campaign, demanding that the WCIT be opened up to outsiders. At the launch of the campaign on 12 November, Sharan Burrow, general secretary of the ITUC, pledged to fight for as long as it took to ensure an open debate on whether regulation was necessary. "We will stay the distance," she said.

This marks the first time that such large, experienced, international campaigners, whose primary work has nothing to do with the internet, have sought to protect its freedoms. This shows how fundamental a technology the internet has become.

A week later, the European parliament passed a resolution stating that the ITU was "not the appropriate body to assert regulatory authority over either internet governance or internet traffic flows", opposing any efforts to extend the ITU's scope and insisting that its human rights principles took precedence. The US has always argued against regulation.

Efforts by ITU secretary general Hamadoun Touré to spread calm have largely failed. In October, he argued that extending the internet to the two-thirds of the world currently without access required the UN's leadership. Elsewhere, he has repeatedly claimed that the more radical proposals on the table in Dubai would not be passed because they would require consensus.

These proposals raise two key fears for digital rights campaigners. The first concerns censorship and surveillance: some nations, such as Russia, favour regulation as a way to control or monitor content transiting their networks.

The second is financial. Traditional international calls attract settlement fees, which are paid by the operator in the originating country to the operator in the terminating country for completing the call. On the internet, everyone simply pays for their part of the network, and ISPs do not charge to carry each other's traffic. These arrangements underpin network neutrality, the principle that all packets are delivered equally on a "best efforts" basis. Regulation to bring in settlement costs would end today's free-for-all, in which anyone may set up a site without permission. Small wonder that Google is one of the most vocal anti-WCIT campaigners.

How worried should we be? Well, the ITU cannot enforce its decisions, but, as was pointed out at the Stop the Net Grab launch, the system is so thoroughly interconnected that there is plenty of scope for damage if a few countries decide to adopt any new regulatory measures.

This is why so many people want to be represented in a dull, lengthy process run by an organisation that may be outdated to revise regulations that can be safely ignored. If you're not in the room you can't stop the bad stuff.

Wendy M. Grossman is a science writer and the author of net.wars (NYU Press)
