Entries tagged as network

Monday, January 23. 2012

Via eurekalert

-----

Researchers have succeeded in combining the power of quantum

computing with the security of quantum cryptography and have shown that

perfectly secure cloud computing can be achieved using the principles of

quantum mechanics. They have performed an experimental demonstration of

quantum computation in which the input, the data processing, and the

output remain unknown to the quantum computer. The international team of

scientists will publish the results of the experiment, carried out at

the Vienna Center for Quantum Science and Technology (VCQ) at the

University of Vienna and the Institute for Quantum Optics and Quantum

Information (IQOQI), in the forthcoming issue of Science.

Quantum computers are expected to play an important role in future

information processing since they can outperform classical computers at

many tasks. Considering the challenges inherent in building quantum

devices, it is conceivable that future quantum computing capabilities

will exist only in a few specialized facilities around the world – much

like today's supercomputers. Users would then interact with those

specialized facilities in order to outsource their quantum computations.

The scenario follows the current trend of cloud computing: central

remote servers are used to store and process data – everything is done

in the "cloud." The obvious challenge is to make globalized computing

safe and ensure that users' data stays private.

The latest research, to appear in Science, reveals that

quantum computers can provide an answer to that challenge. "Quantum

physics solves one of the key challenges in distributed computing. It

can preserve data privacy when users interact with remote computing

centers," says Stefanie Barz, lead author of the study. This newly

established fundamental advantage of quantum computers enables the

delegation of a quantum computation from a user who does not hold any

quantum computational power to a quantum server, while guaranteeing that

the user's data remain perfectly private. The quantum server performs

calculations, but has no means to find out what it is doing – a

functionality not known to be achievable in the classical world.

The scientists in the Vienna research group have demonstrated the

concept of "blind quantum computing" in an experiment: they performed

the first known quantum computation during which the user's data stayed

perfectly encrypted. The experimental demonstration uses photons, or

"light particles" to encode the data. Photonic systems are well-suited

to the task because quantum computation operations can be performed on

them, and they can be transmitted over long distances.

The process works in the following manner. The user prepares qubits –

the fundamental units of quantum computers – in a state known only to

himself and sends these qubits to the quantum computer. The quantum

computer entangles the qubits according to a standard scheme. The actual

computation is measurement-based: the processing of quantum information

is implemented by simple measurements on qubits. The user tailors

measurement instructions to the particular state of each qubit and sends

them to the quantum server. Finally, the results of the computation are

sent back to the user who can interpret and utilize the results of the

computation. Even if the quantum computer or an eavesdropper tries to

read the qubits, they gain no useful information, without knowing the

initial state; they are "blind."

The research at the Vienna Center for

Quantum Science and Technology (VCQ) at the University of Vienna and at

the Institute for Quantum Optics and Quantum Information (IQOQI) of the

Austrian Academy of Sciences was undertaken in collaboration with the

scientists who originally invented the protocol, based at the University

of Edinburgh, the Institute for Quantum Computing (University of

Waterloo), the Centre for Quantum Technologies (National University of

Singapore), and University College Dublin.

Publication: "Demonstration of Blind Quantum Computing"

Stefanie Barz, Elham Kashefi, Anne Broadbent, Joseph Fitzsimons, Anton Zeilinger, Philip Walther.

DOI: 10.1126/science.1214707

Tuesday, January 10. 2012

Via TechnologyReview

-----

Microsoft has developed a new kind of Wi-Fi network that performs at

its top speed even in the face of interference. It takes advantage of a

new Wi-Fi standard that uses more of the electromagnetic spectrum, but

also hops between the narrow bands of unused spectrum within television

broadcast frequencies.

In 2008, the U.S. Federal Communications Commission approved limited use of "white spaces"—portions

of spectrum adjacent to existing television transmissions. The ruling,

in effect, expanded the available spectrum. Microsoft developed the new

network partly as a way to push Congress to allow much broader use of

white spaces, despite some concerns over interference with some other

types of wireless devices, such as wireless microphones.

The fastest Wi-Fi networks, which can transmit data at up to a

gigabit per second, use as much spectrum as possible, up to 160

megahertz, to maximize bandwidth. Krishna Chintalapudi and his team at

Microsoft Research have pioneered an approach, called WiFi-NC, which

makes efficient use of these white spaces at these speeds.

Rather than using a conventional Wi-Fi radio, it uses an array of

tiny, low-data rate transmitters and receivers. Each of these broadcast

and receive via a different, narrow range of spectrum. Bundled together,

they work just like a regular Wi-Fi radio, but can switch between

white-space frequencies far more efficiently.

That means the system is compatible with existing equipment. "The

entire reception and transmission logic could be reused from existing

Wi-Fi implementations," says Chintalapudi.

The team calls these transmitters and receivers "receiver-lets" and

"transmitter-lets." Together, they make up what's known as a "compound

radio."

The resulting wireless network doesn't increase data rates in

specific ranges of spectrum above what's currently achieved with

latest-generation technology. It does, however, make more efficient use

of the entire range of spectrum, and especially the white spaces freed

up by the FCC.

The new radio integrates with a previous Microsoft project that provides a wireless device with access to a database of available white-space

spectrum in any part of the United States. That system, called

SenseLess, tells a device where it can legally broadcast and receive.

WiFi-NC then chooses the bands of spectrum that have the least

interference, and broadcasts over them.

By sending its signal over many smaller radios that operate in

slivers of the available spectrum, WiFi-NC suffers less interference and

experiences faster speeds even when a user is at the intersection of

overlapping networks. This is important because the white spaces that

may be authorized for commercial use by the FCC are at the lower ends of

the electromagnetic spectrum, where signals can travel much further

than existing Wi-Fi transmissions.

Whether or not Microsoft's WiFi-NC technology gets commercialized depends on Congress, says Kevin Werbach,

a professor at the University of Pennsylvania's Wharton Business

School, and an expert on the FCC's effort to make more spectrum

available for wireless data transmission.

"The problem is that many of the Congressional proposals to give the

FCC [the authority to auction off currently unused bandwidth] also

restrict it from making available white spaces for devices around that

spectrum," says Werbach.

Microsoft hopes WiFi-NC will persuade Congress to approve wider use of white spaces.

"It is our opinion that WiFi-NC's approach of using multiple narrow

channels as opposed to the current model of using wider channels in an

all-or-nothing style is the more prudent approach for the future of

Wi-Fi and white spaces," says Chintalapudi. The team's ultimate goal, he

adds, is to propose WiFi-NC as a new wireless standard for the hardware

and software industries.

Wednesday, January 04. 2012

Via PCWorld

-----

Hackers reportedly plan to fight back against Internet censorship by

putting their own communications satellites into orbit and developing a

grid of ground stations to track and communicate with them.

The news comes as the tech world is up in arms about proposed legislation that many feel would threaten online freedom.

According to BBC News, the satellite plan was recently outlined at the Chaos Communication Congress in Berlin. It's being called the "Hackerspace Global Grid."

If you don't like the idea of hackers being able to communicate better,

hacker activist Nick Farr said knowledge is the only motive of the

project, which also includes the development of new electronics that can

survive in space, and launch vehicles that can get them there.

Farr and his cohorts are working on the project along with Constellation, a German aerospace research initiative that involves interlinked student projects.

You might think it would be hard for just anybody to put a satellite

into space, but hobbyists and amateurs have been able in recent years to

use balloons to get them up there. However, without the deep pockets of

national agencies or large companies they have a hard time tracking the

devices.

To

better locate their satellites, the German hacker group came up with

the idea of a sort of reverse GPS that uses a distributed network of

low-cost ground stations that can be bought or built by individuals.

Supposedly, these stations would be able to pinpoint satellites at any

given time while improving the transmission of data from the satellites

to Earth.

The plan isn't without limitations.

For one thing, low orbit satellites don't stay in a single place. And

any country could go to the trouble of disabling them. At the same time,

outer space isn’t actually governed by the countries over which it

floats.

The scheme

discussed by hackers follows the introduction of the controversial Stop

Online Piracy Act (SOPA) in the United States, which many believe to be

a threat to online freedom.

As PC World's Tony Bradley put it, the bill is

a combination of an overzealous drive to fight Internet piracy, with

elected representatives who don't know the difference between DNS, IM,

and MP3. In short, SOPA is a "draconian legislation that far exceeds its intended scope, and threatens the Constitutional rights of law abiding citizens," he wrote.

And apparently those who typically don't follow the law -- hackers -- think there's something they can do about it.

Friday, December 23. 2011

Via TomsGuide

-----

Microsoft announced that it will be launching silent updates for IE9 in January.

Despite

the controversy of user control, Microsoft especially has a reason to

make this move to react to browser "update fatigue" that has resulted in

virtually "stale" IE users who won't upgrade their browsers unless they

upgrade their operating system as well. Despite

the controversy of user control, Microsoft especially has a reason to

make this move to react to browser "update fatigue" that has resulted in

virtually "stale" IE users who won't upgrade their browsers unless they

upgrade their operating system as well.

The most recent upgrade of Google's Chrome browser

shows just how well the silent update feature works. Within five days

of introduction, Chrome 15 market share fell from 24.06 percent to just

6.38 percent, while the share of Chrome 16 climbed from 0.35 percent to

19.81 percent, according to StatCounter.

Within five days, Google moved about 75 percent of its user base - more

than 150 million users - from one browser to another. Within three

days, Chrome 16 market share surpassed the market share of IE9

(currently at about 10.52 percent for this month), in four days it

surpassed Firefox 8 (currently at about 15.60 percent) and will be

passing IE8 today, StatCounter data indicates.

What makes this data so important is the fact that Google is

dominating HTML5 capability across all operating system platforms and

not just Windows 7, where IE9 has a slight advantage, according to

Microsoft (StatCounter does not break out data for browser share on

individual operating systems). IE9 was introduced on March 14 of 2011,

has captured only 10.52 percent market share and has followed a similar

slow upgrade pattern as its predecessors. For example, IE, which was

introduced in March 2009, reached its market share peak in the month IE9

was introduced - at 30.24 percent. Since then, the browser has declined

to only 22.17 percent and 57.52 percent of the IE user base still uses

IE8 today.

With the silent updates becoming available for IE8 and IE9,

Microsoft is likely to avoid another IE6 disaster with IE8. Even more

important for Microsoft is that those users who update to IE9 may be

less likely to switch to Chrome.

Tuesday, December 20. 2011

Via ZDNet

-----

owncloud.com

Everyone likes personal cloud services, like Apple’s iCloud, Google Music, and Dropbox.

But, many of aren’t crazy about the fact that our files, music, and

whatever are sitting on someone else’s servers without our control.

That’s where ownCloud comes in.

OwnCloud is an open-source cloud program. You use it to set up your

own cloud server for file-sharing, music-streaming, and calendar,

contact, and bookmark sharing project. As a server program it’s not that

easy to set up. OpenSUSE, with its Mirall installation program and desktop client makes it easier to set up your own personal ownCloud, but it’s still not a simple operation. That’s going to change.

According to ownCloud’s business crew,

“OwnCloud offers the ease-of-use and cost effectiveness of Dropbox and

box.net with a more secure, better managed offering that, because it’s

open source, offers greater flexibility and no vendor lock in. This

makes it perfect for business use. OwnCloud users can run file sync and

share services on their own hardware and storage or use popular public

hosting and storage offerings.” I’ve tried it myself and while setting

it up is still mildly painful, once up ownCloud works well.

OwnCloud enables universal access to files through a Web browser or WebDAV.

It also provides a platform to easily view and sync contacts, calendars

and bookmarks across all devices and enables basic editing right on the

Web. Programmers will be able to add features to it via its open

application programming interface (API).

OwnCloud is going to become an easy to run and use personal, private

cloud thanks to a new commercial company that’s going to take ownCloud

from interesting open-source project to end-user friendly program. This

new company will be headed by former SUSE/Novell executive Markus Rex.

Rex, who I’ve known for years and is both a business and technology

wizard, will serve as both CEO and CTO. Frank Karlitschek, founder of

the ownCloud project, will be staying.

To make this happen, this popular–350,000 users-program’s commercial

side is being funded by Boston-based General Catalyst, a high-tech.

venture capital firm. In the past, General Catalyst has helped fund such

companies as online travel company Kayak and online video platform leader Brightcove.

General Catalyst came on board, said John Simon, Managing Director at

General Catalyst in a statement, because, “With the explosion of

unstructured data in the enterprise and increasingly mobile (and

insecure) ways to access it, many companies have been forced to lock

down their data–sometimes forcing employees to find less than secure

means of access, or, if security is too restrictive, risk having all

that unavailable When we saw the ease-of-use, security and flexibility

of ownCloud, we were sold.”

“In a cloud-oriented world, ownCloud is the only tool based on a

ubiquitous open-source platform,” said Rex, in a statement. “This

differentiator enables businesses complete, transparent, compliant

control over their data and data storage costs, while also allowing

employees simple and easy data access from anywhere.”

As a Linux geek, I already liked ownCloud. At the company releases

mass-market ownCloud products and service in 2012, I think many of you

are going to like it as well. I’m really looking forward to seeing where

this program goes from here.

Wednesday, November 16. 2011

Via GeekOSystem

-----

A new installation at the Amsterdam Foam gallery by Erik Kessels takes a literal look at the digital deluge of photos online by printing out 24 hours worth of uploads to Flickr. The result is rooms filled with over 1,000,000 printed photos, piled up against the walls.

There’s a sense of waste and a maddening disorganization to it all, both of which are apparently intentional. According to Creative Review, Kessels said of his own project:

“We’re exposed to an overload of images nowadays,” says Kessels. “This glut is in large part the result of image-sharing sites like Flickr, networking sites like Facebook, and picture-based search engines. Their content mingles public and private, with the very personal being openly and un-selfconsciously displayed. By printing all the images uploaded in a 24-hour period, I visualise the feeling of drowning in representations of other peoples’ experiences.”

Humbling, and certainly thought provoking, Kessel’s work challenges the notion that everything can and should be shared, which has become fundamental to the modern web. Then again, perhaps it’s only wasteful and overwhelming when you print all the pictures and divorce them from their original context.

Tuesday, November 08. 2011

Via Recursivity.blog()

-----

At the beginning of last week, I launched GreedAndFearIndex

- a SaaS platform that automatically reads thousands of financial news

articles daily to deduce what companies are in the news and whether

financial sentiment is positive or negative.

It’s an app built largely on Scala, with MongoDB and Akka playing prominent roles to be able to deal with the massive amounts of data on a relatively small and cheap amount of hardware.

The app itself took about 4-5 weeks to build, although the underlying

technology in terms of web crawling, data cleansing/normalization, text

mining, sentiment analysis, name recognition, language grammar

comprehension such as subject-action-object resolution and the

underlying “God”-algorithm that underpins it all took considerably

longer to get right.

Doing it all was not only lots of late nights of coding, but also

reading more academic papers than I ever did at university, not only on

machine learning but also on neuroscience and research on the human

neocortex.

What I am getting at is that financial news and sentiment analysis

might be a good showcase and the beginning, but it is only part of a

bigger picture and problem to solve.

Unlocking True Machine Intelligence & Predictive Power

The

human brain is an amazing pattern matching & prediction machine -

in terms of being able to pull together, associate, correlate and

understand causation between disparate, seemingly unrelated strands of

information it is unsurpassed in nature and also makes much of what has

passed for “Artificial Intelligence” look like a joke.

However, the human brain is also severely limited: it is slow, it’s

immediate memory is small, we can famously only keep track of 7 (+-)

things at any one time unless we put considerable effort into it. We are

awash in amounts of data, information and noise that our brain is

evolutionary not yet adapted to deal with.

So the bigger picture of what I’m working on is not a SaaS sentiment

analysis tool, it is the first step of a bigger picture (which

admittedly, I may not solve, or not solve in my lifetime):

What if we could make machines match our own ability to find patterns

based on seemingly unrelated data, but far quicker and with far more

than 5-9 pieces of information at a time?

What if we could accurately predict the movements of financial

markets, the best price point for a product, the likelihood of natural

disasters, the spreading patterns of infectious diseases or even unlock

the secrets of solving disease and aging themselves?

The Enablers

I see a number of enablers that are making this future a real possibility within my lifetime:

- Advances in neuroscience: our understanding of

the human brain is getting better year by year, the fact that we can now

look inside the brain on a very small scale and that we are starting to

build a basic understanding of the neocortex will be the key to the

future of machine learning. Computer Science and Neuroscience must

intermingle to a higher degree to further both fields.

- Cloud Computing, parallelism & increased computing power:

Computing power is cheaper than ever with the cloud, the software to

take advantage of multi-core computers is finally starting to arrive and

Moore’s law is still advancing at ever (the latest generation of

MacBook Pro’s have roughly 2.5 times the performance of my barely 2 year

old MBP).

- “Big Data”: we have the data needed to both train

and apply the next generation of machine learning algorithms on

abundantly available to us. It is no longer locked away in the silos of

corporations or the pages of paper archives, it’s available and

accessible to anyone online.

- Crowdsourcing: There are two things that are very

time intensive when working with machine learning - training the

algorithms, and once in production, providing them with feedback (“on

the job training”) to continually improve and correct. The internet and

crowdsourcing lowers the barriers immensely. Digg, Reddit, Tweetmeme,

DZone are all early examples of simplistic crowdsourcing with little

learning, but where participants have a personal interest in

participating in the crowdsourcing. Combine that with machine learning

and you have a very powerful tool at your disposal.

Babysteps & The Perfect Storms

All

things considered, I think we are getting closer to the perfect storm of

taking machine intelligence out of the dark ages where they have

lingered far too long and quite literally into a brave new world where

one day we may struggle to distinguish machine from man and artificial

intelligence from biological intelligence.

It will be a road fraught with setbacks, trial and error where the

errors will seem insurmountable, but we’ll eventually get there one

babystep at a time.

I’m betting on it and the first natural step is

predictive analytics & adaptive systems able to automatically detect

and solve problems within well-defined domains.

Tuesday, November 01. 2011

Via LUKEW

By Luke Wroblewski

-----

As mobile devices have continued to evolve and spread,

so has the process of designing and developing Web sites and services

that work across a diverse range of devices. From responsive Web design

to future friendly thinking, here's how I've seen things evolve over the

past year and a half.

If

you haven't been keeping up with all the detailed conversations about

multi-device Web design, I hope this overview and set of resources can

quickly bring you up to speed. I'm only covering the last 18 months

because it has been a very exciting time with lots of new ideas and

voices. Prior to these developments, most multi-device Web design

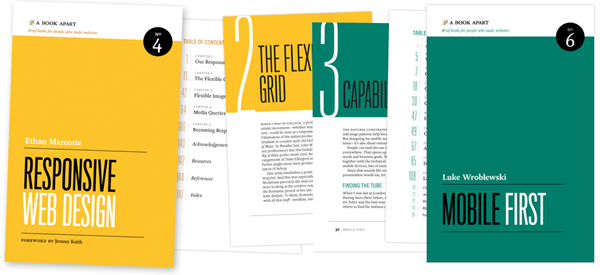

problems were solved with device detection and many still are. But the introduction of Responsive Web Design really stirred things up.

Responsive Web Design

Responsive

Web Design is a combination of fluid grids and images with media

queries to change layout based on the size of a device viewport. It uses

feature detection (mostly on the client) to determine available screen

capabilities and adapt accordingly. RWD is most useful for layout but

some have extended it to interactive elements as well (although this

often requires Javascript).

Responsive Web Design allows you to

use a single URL structure for a site, thereby removing the need for

separate mobile, tablet, desktop, etc. sites.

For a short overview read Ethan Marcotte's original article. For the full story read Ethan Marcotte's book. For a deeper dive into the philosophy behind RWD, read over Jeremy Keith's supporting arguments. To see a lot of responsive layout examples, browse around the mediaqueri.es site.

Challenges

Responsive

Web Design isn't a silver bullet for mobile Web experiences. Not only

does client-side adaptation require a careful approach, but it can also

be difficult to optimize source order, media, third-party widgets, URL

structure, and application design within a RWD solution.

Jason Grigsby has written up many of the reasons RWD doesn't instantly provide a mobile solution especially for images. I've documented (with concrete) examples why we opted for separate mobile and desktop templates in my last startup -a technique that's also employed by many Web companies like Facebook, Twitter, Google, etc. In short, separation tends to give greater ability to optimize specifically for mobile.

Mobile First Responsive Design

Mobile

First Responsive Design takes Responsive Web Design and flips the

process around to address some of the media query challenges outlined

above. Instead of starting with a desktop site, you start with the

mobile site and then progressively enhance to devices with larger

screens.

The Yiibu team was one of the first to apply this approach and wrote about how they did it. Jason Grigsby has put together an overview and analysis of where Mobile First Responsive Design is being applied. Brad Frost has a more high-level write-up of the approach. For a more in-depth technical discussion, check out the thread about mobile-first media queries on the HMTL5 boilerplate project.

Techniques

Many

folks are working through the challenges of designing Web sites for

multiple devices. This includes detailed overviews of how to set up

Mobile First Responsive Design markup, style sheet, and Javascript

solutions.

Ethan Marcotte has shared what it takes for teams of developers and designers to collaborate on a responsive workflow based on lessons learned on the Boston Globe redesign. Scott Jehl outlined what Javascript is doing (PDF) behind the scenes of the Globe redesign (hint: a lot!).

Stephanie Rieger assembled a detailed overview (PDF)

of a real-world mobile first responsive design solution for hundreds of

devices. Stephan Hay put together a pragmatic overview of designing with media queries.

Media

adaptation remains a big challenge for cross-device design. In

particular, images, videos, data tables, fonts, and many other "widgets"

need special care. Jason Grigsby has written up the situation with images and compiled many approaches for making images responsive. A number of solutions have also emerged for handling things like videos and data tables.

Server Side Components

Combining

Mobile First Responsive Design with server side component (not full

page) optimization is a way to extend client-side only solutions. With

this technique, a single set of page templates define an entire Web site

for all devices but key components within that site have device-class

specific implementations that are rendered server side. Done right, this

technique can deliver the best of both worlds without the challenges

that can hamper each.

I've put together an overview of how a Responsive Design + Server Side Components structure can work with concrete examples. Bryan Rieger has outlined an extensive set of thoughts on server-side adaption techniques and Lyza Gardner has a complete overview of how all these techniques can work together. After analyzing many client-side solutions to dynamic images, Jason Grigsby outlined why using a server-side solution is probably the most future friendly.

Future Thinking

If

all the considerations above seem like a lot to take in to create a Web

site, they are. We are in a period of transition and still figuring

things out. So expect to be learning and iterating a lot. That's both

exciting and daunting.

It also prepares you for what's ahead.

We've just begun to see the onset of cheap networked devices of every

shape and size. The zombie apocalypse of devices is coming. And while we can't know exactly what the future will bring, we can strive to design and develop in a future-friendly way so we are better prepared for what's next.

Resources

I

referenced lots of great multi-device Web design resources above. Here

they are in one list. Read them in order and rock the future Web!

Thursday, October 20. 2011

Via Troy Hunt

-----

In the beginning, there was the web and you accessed it though the

browser and all was good. Stuff didn’t download until you clicked on

something; you expected cookies to be tracking you and you always knew

if HTTPS was being used. In general, the casual observer had a pretty

good idea of what was going on between the client and the server.

Not

so in the mobile app world of today. These days, there’s this great big

fat abstraction layer on top of everything that keeps you pretty well

disconnected from what’s actually going on. Thing is, it’s a trivial

task to see what’s going on underneath, you just fire up an HTTP proxy

like Fiddler, sit back and watch the show.

Let

me introduce you to the seedy underbelly of the iPhone, a world where

not all is as it seems and certainly not all is as it should be.

There’s no such thing as too much information – or is there?

Here’s

a good place to start: conventional wisdom says that network efficiency

is always a good thing. Content downloads faster, content renders more

quickly and site owners minimise their bandwidth footprint. But even

more importantly in the mobile world, consumers are frequently limited

to fairly meagre download limits, at least by today’s broadband

standards. Bottom line: bandwidth optimisation in mobile apps is very important, far more so than in your browser-based web apps of today.

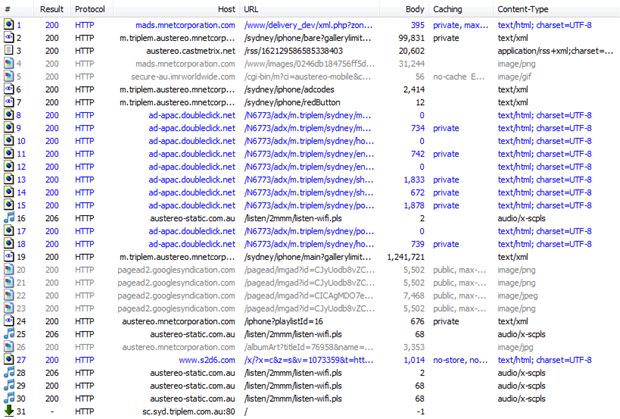

Let me give you an example of where this all starts to go wrong with mobile apps. Take the Triple M app, designed to give you a bunch of info about one of Australia’s premier radio stations and play it over 3G for you. Here’s how it looks:

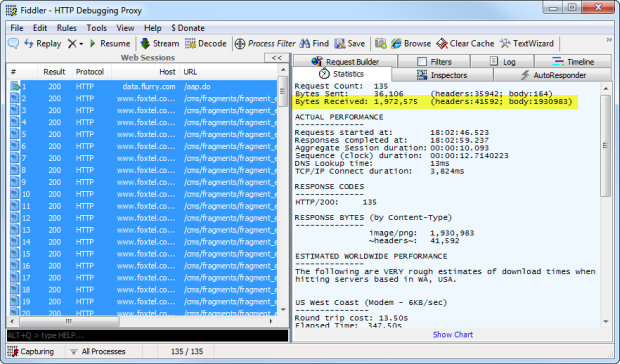

Where it all starts to go wrong is when you look at the requests being made just to load the app, you’re up to 1.3MB alone:

Why?

Well, part of the problem is that you’ve got no gzip compression.

Actually, that’s not entirely true, some of the small stuff is

compressed, just none of the big stuff. Go figure.

But there’s

also a lot of redundancy. For example, on the app above you can see the

first article titled “Manly Sea Eagles’ 2013 Coach…” and this is

returned in request #2 as is the body of the story. So far, so good. But

jump down to request #19 – that massive 1.2MB one – and you get the

whole thing again. Valuable bandwidth right out the window there.

Now

of course this app is designed to stream audio so it’s never going to

be light on bandwidth (as my wife discovered when she hit her cap “just

by listening to the radio”), and of course some of the upfront load is

also to allow the app to respond instantaneously when you drill down to

stories. But the patterns above are just unnecessary; why send redundant

data in an uncompressed format?

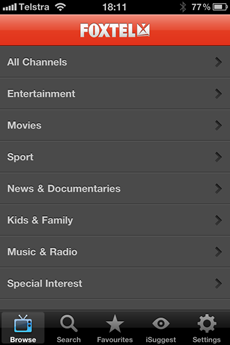

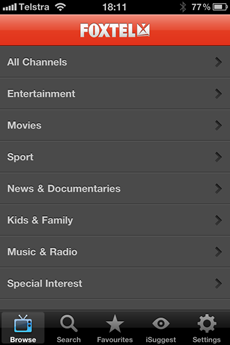

Here’s a dirty Foxtel secret;

what do you reckon it costs you in bandwidth to load the app you see

below? A few KB? Maybe a hundred KB to pull down a nice gzipped JSON

feed of all the channels? Maybe nothing because it will pull data on

demand when you actually do something?

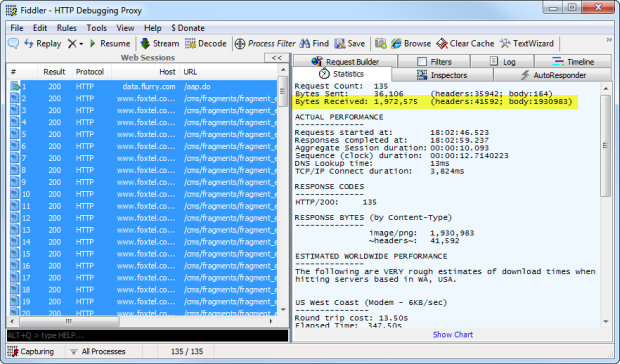

Guess again, you’ll be needing a couple of meg just to start the app:

Part

of the problem is shown under the Content-Type heading above; it’s

nearly all PNG files. Actually, it’s 134 PNG files. Why? I mean what on

earth could justify nearly 2 meg of PNGs just to open the app? Take a

look at this fella:

This is just one of the actual images at original size. And why is this humungous PNG required? To generate this screen:

Hmmm, not really a use case for a 425x243, 86KB PNG. Why? Probably because as we’ve seen before,

developers like to take something that’s already in existence and

repurpose it outside its intended context, and just as in that

aforementioned link, this can start causing all sorts of problems.

Unfortunately it makes for an unpleasant user experience as you sit

there waiting for things to load while it does unpleasant things to your

(possibly meagre) data allocation.

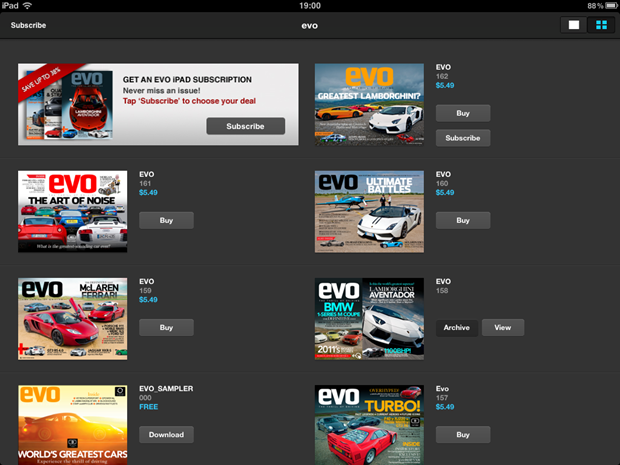

But we’re only just warming up. Let’s take a look at the very excellent, visually stunning EVO magazine on the iPad. The initial screen is just a list of editions like so:

Let’s

talk in real terms for a moment; the iPad resolution is 1024x768 or

what we used to think of as “high-res” not that long ago. The image

above has been resized down to about 60% of original but on the iPad,

each of those little magazine covers was originally 180x135 which even

saved as a high quality PNG, can be brought down well under 50KB apiece.

However:

Thirteen and a half meg?! Where an earth did all that go?! Here’s a clue:

Go on, click it, I’ll wait.

Yep, 1.6MB.

4,267 pixels wide, 3,200 pixels high.

Why?

I have no idea, perhaps the art department just sent over the originals

intended for print and it was “easy” to dump that into the app. All you

can do in the app without purchasing the magazine (for which you expect

a big bandwidth hit), is just look at those thumbnails. So there you

go, 13.5MB chewed out of your 3G plan before you even do anything just

because images are being loaded with 560 times more pixels than they

need.

The secret stalker within

Apps you install

directly onto the OS have always been a bit of a black box. I mean it’s

not the same “view source” world that we've become so accustomed to with

the web over the last decade and a half where it’s pretty easy to see

what’s going on under the covers (at least under the browser covers).

With the volume and affordability of iOS apps out there, we’re now well

and truly back in the world of rich clients which performs all sorts of

things out of your immediate view, and some of them are rather

interesting.

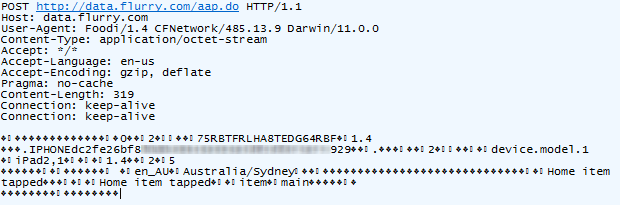

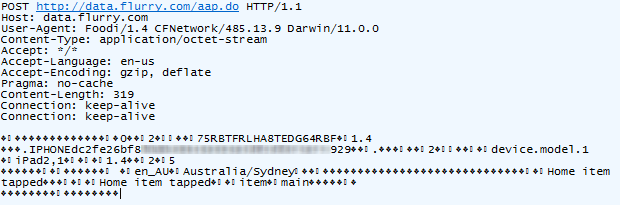

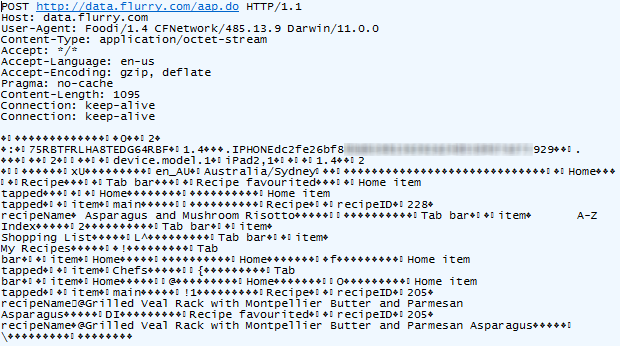

Let’s take cooking as an example; the ABC Foodi app is a beautiful piece of work. I mean it really is visually delightful and a great example of what can be done on the iPad:

But

it’s spying on you and phoning home at every opportunity. For example,

you can’t just open the app and start browsing around in the privacy of

your own home, oh no, this activity is immediately reported (I’m

deliberately obfuscating part of the device ID):

Ok, that’s a mostly innocuous, but it looks like my location – or at least my city

– is also in there so obviously my movements are traceable. Sure, far

greater location fidelity can usually be derived from the IP address

anyway (and they may well be doing this on the back end), but it’s

interesting to see this explicitly captured.

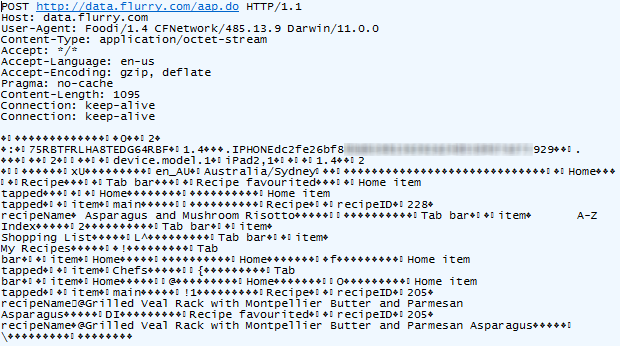

Let’s try something else, say, favouriting a dish:

Not the asparagus!!! Looks like you can’t even create a favourite

without your every move being tracked. But it’s actually even more than

that; I just located a nice chocolate cake and emailed it to myself

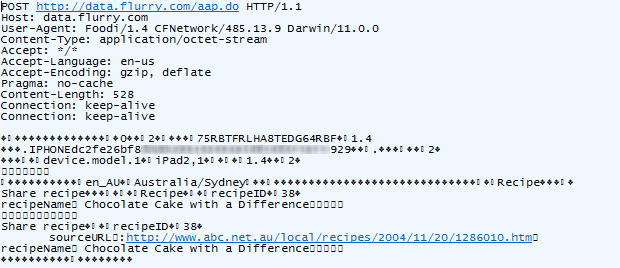

using the “share” feature. Here’s what happened next:

The app tracks your every move and sends it back to base in small batches. In this case, that base is at flurry.com, and who are these guys? Well it’s quite clear from their website:

Flurry powers acquisition, engagement and monetization for the new

mobile app economy, using powerful data and applying game-changing

insight.

Funny, I knew I’d seen that somewhere before!

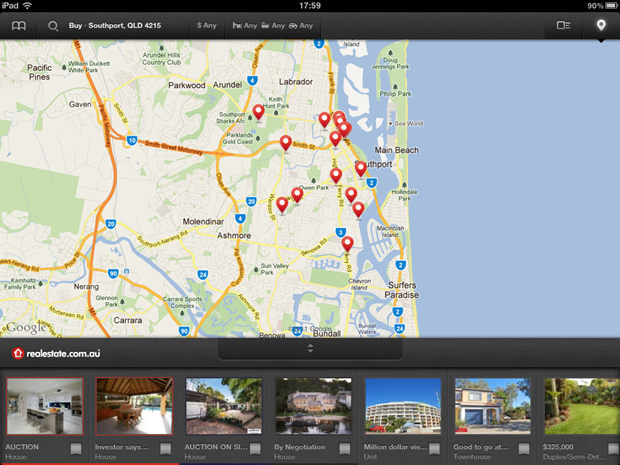

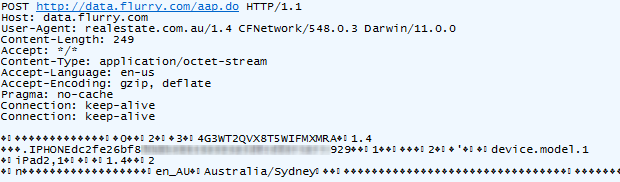

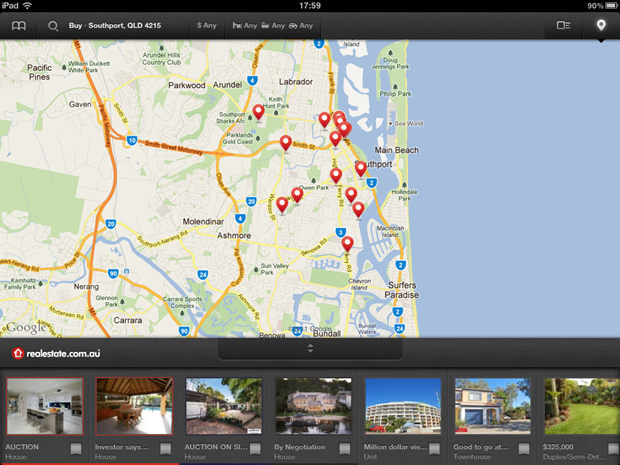

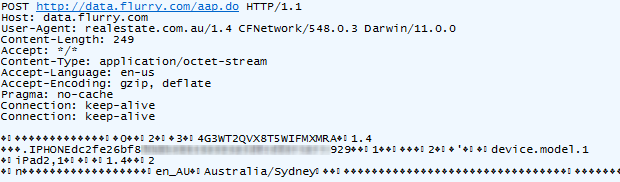

Here’s something I’ve seen before: POST requests to data.flurry.com. It’s perfectly obvious when you use the realestate.com.au iPad app:

Uh, except it isn’t really obvious, it’s another sneaky backdoor to

help power acquisitions and monetise the new app economy with

game-changing insight. Here’s what it’s doing and clearly it has nothing

to do with finding real estate:

Hang on – does that partially obfuscated device ID look a bit

familiar?! Yes it does, so Flurry now knows both what I’m cooking and

which kitchen I’d like to be cooking it in. And in case you missed it,

the first request when the Foxtel app was loaded earlier on was also to

data.flurry.com. Oh, and how about those travel plans with TripIt – the cheerful blue sky looks innocuous enough:

But under the covers:

Suddenly monetisation with powerful data starts to make more sense.

But this is no different to a tracking cookie on a website, right?

Well, yes and no. Firstly, tracking cookies can be disabled. If you

don’t like ‘em, turn ‘em off. Not so the iOS app as everything is hidden

under the covers. Actually, it’s in much the same way as a classic app

that gets installed on any OS although in the desktop world, we’ve

become accustomed to being asked if we’re happy to share our activities “for product improvement purposes”.

These privacy issues simply come down to this: what does the user

expect? Do they expect to be tracked when browsing a cook book installed

on their local device? And do they expect this activity to be

cross-referenceable with the use of other apparently unrelated apps? I

highly doubt it, and therein lays the problem.

Security? We don’t need no stinkin’ security!

Many people are touting mobile apps as the new security frontier, and rightly so IMHO. When I wrote about Westfield

last month I observed how easy it was for the security of services used

by apps to be all but ignored as they don’t have the same direct public

exposure as the apps themselves. A browse through my iPhone collection

supports the theory that mobile app security is taking a back seat to

their browser-based peers.

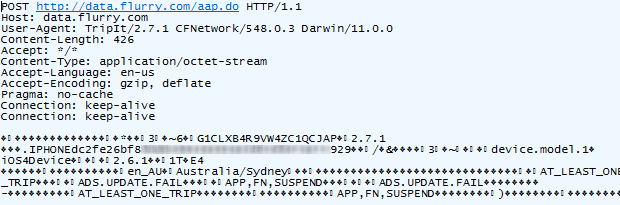

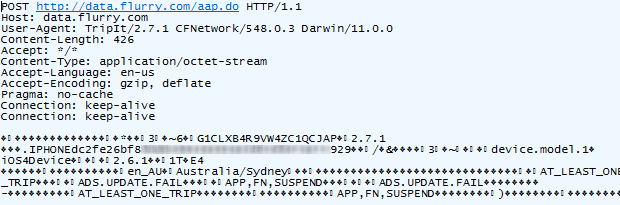

Let’s take the Facebook app. Now to be honest, this one surprised me a

little. Back in Jan of this year, Facebook allowed opt-in SSL or in or in the words of The Register,

this is also known as “Turn it on yourself...bitch”. Harsh, but fair –

this valuable security feature was going to be overlooked by many, many

people. “SS what?”

Unfortunately, the very security that is offered to browser-based

Facebook users is not accessible on the iPhone client. You know, the

device which is most likely to be carried around to wireless hotspots

where insecure communications are most vulnerable. Here’s what we’re

left with:

This is especially surprising as that little bit of packet-sniffing magic that is Firesheep was no doubt the impetus for even having the choice of enable SSL. But here we are, one year on and apparently Facebook is none the wiser.

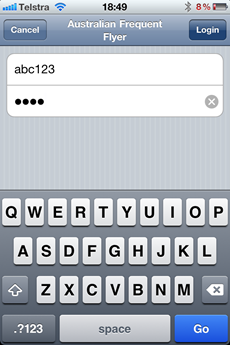

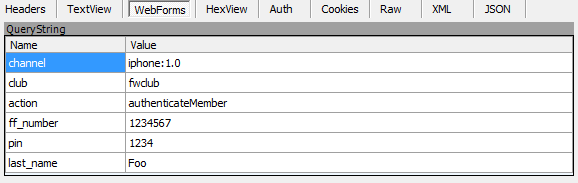

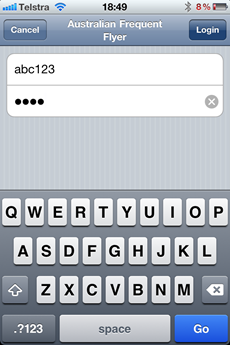

Let’s change pace a little and take a look inside the Australian Frequent Flyer app.

This is “Australia's leading Frequent Flyer Community” and clearly a

community of such standing would take security very, very seriously.

Let’s login:

As with the Qantas example above, you have absolutely no idea

how these credentials are being transported across the wire. Well,

unless you have Fiddler then it’s perfectly clear they’re just being

posted to this HTTP address: http://www.australianfrequentflyer.com.au/mobiquo-withsponsor/mobiquo.php

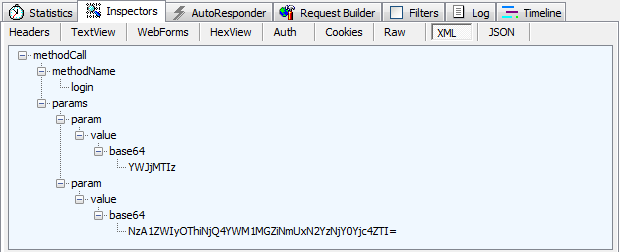

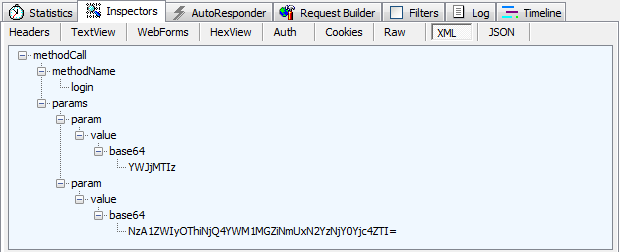

And of course it’s all very simple to see the post body which in this case, is little chunk of XML:

Bugger, clearly this is going to tack some sort of hacker mastermind

to figure out what these credentials are! Except it doesn’t, it just

takes a bit of Googling. There are two parameters and they’re both Base64

encoded. How do we know this? Well, firstly it tells us in one of the

XML nodes and secondly, it’s a pretty common practice to encode data in

this fashion before shipping it around the place (refer the link in this

paragraph for the ins and outs of why this is useful).

So we have a value of “YWJjMTIz” and because Base64 is designed to allow for simple encoding and decoding (unlike a hash which is a one way process), you can simply convert this back to plain text using any old Base64 decoder. Here’s an online one right here and it tells us this value is “abc123”. Well how about that!

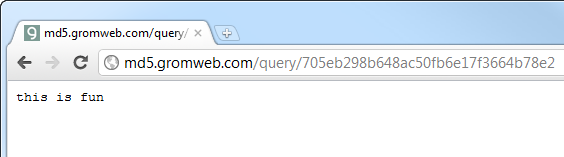

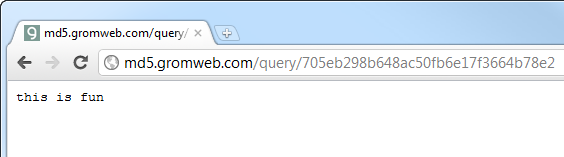

The next value is “NzA1ZWIyOThiNjQ4YWM1MGZiNmUxN2YzNjY0Yjc4ZTI=”

which is obviously going to be the password. Once we Base64 decode this,

we have something a little different:

“705eb298b648ac50fb6e17f3664b78e2?”. Wow, that’s an impressive password!

Except that as we well know, people choosing impressive passwords is a

very rare occurrence indeed so in all likelihood this is a hash. Now

there are all sorts of nifty brute forcing tools out there but when it

comes to finding the plain text of a hash, nothing beats Google. And

what does the first result Google gives us? Take a look:

I told you that was a useless password

dammit! You see the thing is, there’s a smorgasbord of hashes and their

plain text equivalents just sitting out there waiting to be searched

which is why it’s always important to apply a cryptographically random salt to the plain text before hashing. A straight hash of most user-created passwords – which we know are generally crap – can very frequently be resolved to plain text in about 5 seconds via Google.

The bottom line is that this is almost no better than plain

text credentials and it’s definitely no alternative to transport layer

security. I’ve had numerous conversations with developers before trying

to explain the difference between encoding, hashing and encryption and

if I were to take a guess, someone behind this thinks they’re

“encrypted” the password. Not quite.

But is this really any different to logging onto the Australian Frequent Flyer website

which also (sadly) has no HTTPS? Yes, what’s different is that firstly,

on the website it’s clear to see that no HTTPS has been employed, or at

least not properly employed (the login form isn’t loaded over HTTPS). I

can then make a judgement call on the trust and credibility of the

site; I can’t do that with the app. But secondly, this is a mobile app – a mobile travel

app – you know, the kind of thing that’s going to be used while roaming

around wireless hotspots vulnerable to eavesdropping. It’s more

important than ever to protect sensitive communications and the app

totally misses the mark on that one.

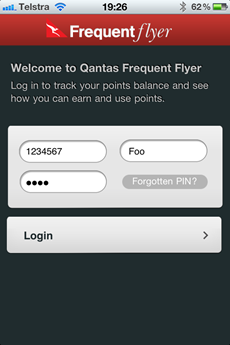

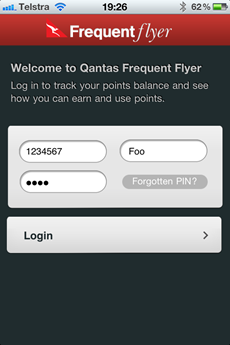

While we’re talking travel apps, let’s take another look at Qantas. I’ve written about these guys before, in fact they made my Who’s who of bad password practices list earlier in the year. Although they made a small attempt at implementing security by posting to SSL, as I’ve said before, SSL is not about encryption and loading login forms over HTTP is a big no-no. But at least they made some attempt.

So why does the iPhone app just abandon all hope and do everything

without any transport layer encryption at all? Even worse, it just

whacks all the credentials in a query string. So it’s a GET request.

Over HTTP. Here’s how it goes down:

This is a fairly typical login screen. So far, so good, although of

course there’s no way to know how the credentials are handled. At least,

that is, until you take a look at the underlying request that’s made.

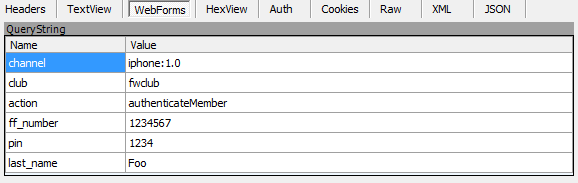

Here’s those query string parameters:

Or put simply, it’s just a request to this URL: http://wsl.qantas.com.au/qffservices/?channel=iphone:1.0&club=fwclub&action=authenticateMember&ff_number=1234567&pin=1234&last_name=Foo

Go ahead, give it a run. What I find most odd about this situation is that clearly a conscious decision was made to apply some

degree of transport encryption to the browser app, why not the mobile

app? Why must the mobile client remain the poor cousin when it comes to

basic security measures? It’s not it’s going to be used inside any

highly vulnerable public wifi hotspots like, oh I don’t know, an airport

lounge, right?

Apps continue to get security wrong. Not just iOS apps mind you,

there are plenty of problems on Android and once anyone buys a Windows

Mobile 7 device we’ll see plenty of problems on those too. It’s just too

easy to get wrong and when you do get it wrong, it’s further out of

sight than your traditional web app. Fortunately the good folks at OWASP are doing some great work around a set of Top 10 mobile security risks so there’s certainly acknowledge of the issues and work being done to help developers get mobile security right.

Summary

What I discovered about is the result of casually observing some of

only a few dozen apps I have installed on my iOS devices. There are

about half a million more out there and if I were a betting man, my

money would be the issues above only being the tip of the iceberg.

You can kind of get how these issues happen; every man and his dog

appears to be building mobile apps these days and a low bar to entry is

always going to introduce some quality issues. But Facebook? And Qantas?

What are their excuses for making security take a back seat?

Developers can get away with more sloppy or sneaky practices in mobile apps as the execution is usually further out of view.

You can smack the user with a massive asynchronous download as their

attention is on other content; but it kills their data plan.

You can track their moves across entirely autonomous apps; but it erodes their privacy.

And most importantly to me, you can jeopardise their security without

their noticing; but the potential ramifications are severe.

We live in interesting times.

Via ars technica

By Matthew Lasar

-----

When Tim Berners-Lee arrived at CERN,

Geneva's celebrated European Particle Physics Laboratory in 1980, the

enterprise had hired him to upgrade the control systems for several of

the lab's particle accelerators. But almost immediately, the inventor of

the modern webpage noticed a problem: thousands of people were floating

in and out of the famous research institute, many of them temporary

hires.

"The big challenge for contract programmers was to try to

understand the systems, both human and computer, that ran this fantastic

playground," Berners-Lee later wrote. "Much of the crucial information

existed only in people's heads."

So in his spare time, he wrote up some software to address this

shortfall: a little program he named Enquire. It allowed users to create

"nodes"—information-packed index card-style pages that linked to other

pages. Unfortunately, the PASCAL application ran on CERN's proprietary

operating system. "The few people who saw it thought it was a nice idea,

but no one used it. Eventually, the disk was lost, and with it, the

original Enquire."

Some years later Berners-Lee returned to CERN.

This time he relaunched his "World Wide Web" project in a way that

would more likely secure its success. On August 6, 1991, he published an

explanation of WWW on the alt.hypertext usegroup. He also released a code library, libWWW, which he wrote with his assistant

Jean-François Groff. The library allowed participants to create their own Web browsers.

"Their efforts—over half a dozen browsers within 18 months—saved

the poorly funded Web project and kicked off the Web development

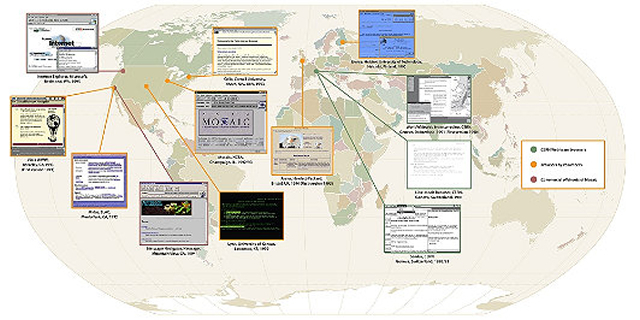

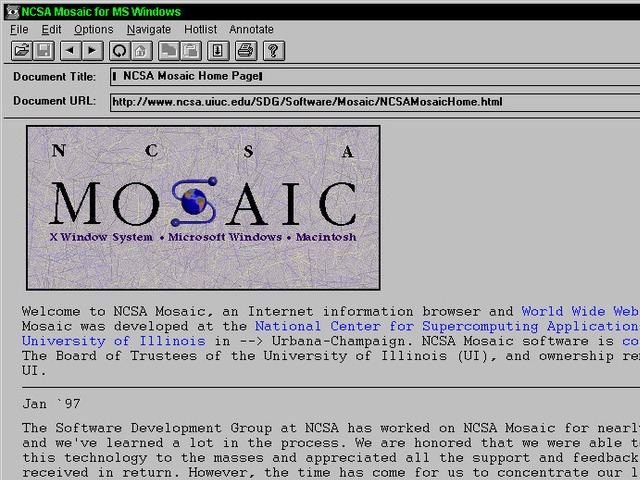

community," notes a commemoration of this project by the Computer History Museum in Mountain View, California. The best known early browser was Mosaic, produced by Marc Andreesen and Eric Bina at the

National Center for Supercomputing Applications (NCSA).

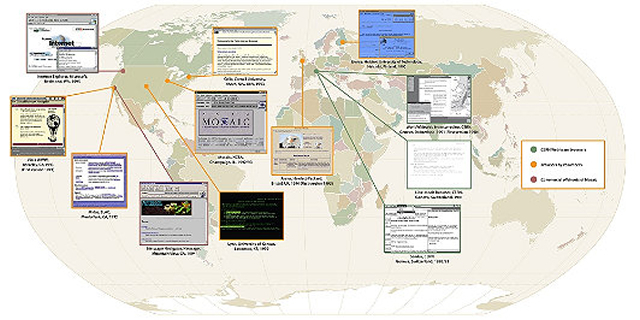

Mosaic was soon spun into Netscape, but it was not the first browser. A map

assembled by the Museum offers a sense of the global scope of the early

project. What's striking about these early applications is that they

had already worked out many of the features we associate with later

browsers. Here is a tour of World Wide Web viewing applications, before

they became famous.

The CERN browsers

Tim Berners-Lee's original 1990 WorldWideWeb browser was both a

browser and an editor. That was the direction he hoped future browser

projects would go. CERN has put together a reproduction

of its formative content. As you can see in the screenshot below, by

1993 it offered many of the characteristics of modern browsers.

The software's biggest limitation was that it ran on the NeXTStep

operating system. But shortly after WorldWideWeb, CERN mathematics

intern Nicola Pellow wrote a line mode browser that could function

elsewhere, including on UNIX and MS-DOS networks. Thus "anyone could

access the web," explains Internet historian Bill Stewart, "at that point consisting primarily of the CERN phone book."

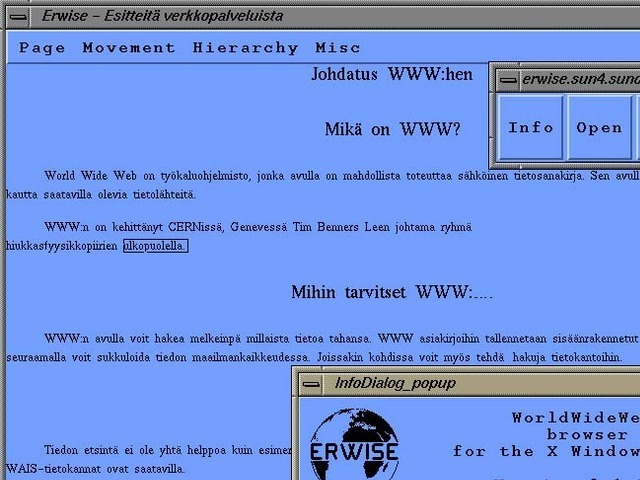

Erwise

Erwise came next. It was written by four Finnish college students in 1991 and released in 1992. Erwise is credited as the first browser that offered a graphical interface. It could also search for words on pages.

Berners-Lee wrote a review

of Erwise in 1992. He noted its ability to handle various fonts,

underline hyperlinks, let users double-click them to jump to other

pages, and to host multiple windows.

"Erwise looks very smart," he declared, albeit puzzling over a

"strange box which is around one word in the document, a little like a

selection box or a button. It is neither of these—perhaps a handle for

something to come."

So why didn't the application take off? In a later interview, one of

Erwise's creators noted that Finland was mired in a deep recession at

the time. The country was devoid of angel investors.

"We could not have created a business around Erwise in Finland then," he explained.

"The only way we could have made money would have been to continue our

developing it so that Netscape might have finally bought us. Still, the

big thing is, we could have reached the initial Mosaic level with

relatively small extra work. We should have just finalized Erwise and

published it on several platforms."

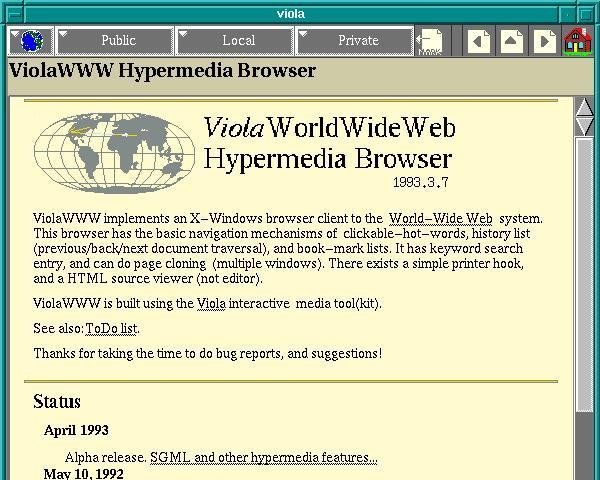

ViolaWWW

ViolaWWW was released in April

of 1992. Developer

Pei-Yuan Wei wrote it at the University of California at Berkeley via

his UNIX-based Viola programming/scripting language. No, Pei Wei didn't

play the viola, "it just happened to make a snappy abbreviation" of

Visually Interactive Object-oriented Language and Application, write

James Gillies and Robert Cailliau in their history of the World Wide

Web.

Wei appears to have gotten his inspiration from the early Mac

program HyperCard, which allowed users to build matrices of formatted

hyper-linked documents. "HyperCard was very compelling back then, you

know graphically, this hyperlink thing," he later recalled. But the

program was "not very global and it only worked on Mac. And I didn't

even have a Mac."

But he did have access to UNIX X-terminals at UC Berkeley's

Experimental Computing Facility. "I got a HyperCard manual and looked at

it and just basically took the concepts and implemented them in

X-windows." Except, most impressively, he created them via his Viola

language.

One of the most significant and innovative features of ViolaWWW was

that it allowed a developer to embed scripts and "applets" in the

browser page. This anticipated the huge wave of Java-based applet

features that appeared on websites in the later 1990s.

In his documentation, Wei also noted various "misfeatures" of ViolaWWW, most notably its inaccessibility to PCs.

- Not ported to PC platform.

- HTML Printing is not supported.

- HTTP is not interruptable, and not multi-threaded.

- Proxy is still not supported.

- Language interpreter is not multi-threaded.

"The author is working on these problems... etc," Wei acknowledged

at the time. Still, "a very neat browser useable by anyone: very

intuitive and straightforward," Berners-Lee concluded in his review

of ViolaWWW. "The extra features are probably more than 90% of 'real'

users will actually use, but just the things which an experienced user

will want."

Midas and Samba

In September of 1991, Stanford Linear Accelerator physicist Paul

Kunz visited CERN. He returned with the code necessary to set up the

first North American Web server at SLAC. "I've just been to CERN," Kunz

told SLAC's head librarian Louise Addis, "and I found this wonderful

thing that a guy named Tim Berners-Lee is developing. It's just the

ticket for what you guys need for your database."

Addis agreed. The site's head librarian put the research center's

key database over the Web. Fermilab physicists set up a server shortly

after.

Then over the summer of 1992 SLAC physicist Tony Johnson wrote Midas, a graphical browser for the Stanford physics community. The big draw

for Midas users was that it could display postscript documents, favored

by physicists because of their ability to accurately reproduce

paper-scribbled scientific formulas.

"With these key advances, Web use surged in the high energy physics community," concluded a 2001 Department of Energy assessment of SLAC's progress.

Meanwhile, CERN associates Pellow and Robert Cailliau released the

first Web browser for the Macintosh computer. Gillies and Cailliau

narrate Samba's development.

For Pellow, progress in getting Samba up and running was slow,

because after every few links it would crash and nobody could work out

why. "The Mac browser was still in a buggy form,' lamented Tim

[Berners-Lee] in a September '92 newsletter. 'A W3 T-shirt to the first

one to bring it up and running!" he announced. The T shirt duly went to

Fermilab's John Streets, who tracked down the bug, allowing Nicola

Pellow to get on with producing a usable version of Samba.

Samba "was an attempt to port the design of the original WWW

browser, which I wrote on the NeXT machine, onto the Mac platform,"

Berners-Lee adds,

"but was not ready before NCSA [National Center for Supercomputing

Applications] brought out the Mac version of Mosaic, which eclipsed it."

Mosaic

Mosaic was "the spark that lit the Web's explosive growth in 1993,"

historians Gillies and Cailliau explain. But it could not have been

developed without forerunners and the NCSA's University of Illinois

offices, which were equipped with the best UNIX machines. NCSA also had

Dr. Ping Fu, a PhD computer graphics wizard who had worked on morphing

effects for Terminator 2. She had recently hired an assistant named Marc Andreesen.

"How about you write a graphical interface for a browser?" Fu

suggested to her new helper. "What's a browser?" Andreesen asked. But

several days later NCSA staff member Dave Thompson gave a demonstration

of Nicola Pellow's early line browser and Pei Wei's ViolaWWW. And just

before this demo, Tony Johnson posted the first public release of Midas.

The latter software set Andreesen back on his heels. "Superb!

Fantastic! Stunning! Impressive as hell!" he wrote to Johnson. Then

Andreesen got NCSA Unix expert Eric Bina to help him write their own

X-browser.

Mosaic offered many new web features, including support for video

clips, sound, forms, bookmarks, and history files. "The striking thing

about it was that unlike all the earlier X-browsers, it was all

contained in a single file," Gillies and Cailliau explain:

Installing it was as simple as pulling it across the network and

running it. Later on Mosaic would rise to fame because of the

<IMG> tag that allowed you to put images inline for the first

time, rather than having them pop up in a different window like Tim's

original NeXT browser did. That made it easier for people to make Web

pages look more like the familiar print media they were use to; not

everyone's idea of a brave new world, but it certainly got Mosaic

noticed.

"What I think Marc did really well," Tim Berners-Lee later wrote,

"is make it very easy to install, and he supported it by fixing bugs via

e-mail any time night or day. You'd send him a bug report and then two

hours later he'd mail you a fix."

Perhaps Mosaic's biggest breakthrough, in retrospect, was that it

was a cross-platform browser. "By the power vested in me by nobody in

particular, X-Mosaic is hereby released," Andreeson proudly declared on

the www-talk group on January 23, 1993. Aleks Totic unveiled his Mac

version a few months later. A PC version came from the hands of Chris

Wilson and Jon Mittelhauser.

The Mosaic browser was based on Viola and Midas, the Computer History museum's exhibit notes.

And it used the CERN code library. "But unlike others, it was reliable,

could be installed by amateurs, and soon added colorful graphics within

Web pages instead of as separate windows."

A guy from Japan

But Mosaic wasn't the only innovation to show up on the scene around that same time. University of Kansas student Lou Montulli

adapted a campus information hypertext browser for the Internet and

Web. It launched in March, 1993. "Lynx quickly became the preferred web

browser for character mode terminals without graphics, and remains in

use today," historian Stewart explains.

And at Cornell University's Law School, Tom Bruce was writing a Web

application for PCs, "since those were the computers that lawyers tended

to use," Gillies and Cailliau observe. Bruce unveiled his browser Cello on June 8, 1993, "which was soon being downloaded at a rate of 500 copies a day."

Six months later, Andreesen was in Mountain View, California, his

team poised to release Mosaic Netscape on October 13, 1994. He, Totic,

and Mittelhauser nervously put the application up on an FTP server. The

latter developer later recalled the moment. "And it was five minutes and

we're sitting there. Nothing has happened. And all of a sudden the

first download happened. It was a guy from Japan. We swore we'd send him

a T shirt!"

But what this complex story reminds is that is that no innovation is

created by one person. The Web browser was propelled into our lives by

visionaries around the world, people who often didn't quite understand

what they were doing, but were motivated by curiosity, practical

concerns, or even playfulness. Their separate sparks of genius kept the

process going. So did Tim Berners-Lee's insistence that the project stay

collaborative and, most importantly, open.

"The early days of the web were very hand-to-mouth," he writes. "So many things to do, such a delicate flame to keep alive."

Further reading

- Tim Berners-Lee, Weaving the Web: The Original Design and Ultimate Destiny of the World Wide Web

- James Gillies and R. Cailliau, How the web was born

- Bill Stewart, Living Internet (www.livinginternet.com)

|

Despite

the controversy of user control, Microsoft especially has a reason to

make this move to react to browser "update fatigue" that has resulted in

virtually "stale" IE users who won't upgrade their browsers unless they

upgrade their operating system as well.

Despite

the controversy of user control, Microsoft especially has a reason to

make this move to react to browser "update fatigue" that has resulted in

virtually "stale" IE users who won't upgrade their browsers unless they

upgrade their operating system as well.