Tuesday, July 12. 2011

Kindle Direct Publishing (KDP) program

Via keirthomas.com (by Keir Thomas)

-----

There’s been a lot of fuss recently about $0.99 Kindle eBooks.

Essentially, ‘publishers’ are buying-in cheap content and packaging it into eBooks that they sell through the Kindle Direct Publishing (KDP) program for $0.99. KDP lets anybody create and list a Kindle eBook for free. All you need is an Amazon account.

Amazon check all eBooks for quality but that’s to make sure things like layout are acceptable. They don’t really care what the content is, provided it fits within acceptability guidelines. Quite rightly too. Who are they to be censors or arbiters? And how could they even find time to read each book?

Giving it a try

As part of my regular but foolish publishing experiments, I put some $0.99 computer books on sale through KDP in March 2011: Working at the Ubuntu Command-Line, and Managing the Ubuntu Software System. Mine contain content written by myself, however, and not bought-in or culled from the web.

So does $0.99 publishing work, at least when it comes to computer books?

Yes and no. Did you really expect a straight answer?

Do it right* and you’ll sell thousands of copies. Working at the Ubuntu Command-Line is usually glued to the top of the Amazon best-seller charts for Linux and also for Operating Systems.

Something I’ve created all on my lonesome is besting efforts by publishing titans like O’Reilly, Prentice Hall, Wiley, and others.

Admittedly, a new release of Ubuntu came along in April. That helped. However, my Excel charts show sales of my eBooks ramping up, rather than tailing off now the hype about that release is over.

(It’s tempting to think of publishing a book as like exploding a bomb, in that sales die down after an initial peak. The truth is it’s more like a crescendo as the book slowly builds up a reputation, along with those all-important Amazon reviews.)

Making money

But... You’re not going to make a huge amount of money. The royalty on a $0.99 book is $0.35. If you sell 1,000 copies, you’re going to make $350. The people who make a fortune at this are turning over 500,000 copies of their $0.99 eBooks.
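To put those numbers side by side, here is a minimal sketch of the arithmetic. The $0.35 per-copy royalty and the two sales volumes come from the paragraph above; the function name is made up for illustration.

```python
# Per-copy royalty on a $0.99 eBook, as quoted in the article.
ROYALTY_PER_COPY = 0.35


def royalty_income(copies_sold: int, royalty_per_copy: float = ROYALTY_PER_COPY) -> float:
    """Return total royalty income in dollars, rounded to the nearest cent."""
    return round(copies_sold * royalty_per_copy, 2)


if __name__ == "__main__":
    # 1,000 copies is the article's modest case, 500,000 the "fortune" case.
    for copies in (1_000, 500_000):
        print(f"{copies:>7,} copies -> ${royalty_income(copies):,.2f}")
```

At 500,000 copies the same royalty adds up to $175,000, which is why volume is the whole game at this price point.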

I hear that Amazon frowns on the whole $0.99 eBook thing, and that critics are calling for the zero entry-barrier of KDP to be removed. They suggest Amazon charge a listing fee for each book — perhaps $10 or $20. This would limit the scams mentioned above.

This would be a huge shame. Through things like KDP and CreateSpace, Amazon is making proper publishing truly democratic and accessible to all. To get a Kindle eBook on sale, all you need is a computer with a word processor. That’s it. You don’t need up-front fees. You don’t need to be a publisher. You don’t need technical knowledge.

If you don’t believe that’s mind-blowing then, well, I feel sorry for you.

Check your sources

If you hear people campaigning against KDP, check out their background. Are they authors who are listed with major publishers? Are they publishers themselves? It’s in the interest of anybody involved in traditional publishing to stop things like KDP in their tracks.

KDP is a dangerous thing. That’s why it’s so good. I’d like to see more companies offer services like KDP. Can you imagine what it’d be like if a major publisher embraced a similar concept?

The only bad thing from my perspective is that sales of my self-published Ubuntu book have dropped like a stone. This corroborates my theory that there’s only a set amount of users and money within the Ubuntu ecosystem. But that’s a topic for another time.

Incidentally, if you’re interested in computer eBooks, check out WalrusInk.

* ‘Doing it right’ includes having high-quality content, correctly laid-out in the book, and putting together a professional cover. Having a good author background helps too. If the cover looks like it was designed in MS Paint and the author neglects to provide a biography in the blurb, you should know what to expect.

Early Glimpse of Firefox 8 Shows Vast Performance Increases

Via OStatic

-----

Mozilla has faced some backlash from IT administrators for its move to a rapid release cycle with the Firefox browser, but you have to hand it to Mozilla for staying the course. For years, Firefox saw upgrades arrive far less frequently than they arrived for competing browsers such as Google Chrome. Since announcing its new rapid release cycle earlier this year, Mozilla has released versions 4 and 5 of Firefox, and steadily gotten better at ironing out short-term kinks, most of which have had to do with extensions causing problems. Now, Firefox 8 is already being seen in nightly builds, although it's not released in final form yet, and early reports show it to be faster than current versions of Chrome across many benchmarks.

Firefox 7 and 8 run a new graphics engine called Azure, which you can read more about here. And, in broad benchmark tests, ExtremeTech reports the following results:

"Firefox 8, which only just appeared on the Nightly channel, is already 20% faster than Firefox 5 in almost every metric: start up, session restore, first paint, JavaScript execution, and even 2D canvas and 3D WebGL rendering. The memory footprint of Firefox 7 (and thus 8) has also been drastically reduced, along with much-needed improvements to garbage collection."

Mozilla has already done extensive work on how memory is handled in Firefox 7, and these issues are likely to be addressed further with release 8. At this point, Chrome is Firefox's biggest competition, and ExtremeTech also reports:

"While comparison with other browsers has become a little passe in recent months — they’re all so damn similar! — it’s worth noting that Firefox 8 is as fast or faster than the latest Dev Channel build of Chrome 14. Chrome’s WebGL implementation is still faster, but with Azure, Firefox’s 2D performance is actually better than Chrome. JavaScript performance is also virtually identical."

I use Firefox and Chrome, but my primary reason for using Chrome is that it has been faster. With the early glimpse of Firefox 8, the performance gap stands a chance of being closed, and it looks like these two open source browsers have never competed more closely than they do now.

Monday, July 11. 2011

Five reasons Android can fail

-----

I use Android every day both on my Droid II smartphone and my Barnes & Noble Nook Color e-reader/tablet. I like it a lot. But, I also have concerns about how it’s being developed and being presented to customers.

Before jumping into why I think Android faces trouble in the long run, let me mention one problem I don’t see as standing in Android’s way: the Oracle lawsuits. Yes, Oracle claims that Google owes them billions in damages for using unlicensed Java technology in Android’s core Dalvik virtual machine.

I follow patent lawsuits and here’s what’s going to happen with this one. It will take years and millions of dollars in legal fees, but eventually Google will either beat Oracle’s claims or pay them hefty licensing fees. So, yes, one way or the other Google, and to a lesser extent Oracle, will spend hundreds of millions on this matter before it’s done. But, so what?

The mobile technology space is filled with patent and licensing lawsuits. When I checked on these lawsuits in mid-October there were dozens of them. Since then, Apple has sued Samsung; Dolby has sued RIM; and Lodsys, a patent troll, has taken on Apple and all its iOS developers. By the time I finish writing this column someone will probably have sued someone else!

The end result of all this, besides lining the pockets of lawyers, is that we’re all going to have to pay more for our tablets and smartphones. It doesn’t matter who wins or who loses. Thanks to the U.S.’s fouled-up patent system, everyone who’s a customer, everyone who’s a developer, and everyone who’s in business to make something useful is the loser.

That said, here’s where Android is getting it wrong.

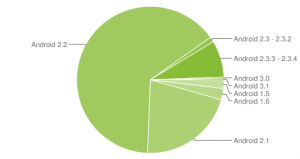

1. Too many developer versions

When Google first forked Android into two versions (the 2.x branch for smartphones and the 3.x branch for tablets), I didn’t like the idea. I like it even less now.

According to the Android Developers site, there are eight (8!) different versions of Android with market presence. If we ignore the out-dated Android 1.5 and 1.6, that still leaves us with six shipping versions that a developer needs to keep in mind when he or she is creating or updating a program. In the case of the 2.x and 3.x lines that’s a lot of work. Oh, and yes there are now two versions of 3.x: 3.0 and 3.1.

Who can keep up with this? I couldn’t. But, wait there’s more!

2. Too many OEM versions

You’d think that Android 2.2 on a Droid II would be the same on the Samsung Galaxy Pro. You’d think wrong. Every original equipment manufacturer (OEM) insists on tweaking the software and adding their own particular programs to each phone. Sometimes, as James Kendrick points out, the same hardware doesn’t even work with Android on the exact same model.

Kendrick has found that the useless microSD card slot in the Motorola XOOM, even after its Android 3.1 update, still doesn’t work. Or, to be exact, it won’t work in the U.S. In Europe, XOOM users will get a fix that will let them use microSD cards.

Argh!

Here’s a history lesson for Google and the rest of the movers and shakers of Android. I’ve seen a “common” operating system used in this way before during a technology boom. Once, it was with the pre-PC microcomputers. They all ran CP/M-80, but every vendor had their own little tricks they added to make their computers “better.” Then along came PC-DOS, soon to be followed by MS-DOS, and all those companies (KayPro, Osborne, and IMSAI) became answers in computer trivia games.

How did Microsoft make its first step to becoming the Evil Empire? By delivering the same blasted operating system on every PC. If users can’t count on using the same programs and the same hardware accessories, like microSD cards, on Android, they’re not going to stick with Android devices. If things don’t get better with Android, who knows, maybe Windows 8 will have a shot on tablets after all!

3. Still not open enough

Google, for reasons that still elude me, decided not to open-source Android 3.x’s source code. This is so dumb!

I’m not talking about playing fast and loose with open-source licenses or ethics, although Google really stuck its foot into a mess with this move. No, I’m saying this is dumb because the whole practical point of open source is to make development easier by sharing the code. Honeycomb’s development now depends on a small number of Google and big OEM developers. Of them, the OEM staffers will be spending their time making Honeycomb, Android 3.0, work better with their specific hardware or carrier. That doesn’t help anyone else.

4. Security Holes

This one really ticks me off. There is no reason for Android to be insecure. In fact, in some ways it’s not insecure. So why do you keep reading about Android malware?

Here’s how it works. Or, rather, how it doesn’t work. Android itself, based on Linux, is relatively secure. But, if you voluntarily, albeit unknowingly, install malware from the Android Market, your Android tablet or smartphone can’t stop you. Google must start checking “official” Android apps for malware.

Google has made some improvements to how it handles Android malware. It’s not enough.

So until things get better, if you’re going to download Android programs by unknown developers, get an Android anti-virus program like Lookout. Heck, get it anyway; it’s only a matter of time until someone finds a way to add malware to brand-name programs.

5. Pricing

Seriously. What’s with Android tablet pricing? Apple owns the high-end of tablets. If someone has the money, they’re going to get an iPad 2. Deal with it. Apple’s the luxury brand. Android’s hope is to be the affordable brand. So long as OEMs price Android’s tablets at $500 and up, they’re not going to move. People will buy a good $250 Android tablet, which is one reason why the Nook is selling well. They’re not buying $500 Android tablets.

Here’s what I see happening. Android will still prosper… right up to the point where some other company comes out with an affordable platform and a broad selection of compatible software and hardware. Maybe that will be webOS, if HP drops the price on its TouchPads. Maybe it will be MeeGo. Heck, it could even be Windows 8. What it won’t be though in the long run, unless Android gets its act together, will be Android.

-----

Personal Comments:

To counterbalance what is a rather severe or pessimistic view of Android's future, I would like to underline that Android has had to face major hardware evolution in a very short time. The multiplicity of hardware manufacturers that have jumped into the Android adventure has also contributed to the version split. But as far as I have seen, manufacturers are pretty fast at offering their customers upgrades of their in-house Android versions, in order to stick to the latest 'official' Android version (the only one that all consumers expect to have on their mobile device). For example, HTC has managed this nicely while adding a very impressive GUI on top of Android, the kind of GUI that strongly suggests Android can be a real competitor to iOS. So I do not see multiple Android versions as a negative point but rather as a rewarding one.

At the same time, Apple pushes users to upgrade their devices to the latest iOS version without much concern for users' wishes. When compiling a program for Apple devices, you have to declare whether it targets iPhone/iPod and/or iPad and specify a minimum compatible version (because the core libraries evolve), which seems to me very similar to handling distinct versions of the same OS... and thus similar to what occurs at the Android OS level, though perhaps in a less 'democratic' way on Apple's side.

As for points 3 and 4, I think they concern absolutely every mobile OS, and for some of them we may not even be aware of existing problems or privacy issues (see the GPS tracking issue on the iPhone, etc.).

Concerning pricing, the ASUS Eee Pad Transformer is an excellent example of what we can expect in terms of (affordable) prices for mobile devices based on Android OS. New mobile phone and tablet models are pretty expensive mainly because they include the very latest chips (Tegra 2, Tegra 3, dual-core CPUs, etc.). Six months later, the same 'top-level' device can be acquired for one buck just by renewing a mobile network subscription.

Android OS is moving pretty fast in comparison to its direct competitors (on-screen widgets are typically something that is missing in iOS), and MeeGo and Bada seem like stillborn OSes. It seems pretty clear that a ready-to-use and effective mobile OS is much easier for a hardware manufacturer to adopt than a brand new one built in-house. Investing effort in customizing Android OS, as HTC did quite effectively, looks like a much better solution than reinventing the wheel.

The strength of Android is its community, which is just growing fast... very fast!

Toolbox Review: iMSO-104 Oscilloscope for iPad & iPhone

Via makezine

-----

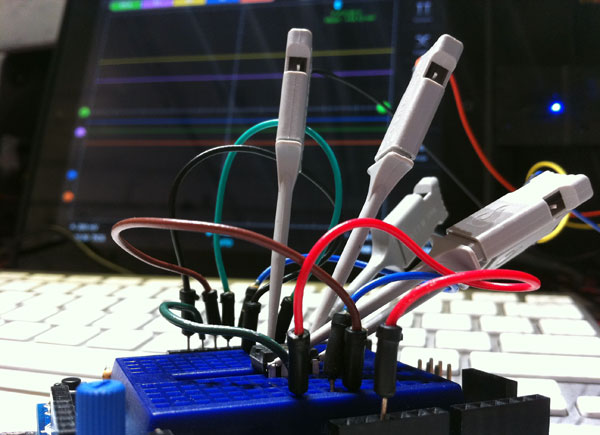

Included tangibles are as follows:

- iMSO-104 Mixed Signal Oscilloscope Hardware

- 1x/10x Analog Probe

- Logic Harness (4 Digital + 1 Ground)

- SMD Grabbers (4 Digital + 1 Ground)

- Screwdriver for Analog Waveform Compensation Adjustment

- Analog tip covers (2 pieces)

The core hardware, based on the Cypress PSoC 3 chip, is housed in a slim enclosure with requisite Apple dock connector, SMB jack for the analog probe, and 5-pin male header to accommodate 4 digital channels + ground. The included set of probes appears well-built, and I estimate they’d withstand the level of abuse my other test equipment is subject to. The unit also includes a small blue LED power indicator which turns off whenever the device’s related software becomes inactive.

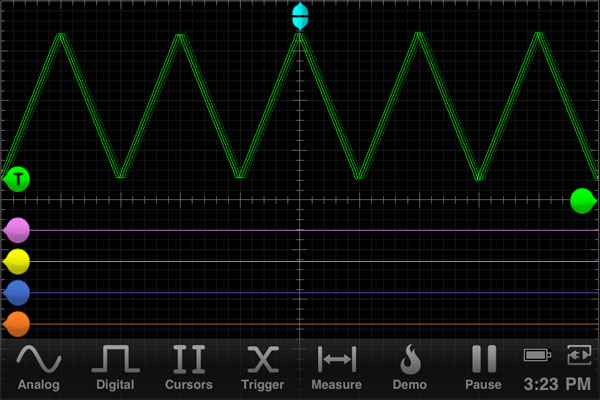

Software

The iMSO’s software portion is available for free as a Universal app and includes an interactive demo mode to give you an idea of how the unit handles analog signals. The app implements standard digital scope functionality (zoom, trigger, cursor/measurement) with thankfully little UI clutter. In addition to standard menu controls, users can employ familiar pinch-to-zoom gestures, control the voltage trigger level via onscreen sliders, and double-tap to toggle display info.

Usage

Right off the bat, I was intrigued at the thought of using the iPad’s 9.7″ display and touch input for highly portable ‘scoping. And yes, viewing a sinewave in such a manner does feel satisfyingly slick and, moreover, imbues Apple’s hardware with an air of technical sophistication rarely achieved while editing a Pages document or playing Angry Birds. The iMSO software was easily controllable via my iPhone, but I really can’t imagine using it much on that platform when given the option of a much larger display.

While it won’t replace my big ol’ 50MHz CRT benchtop dinosaur, the iMSO’s comparatively humble 5MHz analog bandwidth works well for inspecting audio signals (which I do quite often). Additionally, max voltage limits on the device’s inputs (±40V analog 10x, -0.5V/+13V digital) mean I’m likely to reserve use exclusively for low-power audio work. On the digital side, the unit did prove capable when I attempted peeking in on some serial communications between an Arduino board and MCP4921 DAC chip.
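For anyone wanting to make sense of what shows up on the logic channels in a test like that, here is a rough sketch of decoding one captured 16-bit word. The bit layout is my reading of Microchip's MCP4921 datasheet (write-command register), not something from this review, so check it against the datasheet; the function names and the 5 V reference are hypothetical.

```python
def decode_mcp4921_frame(word: int) -> dict:
    """Split a 16-bit MCP4921 write command into its fields.

    Assumed layout (per the datasheet, verify before relying on it):
    bit 14 = BUF, bit 13 = GA, bit 12 = SHDN, bits 11..0 = DAC code.
    """
    if not 0 <= word <= 0xFFFF:
        raise ValueError("expected a 16-bit value")
    return {
        "buffered": bool(word & 0x4000),    # bit 14: VREF input buffer enabled
        "gain": 1 if word & 0x2000 else 2,  # bit 13: 1 selects 1x, 0 selects 2x
        "active": bool(word & 0x1000),      # bit 12: 0 means output shut down
        "value": word & 0x0FFF,             # bits 11..0: 12-bit DAC code
    }


def output_voltage(frame: dict, vref: float) -> float:
    """Ideal output voltage for a decoded frame and a given reference voltage."""
    return vref * frame["gain"] * frame["value"] / 4096


if __name__ == "__main__":
    frame = decode_mcp4921_frame(0x3800)  # example word, not a real capture
    print(frame, output_voltage(frame, vref=5.0))
```

The example word is invented for illustration; in practice you would read the bits off the four digital channels (clock, data, chip select) by hand or from an exported capture.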

The fact that iOS devices use a single port for both power & data means you’ll have to rely on battery power while using the iMSO. Thankfully, the device + software went easy on my iPad 2’s battery – so power is likely only a concern for those who plan on marathon testing/debugging sessions.

The ~$300 pricetag and bandwidth limitations will likely limit the iMSO’s initial audience – but if those points don’t pose a problem for you, well, this thing is pretty dang sweet. As the iMSO-104 is the first in its category, it will be interesting to see what future developments hold for iOS test equipment – see, we shall.

Saturday, July 09. 2011

The real veterans of technology

Via Businessweek

-----

To survive for more than 100 years, tech companies such as Siemens, Nintendo, and IBM have had to innovate

By Antoine Gara

When IBM turned 100 on June 16, it joined a surprising number of tech companies that have been evolving in order to survive since long before there was a Silicon Valley. Here’s a sampling of tech centenarians, and some of the breakthroughs that helped fuel their longevity.

Siemens: Topical Press Agency/Getty Images; Western Union: Hulton Archive/Getty Images; Diebold: Diebold Inc; Ericsson: SSPL/Getty Images; Nintendo: Tim Whitby/Alamy; Kodak: George Eastman House

Friday, July 08. 2011

USA: chronicle of the foretold death of unlimited mobile Internet

Via ITespresso

-----

Unlimited 3G mobile Internet plans had been in danger across the Atlantic for a year. Their end is now settled: Verizon, the second-largest national carrier, will no longer sell them.

Unlimited 3G plans are on the verge of disappearing in the United States.

The reason given is the theoretical throughput limit of mobile networks, which would be reached quickly if mobile Internet users were not limited in their unbridled consumption. A refrain often heard in France as well.

The country's largest carrier, AT&T, had already dropped them last year.

Its main competitor, Verizon Wireless, joins it this July 7. The model chosen by the two carriers is based on a fixed plan with overage charges when the allowance is exceeded, the site eWeek explains.

For example, Verizon will charge 30 dollars per month for 2 GB of data, then bill 10 dollars per additional GB.
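To make the pricing model concrete, here is a minimal sketch of the monthly bill under the Verizon figures quoted above (30 dollars for 2 GB, 10 dollars per additional GB). The function name is made up, and billing every started extra GB as a full GB is an assumption; the article does not say how partial gigabytes are charged.

```python
import math

BASE_PRICE = 30      # dollars per month, includes the allowance below
INCLUDED_GB = 2      # data allowance covered by the base price
OVERAGE_PER_GB = 10  # dollars per additional GB


def monthly_bill(used_gb: float) -> int:
    """Return the monthly charge in dollars for a given data usage.

    Assumption: each started GB beyond the allowance is billed in full.
    """
    extra_gb = max(0, math.ceil(used_gb - INCLUDED_GB))
    return BASE_PRICE + extra_gb * OVERAGE_PER_GB


if __name__ == "__main__":
    for usage in (1.5, 2, 2.1, 5):
        print(f"{usage} GB -> ${monthly_bill(usage)}")
```

Under these assumptions a user at 5 GB pays 60 dollars, twice the base price.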

T-Mobile, the small fourth player in the market, still offers "French-style" unlimited subscriptions, in which throughput is sharply reduced past a certain threshold.

But with its acquisition by AT&T for 39 billion dollars, there will probably soon be only one major carrier left offering truly unlimited plans: Sprint Nextel.

In the United States, however, this abandonment is provoking consumers' anger.

Parul Desai, telecommunications policy counsel for Consumers Union, the American equivalent of UFC-Que Choisir, voiced the dismay of American mobile users to the Boston Globe newspaper.

Desai fears that even if mobile users consume less than 2 GB per month (presented by the carriers as average consumption, and therefore used as the basis for their standard plans), they will restrict their own usage. "Maybe you won't use [the VoD service] Netflix, or maybe you won't try a new application," Desai explains.

Given that broadband, fixed and mobile, was supposed to become fertile ground for innovation and growth under Barack Obama's 2009 stimulus plan, such limits could easily nip any innovative new use in the bud.

Let's hope French carriers do not follow the example of their American counterparts.

Thursday, July 07. 2011

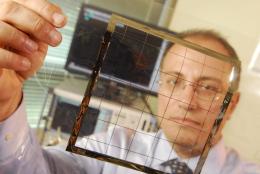

Ambient electromagnetic energy harnessed for small electronic devices

Via Physorg

-----

Researchers have discovered a way to capture and harness energy transmitted by such sources as radio and television transmitters, cell phone networks and satellite communications systems. By scavenging this ambient energy from the air around us, the technique could provide a new way to power networks of wireless sensors, microprocessors and communications chips.

Georgia Tech School of Electrical and Computer Engineering professor Manos Tentzeris holds a sensor (left) and an ultra-broadband spiral antenna for wearable energy-scavenging applications. Both were printed on paper using inkjet technology.

"There is a large amount of electromagnetic energy all around us, but nobody has been able to tap into it," said Manos Tentzeris, a professor in the Georgia Tech School of Electrical and Computer Engineering who is leading the research. "We are using an ultra-wideband antenna that lets us exploit a variety of signals in different frequency ranges, giving us greatly increased power-gathering capability."

A presentation on this energy-scavenging technology was scheduled for delivery July 6 at the IEEE Antennas and Propagation Symposium in Spokane, Wash. The discovery is based on research supported by multiple sponsors, including the National Science Foundation, the Federal Highway Administration and Japan's New Energy and Industrial Technology Development Organization (NEDO).

Communications devices transmit energy in many different frequency ranges, or bands. The team's scavenging devices can capture this energy, convert it from AC to DC, and then store it in capacitors and batteries. The scavenging technology can presently take advantage of frequencies from FM radio to radar, a range spanning 100 megahertz (MHz) to 15 gigahertz (GHz) or higher.

Georgia Tech School of Electrical and Computer Engineering professor Manos Tentzeris displays an inkjet-printed rectifying antenna used to convert microwave energy to DC power. It was printed on flexible material. (Georgia Tech Photo: Gary Meek).

Utilizing ambient electromagnetic energy could also provide a form of system backup. If a battery or a solar-collector/battery package failed completely, scavenged energy could allow the system to transmit a wireless distress signal while also potentially maintaining critical functionalities.

When Tentzeris and his research group began inkjet printing of antennas in 2006, the paper-based circuits only functioned at frequencies of 100 or 200 MHz, recalled Rushi Vyas, a graduate student who is working with Tentzeris and graduate student Vasileios Lakafosis on several projects.

"We can now print circuits that are capable of functioning at up to 15 GHz -- 60 GHz if we print on a polymer," Vyas said. "So we have seen a frequency operation improvement of two orders of magnitude."

The researchers believe that self-powered, wireless paper-based sensors will soon be widely available at very low cost. The resulting proliferation of autonomous, inexpensive sensors could be used for applications that include:

Wednesday, July 06. 2011

Afghan City Builds DIY Internet Out Of Trash

Via Insteading

-----

Is there such a thing as DIY internet? An amazing open-source project in Afghanistan proves you don’t need millions to get connected.

While visiting family last week, the topic of conversation turned to the internet, net neutrality, and both corporate and government attempts to police the online world. A family member remarked that if they wanted to, the U.S. government could simply turn off the internet and the entire world would be screwed.

Having read this inspiring article by Douglas Rushkoff on Shareable.net, I surprised the room by disagreeing. I said that we didn’t need the corporate built internet, and that if we had to, the people could build their own. Of course, not being that technically minded, I couldn’t offer a concrete idea of how this could be achieved. Until now.

A recent Fast Company article shines a spotlight on the Afghan city of Jalalabad which has a high-speed Internet network whose main components are built out of trash found locally. Aid workers, mostly from the United States, are using the provincial city in Afghanistan’s far east as a pilot site for a project called FabFi.

FabFi is an open-source, FabLab-grown system using common building materials and off-the-shelf electronics to transmit wireless ethernet signals across distances of up to several miles. With FabFi, communities can build their own wireless networks to gain high-speed internet connectivity, enabling them to access online educational, medical, and other resources.

Residents who desire an internet connection can build a FabFi node out of approximately $60 worth of everyday items such as boards, wires, plastic tubs, and cans that will serve a whole community at once.

Jalalabad’s longest link is currently 2.41 miles, between the FabLab and the water tower at the public hospital in Jalalabad, transmitting with a real throughput of 11.5Mbps (compared to 22Mbps ideal-case for a standards-compliant off-the-shelf 802.11g router transmitting at a distance of only a few feet). The system works consistently through heavy rain, smog and a couple of good sized trees.
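To get a feel for those numbers, here is a rough sketch that computes how much of the ideal-case throughput the long link retains, plus the textbook free-space path loss over that distance. The 2.41 miles, 11.5Mbps and 22Mbps figures come from the paragraph above; the 2437 MHz carrier (a common 2.4 GHz channel for 802.11g) is an assumption, and the free-space formula ignores the rain, smog and trees the real link has to cope with.

```python
import math

MILES_TO_KM = 1.609344


def throughput_fraction(measured_mbps: float, ideal_mbps: float) -> float:
    """Fraction of the ideal-case throughput actually achieved."""
    return measured_mbps / ideal_mbps


def free_space_path_loss_db(distance_km: float, freq_mhz: float) -> float:
    """Standard free-space path loss in dB for distance in km and frequency in MHz."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44


if __name__ == "__main__":
    link_km = 2.41 * MILES_TO_KM
    fraction = throughput_fraction(11.5, 22.0)
    # 2437 MHz is an assumed channel, not a figure from the article.
    loss_db = free_space_path_loss_db(link_km, freq_mhz=2437.0)
    print(f"Link length: {link_km:.2f} km")
    print(f"Throughput retained: {fraction:.0%}")
    print(f"Free-space path loss: {loss_db:.1f} dB")
```

In other words, the trash-built reflectors keep a little over half of the short-range throughput across nearly four kilometres.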

With millions of people still living without access to high-speed internet, including much of rural America, an open-source concept like FabFi could have profound ramifications on education and political progress.

Because FabFi is fundamentally a technological and sociological research endeavor, it is constantly growing and changing. Over the coming months expect to see infrastructure improvements to improve stability and decrease cost, and added features such as meshing and bandwidth aggregation to support a growing user base.

In addition to network improvements, there are plans to leverage the provided connectivity to build online communities and locally hosted resources for users in addition to MIT OpenCourseWare, making the system much more valuable than the sum of its uplink bandwidth. Follow the developments on the FabFi Blog.
