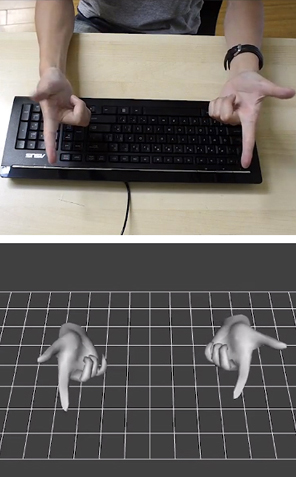

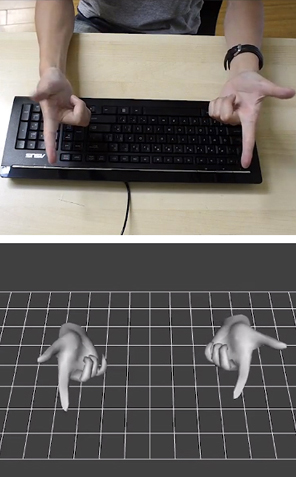

Finger mouse: 3Gear uses depth-sensing cameras to track finger movements.

3Gear Systems

Tuesday, October 30. 2012

The Pirate Bay switches to cloud-based servers

Via Slash Gear

-----

It isn’t exactly a secret that authorities and entertainment groups don’t like The Pirate Bay, but today the infamous site made it a little bit harder for them to bring it down. The Pirate Bay announced today that it has moved its servers to the cloud. This works in a couple of different ways: it helps the people who run The Pirate Bay save money, while it makes it more difficult for police to carry out a raid on the site.

“All attempts to attack The Pirate Bay from now on is an attack on everything and nothing,” a Pirate Bay blog post reads. “The site that you’re at will still be here, for as long as we want it to. Only in a higher form of being. A reality to us. A ghost to those who wish to harm us.”

The site told TorrentFreak after the switch that it’s currently being hosted by two different cloud providers in two different countries, and what little actual hardware it still needs to use is being kept in different countries as well. The idea is not only to make it harder for authorities to bring The Pirate Bay down, but also to make it easier to bring the site back up should that ever happen.

Even if authorities do manage to get their hands on The Pirate Bay’s remaining hardware, they’ll only be taking its transit router and its load balancer – the servers are stored in several Virtual Machine instances, along with all of TPB’s vital data. The kicker is that these cloud hosting companies aren’t aware that they’re hosting The Pirate Bay, and if they discovered the site was using their service, they’d have a hard time digging up any dirt on users since the communication between the VMs and the load balancer is encrypted.

In short, it sounds like The Pirate Bay has taken a huge step in not only protecting its own rear end, but those of users as well. If all of this works out the way The Pirate Bay is claiming it will, then don’t expect to hear about the site going down anytime soon. Still, there’s nothing stopping authorities from trying to bring it down, or from putting in the work to try and figure out who the people behind The Pirate Bay are. Stay tuned.

Monday, October 29. 2012

Don’t Call The New Microsoft Surface RT A Tablet, This Is A PC

Via Tech Crunch

-----

The Microsoft Surface RT is a PC. It’s not a mobile device and it’s not a tablet, it’s a PC. And Microsoft’s first self-branded computer. It is, in short, the physical incarnation of Microsoft’s Windows 8. The expectations and competition for the Surface are daunting. It’s been said that Microsoft built the Surface to show up HP, Dell, and the rest of the personal computing establishment. PC sales are stagnant while Apple is selling the iPad at an incredible pace. But the Surface is something different from other tablets. Microsoft built a PC for the post-PC consumer and chose to power it with a limited operating system called Windows RT. These trade-offs, real or imagined, are what really make or break this device.

While the Surface RT is a solid piece of hardware, there are a few things that make the device a bit hard to handle. The Surface RT is a widescreen tablet. It’s 16×9, making it a lot wider than it is tall, so using this 10.6-inch tablet is slightly different from an iPad or Galaxy Note 10.1. When holding it properly, that is, in landscape, it’s a bit too long to be held with one hand. Likewise, when holding it in portrait, it’s too tall to be held comfortably one-handed. In fact, it’s slightly awkward overall.

For starters, it’s rather tough to type efficiently on the Surface RT’s on-screen keyboard when holding the tablet. I’m 6 feet tall and have normal size hands; I cannot grasp the Surface and hit all the on-screen keys without shifting my hands. Moreover, when holding the Surface in this orientation, I cannot touch the Windows home button. Windows 8 compensates for this flawed design by incorporating an additional Windows home button into a slide-out virtual tray activated by a bezel gesture.

It’s clear Microsoft designed the Surface RT to be a convertible PC rather than a tablet with an optional keyboard. This is an important distinction. By comparison, tablets such as the iPad and Galaxy Note 10.1 feel complete without anything else. I own a Logitech Ultrathin Keyboard and use it daily. But only for work. I enjoy using the iPad without it.

Due equally to Windows 8 and the large 16:9 form factor, the Surface RT is naked without a $119 Touch Cover. Without a Touch Cover, the Surface RT feels incomplete in design and function. The problem here is that the Surface is basically a big laptop screen without the keyboard. The cover rights the design’s wrongs by forcing the user to use the physical keyboard rather than the on-screen keyboard. Microsoft knows this. After all, Surface is rarely advertised without a Touch Cover, but that doesn’t alleviate the sting of paying another $100+ for a keyboard.
The Surface RT runs a limited version of Windows 8 called Windows RT. It shares the same ebb and flow as Windows 8, which is a radical take on the classic operating system. The operating system was clearly built for the mobile era and it shows promise. But right now, at launch, Windows RT needs work. Most of the apps are limited in features and the touch version of Internet Explorer is slow and clunky. Pages load very slowly and the bezel gesture to switch between tabs is unreliable and buggy. And worse yet, due to Windows RT’s lack of apps like Facebook or Twitter, you’re forced to use Internet Explorer for nearly everything. Don’t expect to load your favorite Windows applications on the Surface RT. It’s just not possible. Think of this as Windows 8 Lite, a cross between a desktop and a mobile OS. Despite its shortcomings, the Surface RT is a very functional productivity device in a traditional sense. I wrote the majority of this review on the Surface with the $129 Type Cover. It feels like an ultraportable Ultrabook. Using Windows RT’s classic Desktop mode, I was able to compose and edit within TechCrunch’s WordPress back-end as if I was sitting at my Windows 7 desktop. In this use, the Surface outshines other tablets as it allows for a full desktop workspace. But here is where the problem lies – the Surface acts like a tablet and ends up working more like a notebook. This interface between flat tablet and horizontal laptop is frustrating and confusing.

Physically, the Surface feels like it’s from the future. It employs just the right amount of neo-brutalist industrial design. The casing is made out of magnesium alloy, called VaporMg by Microsoft, which is more durable and scratch resistant than aluminum. The iPad feels pedestrian compared to the Surface. But the iPad is a different sort of device. Where the iPad is a tablet, the Surface is a convertible PC.

The Surface’s marquee feature is a large kickstand that props the tablet at a 22 degree angle. The Kickstand pops out with an air of confidence. A hidden hinge snaps it away from the body and likewise, when collapsing the kickstand, the hinge forcefully snaps it back in place.

Unlike most tablets, the Surface sports a large range of I/O ports. Along with the bottom-mounted Touch Cover port, there’s a microHDMI output, microSDXC slot, and a full-size USB port for connecting a camera, phone, USB flash drive, or Xbox 360 controller. This last feature sets the Surface apart from other tablets, allowing the Surface to nearly match the functions of a laptop. Remember, the Surface is a PC, not a tablet in the traditional sense. It should have all these ports and, although it looks comical, a full-sized USB port on this tablet is absolutely necessary. There are front and rear cameras, 802.11a/b/g/n Wi-Fi, Bluetooth 4.0 and a non-removable battery. There are no 3G or 4G wireless connectivity options. The speakers are nice and loud (but not booming), though there is very little bass in the sound.

The Surface feels fantastic. The hardware is its strongest selling point. You cannot pick up a Surface and be disappointed by the feel. There isn’t another tablet on the market with the same build quality or connectivity options in such a svelte package, including the iPad.

Microsoft didn’t just build the Surface. The company also spent resources developing the Surface’s Touch Covers. These covers, one with physical keys and the other with touch-sensitive keypads, magnetically snap onto the bottom of the Surface like Apple’s Smart Covers. Both versions of the Touch Covers enhance the overall feel of the device. It’s impossible not to appreciate the Surface’s design when you snap one of these covers onto the Surface, close it up, and carry it around. I’m completely taken by the feel of the Surface.

The Touch Covers work as well as they look. There are two versions: one has chiclet keys that feel a lot like the keyboards used in Ultrabooks. This version is called the Type Cover and costs $129. The $119 Touch Cover uses touch-sensitive buttons that do not physically move. This Touch Cover is half as thick as its brother, but after spending a week with both, I found I was about half as productive on the Touch Cover versus the Type Cover (see WPP chart below). Both have little touchpads with right and left clicking buttons under the keyboard.

However, the Touch Covers reveal the Surface’s fundamental flaw: The Surface is ungainly large when deployed. When used with the Surface’s kickstand and a Touch Cover, the whole contraption is 10 inches deep. That’s the same depth as a 15-inch MacBook Pro. An iPad with a Logitech Ultrathin Keyboard is only 7 inches deep; most ultrabooks are 9 inches or under. A Surface with a Touch Cover barely fits on most airplane seat-back trays; it doesn’t work at all on the trays that pull out of an armrest. That’s a problem. The sheer size of the Surface negates its appeal. At 10 inches deep with a Touch Cover, when used, it’s larger than many more capable ultrabooks. Additionally, with the nicer Type Cover, the Surface isn’t much thinner or less expensive than many full-powered notebooks.
The Touch Covers of course are optional, but Microsoft saw them as a key component for the device. Look at the marketing or pre-release press coverage: a good amount of the message is about these Touch Covers and not the Surface RT itself. Microsoft is attempting to sell them as one unit. To Microsoft, it’s not if Surface buyers are going to purchase a Touch Cover, but rather when. It’s expected.

Unfortunately the Touch Covers are not as functional as keyboards for other tablets. As previously mentioned, the Touch Covers magnetically snap onto the bottom of the Surface and then the kickstand props the screen at a pleasing angle. But the connection point is not rigid. Pick up the Surface and the Touch Cover dangles in place. This design makes it very hard to use the Surface with a Touch Cover anywhere but a tabletop. It needs a 10-inch deep flat surface. I could not use the Surface with a Touch Cover sitting in an armchair, walking around, or lying on my back in bed. Forget about using it on the commode; it sits too precariously on the legs for comfort. These are use-cases that I do nearly daily with my iPad and Logitech Ultrathin Keyboard. The Surface is only usable when seated at a table or desk.

The Surface RT uses a 10.6-inch 16×9 ClearType HD Display at 1366×768. The screen can handle 5 points of contact at once. Indoors, the screen is deep and rich with vibrant colors. Colors wash out in direct sunlight and the glossy overlay results in a lot of glare, though slightly less glare than the iPad’s. The screen is fairly sharp, but overall is far inferior to the Retina screen in the new iPad or even the screen found in the iPad 2. When sitting side-by-side, it’s easy to see the difference between the Surface RT and new iPad, both $499 tablets. The new iPad is more detailed and the colors are much more accurate. There is no contest, really. The Retina display in the new iPad has nearly twice the resolution of the Surface’s and can handle 10 points of contact instead of just 5. The Retina screen is brighter, has a deeper color palette, and, most importantly, makes text easier to read thanks to the higher resolution screen.

I found the Surface RT’s screen to be a tad frustrating at times. It seemingly doesn’t register touches properly. I often had to reattempt selecting a particular on-screen item. The Surface RT (or maybe it’s Windows RT?) is not as accurate as I would like it to be. This happened more often in the classic Desktop environment where the buttons and elements are a lot smaller than those found in the new tile interface. But per Microsoft’s marketing, the interaction with the display is not as critical with the Surface as, say, with an iPad or Galaxy Note 10.1. That’s where the Touch Covers with their little trackpads come into play. Apple and Samsung advertise their tablets’ touchscreen capabilities. Microsoft advertises its tablet with a keyboard and mouse.
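The "nearly twice the resolution" comparison can be sanity-checked with a little arithmetic. The sketch below is only illustrative: the Surface RT numbers come from this review, while the new iPad's 2048×1536 panel and 9.7-inch diagonal are assumed from Apple's commonly published spec rather than anything measured here. The helper name `pixels_per_inch` is made up for the example.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Linear pixel density: diagonal length in pixels divided by diagonal in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# Surface RT: resolution and screen size as stated in the review.
surface_ppi = pixels_per_inch(1366, 768, 10.6)

# New iPad: assumed figures (2048x1536 on a 9.7-inch panel), not from the review.
ipad_ppi = pixels_per_inch(2048, 1536, 9.7)

# Ratio of linear density, and ratio of total pixel count.
density_ratio = ipad_ppi / surface_ppi
pixel_count_ratio = (2048 * 1536) / (1366 * 768)

print(f"Surface RT: {surface_ppi:.0f} ppi")
print(f"New iPad:   {ipad_ppi:.0f} ppi")
print(f"Linear density ratio: {density_ratio:.2f}x")
print(f"Total pixel ratio:    {pixel_count_ratio:.2f}x")
```

Under those assumptions, "nearly twice" holds for linear pixel density, while the total pixel count works out closer to three times the Surface's.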

The Surface RT packs two 1MP cameras: one on the front, one on the back. They’re a joke. The picture quality is horrible under any lighting condition and completely unacceptable for a $500 device. See the examples below.

Note: Results are based on a standardized test that requires the device to search Google Images until the battery is depleted. The screens are set to max brightness.

The Surface RT cannot write its own story. Unfortunately for the Surface RT, its fate rests solely in the hands of Windows 8, and moreover, Windows RT. No matter how good the hardware is, the operating system makes or breaks the device. As it sits right now, at the launch of Windows 8/RT, the experience is a mish-mash of interfaces and the experience is poor. At launch, Windows 8 feels like a brand-new playground built in an affluent retirement complex. It’s pretty, full of bold colors, seemingly fun, but built for a different generation. Microsoft is clearly attempting to bring relevance to Windows with the touch-focused interface and post-PC concepts but is unwilling and unable to fully commit to touch.

Again, the Surface RT runs a version of Windows called Windows RT. This is not Windows 8, although they look and work very similarly. It’s stripped down, and designed to run better on mobile computing platforms. The main difference is Windows RT requires apps be coded for ARM processors rather than x86/x64 chips that have powered Windows computers since Windows 3.1. This means users cannot replace Internet Explorer with Chrome (even in Desktop) until Google releases a version of Chrome coded specifically for Windows RT. Likewise, a trusty version of the Print Shop Pro application cannot be installed on Windows RT, or for that matter, nearly any other Windows application built over the last 20 years. For that, you’ll need a Surface Pro which runs Windows 8 on an Intel CPU. As of this review’s writing, Microsoft had yet to detail the price or release schedule of the Surface Pro. It is expected to hit stores by the end of 2012 for around $1,000.

Windows 8/RT is different. It’s a stark departure from previous Windows builds. It’s built for the post-PC world but still holds onto the past with a classic desktop mode. It boots to the touch-friendly UI, previously called Metro and now called Modern UI, where apps are presented in a pleasing grid. This is also the Start Menu, so there is no longer a comfortable Windows button to click to get around the OS. Like Windows Phone, most of the tiles representing the apps are live, providing quick insight into the content held within. The tile for the application called Photo previews the pics within the app. Mail shows the sender and subject line of the last received message. Likewise, Weather shows the current weather, Messages shows the top message, and People shows the latest social media update. It’s a smooth, versatile and smart take on a mobile tablet OS.

The Surface seems built specifically for the touch interface. The 16:9 screen matches the widescreen flow of the operating system perfectly. The Surface utilizes bezel gestures to hide menus. Starting with your finger on the Surface’s black bezel, slide onto the screen to reveal a menu tray. Doing so on the right side displays the main menu where most options are held. Doing so on the left switches between applications. Make a quick on and off swipe on the left side to display the application switcher. Most apps also have customized option drawers on the top and bottom of the screen. These hidden menus allow the apps to take full advantage of the screen; there’s no need to display a menu bar when they’re just a bezel swipe away.

As great as Windows 8/RT looks, it sadly fails to provide a cohesive user experience. Even though Windows RT features myriad next-gen features, most of them are half-baked at launch.
The application store is missing key apps, the single sign-on fails to sync user profiles across devices, and the social sharing features do not natively include Twitter or Facebook. Microsoft itself didn’t fully commit to the touch interface. Windows 8’s dependency on the classic environment will not allow the Surface to be a tablet. Half the apps pre-installed on the Surface RT launch in the classic desktop interface, most notably Microsoft Office, where the smaller user elements do not play nicely with the touch interface. Windows traditionalists will find the Classic interface a lovely memory of the good ol’ times. Most everything is where it’s supposed to be – besides the Start Menu – and it runs and acts like Windows 7. But for the Surface, with its 10.6-inch 16:9 screen, it’s hard to use with the touchscreen alone. The elements are too small to efficiently be controlled with just touch. After all, the Desktop emulates an environment designed several generations ago for use with a keyboard and mouse.

Windows 8/RT ships with two different versions of Internet Explorer. One lives in the new touch interface and the other lives a different life in Desktop; both are completely oblivious to each other’s existence. Open up a couple of tabs in one and the other does not replicate the behavior. The Internet Explorer in Desktop is the good ol’ IE; it should be very familiar to most. The one in the touch interface is completely different. It’s slow and clunky. Thanks to larger buttons, it’s easier to use by touch, but that’s its only redeeming quality. I hate using it.

The lack of apps is one of the Surface’s main downfalls. The sad state of Windows RT’s application store is eerily similar to that of Windows Phone. Launched nearly two years ago, the application selection on Windows Phone still pales in comparison to that of Android or iOS. Microsoft has spent two years attempting to get developers on-board its smartphone platform. Will developers ignore Windows RT as well? No one knows and that’s the trick. It’s true that the iPad launched with very few apps, but owners could also run iPhone apps while the legions of iOS developers furiously adapted to the larger screen. Windows 8/RT lacks the ability to run mobile apps and Microsoft seemingly doesn’t have the same sort of rabid developers at its disposal (otherwise there would already be apps).

Bing Apps

Moments after the Surface is turned on for the first time, it’s easy to dive right into content thanks to Bing Apps. Bing Daily, Bing Sports, Bing Travel, and Bing Finance look fantastic and they’re loaded with content. With large lead photos and strong headlines, they’re sure to be some of the most used apps, at least until Windows 8 is embraced by 3rd party devs. These apps offer a lot of info. For instance, Bing Sports leads with the news, but swipe left and it reveals the schedule for user-designated teams. Swipe a little more to reveal standings charts.
The next section shows panels of leading players followed by panels of the leading teams of the default sport. Swipe a little bit more and you get a large advertisement. The Surface ships with ad-supported apps installed. Every Bing App has a single ad located at the end of its news feed. It’s a little shady but not exactly scandalous.

Video

The Surface seems designed specifically to watch movies with the 16:9 screen. Launch the video player app and it immediately loads the Xbox Video store. This app can play back movies loaded on the internal storage or microSD card, but it’s primarily designed to be a video store. And like the Xbox dashboard it emulates, there are ads here too.

The Surface RT lacks a powerful video playback solution. Microsoft didn’t include Windows Media Player or Windows Media Center in Windows RT. The included video app is very limited but it did manage to play back 720p .avi files off of a microSD card. Fans of sideloading content, however, may find themselves disappointed.

Photos

The Windows RT photo app is a thing of beauty. It’s fast, flexible and easily pulls in photos from Facebook and Flickr. It’s deeply integrated into Windows RT so sharing and printing is built in.

While the app runs great, it lacks any editing tools — even basic items like cropping are missing. It’s mostly designed to show off the screen and UI elements.

The Surface launches to a crowded market dominated by just a few players. The $499 iPad is the top tablet in the world, currently commanding a dominant portion of the tablet market share. The $199 tablets, the Kindle Fire HD, Nexus 7, and Nook Tablet HD, offer a lot of tablet for the money. But Surface is fundamentally different from the aforementioned options. The Surface is a convertible PC, meaning it’s a personal computer with a detachable keyboard, not a consumer tablet. The Surface is incomplete without a Touch Cover. It’s not designed to simply be a tablet. It’s too large and clunky to be used without a keyboard. With its awkward size and incomplete operating system, the Surface fails to excel at anything particular in the way other tablets have. The iPad provides better casual computing. The Android tablets sync beautifully with Google accounts and the media tablets by Amazon and B&N offer an unmatched content selection. The Surface’s closest natural competitor is a budget Ultrabook. But even here, most Ultrabooks run Windows 8 rather than Windows RT, allowing for the full Windows experience. Plus most Ultrabooks have a larger screen but smaller footprint when used, offer longer battery life, and are generally more powerful. Microsoft built the Surface to be a Jack of all trades, but failed to make sure it was even competent at any one task.

The Surface RT is a product of unfortunate timing. The hardware is great. The Type Cover turns it into a small convertible tablet powered by a promising OS in Windows RT. That said, there are simply more mature options available right now. Microsoft needs to court developers for Windows RT. As a consumer tablet, the Surface lacks all of the appeal of the iPad. There aren’t any mainstream apps and Microsoft has failed to connect the Windows desktop and mobile ecosystems in any meaningful way like Android or iOS/OS X. Windows RT is a brand new operating system that is incompatible with legacy Windows software. This immediately limits the appeal since the Surface RT is dependent on Windows RT’s application store – a storefront that is currently devoid of anything useful.

The Surface RT isn’t a tablet. It’s not a legitimate alternative to the iPad or Galaxy Note 10.1. That’s not a bad thing. With the Touch Covers, the Surface RT is a fine alternative to a laptop, offering a slightly limited Windows experience in a small, versatile form. Just don’t call it an iPad killer. If properly nurtured, Windows RT and the Surface RT could be something worthwhile. But right now, given Microsoft’s track record with Windows Phone, buying the Surface RT is a huge risk. The built-in apps are very limited and the Internet experience is fairly poor. Skip this generation of the Surface RT or at least wait until it offers a richer, more useful experience. While we’re bullish on Windows 8, the RT incarnation just isn’t quite there.

Is it heavy?

How much does the Surface cost?

Who should buy one?

Is there a lot of glare?

Is the Kickstand adjustable?

Can I open up the Surface?

Can I download pictures from my camera to the Surface?

Does the Surface work with 3G/4G networks?

Does Windows RT have a lot of apps?

Images made by our graphics ninja, Bryce Durbin.

A Stuxnet Future? Yes, Offensive Cyber-Warfare is Already Here

Via ISN ETHZ

By Mihoko Matsubara

-----

Mounting Concerns and Confusion

Stuxnet proved that any actor with sufficient know-how in terms of cyber-warfare can physically inflict serious damage upon any infrastructure in the world, even without an internet connection. In the words of former CIA Director Michael Hayden: “The rest of the world is looking at this and saying, ‘Clearly someone has legitimated this kind of activity as acceptable international conduct’.” Governments are now alert to the enormous uncertainty created by cyber-instruments and especially worried about cyber-sabotage against critical infrastructure. As US Secretary of Defense Leon Panetta warned in front of the Senate Armed Services Committee in June 2011, “the next Pearl Harbor we confront could very well be a cyber-attack that cripples our power systems, our grid, our security systems, our financial systems, our governmental systems.”

On the other hand, a lack of understanding about instances of cyber-warfare such as Stuxnet has led to confused expectations about what cyber-attacks can achieve. Some, however, remain excited about the possibilities of this new form of warfare. For example, retired US Air Force Colonel Phillip Meilinger expects that “[a]… bloodless yet potentially devastating new method of war” is coming. However, under current technological conditions, instruments of cyber-warfare are not sophisticated enough yet to deliver a decisive blow to the adversary. As a result, cyber-capabilities still need to be used alongside kinetic instruments of war.

Advantages of Cyber-Capabilities

Cyber-capabilities provide three principal advantages to those actors that possess them. First, they can deny or degrade electronic devices including telecommunications and global positioning systems in a stealthy manner irrespective of national borders.
This means potentially crippling an adversary’s intelligence, surveillance, and reconnaissance capabilities, delaying an adversary’s ability to retaliate (or even identify the source of an attack), and causing serious dysfunction in an adversary’s command and control and radar systems. Second, precise and timely attribution is particularly challenging in cyberspace because skilled perpetrators can obfuscate their identity. This means that responsibility for attacks needs to be attributed forensically, which not only complicates retaliatory measures but also compromises attempts to seek international condemnation of the attacks. Finally, attackers can elude penalties because there is currently no international consensus as to what actually constitutes an ‘armed attack’ or ‘imminent threat’ (which can invoke a state’s right of self-defense under Article 51 of the UN Charter) involving cyber-weapons. Moreover, while some countries - including the United States and Japan - insist that the principles of international law apply to the 'cyber' domain, others such as China argue that cyber-attacks “do not threaten territorial integrity or sovereignty.”

Disadvantages of Cyber-Capabilities

On the other hand, high-level cyber-warfare has three major disadvantages for would-be attackers. First, the development of a sophisticated ‘Stuxnet-style’ cyber-weapon for use against well-protected targets is time- and resource-intensive. Only a limited number of states possess the resources required to produce such weapons. For instance, the deployment of Stuxnet required arduous reconnaissance and an elaborate testing phase in a mirrored environment. F-Secure Labs estimates that Stuxnet took more than ten man-years of work to develop, underscoring just how resource- and labor-intensive a sophisticated cyber-weapon is to produce. Second, sophisticated and costly cyber-weapons are unlikely to be adapted for generic use.
As Thomas Rid argues in “Think Again: Cyberwar,” different system configurations need to be targeted by different types of computer code. The success of a highly specialized cyber-weapon therefore requires the specific vulnerabilities of a target to remain in place. If, on the contrary, a targeted vulnerability is ‘patched’, the cyber-operation will be set back until new malware can be prepared. Moreover, once the existence of malware is revealed, it will tend to be quickly neutralized – and can even be reverse-engineered by the target to assist in future retaliation on their part. Finally, it is difficult to develop precise, predictable, and controllable cyber-weapons for use in a constantly evolving network environment. The growth of global connectivity makes it difficult to assess the implications of malware infection and challenging to predict the consequences of a given cyber-attack. Stuxnet, for instance, was not supposed to leave Iran’s Natanz enrichment facility, yet the worm spread to the World Wide Web, infecting other computers and alerting the international community to its existence. According to the Washington Post, US forces contemplated launching cyber-attacks on Libya’s air defense system before NATO’s airstrikes. But this idea was quickly abandoned due to the possibility of unintended consequences for civilian infrastructure such as the power grid or hospitals.

Implications for Military Strategy

Despite such disadvantages, cyber-attacks are nevertheless part of contemporary military strategy including espionage and offensive operations. In August 2012, US Marine Corps Lieutenant General Richard Mills confirmed that operations in Afghanistan included cyber-attacks against the adversary. Recalling an incident in 2010, he said, "I was able to get inside his nets, infect his command-and-control, and in fact defend myself against his almost constant incursions to get inside my wire, to affect my operations."
His comments confirm that the US military now employs a combination of cyber- and traditional offensive measures in wartime. As Thomas Mahnken points out in “Cyber War and Cyber Warfare,” cyber-attacks can produce disruptive and surprising effects. And while cyber-attacks are not a direct cause of death, their consequences may lead to injuries and loss of life. As Mahnken argues, it would be inconceivable to directly cause Hiroshima-type damage and casualties merely with cyber-attacks. While a “cyber Pearl Harbor” might shock the adversary on a similar scale as its namesake in 1941, the ability to inflict a decisive, extensive, and foreseeable blow requires kinetic support – at least under current technological conditions.

Implications for Critical Infrastructure

Nevertheless, the shortcomings of cyber-attacks will not discourage malicious actors in peacetime. The anonymous, borderless, and stealthy nature of the 'cyber' domain offers an extremely attractive asymmetrical platform for inflicting physical and psychological damage to critical infrastructure such as finance, energy, power and water supply, telecommunication, and transportation as well as to society at large. Since well before Stuxnet, successful cyber-attacks have been launched against vulnerable yet important infrastructure systems. For example, in 2000 a cyber-attack against an Australian sewage control system resulted in millions of liters of raw sewage leaking into a hotel, parks, and rivers. Accordingly, the safeguarding of cyber-security is an increasingly important consideration for heads of state. In July 2012, for example, President Barack Obama published an op-ed in the Wall Street Journal warning about the unique risk cyber-attacks pose to national security. In particular, the US President emphasized that cyber-attacks have the potential to severely compromise the increasingly wired and networked lifestyle of the global community. Since the 1990s, the process control systems of critical infrastructures have been increasingly connected to the Internet. This has unquestionably improved efficiencies and lowered costs, but has also left these systems alarmingly vulnerable to penetration. In March 2012, McAfee and Pacific Northwest National Laboratory released a report which concluded that power grids are rendered vulnerable due to their common computing technologies, growing exposure to cyberspace, and increased automation and interconnectivity. Despite such concerns, private companies may be tempted to prioritize short-term profits rather than allocate more funds to (or accept more regulation of) cyber-security, especially in light of prevailing economic conditions. 
After all, it takes time and resources to probe vulnerabilities and hire experts to protect them. Nevertheless, leadership in both the public and private sectors needs to recognize that such an attitude provides opportunities for perpetrators to take advantage of security weaknesses to the detriment of economic and national security. It is, therefore, essential for governments to educate and encourage, and if necessary fund, the private sector to provide appropriate cyber-security in order to protect critical infrastructure.

Implications for Espionage

The sophistication of the Natanz incident, in which Stuxnet was able to exploit Iranian vulnerabilities, stunned the world. Advanced Persistent Threats (APTs) were employed to find weaknesses by stealing data, which made it possible to sabotage Iran’s nuclear program. Yet APTs can also be used in many different ways, for example against small companies in order to exploit larger business partners that may be in possession of valuable information or intellectual property. As a result, both the public and private sectors must brace themselves for daily cyber-attacks and espionage on their respective infrastructures. Failure to do so may result in the theft of intellectual property as well as trade and defense secrets that could undermine economic competitiveness and national security. In an age of cyber espionage, the public and private sectors must also reconsider what types of information should be deemed as “secret,” how to protect that information, and how to share alerts with others without the sensitivity being compromised. While realization of the need for this kind of wholesale re-evaluation is growing, many actors remain hesitant. Indeed, such hesitancy is often driven by fears that doing so may reveal their vulnerabilities, harm their reputations, and benefit their competitors. Of course, there are certain types of information that should remain unpublicized so as not to damage the business, economy, and national security. However, such classifications must not be abused at the expense of the balance between public interest and security.

Cyber-Warfare Is Here to Stay

The Stuxnet incident is set to encourage the use of cyber-espionage and sabotage in warfare. However, not all countries can afford to acquire offensive cyber-capabilities as sophisticated as Stuxnet and a lack of predictability and controllability continues to make the deployment of cyber-weapons a risky business. As a result, many states and armed forces will continue to combine both kinetic and 'cyber' tactics for the foreseeable future. Growing interconnectivity also means that the number of potential targets is set to grow. This, in turn, means that national cyber-security strategies will need to confront the problem of prioritization. Both the public and private sectors will have to decide which information and physical targets need to be protected and work together to share information effectively.

Posted by Christian Babski in Innovation&Society at 10:57

Defined tags for this entry: innovation&society, security

Wednesday, October 24. 2012

Wi-Fi LED Light Bulbs: Gateway to the Smart Home?

-----

In the future, we’re told, homes will be filled with smart gadgets connected to the Internet, giving us remote control of our homes and making the grid smarter. Wireless thermostats and now lighting appear to be leading the way.

Startup Greenwave Reality today announced that its wireless LED lighting kit is available in the U.S., although not through retail sales channels. The company, headed by former consumer electronics executives, plans to sell the set, which includes four 40-watt-equivalent bulbs and a smartphone application, through utilities and lighting companies for about $200, according to CEO Greg Memo.

The Connected Lighting Solution includes four EnergyStar-rated LED light bulbs, a gateway box that connects to a home router, and a remote control. Customers also download a smartphone or tablet app that lets people turn lights on or off, dim lights, or set up schedules.

Installation is extremely easy. Greenwave Reality sent me a set to try out, and I had it operating within a few minutes. The bulbs each have their own IP address and are paired with the gateway out of the box, so there’s no need to configure the bulbs, which communicate over the home wireless network or over the Internet for remote access.

Using the app is fun, if only for the novelty. When’s the last time you used your iPhone to turn off the lights downstairs? It also lets people put lights on a schedule (they can be used outside in a sheltered area but not exposed directly to water) or set custom scenes. For instance, Memo set some of the wireless bulbs in his kitchen to be at half dimness during the day.

Many smart-home or smart-building advocates say that lighting is the toehold for a house full of networked gadgets. “The thing about lighting is that it’s a lot more personal than appliances or a thermostat. It’s actually something that affects people’s moods and comfort,” Memo says. “We think this will move the needle on the automated home.”

Rather than sell directly to consumers, as makers of most other smart lighting products do, Greenwave Reality intends to sell through utilities and service companies. While gadget-oriented consumers may be attracted to wireless light bulbs, utilities are interested in energy savings. And because these lights are connected to the Internet, energy savings can be quantified.

In Europe and many states, utilities are required to spend money on customer efficiency programs, such as rebates for efficient appliances or subsidizing compact fluorescent bulbs. But unlike traditional CFLs, network-connected LEDs can report usage information. That allows Greenwave Reality to see how many bulbs are actually in use and verify the intended energy savings of, for example, subsidized light bulbs. (The reported data would be anonymized, Memo says.) Utilities could also make lighting part of demand response programs to lower power during peak times.

As for performance of the bulbs, there is essentially no latency when using the smartphone app. The remote control essentially brings dimmers to fixtures that don’t have them already.

For people who like the idea of bringing the Internet of things to their home with smart gadgets, LED lights (and thermostats) seem like a good way to start. But in the end, it may be the energy savings of better managed and more efficient light bulbs that will give wireless lighting a broader appeal.

Molecular 3D bioprinting could mean getting drugs through email

Via dvice

-----

What happens when you combine advances in 3D printing with biosynthesis and molecular construction? Eventually, it might just lead to printers that can manufacture vaccines and other drugs from scratch: email your doc, download some medicine, print it out and you're cured.

This concept (which is surely being worked on as we speak) comes from Craig Venter, whose idea of synthesizing DNA on Mars we posted about last week. You may remember a mention of the possibility of synthesizing Martian DNA back here on Earth, too: Venter says that we can do that simply by having the spacecraft email us genetic information on whatever it finds on Mars, and then recreate it in a lab by mixing together nucleotides in just the right way. This sort of thing has already essentially been done by Venter, who created the world's first synthetic life form back in 2010.

Venter's idea is to do away with complex, expensive and centralized vaccine production and instead just develop one single machine that can "print" drugs by carefully combining nucleotides, sugars, amino acids, and whatever else is needed while you wait. Technology like this would mean that vaccines could be produced locally, on demand, simply by emailing the appropriate instructions to your closest drug printer. Pharmacies would no longer consist of shelves upon shelves of different pills, but could instead be kiosks with printers inside them. Ultimately, this could even be something you do at home.

While the benefits to technology like this are obvious, the risks are equally obvious. I mean, you'd basically be introducing the Internet directly into your body. Just ingest that for a second and think about everything that it implies. Viruses. LOLcats. Rule 34. Yeah, you know what, maybe I'll just stick with modern American healthcare and making ritual sacrifices to heathen gods, at least one of which will probably be effective.

Tuesday, October 16. 2012

Google must change privacy policy demand EU watchdogs

Via Slash Gear

-----

European data protection regulators have demanded Google change its privacy policy, though the French-led team did not conclude that the search giant’s actions amounted to something illegal. The investigation, by the Commission Nationale de l’Informatique et des Libertés (CNIL), argued that Google’s decision to condense the privacy policies of over sixty products into a single agreement – and at the same time increase the amount of inter-service data sharing – could leave users unclear as to how different types of information (as varied as search terms, credit card details, or phone numbers) could be used by the company.

“The Privacy Policy makes no difference in terms of processing between the innocuous content of search query and the credit card number or the telephone communications of the user” the CNIL points out, “all these data can be used equally for all the purposes in the Policy.” That some web users merely interact passively with Google products, such as adverts, also comes in for heightened attention, with those users getting no explanation at all as to how their actions might be tracked or stored.

In a letter to Google – signed by the CNIL and other authorities from across Europe – the concerns are laid out in full, together with some suggestions as to how they can be addressed. For instance, the search company could “develop interactive presentations that allow users to navigate easily through the content of the policies” and “provide additional and precise information about data that have a significant impact on users (location, credit card data, unique device identifiers, telephony, biometrics).”

Ironically, one of Google’s arguments for initially changing its policy system was that a single, harmonized agreement would be easier for users to read through and understand. It also insisted that the data-sharing aspects were little changed from before.

“The CNIL, all the authorities among the Working Party and data protection authorities from other regions of the world expect Google to take effective and public measures to comply quickly and commit itself to the implementation of these recommendations,” the commission concluded. Google has a 3-4 month period to enact the changes requested, or it could face the threat of sanctions.

“We have received the report and are reviewing it now,” Peter Fleischer, Google’s global privacy counsel, told TechCrunch. “Our new privacy policy demonstrates our long-standing commitment to protecting our users’ information and creating great products. We are confident that our privacy notices respect European law.”

Monday, October 15. 2012

Constraints on the Universe as a Numerical Simulation

Silas R. Beane, Zohreh Davoudi, Martin J. Savage

-----

Observable consequences of the hypothesis that the observed universe is a numerical simulation performed on a cubic space-time lattice or grid are explored. The simulation scenario is first motivated by extrapolating current trends in computational resource requirements for lattice QCD into the future. Using the historical development of lattice gauge theory technology as a guide, we assume that our universe is an early numerical simulation with unimproved Wilson fermion discretization and investigate potentially-observable consequences. Among the observables that are considered are the muon g-2 and the current differences between determinations of alpha, but the most stringent bound on the inverse lattice spacing of the universe, b^(-1) >~ 10^(11) GeV, is derived from the high-energy cut off of the cosmic ray spectrum. The numerical simulation scenario could reveal itself in the distributions of the highest energy cosmic rays exhibiting a degree of rotational symmetry breaking that reflects the structure of the underlying lattice.
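For a rough sense of scale, the bound on the inverse lattice spacing quoted in the abstract can be turned into a length using the standard conversion factor ħc ≈ 0.1973 GeV·fm. This is only a back-of-the-envelope conversion added for illustration, not a calculation taken from the paper:

```latex
b^{-1} \gtrsim 10^{11}\,\mathrm{GeV}
\quad\Longrightarrow\quad
b \lesssim \frac{\hbar c}{10^{11}\,\mathrm{GeV}}
  \approx \frac{0.1973\,\mathrm{GeV\,fm}}{10^{11}\,\mathrm{GeV}}
  \approx 2 \times 10^{-12}\,\mathrm{fm}
  = 2 \times 10^{-27}\,\mathrm{m}
```

In other words, under this bound the hypothetical lattice spacing would have to be roughly twelve orders of magnitude below the femtometer scale of a proton.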

Posted by Christian Babski in Technology at 12:06

Defined tags for this entry: simulation, technology

Thursday, October 11. 2012

Rain Room, 2012

Rain Room is a hundred square metre field of falling water through which it is possible to walk, trusting that a path can be navigated, without being drenched in the process. As you progress through The Curve, the sound of water and a suggestion of moisture fill the air, before you are confronted by this carefully choreographed downpour that responds to your movements and presence.

-----

Water, injection moulded tiles, solenoid valves, pressure regulators, custom software, 3D tracking cameras, wooden frames, steel beams, hydraulic management system, grated floor

Wednesday, October 10. 2012

The mouse faces extinction as computer interaction evolves

-----

Swipe, swipe, pinch-zoom. Fifth-grader Josephine Nguyen is researching the definition of an adverb on her iPad and her fingers are flying across the screen. Her 20 classmates are hunched over their own tablets doing the same. Conspicuously absent from this modern scene of high-tech learning: a mouse. Nguyen, who is 10, said she has used one before — once — but the clunky desktop computer/monitor/keyboard/mouse setup was too much for her. “It was slow,” she recalled, “and there were too many pieces.” Gilbert Vasquez, 6, is also baffled by the idea of an external pointing device named after a rodent. “I don’t know what that is,” he said with a shrug. Nguyen and Vasquez, who attend public schools here, are part of the first generation growing up with a computer interface that is vastly different from the one the world has gotten used to since the dawn of the personal-computer era in the 1980s. This fall, for the first time, sales of iPads are cannibalizing sales of PCs in schools, according to Charles Wolf, an analyst for the investment research firm Needham & Co. And a growing number of even more sophisticated technologies for communicating with your computer — such as the Leap Motion boxes and Sony Vaio laptops that read hand motions, as well as voice recognition services such as Apple’s Siri — are beginning to make headway in the commercial market. John Underkoffler, a former MIT researcher who was the adviser for the high-tech wizardry that Tom Cruise used in “Minority Report,” says that the transition is inevitable and that it will happen in as soon as a few years. Underkoffler, chief scientist for Oblong, a Los Angeles-based company that has created a gesture-controlled interface for computer systems, said that for decades the mouse was the primary bridge to the virtual world — and that it was not always optimal. “Human hands and voice, if you use them in the digital world in the same way as the physical world, are incredibly expressive,” he said. 
“If you let the plastic chunk that is a mouse drop away, you will be able to transmit information between you and machines in a very different, high-bandwidth way.”

This type of thinking is turning industrial product design on its head. Instead of focusing on a single device to access technology, innovators are expanding their horizons to gizmos that respond to body motions, the voice, fingers, eyes and even thoughts. Some devices can be accessed by multiple people at the same time. Keyboards might still be used for writing a letter, but designing, say, a landscaped garden might be more easily done with a digital pen, as would studying a map of Lisbon by hand gestures, or searching the Internet for Rihanna’s latest hits by voice. And the mouse, which many agree was a genius creation in its time, may end up as a relic in a museum.

The mouse is born

The first computer mouse, built at the Stanford Research Institute in Palo Alto, Calif., by Douglas Engelbart and Bill English in 1963, was just a block of wood fashioned with two wheels. It was just one of a number of interfaces the team experimented with. There were also foot pedals, head-pointing devices and knee-mounted joysticks. But the mouse proved to be the fastest and most accurate, and with the backing of Apple founder Steve Jobs (who bundled it with shipments of Lisa, the predecessor to the Macintosh, in the 1980s) the device suddenly became a mainstream phenomenon.

Engelbart’s daughter, Christina, a cultural anthropologist, said that her father was able to predict many trends in technology over the years, but she said the one thing he has been surprised about is that the mouse has lasted as long as it has. “He never assumed the mouse would be it,” said the younger Engelbart, who wrote her father’s biography. “He always figured there would be newer ways of exploring a computer.” She was 8 years old when her father invented the mouse.
Now 57, she says she is finally seeing glimpses of the next stage of computing with the surging popularity of the iPad. These days her two children, 20 and 23, do not use a mouse anymore.

San Antonio and LUCHA elementary schools in eastern San Jose, just 17 miles south of where Engelbart conducted his research, provide a glimpse at the future. The schools, which share a campus, have integrated iPod Touches and iPads into the curriculum for all 700 students. The teachers all get MacBook Airs with touch pads. “Most children here have never seen a computer mouse,” said Hannah Tenpas, 24, a kindergarten teacher at San Antonio.

Kindergartners, as young as 4, use the iPod Touch to learn letter sounds. The older students use iPads to research historical information and prepare multimedia slide-show presentations about school rules. The intuitive touch-screen interface has allowed the school to introduce children to computers at an age that would have been impossible in the past, said San Antonio Elementary’s principal, Jason Sorich.

Even toddlers are able to manipulate a touch screen. A popular YouTube video shows a baby trying to swipe the pages of a fashion magazine that she assumes is a broken iPad. “For my one-year-old daughter, a magazine is an iPad that does not work. It will remain so for her whole life,” the creator of the video says in a slide at the end of the clip.

The iPad side of the brain

“The popularity of iPads and other tablets is changing how society interacts with information,” said Aniket Kittur, an assistant professor at the Human-Computer Interaction Institute at Carnegie Mellon University. “. . . Direct manipulation with our fingers, rather than mediated through a keyboard/mouse, is intuitive and easy for children to grasp.”

Underkoffler said that while desktop computers helped activate the language and abstract-thinking parts of a child’s brain, new interfaces are helping open the spatial part.
“Once our user interface can start to talk to us that way . . . we sort of blow the barn doors off how we learn,” he said.

That may explain why iPads are becoming so popular in schools. Apple said in July that the iPad outsold the Mac 2 to 1 for the second consecutive quarter in the education market. In all, the company sold 17 million iPads in the April-to-June quarter; at the same time, mouse sales in the United States are down, some manufacturers say. “The adoption rate of iPad in education is something I’d never seen from any technology product in history,” Apple chief executive Tim Cook said in July.

At San Antonio Elementary and LUCHA, which started their $300,000 iPad and iPod experiment last school year, the school board president, Esau Herrera, said he is thrilled by the results. Test scores have gone up (although officials say they cannot directly correlate that to the new technology), and the level of engagement has increased.

The schools are now debating what to do with the handful of legacy desktop PCs, each with its own keyboard and mouse, and whether they should bother teaching students to move a pointer around a monitor. “Things are moving so fast,” said LUCHA Principal Kristin Burt, “that we’re not sure the computer and mouse will even be around when they get old enough to really use them.”

Tuesday, October 09. 2012

Meet the Nimble-Fingered Interface of the Future

-----

Finger mouse: 3Gear uses depth-sensing cameras to track finger movements.

Microsoft's Kinect, a 3-D camera and software for gaming, has made a big impact since its launch in 2010. Eight million devices were sold in the product's first two months on the market as people clamored to play video games with their entire bodies in lieu of handheld controllers. But while Kinect is great for full-body gaming, it isn't useful as an interface for personal computing, in part because its algorithms can't quickly and accurately detect hand and finger movements. Now a San Francisco-based startup called 3Gear has developed a gesture interface that can track fast-moving fingers. Today the company will release an early version of its software to programmers. The setup requires two 3-D cameras positioned above the user to the right and left. The hope is that developers will create useful applications that will expand the reach of 3Gear's hand-tracking algorithms. Eventually, says Robert Wang, who cofounded the company, 3Gear's technology could be used by engineers to craft 3-D objects, by gamers who want precision play, by surgeons who need to manipulate 3-D data during operations, and by anyone who wants a computer to do her bidding with a wave of the finger. One problem with gestural interfaces—as well as touch-screen desktop displays—is that they can be uncomfortable to use. They sometimes lead to an ache dubbed "gorilla arm." As a result, Wang says, 3Gear focused on making its gesture interface practical and comfortable. "If I want to work at my desk and use gestures, I can't do that all day," he says. "It's not precise, and it's not ergonomic." The key, Wang says, is to use two 3-D cameras above the hands. They are currently rigged on a metal frame, but eventually could be clipped onto a monitor. A view from above means that hands can rest on a desk or stay on a keyboard. (While the 3Gear software development kit is free during its public beta, which lasts until November 30, developers must purchase their own hardware, including cameras and frame.) 
"Other projects have replaced touch screens with sensors that sit on the desk and point up toward the screen, still requiring the user to reach forward, away from the keyboard," says Daniel Wigdor, professor of computer science at the University of Toronto and author of Brave NUI World, a book about touch and gesture interfaces. "This solution tries to address that." 3Gear isn't alone in its desire to tackle the finer points of gesture tracking. Earlier this year, Microsoft released an update that enabled people who develop Kinect for Windows software to track head position, eyebrow location, and the shape of a mouth. Additionally, Israeli startup Omek, Belgian startup SoftKinetic, and a startup from San Francisco called Leap Motion—which claims its small, single-camera system will track movements to a hundredth of a millimeter—are all jockeying for a position in the fledgling gesture-interface market.
