Thursday, August 11. 2011

Geeks Without Frontiers Pursues Wi-Fi for Everyone

Via OStatic

-----

Recently, you may have heard about new efforts to bring online access to regions where it has previously been economically unviable. This idea is not new, of course. The One Laptop Per Child (OLPC) initiative was squarely aimed at this goal until it ran into some significant hiccups. One of the latest moves on this front comes from Geeks Without Frontiers, which has a stated goal of positively impacting one billion lives with technology over the next 10 years. The organization, sponsored by Google and The Tides Foundation, is working on low-cost, open source Wi-Fi solutions for "areas where legacy broadband models are currently considered to be uneconomical."

According to an announcement from Geeks Without Frontiers:

"GEEKS expects that this technology, built mainly by Cozybit, managed by GEEKS and I-Net Solutions, and sponsored by Google, Global Connect, Nortel, One Laptop Per Child, and the Manna Energy Foundation, will enable the development and rollout of large-scale mesh Wi-Fi networks for at least half of the traditional network cost. This is a major step in achieving the vision of affordable broadband for all."

It's notable that One Laptop Per Child is among the sponsors of this initiative. The organization has open sourced key parts of its software platform, and could have natural synergies with a global Wi-Fi effort.

“By driving down the cost of metropolitan and village scale Wi-Fi networks, millions more people will be able to reap the economic and social benefits of significantly lower cost Internet access,” said Michael Potter, one of the founders of the GEEKS initiative.

The Wi-Fi technology that GEEKS is pursuing is mesh networking, specifically open80211s (o11s), which implements AMPE (Authenticated Mesh Peering Exchange), enabling multiple authenticated nodes to encrypt the traffic between them. Mesh networks are essentially widely distributed wireless networks built from many repeaters spread throughout an area.
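To make the "many repeaters" idea concrete, here is a minimal Python sketch of multi-hop forwarding: given which nodes can hear each other, a breadth-first search finds the route with the fewest relay hops from an Internet gateway to a distant node. The node names and links are made up for illustration, and hop count is a simplification: the 802.11s standard that open80211s implements selects paths with its own protocol (HWMP) and an airtime-based link metric, and AMPE's authentication and encryption are not modeled here at all.

```python
from collections import deque


def fewest_hops(links, src, dst):
    """Return the path from src to dst with the fewest hops, or None if unreachable.

    links: iterable of (node_a, node_b) pairs for nodes within radio range of each other.
    Hop count stands in for a real mesh routing metric (a simplifying assumption).
    """
    # Build an undirected adjacency map: radio links work both ways.
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)

    # Breadth-first search visits nodes in order of hop distance from src.
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            break
        for nxt in sorted(graph.get(node, ())):
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)

    if dst not in prev:
        return None

    # Walk the predecessor chain back from dst to src.
    path = []
    node = dst
    while node is not None:
        path.append(node)
        node = prev[node]
    return path[::-1]
```

For example, with hypothetical links `[("gateway", "A"), ("A", "B"), ("B", "hut"), ("gateway", "C"), ("C", "hut")]`, traffic for `"hut"` goes through the single relay `"C"` rather than the two-relay route through `"A"` and `"B"`.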

You can read much more about the open80211s project here. The GEEKS initiative has significant backers, and with sponsorship from OLPC, will probably benefit from good advice on the topic of bringing advanced technologies to disadvantaged regions of the world. The effort will be worth watching.

Tuesday, August 09. 2011

The Subjectivity of Natural Scrolling

Via Slash Gear

-----

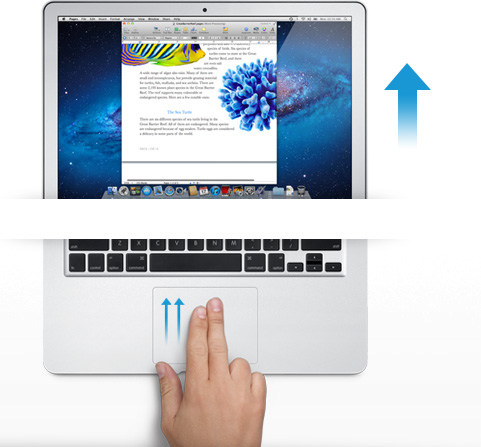

Apple released its new OS X Lion for Mac computers recently, and there was one controversial change that had the technorati chatting nonstop. In the new Lion OS, Apple changed the direction of scrolling. I use a MacBook Pro (among other machines; I’m OS-agnostic). On my MacBook, I scroll by placing two fingers on the trackpad and moving them up or down. On the old system, moving my fingers down meant the object on the screen moved up. My fingers are controlling the scroll bars. Moving down means I am pulling the scroll bars down, revealing more of the page below what is visible. So, the object moves upwards. On the new system, moving my fingers down means the object on screen moves down. My fingers are now controlling the object. If I want the object to move up, and reveal more of what is beneath, I move my fingers up, and content rises on screen.
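The two mappings can be written down in a few lines. This is a minimal Python sketch, assuming a 1:1 relationship between finger travel and page travel (real trackpads add scaling and acceleration) and screen coordinates where "down" is positive. The only difference between classic and "natural" scrolling is the sign.

```python
def new_scroll_position(position, finger_dy, natural, page_height, view_height):
    """Return the new scroll position after a vertical two-finger swipe.

    position: distance in pixels from the top of the page to the top of the view.
    finger_dy: finger travel on the trackpad; positive means the fingers moved down.
    natural: True for Lion-style "natural" scrolling, False for the classic mapping.
    Assumes 1:1 finger-to-page travel (no acceleration), for illustration only.
    """
    if natural:
        # Fingers drag the content: fingers down -> content moves down -> view moves toward the top.
        delta = -finger_dy
    else:
        # Fingers drag the scroll bar: fingers down -> thumb moves down -> view moves toward the bottom.
        delta = finger_dy
    # Keep the view inside the page.
    max_position = max(0, page_height - view_height)
    return max(0, min(max_position, position + delta))
```

With a 1,000-pixel page and a 200-pixel view scrolled to position 100, a 10-pixel downward swipe moves the view to 110 under the classic mapping and to 90 under natural scrolling.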

The scroll bars are still there, but Apple has, by default, hidden them in many apps. You can make them reappear by hunting through the settings menu and turning them back on, but when they do come back, they are much thinner than they used to be, without the arrows at the top and bottom. They are also a bit buggy at the moment. If I try to click and drag the scrolling indicator, the page often jumps around, as if I had missed and clicked on the empty space above or below the scroll bar instead of directly on it. This doesn’t always happen, but it happens often enough that I have trained myself to avoid using the scroll bars this way.

So, the scroll bars, for now, are simply a visual indicator of where my view is located on a long or wide page. Clearly Apple does not think this information is terribly important, or else scroll bars would be turned on by default. As with the scroll bars, you can also hunt through the settings menu to turn off the new, so-called “natural scrolling.” This will bring you back to the method preferred on older Apple OSes, and also on Windows machines.

Some disclosure: my day job is working for Samsung. We make Windows computers that compete with Macs. I work in the phones division, but my work machine is a Samsung laptop running Windows. My MacBook is a holdover from my days as a tech journalist. When you become a tech journalist, you are issued a MacBook by force and stripped of whatever you were using before.

I am not criticizing or endorsing Apple’s new natural scrolling in this column. In fact, in my own usage, there are times when I like it, and times when I don’t. Those emotions are usually found in direct proportion to the amount of NyQuil I took the night before and how hot it was outside when I walked my dog. I have found no other correlation.

The new natural scrolling method will probably seem familiar to those of you not frozen in an iceberg since World War II. It is the same direction you use for scrolling on most touchscreen phones, and most tablets. Not all, of course. Some phones and tablets still use styli, and these phones often let you scroll by dragging scroll bars with the pointer. But if you have an Android or an iPhone or a Windows Phone, you’re familiar with the new method.

My real interest here is to examine how the user is placed in the conversation between your fingers and the object on screen. I have heard the argument that the new method tries, and perhaps fails, to emulate the touchscreen experience by manipulating objects as if they were physical. On touchscreen phones, this is certainly the case. When we touch something on screen, like an icon or a list, we expect it to react in a physical way. When I drag my finger to the right, I want the object beneath to move with my finger, just as a piece of paper would move with my finger when I drag it.

This argument postulates a problem with Apple’s natural scrolling because of the literal distance between your fingers and the objects on screen. Also, the angle has changed. The plane of your hands and the surface on which they rest are at an oblique angle of more than 90 degrees from the screen and the object at hand.

Think of a magic wand. When you wave a magic wand with the tip facing out before you, do you imagine the spell shooting forth parallel to the ground, or do you imagine the spell shooting directly upward? It is true that, in our imagination, we want a direct correlation between the position of our hands and the reaction on screen. However, is this what we were getting before? Not really.

The difference between classic scrolling and ‘natural’ scrolling seems to be the difference between manipulating a concept and manipulating an object. Scroll bars are not real, or at least they do not correspond to any real thing that we would experience in the physical world. When you read a tabloid, you do not scroll down to see the rest of the story. You move your eyes. If the paper will not fit comfortably in your hands, you fold it. But scrolling is not like folding. It is smoother. It is continuous. Folding is a way of breaking the object into two conceptual halves. Ask a print newspaper reporter (and I will refrain from old media mockery here) about the part of the story that falls “beneath the fold.” That part better not be as important as the top half, because it may never get read.

Natural scrolling correlates more strongly to moving an actual object. It is like reading a newspaper on a table. Some of the newspaper may extend over the edge of the table and bend downward, making it unreadable. When you want to read it, you move the paper upward. In the same way, when you want to read more of the NYTimes.com site, you move your fingers upward.

The argument should not be over whether one is more natural than the other. Let us not forget that we are using an electronic machine. This is not a natural object. The content onscreen is only real insofar as pixels light up and are arranged into a recognizable pattern. Those words are not real; they are the absence of light (in varying degrees, if you have anti-aliasing cranked up) around recognizable patterns that our eyes and brain interpret as letters and words.

The argument should be over which is the more successful design for a laptop or desktop operating system. Is it better to create objects on screen that appropriate the form of their physical world counterparts? Should a page in Microsoft Word look like a piece of paper? Should an icon for a hard disk drive look like a hard disk? What percentage of people using a computer have actually seen a hard disk drive? What if your new ultraportable laptop uses a set of interconnected solid state memory chips instead? Does the drive icon still look like a drive?

Or is it better to create objects on screen that do not hew to the physical world? Certainly their form should suggest their function in order to be intuitive and useful, but they do not have to be photorealistic interpretations. They can suggest function through a more abstract image, or simply by their placement and arrangement.

In the former system, the computer interface becomes a part of the user's world. The interface tries to fit in with symbols that are already familiar. I know what a printer looks like, so when I want to set up my new printer, I find the picture of the printer and I click on it. My email icon is a stamp. My music player icon is a CD. Wait, where did my CD go? I can’t find my CD?! What happened to my music!?!? Oh, there it is. Now it’s just a circle with a musical note. I guess that makes sense, since I hardly use CDs any more.

In the latter system, the user becomes part of the interface. I have to learn the language of the interface design. This may sound like it is automatically more difficult than the former method of photorealism, but that may not be true. After all, when I want to change the brightness of my display, will my instinct really be to search for a picture of a cog and gears? And how should we represent a Web browser, a feature that has no counterpart in real life? Are we wasting processing power and time trying to create objects that look three-dimensional on a two-dimensional screen?

I think the photorealistic approach, and Apple’s new natural scrolling, may be the more personal way to design an interface. Apple is clearly thinking of the intimate relationship between the user and the objects that we touch. It is literally a sensual relationship, in that we use a variety of our senses. We touch. We listen. We see.

But perhaps I do not need, nor do I want, to have this relationship with my work computer. I carry my phone with me everywhere. I keep my tablet very close to me when I am using it. With my laptop, I keep some distance. I am productive. We have a lot to get done.

DNA circuits used to make neural network, store memories

Via ars technica

By Kyle Niemeyer

-----

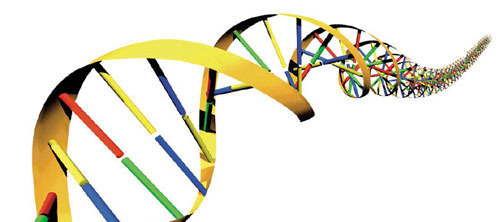

Even as some scientists and engineers develop improved versions of current computing technology, others are looking into drastically different approaches. DNA computing offers the potential of massively parallel calculations with low power consumption and at small sizes. Research in this area has been limited to relatively small systems, but a group from Caltech recently constructed DNA logic gates using over 130 different molecules and used the system to calculate the square roots of numbers. Now, the same group published a paper in Nature that shows an artificial neural network, consisting of four neurons, created using the same DNA circuits.

The artificial neural network approach taken here is based on the perceptron model, also known as a linear threshold gate. This models the neuron as having many inputs, each with its own weight (or significance). The neuron fires (or the gate turns on) when the weighted sum of its inputs exceeds a set threshold. These gates can be used to construct compact Boolean logic circuits, and other circuits can be constructed to store memory.
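In ordinary software, the perceptron rule described above is a one-line weighted sum followed by a comparison. The Python sketch below is the textbook model, not the DNA implementation; the weights and thresholds in the usage note are illustrative choices showing how one gate type can act as different Boolean functions.

```python
def linear_threshold_gate(inputs, weights, threshold):
    """Perceptron / linear threshold gate.

    Returns 1 (the neuron fires) when the sum of each input times its weight
    exceeds the threshold, otherwise 0.
    """
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0
```

With weights `[1, 1]`, a threshold of 1.5 gives AND and a threshold of 0.5 gives OR; three equal weights with a threshold of 1.5 give a majority vote. That flexibility is why such gates make compact Boolean circuits.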

As we described in the last article on this approach to DNA computing, the authors represent their implementation with an abstraction called "seesaw" gates. This allows them to design circuits where each element is composed of two base-paired DNA strands, and the interactions between circuit elements occur as new combinations of DNA strands pair up. The ability of strands to displace each other at a gate (based on things like concentration) creates the seesaw effect that gives the system its name.

In order to construct a linear threshold gate, three basic seesaw gates are needed to perform different operations. Multiplying gates combine a signal and a set weight in a seesaw reaction that uses up fuel molecules as it converts the input signal into output signal. Integrating gates combine multiple inputs into a single summed output, while thresholding gates (which also require fuel) send an output signal only if the input exceeds a designated threshold value. Results are read using reporter gates that fluoresce when given a certain input signal.
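The division of labor among the gate types can be mimicked with plain numbers standing in for strand concentrations. This is only a functional Python sketch under that assumption: it ignores fuel consumption, reaction kinetics and leakage, and the `detect_level` used by the reporter is an arbitrary illustrative value.

```python
def multiply(signal, weight):
    """Multiplying gate: scale an input signal by a fixed weight."""
    return signal * weight


def integrate(signals):
    """Integrating gate: combine several signals into one summed output."""
    return sum(signals)


def threshold(signal, theta, output=1.0):
    """Thresholding gate: send a full output signal only if the input exceeds theta."""
    return output if signal > theta else 0.0


def reporter(signal, detect_level=0.5):
    """Reporter gate: 'fluoresce' (True) when the incoming signal is high enough."""
    return signal >= detect_level


def linear_threshold_circuit(inputs, weights, theta):
    """Chain the stages: multiply each input, sum, threshold, then read out."""
    weighted = [multiply(x, w) for x, w in zip(inputs, weights)]
    return reporter(threshold(integrate(weighted), theta))
```

Here `linear_threshold_circuit([1, 1, 0], [1, 1, 1], 1.5)` lights up, while `[1, 0, 0]` stays dark: the same decision a single perceptron makes, just split into the stages the seesaw design uses.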

To test their designs with a simple configuration, the authors first constructed a single linear threshold circuit with three inputs and four outputs—it compared the value of a three-bit binary number to four numbers. The circuit output the correct answer in each case.
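One natural way a set of linear threshold gates can compare a three-bit number with fixed values is to weight the bits by their place values (4, 2, 1), so the weighted sum is the number itself, and give each output gate its own threshold. The Python sketch below assumes that construction; the four reference values (1, 3, 5, 7) are hypothetical, not the ones used in the paper.

```python
def threshold_gate(inputs, weights, theta):
    """Linear threshold gate: 1 if the weighted sum exceeds theta, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > theta else 0


PLACE_VALUES = (4, 2, 1)   # weights turn bits (b2, b1, b0) into their binary value
REFERENCES = (1, 3, 5, 7)  # hypothetical comparison values, for illustration only


def compare(bits, references=REFERENCES):
    """Return one output per reference: 1 if the 3-bit value is >= that reference.

    For integer values, a threshold of ref - 0.5 turns the gate's strict '>' into '>='.
    """
    return tuple(threshold_gate(bits, PLACE_VALUES, ref - 0.5) for ref in references)
```

For instance, `compare((1, 0, 1))` (the number 5) returns `(1, 1, 1, 0)`: at least 1, 3 and 5, but less than 7.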

For the primary demonstration on their setup, the authors had their linear threshold circuit play a computer game that tests memory. They used their approach to construct a four-neuron Hopfield network, where all the neurons are connected to the others and, after training (tuning the weights and thresholds), patterns can be stored or remembered. The memory game consists of three steps: 1) the human chooses a scientist from four options (in this case, Rosalind Franklin, Alan Turing, Claude Shannon, and Santiago Ramon y Cajal); 2) the human “tells” the memory network the answers to one or more of four yes/no (binary) questions used to identify the scientist (such as, “Did the scientist study neural networks?” or "Was the scientist British?"); and 3) after eight hours of thinking, the DNA memory guesses the answer and reports it through fluorescent signals.
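For readers who want to see the recall step in conventional code, here is a textbook Hopfield network in Python: Hebbian weights, answers coded as +1 (yes), -1 (no) and 0 (not given), known answers held fixed, and unknown neurons updated one at a time until nothing changes. This is an assumption-laden stand-in: the two stored 4-bit patterns are made up, and the DNA network used its own trained weights and thresholds rather than this Hebbian rule.

```python
def train_hebbian(patterns):
    """Hebbian weights for +1/-1 patterns, with no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def recall(w, cue, max_sweeps=10):
    """Complete a partial cue (+1 yes, -1 no, 0 unknown).

    Known answers are clamped; unknown neurons are updated asynchronously with a
    sign threshold. A neuron whose input sums to zero keeps its current state,
    so it can stay 'unsure' (0) when the evidence is balanced.
    """
    state = list(cue)
    free = [i for i, v in enumerate(cue) if v == 0]
    for _ in range(max_sweeps):
        changed = False
        for i in free:
            h = sum(wij * sj for wij, sj in zip(w[i], state))
            if h > 0:
                new = 1
            elif h < 0:
                new = -1
            else:
                new = state[i]
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:
            break
    return state


# Two hypothetical stored "answer sheets" (not the paper's scientists).
PATTERNS = [[1, 1, -1, -1], [1, -1, 1, -1]]
```

Given `w = train_hebbian(PATTERNS)`, the cue `[1, 1, 0, 0]` (two answers given) is completed to `[1, 1, -1, -1]`, while an empty cue `[0, 0, 0, 0]` stays undecided.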

They played this game 27 times, out of a total of 81 possible question/answer combinations (3^4). You may be wondering why there are three options for a yes/no question: the state of each answer is actually stored using two bits, so that the neuron can be unsure about an answer (one that the human hasn't provided, for example) by using a third state. Out of the 27 experimental cases, the neural network correctly guessed all but six, and these were all cases where two or more answers were not given.
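The 81 comes from four questions with three possible states each: 3 × 3 × 3 × 3 = 3^4 = 81. The Python sketch below enumerates those cues and packs each answer into two bits. The specific bit assignment (yes = 10, no = 01, not given = 00) is an assumption for illustration; the article only says two bits are used.

```python
from itertools import product

# Hypothetical two-bit code for a three-state answer (the exact assignment is assumed).
ENCODING = {"yes": (1, 0), "no": (0, 1), "unknown": (0, 0)}


def all_cues(num_questions=4):
    """Every combination of yes / no / unknown across the questions."""
    return list(product(ENCODING, repeat=num_questions))


def encode(cue):
    """Flatten a cue into the two-bits-per-answer representation."""
    bits = []
    for answer in cue:
        bits.extend(ENCODING[answer])
    return tuple(bits)
```

`len(all_cues())` is 81, and `encode(("yes", "no", "unknown", "unknown"))` yields the eight bits `(1, 0, 0, 1, 0, 0, 0, 0)`.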

In the best cases, the neural network was able to correctly guess with only one answer and, in general, it was successful when two or more answers were given. Like the human brain, this network was able to recall memory using incomplete information (and, as with humans, that may have been a lucky guess). The network was also able to determine when inconsistent answers were given (i.e. answers that don’t match any of the scientists).

These results are exciting: simulating the brain using biological computing. Unlike traditional electronics, DNA computing components can easily interact and cooperate with our bodies or other cells. Who doesn’t dream of being able to download information into their brain (or, in this case, anywhere in their body)? Even the authors admit that it’s difficult to predict how this approach might scale up, but I would expect to see a larger demonstration from this group or another in the near future.

Help CERN in the hunt for the Higgs Boson

Via bit-tech

-----

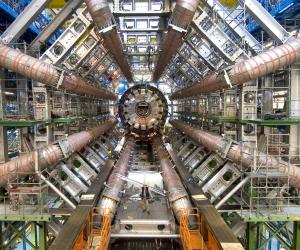

The Citizen Cyberscience Centre, based at CERN, launched a new version of LHC@home today.

You've probably heard of Folding@Home or Seti@Home - both are distributed computing programs designed to harness the power of your average home PC to number crunch data, aiding real scientific research.

LHC@home, as science fans will probably have already guessed, is a similar venture for the Large Hadron Collider (LHC). The latest version of the Citizen Cyberscience Centre's software, called LHC@home 2.0, simulates collisions between two beams of protons travelling at close to the speed of light in the LHC.

Professor Dave Britton of the University of Glasgow is Project Leader of the GridPP project that provides Grid computing for particle physics throughout the UK. He is a member of the ATLAS collaboration, one of the experiments at the LHC and had this to say about LHC@home 2.0 and the Citizen Cyberscience Centre:

'Scientists like me are trying to answer fundamental questions about the structure and origin of the Universe. Through the Citizen Cyberscience Centre and its volunteers around the world, the Grid computing tools and techniques that I use every day are available to scientists in developing countries, giving them access to the latest computing technology and the ability to solve the problems that they are facing, such as providing clean water.

Whether you’re interested in finding the Higgs boson, playing a part in humanitarian aid or advancing knowledge in developing countries, this is a great project to get involved with.'

You can check out the LHC@home home page here. Have you taken part in Seti or Folding? Maybe you're already in the hunt for the Higgs Boson?

Wednesday, August 03. 2011

WIMM Wearable Platform

Via SlashGear

-----

Wearable sub-displays keep coming around, and WIMM Labs is the latest company to try its hand at the segment. Its WIMM Wearable Platform (a 1.4-inch color touchscreen, scaled to be wearable on your wrist, paired with WiFi, Bluetooth and various sensors, and running Android-based “Micro Apps”) obviously stood out from the crowd: it caught the attention of Foxconn and secured two rounds of financing from the huge manufacturing/ODM company. We caught up with the WIMM Labs team to check out the Wearable Platform and find out whether it stood more chance of success than, say, Sony Ericsson’s LiveView.

The basic premise of the Wearable Platform isn’t new. People carry their phones and tablets in pockets and bags, not in their hand, and so when nuggets of information, alerts and updates come in, they need to be taken out in order to check them. A wearable display – strapped to your wrist, hanging on a lanyard from your belt or bag, or otherwise attached to you – means at-a-glance review, allowing you to decide whether you need to fish out your phone or if it can wait until later.

WIMM’s One developer kit hardware is a step ahead of some systems we’ve already seen. The 1.4-inch capacitive touch display operates in two modes: as a regular color screen, or as a backlight-free transflective display that stays readable (albeit in monochrome) while extending battery life. This passive display mode works in tandem with a 667MHz Samsung microcontroller, WiFi, Bluetooth, GPS, an accelerometer and a digital compass, all contained in a waterproof casing (made of either plastic or a new wireless-compatible ceramic) measuring 32 x 36 x 12.5 mm and weighing 22g.

WIMM says that casing should be standardized so that units from different makers (the Wearable Platform will be licensed out to third-party companies) will hopefully be interchangeable, using the same mounting pins. That way, if you buy a new model from another firm, it should snap into your existing watch strap. Up to 32GB of memory is supported, and the One can notify you with audio or tactile alerts. Battery life is said to be at least a full day, and the unit recharges using a magnetic charger (which plugs in via microUSB) or a collapsible desk-stand with an integrated battery good for up to four recharge cycles, useful if you’re on the road.

Control is via a series of taps, swipes and physical gestures (the sensors allow the Wearable Platform to react to movement), including a two-finger swipe to pull down the watch face. WIMM will preload a handful of apps: the multifunction clock, a real-time information viewer showing weather and the like, and a cellphone companion that can show incoming caller ID, preview new SMS messages, and help you find a lost phone. Pairing can be either via WiFi or Bluetooth, and the micro-display will work with Android, iOS and BlackBerry devices. Apps are selected from a side-scrolling carousel, loaded by flicking them up toward the top of the screen, and closed by flicking them down.

However, the preloaded apps are only there to get you interested: the real action, WIMM expects, will be in the Micro App store. The Android-based SDK means there are few hurdles for existing Android developers to get up to speed, and licensees of the Wearable Platform will be able to roll out their own app stores, based on WIMM’s white-label service, picking and choosing which titles they want to include. The company is initially targeting performance sport and medical applications, with potential apps including exercise trackers, heart rate monitors and medication reminder services.

A DIY UAV That Hacks Wi-Fi Networks, Cracks Passwords, and Poses as a Cell Phone Tower

Via POPSCI

By Clay Dillow

-----

Just a Boy and His Cell-Snooping, Password-Cracking, Hacktastic Homemade Spy Drone via Rabbit-Hole

Last year at the Black Hat and Defcon security conferences in Las Vegas, a former Air Force cyber security contractor and a former Air Force engineering systems consultant displayed their 14-pound, six-foot-long unmanned aerial vehicle, WASP (Wireless Aerial Surveillance Platform). Last year it was a work in progress, but next week when they unveil an updated WASP they’ll be showing off a functioning homemade spy drone that can sniff out Wi-Fi networks, autonomously crack passwords, and even eavesdrop on your cell phone calls by posing as a cell tower.

WASP is built from a retired Army target drone, and creators Mike Tassey and Richard Perkins have crammed all kinds of technology aboard, including an HD camera, a small Linux computer packed with a 340-million-word dictionary for brute-forcing passwords as well as other network hacking implements, and eleven different antennae. Oh, and it’s autonomous; it requires human guidance for takeoff and landing, but once airborne WASP can fly a pre-set route, looping around an area looking for poorly defended data.

And on top of that, the duo has taught their WASP a new way to surreptitiously gather intel from the ground: pose as a GSM cell phone tower to trick phones into connecting through WASP rather than their carriers--a trick Tassey and Perkins learned from another security hacker at Defcon last year.

Tassey and Perkins say they built WASP to show just how easy it is, and just how vulnerable you are. “We wanted to bring to light how far the consumer industry has progressed, to the point where public has access to technologies that put companies, and even governments at risk from this new threat vector that they’re not aware of,” Perkins told Forbes.

Consider yourself warned. For details on the WASP design--including pointers on building your own--check out Tassey and Perkins site here.

Monday, August 01. 2011

Switched On: Desktop divergence

Via Engadget

By Ross Rubin

-----

Last week's Switched On discussed how Lion's feature set could be perceived differently by new users or those coming from an iPad versus those who have used Macs for some time, while a previous Switched On discussed how Microsoft is preparing for a similar transition in Windows 8. Both OS X Lion and Windows 8 seek to mix elements of a tablet UI with elements of a desktop UI or -- putting it another way -- a finger-friendly touch interface with a mouse-driven interface. If Apple and Microsoft could wave a wand and magically have all apps adopt overnight so they could leave a keyboard and mouse behind, they probably would. Since they can't, though, inconsistency prevails.

Yet, while the OS X-iOS mashup that is Lion exhibits its share of growing pains, the fall-off effect isn't as pronounced as it appears it will be for Windows 8. The main reasons for this are, in order of increasing importance, legacy, hardware, and Metro.

Legacy. Microsoft has an incredibly strong commitment to backward compatibility. As long as Microsoft supports older Windows apps (which will be well into the future), there will be a more pronounced gap between that old user interface and the new. This will likely become more of a difference between Microsoft and Apple over time. For now, however, Apple is also treading lightly, and several of Lion's user interface changes -- including "natural" scrolling directions, Dashboard as a space, and the hiding of the hard drive on the desktop -- can be reversed. Even some of Lion's "full-screen" apps are only a cursor movement away from revealing their menus.

Hardware. As Apple continues to keep touchscreens off the Mac, it brings over the look but not the input experience of iPad apps, relying instead on the precision of a mouse or trackpad. Therefore, these Mac apps do not have to embrace finger-friendliness. In contrast, the "tablet" UI of Windows 8 is designed for fingertips and therefore demands a cleaner break with an interface designed for mice (although Microsoft preserves pointer control as well so these apps can be used on PCs without touchscreens).

Metro. A late entrant to the gesture-driven touchscreen handset wars, Microsoft sought to differentiate Windows Phone 7 with its panoramic user interface. When Joe Belfiore introduced Windows Phone 7 at Mobile World Congress in 2010, he repeatedly noted that "the phone is not a PC." That's an accurate assessment, and perhaps one worth repeating in light of all the feedback Microsoft ignored over the years in the design of Pocket PC and Windows Mobile. It also, of course, holds true beyond the user interface, in designing around context and supporting location-based services.

But now that the folks in Redmond have created an enjoyable phone interface, have things actually changed? Was it true only that the phone and PC should not have the same old Windows interface, or is it also still true that the PC and phone should not have the same new Windows Phone interface? Was it the nature of the user interface itself that was at fault, or the notion of the same user interface across PC and phone, regardless of how good it is?

There is certainly room for more consistency across PCs, tablets and handsets. However, Microsoft did not just differentiate Windows Phone 7 from iOS and Android, it differentiated it from Windows as well. And that is the main reason why the shift in context between a classic Windows app and a "tablet" Windows 8 app seems more striking at this point than the difference between a classic Mac app and "full screen" Lion app.

Lion's full-screen apps could be the new point of crossover with Windows 8's "tablet" user interface mode. Based on what we've seen on the handset side, it is certainly possible for developers to write the same apps for the iPhone and Windows Phone 7, but these are generally simpler apps (and then there are games, which generally ignore most user interface conventions anyway).

Apple and Microsoft are both clearly striving for a simpler user experience, but Microsoft is also trying to adapt its desktop OS to a new form factor in the process of doing so. The balancing act for both companies will be making their new combinations of software and hardware (from partners in the case of Microsoft) embrace a new generation of users while minimizing alienation for the existing one.

-----

Personal comments:

See also this article

World's first 'printed' plane snaps together and flies

Via CNET

By Eric Mach

-----

English engineers have produced what is believed to be the world's first printed plane. I'm not talking a nice artsy lithograph of the Wright Bros.' first flight. This is a complete, flyable aircraft spit out of a 3D printer.

The SULSA began life in something like an inkjet and wound up in the air. (Credit: University of Southampton)

The SULSA (Southampton University Laser Sintered Aircraft) is an unmanned air vehicle that emerged, layer by layer, from a nylon laser sintering machine that can fabricate plastic or metal objects. In the case of the SULSA, the wings, access hatches, and the rest of the structure of the plane were all printed.

As if that weren't awesome enough, the entire thing snaps together in minutes, no tools or fasteners required. The electric plane has a wingspan of just under 7 feet and a top speed of 100 mph.

Jim Scanlon, one of the project leads at the University of Southampton, explains in a statement that the technology allows products to go from conception to reality more quickly and cheaply.

"The flexibility of the laser-sintering process allows the design team to revisit historical techniques and ideas that would have been prohibitively expensive using conventional manufacturing," Scanlon says. "One of these ideas involves the use of a Geodetic structure... This form of structure is very stiff and lightweight, but very complex. If it was manufactured conventionally it would require a large number of individually tailored parts that would have to be bonded or fastened at great expense."

So apparently when it comes to 3D printing, the sky is no longer the limit. Let's just make sure someone double-checks the toner levels before we start printing the next international space station.

-----

Personal comments:

Industrial production tools back to people?

Fab@Home project

Adobe dives into HTML with new Edge software

Via CNET

By Stephen Shankland

-----

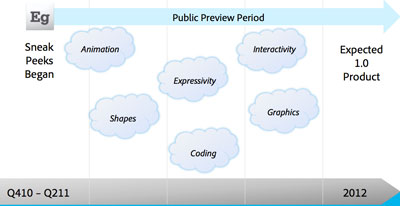

Adobe Systems has dipped its toes in the HTML5 pool, but starting today it's taking the plunge with the public preview release of software called Edge.

For years, the company's answer to doing fancy things on the Web was Flash Player, a browser plug-in installed nearly universally on computers for its ability to play animated games, stream video, and level the differences among browsers.

But allies including Opera, Mozilla, Apple, Google, and eventually even Microsoft began to advance what could be done with Web standards. The three big ones here are HTML (Hypertext Markup Language for describing Web pages), CSS (Cascading Style Sheets for formatting and now animation effects), and JavaScript (the programming language used for Web apps).

Notably, these new standards worked on new smartphones when Flash either wasn't available, ran sluggishly, or was barred outright in the case of iPhones and iPads. Adobe is working hard to keep Flash relevant for three big areas--gaming, advanced online video, and business apps--but with Edge, it's got a better answer to critics who say Adobe is living in the past.

"What we've seen happening is HTML is getting much richer. We're seeing more workflow previously reserved for Flash being done with Web standards," said Devin Fernandez, product manager of Adobe's Web Pro group.

A look at the Adobe Edge interface. (Credit: Adobe Systems)

The public preview release is just the beginning for Edge. It lets people add animation effects to Web pages, chiefly with CSS controlled by JavaScript. For example, when a person loads a Web page designed with Edge, text and graphics elements gradually slide into view.

All of this can be done today by someone with programming experience, but Adobe aims to make it easier for the design crowd, who are used to controlling how events unfold with a timeline that triggers various actions.
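The timeline idea can be illustrated with a short sketch. It is hypothetical: the `Tween` class and `sample` function below are not Edge's API or file format, just a minimal Python model that assumes one linear change per element property. Sampling the timeline at a given moment yields the property values a script would then write into each element's CSS, which is one way a "slide into view" effect can be driven.

```python
from dataclasses import dataclass


@dataclass
class Tween:
    """One animated property change on a timeline (hypothetical model, not Edge's format)."""
    element: str
    prop: str
    start: float       # timeline time, in seconds, when the change begins
    duration: float    # how many seconds the change takes
    from_value: float
    to_value: float

    def value_at(self, t: float) -> float:
        # Before the tween starts, hold the starting value.
        if t <= self.start:
            return self.from_value
        # After the tween ends, hold the final value.
        if t >= self.start + self.duration:
            return self.to_value
        # In between, interpolate linearly.
        progress = (t - self.start) / self.duration
        return self.from_value + (self.to_value - self.from_value) * progress


def sample(timeline: list[Tween], t: float) -> dict[tuple[str, str], float]:
    """Value of every animated (element, property) pair at time t.

    Assumes at most one tween per (element, property) pair.
    """
    return {(tw.element, tw.prop): tw.value_at(t) for tw in timeline}


timeline = [
    Tween("headline", "left", 0.0, 1.0, -300.0, 0.0),  # slide in from the left
    Tween("logo", "opacity", 0.5, 1.0, 0.0, 1.0),      # fade in, starting half a second later
]
print(sample(timeline, 0.5))
```

At the half-second mark the headline is halfway along its slide (`left` is -150) while the logo has not yet begun to fade in. A real tool would add easing curves and event triggers, but the timeline-to-property-values step is the core of the approach.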

As new versions arrive, more features will be added, and Adobe plans to begin selling the finished version of Edge in 2012.

"[For] the first public preview release, we focused on animation," Fernandez said. "Over the public preview period, we'll be adding additional functionality. We'll be incorporating feedback from the community, taking those requests into account."

The Edge preview product now is available at the Adobe Labs site. Adobe showed an early look at Edge in June.

What exactly is next in the pipeline? Adobe has a number of features in mind, including the addition of video and audio elements alongside the SVG, PNG, GIF, and JPEG graphics it can handle now.

Some of the features Adobe has in mind before a planned 2012 release of Edge. (Credit: Adobe Systems)

Other items on the to-do list:

• More shapes than just rectangles and rounded-corner rectangles.

• Actions that are triggered by events.

• Support for Canvas, an HTML5 standard for a 2D drawing surface for graphics, in particular combined with SVG animation. (Note that Adobe began the SVG effort before it acquired Macromedia, whose Flash technology was a rival to SVG.)

"We still have a lot of features we have not implemented," said Mark Anders, an Adobe fellow working on Edge.

The software integrates with Dreamweaver, Adobe's Web design software package, or other Web tools. It integrates its actions with the Web page so that Edge designers can marry their additions with the other programming work.

The software itself has a WebKit-based browser whose window is prominent in the center of the user interface. A timeline below lets designers set events, copy and paste effects to different objects, and make other scheduling changes.

Adobe, probably not happy with being a punching bag for Apple fans who disliked Flash, seems eager to be able to show off Edge to counter critics' complaints. The company will have to overcome skepticism and educate the market that it's serious, but real software beats keynote comments any day.

Anders, who before Edge worked on Flash programming tools and led work on Microsoft's .Net Framework, is embracing the new ethos.

"In the last 15 years, if you looked at a Web page and saw this, you would say that is Flash because Flash is the only thing that can do that. That is not true today," Anders said. "You can use HTML and the new capabilities of CSS to do this really amazing stuff."

How an argument with Hawking suggested the Universe is a hologram

The proponents of string theory seem to think they can provide a more elegant description of the Universe by adding additional dimensions. But some other theoreticians think they've found a way to view the Universe as having one less dimension. The work sprang out of a long argument with Stephen Hawking about the nature of black holes, which was eventually resolved by the realization that the event horizon could act as a hologram, preserving information about the material that's been sucked inside. The same sort of math, it turns out, can describe any part of the Universe, meaning that the entire content of the Universe can be viewed as a giant hologram, one that resides on the surface of whatever two-dimensional shape encloses it.

That was the premise of a panel at this summer's World Science Festival, which described how the idea developed, how it might apply to the Universe as a whole, and how the panelists were involved in its development.

The whole argument started when Stephen Hawking attempted to describe what happens to matter during its lifetime in a black hole. He suggested that, from the perspective of quantum mechanics, the information about the quantum state of a particle that enters a black hole goes with it. This isn't a problem until the black hole starts to boil away through what's now called Hawking radiation, which creates a separate particle outside the event horizon while destroying one inside. This process ensures that the matter that escapes the black hole has no connection to the quantum state of the material that had been sucked in. As a result, information is destroyed. And that causes a problem, as the panel described.

As far as quantum mechanics is concerned, information about states is never destroyed. This isn't just an observation; according to panelist Leonard Susskind, destroying information creates paradoxes that, although apparently minor, will gradually propagate and eventually cause inconsistencies in just about everything we think we understand. As he put it, "all we know about physics would fall apart if information is lost."

Unfortunately, that's precisely what Hawking suggested was happening. "Hawking used quantum theory to derive a result that was at odds with quantum theory," as Nobel laureate Gerard 't Hooft described the situation. Still, that wasn't all bad; it created a paradox, and "Paradoxes make physicists happy."

"It was very hard to see what was wrong with what he was saying," Susskind said, "and even harder to get Hawking to see what was wrong."

The arguments apparently got very heated. Herman Verlinde, another physicist on the panel, described how there would often be silences when it was clear that Hawking had some thoughts on whatever was under discussion; these often ended when Hawking said "rubbish." "When Hawking says 'rubbish,'" he said, "you've lost the argument."

't Hooft described how the disagreement was eventually worked out. It's possible, he said, to figure out how much information has been drawn into the black hole. Once you do that, you can see that the total amount is related to the surface area of the event horizon, which suggested where the information could be stored. But since the event horizon is a two-dimensional surface, the information couldn't be stored in regular matter; instead, the event horizon forms a hologram that holds the information as matter passes through it. When that matter passes back out as Hawking radiation, the information is restored.

Susskind described just how counterintuitive this is. The holograms we're familiar with store an interference pattern that only becomes information we can interpret once light passes through them. On a micro-scale, related bits of information may be scattered far apart, and it's impossible to figure out which bit encodes what. And, when it comes to the event horizon, the bits are vanishingly small, on the level of the Planck scale (1.6 × 10⁻³⁵ meters). These bits are so small, as 't Hooft noted, that you can store a staggering amount of information in a reasonable amount of space, enough to describe all the information that's been sucked into a black hole.

The price, as Susskind noted, was that the information is "hopelessly scrambled" when you do so.
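To give a sense of the numbers involved, the panel's area argument corresponds to the standard Bekenstein-Hawking result: a horizon of area A can hold A / (4 l_p²) nats of information, where l_p is the Planck length. The Python sketch below is a back-of-the-envelope illustration, not a calculation the panel presented; it assumes a non-rotating (Schwarzschild) black hole and uses rounded SI constants.

```python
import math

# Physical constants in SI units (rounded).
G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8         # speed of light, m/s
HBAR = 1.055e-34    # reduced Planck constant, J s
M_SUN = 1.989e30    # one solar mass, kg

# Planck length, sqrt(hbar * G / c^3): roughly 1.6e-35 m.
PLANCK_LENGTH = math.sqrt(HBAR * G / C**3)


def horizon_area(mass_kg: float) -> float:
    """Event-horizon area of a non-rotating black hole, in m^2."""
    r_s = 2 * G * mass_kg / C**2     # Schwarzschild radius
    return 4 * math.pi * r_s**2


def horizon_bits(mass_kg: float) -> float:
    """Bekenstein-Hawking information capacity A / (4 l_p^2), converted from nats to bits."""
    nats = horizon_area(mass_kg) / (4 * PLANCK_LENGTH**2)
    return nats / math.log(2)


print(f"Planck length: {PLANCK_LENGTH:.3e} m")
print(f"Capacity of a one-solar-mass horizon: {horizon_bits(M_SUN):.2e} bits")
```

For a black hole of one solar mass the horizon comes out to a few kilometers in radius, yet it can hold on the order of 10⁷⁷ bits. Because the capacity tracks area, doubling the mass quadruples it.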

From a black hole to the Universe

Berkeley's Raphael Bousso was on hand to describe how these ideas were extended to encompass the Universe as a whole. As he put it, the math that describes how much information a surface can store works just as well if you get rid of the black hole and event horizon. (This shouldn't be a huge surprise, given that most of the Universe is far less dense than the region inside a black hole.) Any surface that encloses a region of space in this Universe has sufficient capacity to describe its contents. The math, he said, works so well that "it seems like a conspiracy."

To him, at least. Verlinde pointed out that things in the Universe scale with volume, so it's counterintuitive that their representation should scale with surface area. That counterintuitiveness, he thinks, is one of the reasons the idea has had a hard time gaining acceptance.
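The mismatch Verlinde describes can be made concrete with a toy count in Planck units. Purely for illustration, the sketch below assumes one degree of freedom per Planck-sized unit of volume (a naive assumption, not a claim from the panel). It compares that count with the area bound A / 4 (in nats, with lengths measured in Planck lengths) for a sphere of radius R. The function names are hypothetical.

```python
import math


def volume_cells(radius: float) -> float:
    """Naive count: one degree of freedom per Planck volume inside a sphere.

    The radius is given in Planck lengths.
    """
    return (4 / 3) * math.pi * radius**3


def surface_bound(radius: float) -> float:
    """Holographic bound for the same sphere: area / 4 in Planck units, in nats."""
    return 4 * math.pi * radius**2 / 4


for r in (10, 100, 1000):
    ratio = volume_cells(r) / surface_bound(r)
    print(f"R = {r:>5} Planck lengths: volume count / surface bound = {ratio:.1f}")
```

The ratio works out to 4R/3, so the naive volume count outgrows the surface bound in direct proportion to the radius. If the holographic picture is right, a region's contents can never use anything like one degree of freedom per Planck volume, and that is why area scaling feels so strange.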

When it comes to the basic idea, that the Universe can be described using a hologram, the panel was pretty much unanimous, and Susskind clearly felt there was a consensus in its favor. But, he noted, as soon as you step beyond the basics, everybody has their own ideas, and those started coming out as the panel went along. Bousso, for example, felt that the holographic principle was "your ticket to quantum gravity." Objects are all attracted via gravity in the same way, he said, and the holographic principle might provide an avenue for understanding why (if he had an idea about how, though, he didn't share it with the audience). Verlinde seemed to agree, suggesting that, when you get to objects that are close to the Planck scale, gravity is simply an emergent property.

But 't Hooft seemed to be hoping that the holographic principle could solve a lot more than the quantum nature of gravity; to him, it suggested there might be something underlying quantum mechanics. He found the holographic principle a bit of an enigma, since disturbances happen in three dimensions but propagate to a scrambled two-dimensional representation, all while obeying the Universe's speed limit (that of light). This suggests to him that there's something underneath it all, and he'd like it to be something a bit more causal than the probabilistic world of quantum mechanics; he's hoping that a deterministic world exists somewhere near the Planck scale. Nobody else on the panel seemed all that excited about the prospect, though.

What was missing from the discussion was any attempt to tackle one of the issues that plague string theory: the math may all work out, and it could provide a convenient way of looking at the world, but is it actually related to anything in the physical Universe? Nobody even tried to address that question. Still, the panel did a good job of describing how something that started as an attempt to handle a special case (the loss of matter into a black hole) could provide a new way of looking at the Universe. And, in the process, how people could eventually convince Stephen Hawking he got one wrong.
