Entries tagged as software

Tuesday, August 30. 2011

Automatic spelling corrections on Github

By Holden Karau

-----

English has never been one of my strong points (as is fairly obvious from reading my blog), so my latest side project might surprise you a bit. Inspired by the results of Tarsnap’s bug bounty and the first pull request I received for a new project (slashem, a type-safe Rogue-like DSL for querying Solr in Scala), I decided to write a bot for GitHub to fix spelling mistakes.

The code itself is very simple (albeit not very good; it was written after I got back from clubbing at JWZ’s club, DNA Lounge). There is something about a lack of sleep that makes Perl code and regexes seem like a good idea. If, despite the previous warnings, you still want to look at the code, https://github.com/holdenk/holdensmagicalunicorn is the place to go. It works by doing a GitHub search for all the README files in Markdown format and then running a limited spell checker on them. Documents with a known misspelled word are flagged and output to a file. Thanks to the wonderful GitHub API, the next steps are easy: it forks the repo, clones it locally, performs the spelling correction, commits, pushes, and submits a pull request.
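The flagging step described above can be sketched in a few lines of Python. This is not the bot's Perl code: the word list is a small hypothetical sample, and the `readmes` dictionary stands in for the README files that the real bot fetched through a GitHub search.

```python
import re

# Hypothetical sample of known misspellings. The real bot drew on
# Pod::Spell::CommonMistakes; this set is only an illustration.
KNOWN_MISSPELLINGS = {"teh", "recieve", "seperate", "occured"}

# Words are runs of letters (apostrophes allowed, e.g. "don't").
WORD_RE = re.compile(r"[A-Za-z']+")


def find_misspellings(text):
    """Return the known misspellings in text, lowercased and sorted."""
    words = {w.lower() for w in WORD_RE.findall(text)}
    return sorted(words & KNOWN_MISSPELLINGS)


def flag_documents(readmes):
    """Map document name -> misspellings found, keeping only flagged documents."""
    flagged = {}
    for name, text in readmes.items():
        hits = find_misspellings(text)
        if hits:
            flagged[name] = hits
    return flagged
```

Writing the flagged entries to a file, and the fork, clone, commit, and pull-request steps, would be separate code built on the GitHub API, which this sketch leaves out.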

The spelling correction is based on Pod::Spell::CommonMistakes; it works using a very restricted mapping of misspelled words to corrections.
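A correction pass built on a fixed misspelling-to-correction table might look like the following minimal Python sketch. The `CORRECTIONS` entries are hypothetical examples in the spirit of Pod::Spell::CommonMistakes, not that module's actual list, and the rule that preserves a leading capital is an assumption added for illustration.

```python
import re

# Hypothetical subset of a misspelling -> correction table
# (not the actual contents of Pod::Spell::CommonMistakes).
CORRECTIONS = {
    "teh": "the",
    "recieve": "receive",
    "seperate": "separate",
    "occured": "occurred",
}

# One alternation over the whole table, anchored on word boundaries so that
# a misspelling embedded in a longer word is left alone.
PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, CORRECTIONS)) + r")\b", re.IGNORECASE
)


def _fix(match):
    word = match.group(0)
    fixed = CORRECTIONS[word.lower()]
    # Preserve a leading capital, e.g. "Teh" -> "The".
    if word[0].isupper():
        fixed = fixed[0].upper() + fixed[1:]
    return fixed


def correct(text):
    """Return (corrected_text, number_of_replacements)."""
    return PATTERN.subn(_fix, text)
```

Keeping the table small and exact-match only is what makes such a bot safe to run unattended: it never guesses, so a false correction requires a word that is genuinely in the table.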

Writing a “future directions” section always seems like such a cliché, but here it is anyway. The code as it stands is really simple: for example, it only handles one repo of a given name, and the dictionary is small. The next version should probably also try to submit corrections only against the canonical repo. Future plans include extending the dictionary. In the longer term, I think it would be awesome to attempt to detect really simple bugs in actual code (things like memcpy(dest,0,0)).
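As a rough illustration of the kind of "really simple bug" detector imagined here, the sketch below flags `memcpy` calls whose length argument is a literal `0`, which copy nothing. It is a hypothetical regex heuristic, not part of the bot; a real checker would need an actual C parser.

```python
import re

# Matches memcpy(a, b, 0) with simple arguments. Heuristic only: arguments
# containing parentheses or commas (nested calls, casts) are not handled.
MEMCPY_ZERO = re.compile(r"\bmemcpy\s*\(\s*[^,()]+,\s*[^,()]+,\s*0\s*\)")


def find_zero_length_memcpy(source):
    """Return the 1-based line numbers of memcpy calls with a literal size of 0."""
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if MEMCPY_ZERO.search(line)
    ]
```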

You can follow the bot on Twitter: holdensunicorn.

Comments, suggestions, and patches are always appreciated. -holdenkarau (although I’m going to be AFK at Burning Man for a while, you can find me @ 6:30 & D)

Wednesday, August 24. 2011

Google TV add-on for Android SDK gives developers a path to the big screen

Via ars technica

-----

At the Google I/O conference earlier this year, Google revealed that the Android Market would come to the Google TV set-top platform. Some evidence of the Honeycomb-based Google TV refresh surfaced in June when screenshots from developer hardware were leaked. Google TV development is now being opened to a broader audience.

In a post on the official Google TV blog, the search giant has announced the availability of a Google TV add-on for the Android SDK. The add-on is an early preview that will give third-party developers an opportunity to start porting their applications to Google TV.

The SDK add-on will currently only work on Linux desktop systems because it relies on Linux's native KVM virtualization system to provide a Google TV emulator. Google says that other environments will be supported in the future. Unlike the conventional phone and tablet versions of Android, which are largely designed to run on ARM devices, the Google TV reference hardware uses x86 hardware. The architecture difference might account for the lack of support in Android's traditional emulator.

We are planning to put the SDK add-on to the test later this week so we can report some hands-on findings. We suspect that the KVM-based emulator will offer better performance than the conventional Honeycomb emulator that Google's SDK currently provides for tablet development.

In addition to the SDK add-on, Google has also published a detailed user interface design guideline document that offers insight into best practices for building a 10-foot interface that will work well on Google TV hardware. The document addresses a wide range of issues, including D-pad navigation and television color variance.

The first iteration of Google TV flopped in the market and didn't see much consumer adoption. Introducing support for third-party applications could make Google TV significantly more compelling to consumers. The ability to trivially run applications like Plex could make Google TV a lot more useful. It's also worth noting that Android's recently added support for game controllers and other similar input devices could make Google TV hardware serve as a casual gaming console.

Tuesday, August 09. 2011

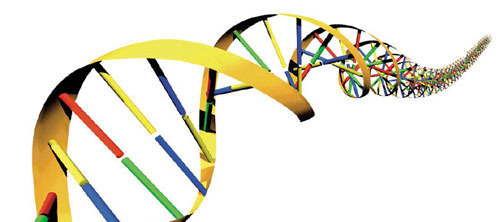

DNA circuits used to make neural network, store memories

Via ars technica

By Kyle Niemeyer

-----

Even as some scientists and engineers develop improved versions of current computing technology, others are looking into drastically different approaches. DNA computing offers the potential of massively parallel calculations with low power consumption and at small sizes. Research in this area has been limited to relatively small systems, but a group from Caltech recently constructed DNA logic gates using over 130 different molecules and used the system to calculate the square roots of numbers. Now, the same group published a paper in Nature that shows an artificial neural network, consisting of four neurons, created using the same DNA circuits.

The artificial neural network approach taken here is based on the perceptron model, also known as a linear threshold gate. This models the neuron as having many inputs, each with its own weight (or significance). The neuron is fired (or the gate is turned on) when the sum of each input times its weight exceeds a set threshold. These gates can be used to construct compact Boolean logical circuits, and other circuits can be constructed to store memory.
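Written out, the linear threshold rule described above, with inputs $x_i$, weights $w_i$, and threshold $\theta$ (symbols introduced here for illustration), is:

$$
y = \begin{cases} 1 & \text{if } \sum_{i=1}^{n} w_i x_i > \theta \\ 0 & \text{otherwise} \end{cases}
$$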

As we described in the last article on this approach to DNA computing, the authors represent their implementation with an abstraction called "seesaw" gates. This allows them to design circuits where each element is composed of two base-paired DNA strands, and the interactions between circuit elements occur as new combinations of DNA strands pair up. The ability of strands to displace each other at a gate (based on things like concentration) creates the seesaw effect that gives the system its name.

In order to construct a linear threshold gate, three basic seesaw gates are needed to perform different operations. Multiplying gates combine a signal and a set weight in a seesaw reaction that uses up fuel molecules as it converts the input signal into output signal. Integrating gates combine multiple inputs into a single summed output, while thresholding gates (which also require fuel) send an output signal only if the input exceeds a designated threshold value. Results are read using reporter gates that fluoresce when given a certain input signal.
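Ignoring the chemistry (fuel strands, concentrations, reporter fluorescence), the division of labor among the three gate types can be sketched as plain functions. This Python model is an abstraction for illustration only; the function names are hypothetical and nothing here reflects the actual seesaw reaction kinetics.

```python
def multiply(signal, weight):
    # Multiplying gate: scales an input signal by a fixed weight.
    return signal * weight


def integrate(signals):
    # Integrating gate: combines several weighted signals into one sum.
    return sum(signals)


def threshold(signal, theta):
    # Thresholding gate: emits 1 only if the summed input exceeds theta.
    return 1 if signal > theta else 0


def linear_threshold_gate(inputs, weights, theta):
    # One multiplying gate per input, then integrate, then threshold.
    weighted = [multiply(x, w) for x, w in zip(inputs, weights)]
    return threshold(integrate(weighted), theta)
```

For example, inputs `[1, 0, 1]` with weights `[2, 3, 1]` sum to 3, so the gate fires for a threshold of 2.5 but not for a threshold of 3.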

To test their designs with a simple configuration, the authors first constructed a single linear threshold circuit with three inputs and four outputs—it compared the value of a three-bit binary number to four numbers. The circuit output the correct answer in each case.
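A three-bit comparator fits the linear threshold model naturally: weighting the bits by their place values 4, 2, and 1 makes the weighted sum equal to the number itself, so each output is a single threshold gate. The article does not say which four numbers the circuit compared against, so `COMPARISONS` below is a hypothetical choice, as is the strict "greater than" test.

```python
# Place values of a 3-bit number b2 b1 b0.
WEIGHTS = (4, 2, 1)

# Hypothetical comparison values; the actual circuit's numbers are not given.
COMPARISONS = (1, 3, 5, 6)


def bits(n):
    """3-bit binary digits of n, most significant first."""
    return ((n >> 2) & 1, (n >> 1) & 1, n & 1)


def greater_than(n, k):
    """One linear threshold gate: fires iff 4*b2 + 2*b1 + b0 > k."""
    return int(sum(w * b for w, b in zip(WEIGHTS, bits(n))) > k)


def comparator(n):
    """Three inputs (the bits of n), four outputs (one per comparison)."""
    return tuple(greater_than(n, k) for k in COMPARISONS)
```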

For the primary demonstration on their setup, the authors had their linear threshold circuit play a computer game that tests memory. They used their approach to construct a four-neuron Hopfield network, where all the neurons are connected to the others and, after training (tuning the weights and thresholds), patterns can be stored or remembered. The memory game consists of three steps: 1) the human chooses a scientist from four options (in this case, Rosalind Franklin, Alan Turing, Claude Shannon, and Santiago Ramon y Cajal); 2) the human “tells” the memory network the answers to one or more of four yes/no (binary) questions used to identify the scientist (such as “Did the scientist study neural networks?” or “Was the scientist British?”); and 3) after eight hours of thinking, the DNA memory guesses the answer and reports it through fluorescent signals.
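The recall behavior of a four-neuron Hopfield network can be sketched in Python as follows. This is a textbook model, not the authors' DNA design: the answer patterns are hypothetical (they are not the real answers for the four scientists), the weights come from the standard Hebbian rule rather than the paper's tuning, and given answers are clamped while unknown ones are updated. Storing two patterns is enough to get four memories here, because a Hebbian Hopfield network also stores the negation of every pattern.

```python
# Answers to four yes/no questions: +1 = yes, -1 = no, 0 = not given.
# Hypothetical stored patterns; their negations become memories too.
P1 = (1, 1, -1, -1)
P2 = (1, -1, 1, -1)
MEMORIES = [P1, P2, tuple(-x for x in P1), tuple(-x for x in P2)]


def hebbian_weights(patterns):
    """Symmetric Hebbian weight matrix with a zero diagonal."""
    n = len(patterns[0])
    return [[0 if i == j else sum(p[i] * p[j] for p in patterns)
             for j in range(n)] for i in range(n)]


W = hebbian_weights([P1, P2])


def sign(h, current):
    # A tie (h == 0) keeps the current state, so an unknown can stay unknown.
    return current if h == 0 else (1 if h > 0 else -1)


def recall(cue, weights=W, max_sweeps=10):
    """Clamp the given answers and update unknown neurons one at a time."""
    state = list(cue)
    free = [i for i, x in enumerate(cue) if x == 0]
    for _ in range(max_sweeps):
        changed = False
        for i in free:
            h = sum(weights[i][j] * state[j] for j in range(len(state)))
            new = sign(h, state[i])
            if new != state[i]:
                state[i], changed = new, True
        if not changed:
            break
    return tuple(state)


def identify(cue):
    """Index of the recalled memory, or None if recall ends elsewhere."""
    state = recall(cue)
    return MEMORIES.index(state) if state in MEMORIES else None
```

In this toy setup, two consistent answers such as `(1, 1, 0, 0)` recover a full memory (`P1`), while a single answer such as `(1, 0, 0, 0)` leaves two neurons undecided, loosely mirroring the observation that guesses worked best when two or more answers were given.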

They played this game 27 times, out of a total of 81 possible question/answer combinations (3^4). You may be wondering why there are three options to a yes/no question: the state of the answers is actually stored using two bits, so that the neuron can be unsure about answers (those that the human hasn't provided, for example) using a third state. Out of the 27 experimental cases, the neural network was able to correctly guess all but six, and these were all cases where two or more answers were not given.
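The arithmetic behind the 81 combinations, and one way two bits can carry three answer states, can be checked quickly. The dual-rail mapping below (one bit for "yes", one for "no", neither for "unknown") is an illustrative assumption rather than a description of the paper's exact encoding.

```python
from itertools import product

# Each of the four questions can be answered yes, no, or left unanswered.
STATES = ("yes", "no", "unknown")

# Assumed two-bit (dual-rail) code per answer.
DUAL_RAIL = {"yes": (1, 0), "no": (0, 1), "unknown": (0, 0)}

# Every possible cue: 3 states for each of 4 questions, 3**4 = 81 in total.
all_cues = list(product(STATES, repeat=4))


def encode(cue):
    """Flatten a 4-answer cue into 8 bits, two per question."""
    return tuple(bit for answer in cue for bit in DUAL_RAIL[answer])
```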

In the best cases, the neural network was able to correctly guess with only one answer and, in general, it was successful when two or more answers were given. Like the human brain, this network was able to recall memory using incomplete information (and, as with humans, that may have been a lucky guess). The network was also able to determine when inconsistent answers were given (i.e. answers that don’t match any of the scientists).

These results are exciting—simulating the brain using biological computing. Unlike traditional electronics, DNA computing components can easily interact and cooperate with our bodies or other cells—who doesn’t dream of being able to download information into your brain (or anywhere in your body, in this case)? Even the authors admit that it’s difficult to predict how this approach might scale up, but I would expect to see a larger demonstration from this group or another in the near future.

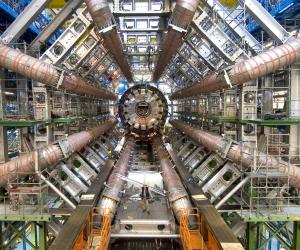

Help CERN in the hunt for the Higgs Boson

Via bit-tech

-----

The Citizen Cyberscience Centre, based at CERN, launched a new version of LHC@home today.

You've probably heard of Folding@Home or Seti@Home: both are distributed computing programs designed to harness the power of your average home PC to crunch numbers, aiding real scientific research.

LHC@home, as science fans will probably have already guessed, is a similar venture for the Large Hadron Collider (LHC). The latest version of the Citizen Cyberscience Centre's software, called LHC@home 2.0, simulates collisions between two beams of protons travelling at close to the speed of light in the LHC.

Professor Dave Britton of the University of Glasgow is Project Leader of the GridPP project that provides Grid computing for particle physics throughout the UK. He is a member of the ATLAS collaboration, one of the experiments at the LHC, and had this to say about LHC@home 2.0 and the Citizen Cyberscience Centre:

'Scientists like me are trying to answer fundamental questions about the structure and origin of the Universe. Through the Citizen Cyberscience Centre and its volunteers around the world, the Grid computing tools and techniques that I use every day are available to scientists in developing countries, giving them access to the latest computing technology and the ability to solve the problems that they are facing, such as providing clean water.

Whether you’re interested in finding the Higgs boson, playing a part in humanitarian aid or advancing knowledge in developing countries, this is a great project to get involved with.'

You can check out the LHC@home home page here. Have you taken part in Seti or Folding? Maybe you're already in the hunt for the Higgs Boson?

Monday, August 01. 2011

Switched On: Desktop divergence

Via Engadget

By Ross Rubin

-----

Last week's Switched On discussed how Lion's feature set could be perceived differently by new users or those coming from an iPad versus those who have used Macs for some time, while a previous Switched On discussed how Microsoft is preparing for a similar transition in Windows 8. Both OS X Lion and Windows 8 seek to mix elements of a tablet UI with elements of a desktop UI or -- putting it another way -- a finger-friendly touch interface with a mouse-driven interface. If Apple and Microsoft could wave a wand and magically have all apps adopt overnight so they could leave a keyboard and mouse behind, they probably would. Since they can't, though, inconsistency prevails.

Yet, while the OS X-iOS mashup that is Lion exhibits its share of growing pains, the fall-off effect isn't as pronounced as it appears it will be for Windows 8. The main reasons for this are, in order of increasing importance, legacy, hardware, and Metro.

Legacy. Microsoft has an incredibly strong commitment to backward compatibility. As long as Microsoft supports older Windows apps (which will be well into the future), there will be a more pronounced gap between that old user interface and the new. This will likely become more of a difference between Microsoft and Apple over time. For now, however, Apple is also treading lightly, and several of Lion's user interface changes -- including "natural" scrolling directions, Dashboard as a space, and the hiding of the hard drive on the desktop -- can be reversed. Even some of Lion's "full-screen" apps are only a cursor movement away from revealing their menus.

Hardware. As Apple continues to keep touchscreens off the Mac, it brings over the look but not the input experience of iPad apps, relying instead on the precision of a mouse or trackpad. Therefore, these Mac apps do not have to embrace finger-friendliness. In contrast, the "tablet" UI of Windows 8 is designed for fingertips and therefore demands a cleaner break with an interface designed for mice (although Microsoft preserves pointer control as well so these apps can be used on PCs without touchscreens).

Metro. A late entrant to the gesture-driven touchscreen handset wars, Microsoft sought to differentiate Windows Phone 7 with its panoramic user interface. When Joe Belfiore introduced Windows Phone 7 at Mobile World Congress in 2010, he repeatedly noted that "the phone is not a PC." That's an accurate assessment, and perhaps one worth repeating in light of all the feedback Microsoft ignored over the years in the design of Pocket PC and Windows Mobile. It also of course holds true beyond the user interface, for design around context and support of location-based services.

But now that the folks in Redmond have created an enjoyable phone interface, have things actually changed? Was it true only that the phone and PC should not have the same old Windows interface, or is it also still true that the PC and phone should not have the same new Windows Phone interface? Was it the nature of the user interface itself that was at fault, or the notion of the same user interface across PC and phone regardless of how good it is?

There is certainly room for more consistency across PCs, tablets and handsets. However, Microsoft did not just differentiate Windows Phone 7 from iOS and Android, it differentiated it from Windows as well. And that is the main reason why the shift in context between a classic Windows app and a "tablet" Windows 8 app seems more striking at this point than the difference between a classic Mac app and "full screen" Lion app.

Lion's full-screen apps could be the new point of crossover with Windows 8's "tablet" user interface mode. Based on what we've seen on the handset side, it is certainly possible for developers to write the same apps for the iPhone and Windows Phone 7, but these are generally simpler apps (and then there are games, which generally ignore most user interface conventions anyway).

Apple and Microsoft are both clearly striving for a simpler user experience, but Microsoft is also trying to adapt its desktop OS to a new form factor in the process of doing so. The balancing act for both companies will be making their new combinations of software and hardware (from partners in the case of Microsoft) embrace a new generation of users while minimizing alienation for the existing one.

-----

Personal comments:

See also this article

Adobe dives into HTML with new Edge software

Via CNET

By Stephen Shankland

-----

Adobe Systems has dipped its toes in the HTML5 pool, but starting today it's taking the plunge with the public preview release of software called Edge.

For years, the company's answer to doing fancy things on the Web was Flash Player, a browser plug-in installed nearly universally on computers for its ability to play animated games, stream video, and level the differences among browsers.

But allies including Opera, Mozilla, Apple, Google, and eventually even Microsoft began to advance what could be done with Web standards. The three big ones here are HTML (Hypertext Markup Language for describing Web pages), CSS (Cascading Style Sheets for formatting and now animation effects), and JavaScript (the programming language used for Web apps).

Notably, these new standards worked on new smartphones when Flash either wasn't available, ran sluggishly, or was barred outright in the case of iPhones and iPads. Adobe is working hard to keep Flash relevant for three big areas--gaming, advanced online video, and business apps--but with Edge, it's got a better answer to critics who say Adobe is living in the past.

"What we've seen happening is HTML is getting much richer. We're seeing more workflow previously reserved for Flash being done with Web standards," said Devin Fernandez, product manager of Adobe's Web Pro group.

A look at the Adobe Edge interface. (Credit: Adobe Systems)

The public preview release is just the beginning for Edge. It lets people add animation effects to Web pages, chiefly with CSS controlled by JavaScript. For example, when a person loads a Web page designed with Edge, text and graphics elements gradually slide into view.

It all can be done today with programming experience, but Adobe aims to make it easier for the design crowd used to controlling how events take place by using a timeline that triggers various actions.
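The timeline idea can be illustrated independently of Edge itself. The Python sketch below models a hypothetical timeline with keyframed properties (such as an element's left offset sliding into view) and callbacks fired at scheduled times; it does not reproduce Edge's API or its output, which the article describes as CSS animation driven by JavaScript.

```python
from dataclasses import dataclass, field


@dataclass
class Timeline:
    """Hypothetical timeline: keyframed properties plus time-triggered actions."""
    keyframes: dict = field(default_factory=dict)  # prop -> [(t, value), ...]
    actions: list = field(default_factory=list)    # [(t, callback), ...]

    def add_keyframe(self, prop, t, value):
        self.keyframes.setdefault(prop, []).append((t, value))
        self.keyframes[prop].sort()

    def at(self, prop, t):
        """Linearly interpolate prop at time t, holding end values outside the range."""
        frames = self.keyframes[prop]
        if t <= frames[0][0]:
            return frames[0][1]
        for (t0, v0), (t1, v1) in zip(frames, frames[1:]):
            if t <= t1:
                return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
        return frames[-1][1]

    def fire_between(self, start, end):
        """Run callbacks scheduled in the half-open interval (start, end]."""
        for t, callback in sorted(self.actions, key=lambda a: a[0]):
            if start < t <= end:
                callback()
```

For a slide-in, keyframes of `-200` at 0 s and `0` at 1 s give `at("left", 0.5) == -100.0`; a designer-facing tool would generate equivalent keyframes and event hooks from the timeline view instead of hand-written code.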

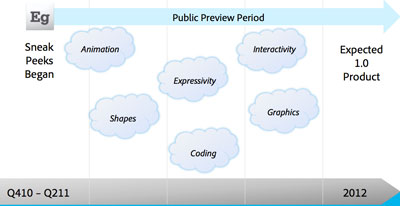

As new versions arrive, more features will be added, and Adobe plans to begin selling the finished version of Edge in 2012.

"[For] the first public preview release, we focused on animation," Fernandez said. "Over the public preview period, we'll be adding additional functionality. We'll be incorporating feedback from the community, taking those requests into account."

The Edge preview product now is available at the Adobe Labs site. Adobe showed an early look at Edge in June.

What exactly is next in the pipeline? Adobe has a number of features in mind, including the addition of video and audio elements alongside the SVG, PNG, GIF, and JPEG graphics it can handle now.

Some of the features Adobe has in mind before a planned 2012 release of Edge. (Credit: Adobe Systems)

Other items on the to-do list:

• More shapes than just rectangles and rounded-corner rectangles.

• Actions that are triggered by events.

• Support for Canvas, an HTML5 standard for a 2D drawing surface for graphics, in particular combined with SVG animation. (Note that Adobe began the SVG effort before it acquired Macromedia, whose Flash technology was a rival to SVG.)

"We still have a lot of features we have not implemented," said Mark Anders, an Adobe fellow working on Edge.

The software integrates with Dreamweaver, Adobe's Web design software package, or other Web tools. It integrates its actions with the Web page so that Edge designers can marry their additions with the other programming work.

The software itself has a WebKit-based browser whose window is prominent in the center of the user interface. A timeline below lets designers set events, copy and paste effects to different objects, and make other scheduling changes.

Adobe, probably not happy with being a punching bag for Apple fans who disliked Flash, seems eager to be able to show off Edge to counter critics' complaints. The company will have to overcome skepticism and educate the market that it's serious, but real software beats keynote comments any day.

Anders, who before Edge worked on Flash programming tools and led work on Microsoft's .Net Framework, is embracing the new ethos.

"In the last 15 years, if you looked at a Web page and saw this, you would say that is Flash because Flash is the only thing that can do that. That is not true today," Anders said. "You can use HTML and the new capabilities of CSS to do this really amazing stuff."

Thursday, July 28. 2011

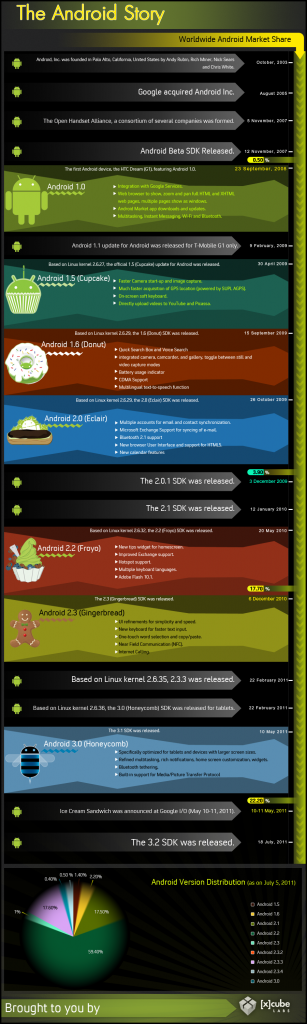

The History of Android Version Releases

Via Android Tapp

-----

![The History of Android Version Releases [Infographic]](http://blog.computedby.com/cby/images/46_1313483255_0.jpg)

Check out this infographic by [x]cubelabs showing the history of Android version releases to date… even tossing in a factoid of when Android was officially started then acquired by Google. The graphic shows key feature highlights in each milestone and concludes with today’s snapshot, which shows most Android devices with Android 2.2 (Froyo). Have a look!

Wednesday, July 27. 2011

Qualcomm’s Awesome Augmented Reality SDK Now Available For iOS

Via TechCrunch

-----

Back around July of last year, Qualcomm launched a software development kit for building Augmented Reality apps on Android. The idea was to allow Android developers to build all sorts of crazy AR stuff (like games and apps that render things in live 3D on top of a view pulled in through your device’s camera) without having to reinvent the wheel by coding up their own visual-recognition system. It is, for lack of a better word, awesome.

And now it’s available for iOS.

For those unfamiliar with Augmented Reality — or for those who just want to see something cool — check out this demo video I shot a year or so back:

Sometime in the past few hours, Qualcomm quietly rolled a beta release of the iOS-compatible SDK into their developer center. This came as a bit of a shock; Qualcomm had previously expressed that, while an iOS port would come sooner or later, their main focus was building this platform for devices running their Snapdragon chips (read: not Apple devices).

And yet, here we are. This first release of the SDK supports the iPhone 4, iPad 2, and fourth-generation iPod Touch, none of which have Snapdragon CPUs in them. Furthermore, this release supports Unity (a WYSIWYG-style rapid game development tool) right off the bat, whereas the Android release didn’t get Unity support until a few months later. Developers can also work straight in Xcode if they so choose.

This platform lowers the “You must be this crazy of a developer to ride this ride” bar considerably, so expect an onslaught of Augmented Reality apps in the App Store before too long.

Monday, July 25. 2011

Three remote desktop apps worth a look

Via TechRepublic

-----

By Scott Lowe

If your organization allows remote access to systems via remote desktop tools, there are a number of apps for the iPhone and for Android devices that make it a breeze to work anytime from anywhere. In this app roundup, I feature three remote desktop tools that work in slightly different ways.

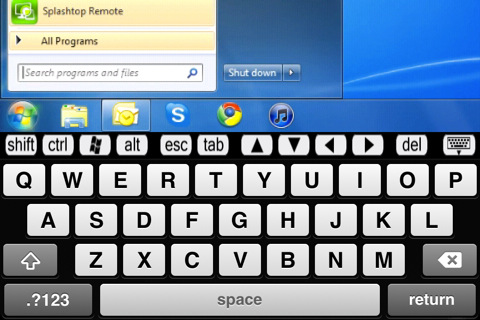

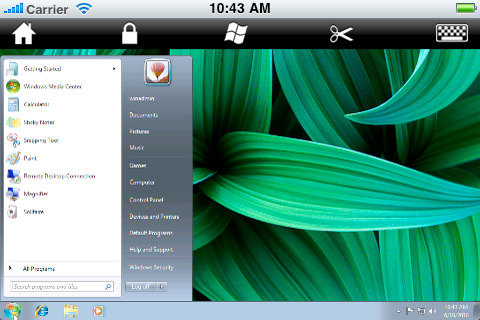

Splashtop Remote Desktop

Splashtop Remote Desktop is a high-performance app that supports multiple monitors and desktop-based video. The mobile device-based Splashtop Remote Desktop app connects to a small client that is installed on your desktop PC, which can be running Windows XP, Windows Vista, Windows 7, or Mac OS X 10.6.

Perhaps the most significant downside to Splashtop Remote Desktop is that connections must be made over Wi-Fi networks; this limits, to a point, the locations from which the tool can be used. However, most Wi-Fi connections are faster than 3G, so performance should be good.

Splashtop Remote Desktop is available for the iPhone and for Android devices. At $1.99 for the iPhone version and $4.99 for the Android version, this app will certainly not break the bank.

Figures A and B are screenshots of the app from iPhone and Android devices, respectively.

Figure A

Splashtop Remote Desktop for the iPhone

Figure B

The Android version of Splashtop Remote Desktop

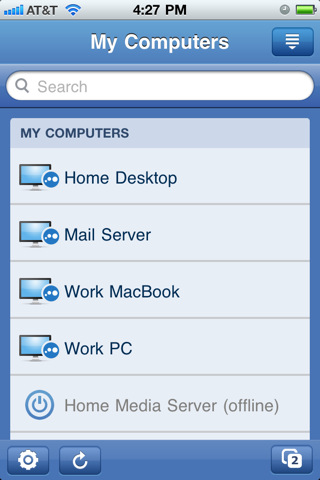

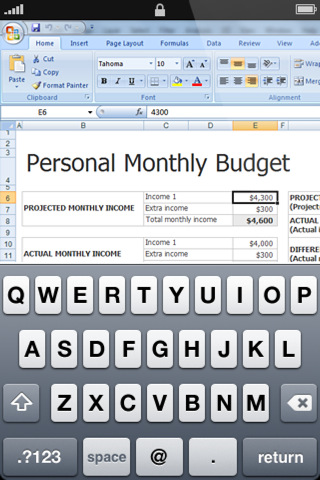

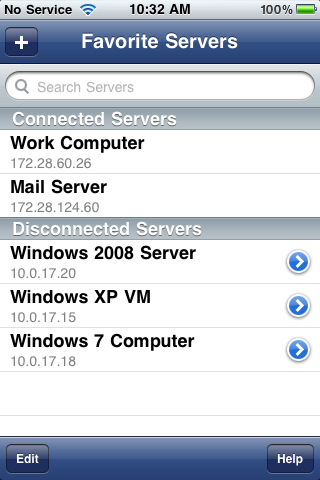

LogMeIn Ignition

LogMeIn provides a robust, comprehensive remote desktop tool. LogMeIn Ignition requires you to install a client component on the desktop computer you wish to control.

LogMeIn Ignition supports 32-bit and 64-bit Windows 7, Windows Vista, Windows XP, Windows Server 2003, and Windows Server 2008, 32-bit Windows 2000, and Mac OS X 10.4, 10.5, and 10.6 (both PPC and Intel processors are supported). The Mac version is missing features such as drag-and-drop file transfer, remote sound, and integration with LogMeIn’s centralized reporting tool. However, for occasional remote access from a handheld device, these features are probably not that critical.

LogMeIn Ignition itself is the mobile app, and it works on Android and Apple devices, including the iPhone and the iPad. At $29.99, you will need to be able to realize real value from the app in order to justify the purchase. LogMeIn Ignition definitely isn’t a “drive by download.”

LogMeIn Ignition is not intended to be a “one off” remote access tool; it aggregates all of your remote connections into one view, making it easier to manage a plethora of remote systems (Figure C). Again, each managed system must have the LogMeIn client installed.

Figure C

LogMeIn Ignition’s computer selection page

Figures D and E are screenshots of LogMeIn Ignition on an Android device and an iPhone, respectively.

Figure D

Android-based version of LogMeIn Ignition

Figure E

iPhone-based version of LogMeIn Ignition

WinAdmin

WinAdmin is another tool I have used for remote access. The app relies on Microsoft’s standard RDP implementation and does not require the installation of additional client software on managed computers, which makes it a good solution for remote desktop access as well as remote server desktop access. If you’re using WinAdmin to remotely access servers, you’ll probably need some kind of VPN tunnel in place, or you’ll need to be sitting behind your organization’s firewall in order to allow the tool to work its magic.

WinAdmin is available for the iPhone, iPad, and iPod Touch; there is no Android version. At $7.99, this app might be considered in the moderately high price range for some, but if it’s being used to support a server farm, it’s certainly affordable.

The screenshots in Figures F, G, and H give you a look at WinAdmin.

Figure F

WinAdmin’s landscape-mode view is more natural for most users.

Figure G

Store connection information for all of your remote systems… just lock your phone when not using WinAdmin.

Figure H

WinAdmin’s portrait mode shows the keyboard at the bottom and menu across the top of the display.

What remote desktop app do you recommend?

These are just three tools that are worthy of consideration for your organization’s remote access needs.

-----

Personal comments:

Remote access to desktops is not a 21st-century innovation, as it has existed almost since the first network was set up. But the solutions above, and Splashtop in particular, bring us a cross-platform solution (a streamer available on Mac and PC, and remote clients available on all mobile platforms) that may help us determine what we can seriously do with these tablets! :-)

Tuesday, July 19. 2011

Is the Desktop Having an Identity Crisis?

Both Apple and Microsoft's new desktop operating systems borrow elements from mobile devices, in sometimes confusing ways.

Apple is widely expected to unveil OS X Lion, a major update to its operating system for desktop and laptop computers, this week. Microsoft, meanwhile, is working on an even bigger overhaul of Windows, with a version called Windows 8.

Both new operating systems reflect a tectonic shift in personal computing. They incorporate elements from mobile operating systems alongside more conventional desktop features. But demos of both operating systems suggest that users could face a confusing mishmash of design ideas and interaction methods.

Windows 8 and OS X Lion include elements such as touch interaction and full-screen apps that will facilitate the kind of "unitasking" (as opposed to multitasking) that users have become accustomed to on mobile devices and tablets.

"The rise of the tablets, or at least the iPad, has suggested that there is a latent, unmet need for a new form of computing," says Peter Merholz, president of the user-experience and design firm Adaptive Path. However, he adds, "moving PCs in a tablet direction isn't necessarily sensible."

Cathy Shive, an independent software developer, would agree. She developed software for Mac desktop applications for six years before she switched and began developing for iOS (Apple's operating system for the iPhone and iPad). "When I first saw Steve Jobs's demo of Lion, I was really surprised—I was appalled, actually," she says.

Shive is surprised by the direction both Apple and Microsoft are taking. One fundamental dictate of usability design is that an interface should be tailored to the specific context—and hardware—in which it lives. A desktop PC is not the same thing as a tablet or a mobile device, yet in that initial demo, "It seemed like what [Jobs] was showing us was a giant iPad," says Shive.

A subsequent demonstration of Windows 8 by Microsoft vice president Julie Larson-Green confirmed that Redmond was also moving toward touch as a dominant interaction mechanism. One of the devices used in that demonstration, a "media tablet" from Taiwan-based ASUS, resembled an LCD monitor with no keyboard.

Not everyone is so skeptical about Apple and Microsoft's plans. Lukas Mathis, a programmer and usability expert, thinks that, on balance, this shift is a good thing. "If you watch casual PC users interact with their computers, you'll quickly notice that the mouse is a lot harder to use than we think," he says. "I'm glad to see finger-friendly, large user interface elements from phones and tablets make their way into desktop operating systems. This change was desperately needed, and I was very happy to see it."

Mathis argues that experienced PC users don't realize how crowded with "small buttons, unclear icons, and tiny text labels" typical desktop operating systems are.

Lion and Windows 8 solve these problems in slightly different ways. In Lion, file management is moving toward an iPhone/iPad-style model, where users launch applications from a "Launchpad," and their files are accessible from within those applications. In Windows 8, files, along with applications, bookmarks, and just about anything else, can be made accessible from a customizable start screen.

Some have criticized Mission Control, Apple's new centralized app and window management interface, saying that it adds complexity rather than introducing the simplicity of a mobile interface. At the other extreme, Lion allows any app to be rendered full-screen, which blocks out distractions but also forces users to switch applications more often than necessary.

"The problem [with a desktop OS] is that it's hard to manage windows," says Mathis. "The solution isn't to just remove windows altogether; the solution is to fix window management so it's easier to use, but still allows you to, say, write an essay in one window, but at the same time look at a source for your essay in a different window."

Windows 8, meanwhile, attempts to solve this problem in a more elegant way, with a "Windows Snap," which allows apps to be viewed side-by-side while eliminating the need to manage their dimensions by dragging them from the corner.

A problem with moving toward a touch-centric interface is that the mouse is absolutely necessary for certain professional applications. "I can't imagine touch in Microsoft Excel. That's going to be terrible," says Shive.

The most significant difference between Apple's approach and Microsoft's is that Windows 8 will be the same OS no matter what device it's on, from a mobile phone to a desktop PC. To accommodate a range of devices, Microsoft has left the original Windows interface intact, and users can switch to it from the full-screen start screen and full-screen apps.

Merholz believes Microsoft's attempt to make its interface consistent across all devices may be a mistake. "Microsoft has a history of overemphasizing the value of 'Windows everywhere.' There's a fear they haven't learned appropriateness, given the device and its context," he says.

Shive believes the same could be said of Apple. "Apple has been seduced by their own success, and they're jumping to translate that over to the desktop ... They think there's some kind of shortcut, where everyone is loving this interface on the mobile device, so they will love it on their desktop as well," she says.

In a sense, both Apple and Microsoft are about to embark on a beta test of what the PC should be like in an era when consumers are increasingly accustomed to post-PC modes of interaction. But it could be a bumpy process. "I think we can get there, but we've been using the desktop interface for 30 years now, and it's not going to happen overnight," says Shive.

-----

Personal Comments:

From my personal point of view, and based on my 30 years of IT/development experience, I do not see the change in desktop look and feel as a crisis but rather as a simple and efficient aesthetic evolution.

Why? Because what was made first for mobile phones, and then for newer mobile devices like tablets, is what some people had been trying to do with laptop/desktop GUIs for years: make the GUI/desktop experience simple enough to make computers accessible to everyone, even to those most reluctant about technology (see the evolution of the Windows and Linux GUIs). That specific goal was reached on mobile phones and devices in a very short time, pushing ordinary people to change devices every two years and letting them enjoy new functionality and technology without having to read a single page of an instruction manual (by the way, mobile phones are delivered without one!).

It looks like technological constraints and restrictions were needed in order to invent this kind of interface. Touch-screen mobile phones had been available for years before Apple produced its first iPhone (2007): remember the Sony Ericsson P800 (2002) and its successor, the P900 (2003). Technically, everything was there (they are close to the "classic" smartphone we are used to carrying in our pocket nowadays), but an efficient GUI, and more generally an efficient OS, was sorely missing. What Apple did with iOS, Google with Android, and HTC with its HTC Sense GUI on top of Android demonstrates the obvious potential of these mobile devices.

The adaptation of these GUIs and OSes to tablets (iOS, Android 3.0), still under the touch-only constraint, brings new GUI solutions while extending what can be done with a few basic finger gestures. It is not surprising that classic desktop/laptop computers are now trying to integrate the best of all this into their own environments, as they did not succeed in doing so on their own before. I would even say that this is an obvious step forward, as many ideas can be adapted to the desktop world. For example, bringing the App Store concept to desktop computers to make installing applications easier is an obvious step: one does not have to check whether the application is compatible with the local hardware, etc., because the App Store only offers compatible applications, seamlessly.

So rather than entering a crisis or a revolution, I would say that the desktop world will simply borrow from the mobile world whatever can be adapted, in order to make the desktop experience as seamless for the end user as it is on mobile devices... but for some basic tasks only.

You can wrap a desktop OS in a very nice and simple box, making things look very similar to a mobile device's simplicity, but this is just a kind of gift package that does not suit every use you can face on a desktop computer... making this step forward look like a set of cosmetic changes, and nothing more... because it simply cannot be more!

Today, we are used to grand declarations each time a new OS is released to end users. Many of the so-called "new" features are nothing more than existing ones that were redesigned and pushed onto the scene to give the impression of a revolutionary OS: who can seriously consider full-screen apps or automatic saving as key new features for a 21st-century OS?

Let's go through some of the key new features announced by Apple in Mac OS X Lion:

- Multi-touch: this is not a new feature; it "just" maps some new functionality to already available multi-touch gestures.

- Full-screen management: it basically attaches a virtual desktop to any application running full screen. Thus, you can switch to and from full-screen applications... the same way you could already switch from one virtual desktop to another.

- Launchpad: this is basically a graphical interface of shortcuts to the 'Applications' folder in the Finder. OK, it looks like the app grid on a tablet or a mobile phone... but since the folder was already presented as a list, the other option was... guess what... a grid!

- Mission Control: this is also an evolution of something that already existed: the ability to see all your windows in addition to all your virtual desktops.

I'm pretty convinced that these new features are going to be really useful and pleasant to use, making the MacBook touchpad even more essential, but I do not see a real revolution here, nor a crisis, in the way we are going to work on desktop/laptop computers.
